Professor Junil Choi’s lab received the Best Paper Award at the 2022 ICT Academic Paper Competition.

Professor Junil Choi’s lab received the Best Paper Award at the 2022 ICT Academic Paper Competition.

[Prof. Junil Choi]

EE Professor Junil Choi was awarded the Early Achievement Award by the IEEE Communications Society Communication Theory Technical Committee (CTTC), becoming the first Korean member to receive the honor.

Although professor Choi was chosen as the recipient for the 2021 award, the award ceremony was held at the belated Communication Theory Workshop (CTW) last week, due to the COVID-19 pandemic.

The IEEE CTTC was established in 1964 as one of the first technical committees within the IEEE Communications Society (ComSoc).

Since 2016, the CTTC Early Achievement Award has celebrated the achievements of members with early career visibility within 10 years of their Ph.D., with a history of recipients from prestigious institutions such as Stanford University, Imperial College London, Virginia Tech and KTH.

[(from the left) Professor Kang Joonhyuk, Gong Jinu (Ph.D candidate)]

Abstract

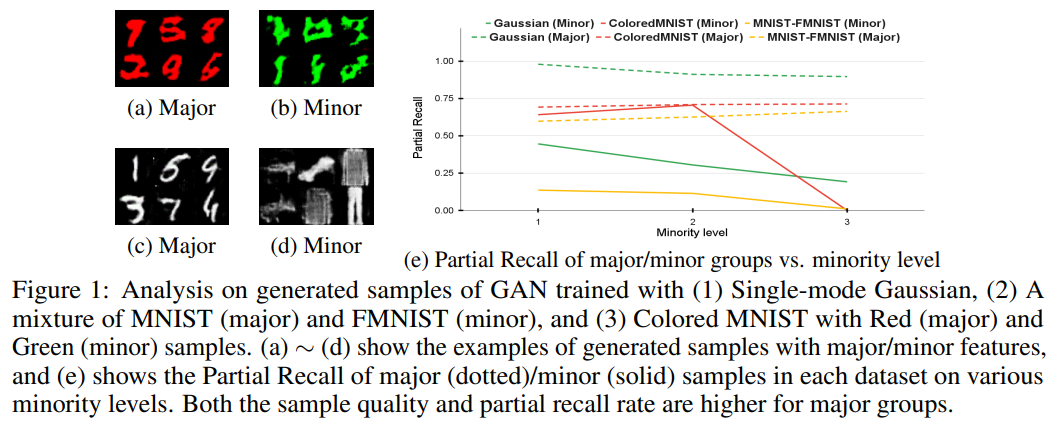

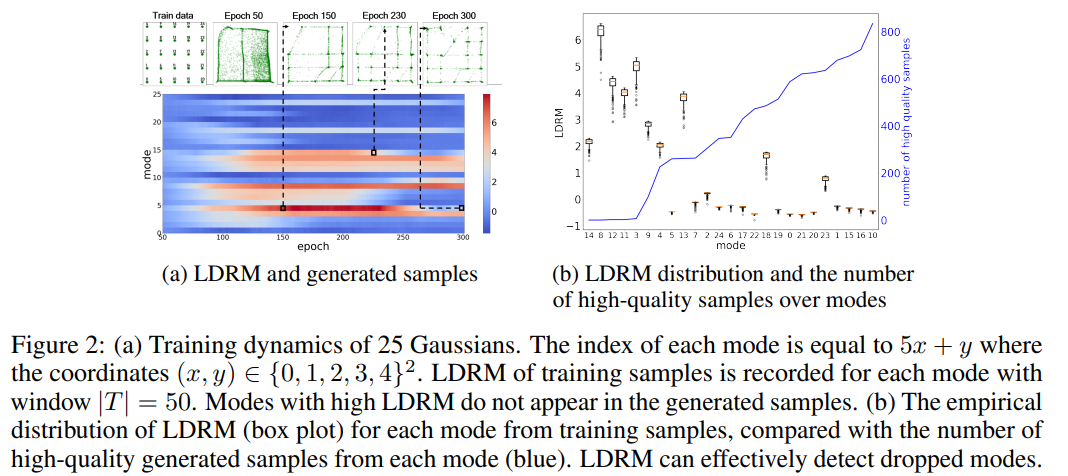

Despite remarkable performance in producing realistic samples, Generative Adversarial Networks (GANs) often produce low-quality samples near low-density regions of the data manifold, e.g., samples of minor groups. Many techniques have been developed to improve the quality of generated samples, either by postprocessing generated samples or by pre-processing the empirical data distribution, but at the cost of reduced diversity. To promote diversity in sample generation without degrading the overall quality, we propose a simple yet effective method to diagnose and emphasize underrepresented samples during training of a GAN. The main idea is to use the statistics of the discrepancy between the data distribution and the model distribution at each data instance. Based on the observation that the underrepresented samples have a high average discrepancy or high variability in discrepancy, we propose a method to emphasize those samples during training of a GAN. Our experimental results demonstrate that the proposed method improves GAN performance on various datasets, and it is especially effective in improving the quality and diversity of sample generation for minor groups.

Abstract

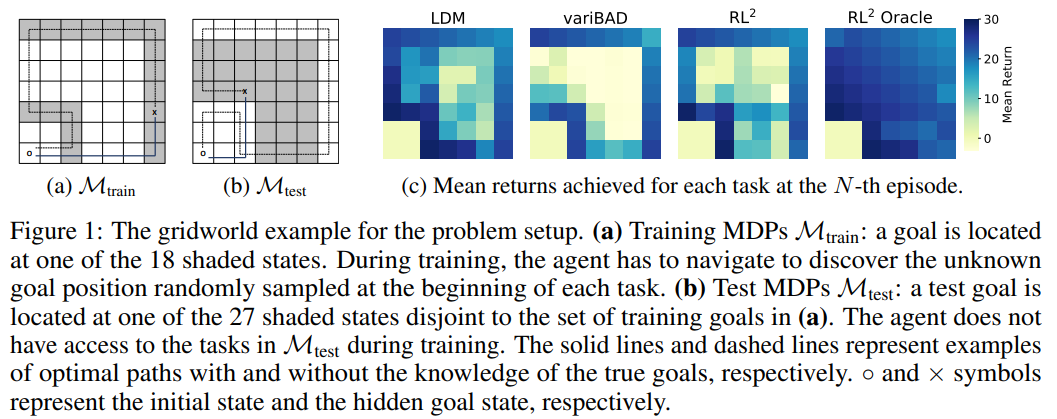

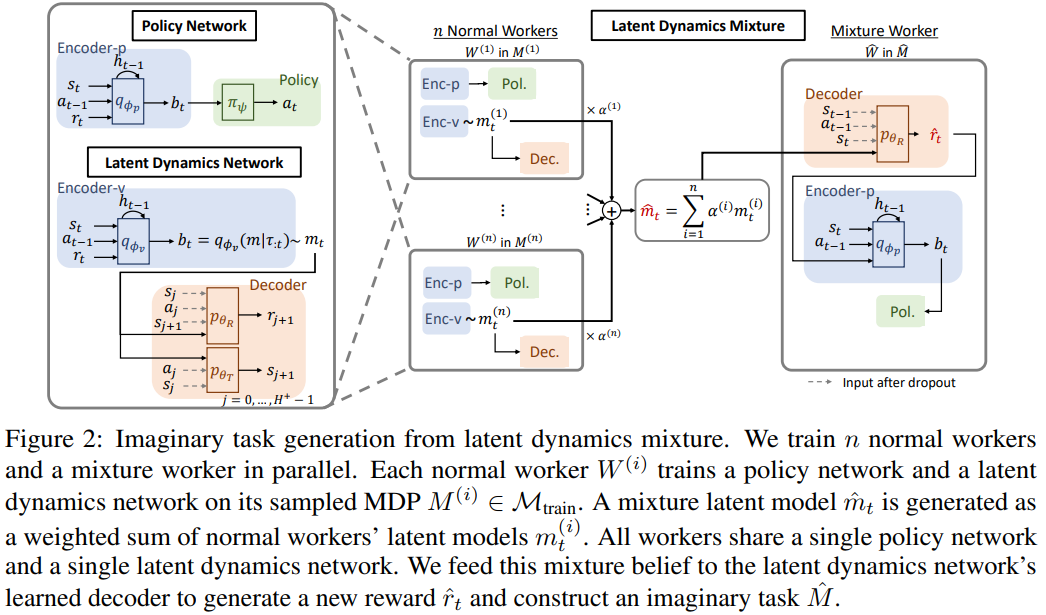

The generalization ability of most meta-reinforcement learning (meta-RL) methods is largely limited to test tasks that are sampled from the same distribution used to sample training tasks. To overcome the limitation, we propose Latent Dynamics Mixture (LDM) that trains a reinforcement learning agent with imaginary tasks generated from mixtures of learned latent dynamics. By training a policy on mixture tasks along with original training tasks, LDM allows the agent to prepare for unseen test tasks during training and prevents the agent from overfitting the training tasks. LDM significantly outperforms standard meta-RL methods in test returns on the gridworld navigation and MuJoCo tasks where we strictly separate the training task distribution and the test task distribution.

Abstract

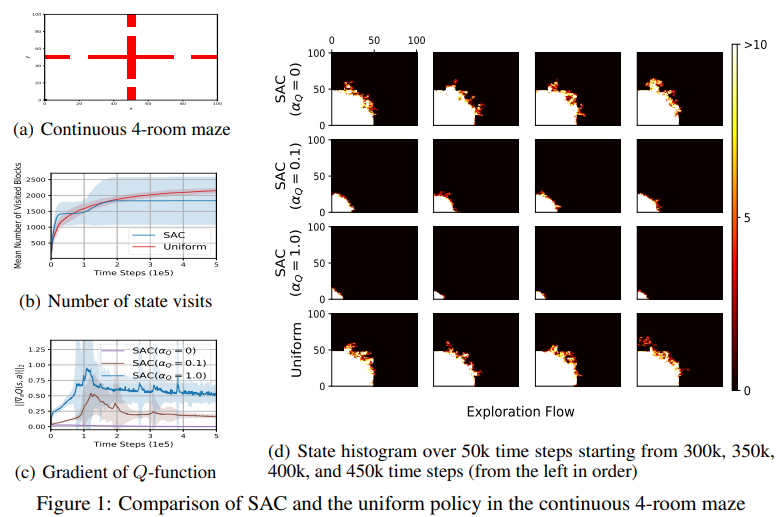

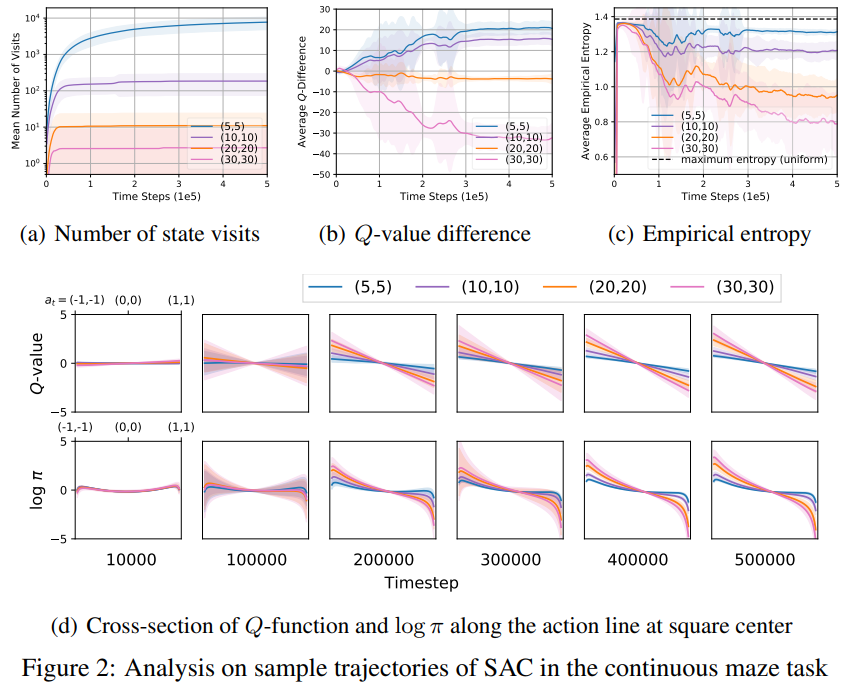

In this paper, we propose a max-min entropy framework for reinforcement learning (RL) to overcome the limitation of the soft actor-critic (SAC) algorithm implementing the maximum entropy RL in model-free sample-based learning. Whereas the maximum entropy RL guides learning for policies to reach states with high entropy in the future, the proposed max-min entropy framework aims to learn to visit states with low entropy and maximize the entropy of these low-entropy states to promote better exploration. For general Markov decision processes (MDPs), an efficient algorithm is constructed under the proposed max-min entropy framework based on disentanglement of exploration and exploitation. Numerical results show that the proposed algorithm yields drastic performance improvement over the current state-of-the-art RL algorithms.

Abstract

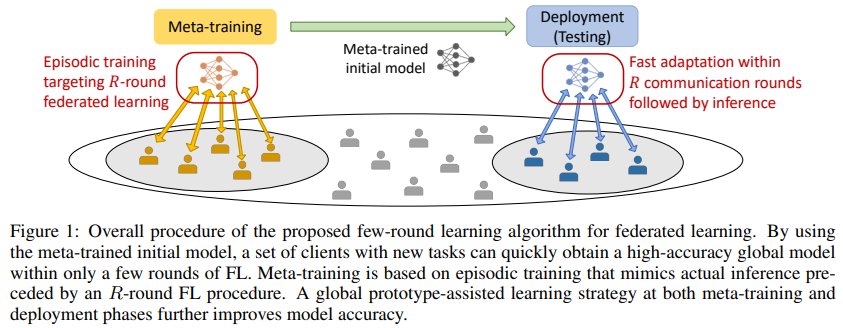

Federated learning (FL) presents an appealing opportunity for individuals who are willing to make their private data available for building a communal model without revealing their data contents to anyone else. Of central issues that may limit a widespread adoption of FL is the significant communication resources required in the exchange of updated model parameters between the server and individual clients over many communication rounds. In this work, we focus on limiting the number of model exchange rounds in FL to some small fixed number, to control the communication burden. Following the spirit of meta-learning for few-shot learning, we take a meta-learning strategy to train the model so that once the meta-training phase is over, only rounds of FL would produce a model that will satisfy the needs of all participating clients. A key advantage of employing meta-training is that the main labeled dataset used in training could differ significantly (e.g., different classes of images) from the actual data sample presented at inference time. Compared to the meta-training approaches to optimize personalized local models at distributed devices, our method better handles the potential lack of data variability at individual nodes. Extensive experimental results indicate that meta-training geared to few-round learning provide large performance improvements compared to various baselines.

Abstract

While federated learning (FL) allows efficient model training with local data at edge devices, among major issues still to be resolved are: slow devices known as stragglers and malicious attacks launched by adversaries. While the presence of both of these issues raises serious concerns in practical FL systems, no known schemes or combinations of schemes effectively address them at the same time. We propose Sageflow, staleness-aware grouping with entropy-based filtering and loss-weighted averaging, to handle both stragglers and adversaries simultaneously. Model grouping and weighting according to staleness (arrival delay) provides robustness against stragglers, while entropy-based filtering and loss-weighted averaging, working in a highly complementary fashion at each grouping stage, counter a wide range of adversary attacks. A theoretical bound is established to provide key insights into the convergence behavior of Sageflow. Extensive experimental results show that Sageflow outperforms various existing methods aiming to handle stragglers/adversaries.