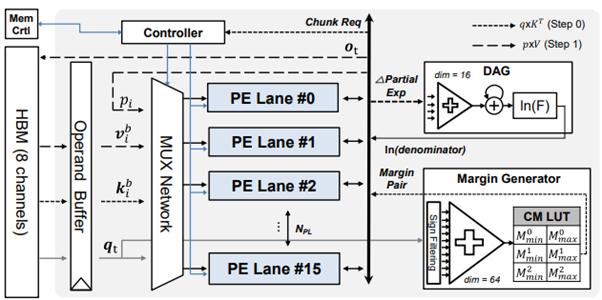

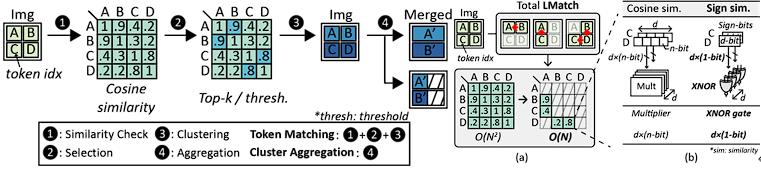

Title: AdapTiV: Sign-Similarity based Image-Adaptive Token Merging for Vision Transformer Acceleration

Venue: MICRO 2024

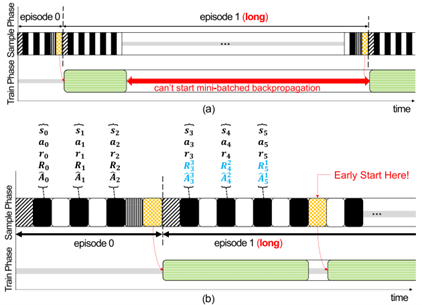

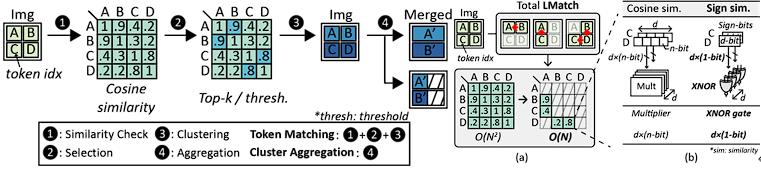

Abstract: The advent of Vision Transformers (ViTs) has brought a leap in computer vision performance by leveraging self-attention mechanisms. However, the computational efficiency of ViTs is limited by the quadratic complexity of self-attention and the redundancy among image tokens. To address these issues, token merging strategies have been explored to reduce the input size by merging similar tokens. Nonetheless, token merging itself degrades latency for two reasons: inefficient computations and a fixed merge rate. This paper introduces AdapTiV, a novel hardware-software co-designed accelerator that accelerates ViTs through image-adaptive token merging, effectively addressing these challenges. Following the design philosophy of reducing the overhead of token merging and concealing its latency within the Layer Normalization (LN) process, AdapTiV incorporates algorithmic innovations such as Local Matching, which restricts the search space for token merging and thereby reduces computational complexity; Sign Similarity, which simplifies the calculation of similarity between tokens; and Dynamic Merge Rate, which enables image-adaptive token merging. Additionally, the hardware component that supports AdapTiV’s algorithms, named the Adaptive Token Merging Engine, employs Sign-Driven Scheduling to effectively conceal the overhead of token merging. This engine integrates submodules such as a Sign Similarity Computing Unit, which calculates the similarity between tokens using a newly introduced similarity metric; a Sign Scratchpad, a lightweight, image-width-sized memory that stores previous tokens; a Sign Scratchpad Managing Unit, which controls the Sign Scratchpad; and a Token Integration Map that facilitates efficient, image-adaptive token merging.
Our evaluations demonstrate that AdapTiV achieves average speedups of 309.4×, 18.4×, 89.8×, and 6.3× and energy-efficiency improvements of 262.1×, 21.5×, 496.6×, and 11.2× over edge CPUs, edge GPUs, server CPUs, and server GPUs, respectively, while keeping the accuracy loss below 1% without additional training.
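To make the Local Matching, Sign Similarity, and Dynamic Merge Rate ideas concrete, here is a minimal Python sketch under stated assumptions. It assumes sign similarity is the fraction of channels whose signs agree, that Local Matching compares each token only with its left neighbour and the token directly above it (the previous image row, which an image-width-sized buffer could hold), and that a token merges when that similarity reaches a threshold, so the number of merges depends on the image rather than on a preset merge rate. The helper names (`sign_similarity`, `local_merge_map`, `merge_tokens`) and the threshold rule are hypothetical; the paper's actual metric, matching window, scheduling, and LN latency hiding are not modelled.

```python
from typing import List

# Hypothetical sketch of sign-based local token merging; NOT the AdapTiV implementation.

def sign_similarity(a: List[float], b: List[float]) -> float:
    """Assumed metric: fraction of channels where the two tokens share a sign."""
    if len(a) != len(b):
        raise ValueError("tokens must have the same number of channels")
    same = sum(1 for x, y in zip(a, b) if (x >= 0) == (y >= 0))
    return same / len(a)

def local_merge_map(tokens: List[List[float]], width: int, threshold: float) -> List[int]:
    """Assign a group id to each token of a row-major grid.

    Each token is compared only with its left neighbour and the token above it.
    If the best similarity reaches `threshold`, the token joins that neighbour's
    group; otherwise it starts a new group named after its own index.
    """
    group: List[int] = []
    for idx, tok in enumerate(tokens):
        row, col = divmod(idx, width)
        candidates = []
        if col > 0:
            candidates.append(idx - 1)       # left neighbour
        if row > 0:
            candidates.append(idx - width)   # token in the previous row
        best, best_sim = None, -1.0
        for c in candidates:
            s = sign_similarity(tok, tokens[c])
            if s > best_sim:
                best, best_sim = c, s
        if best is not None and best_sim >= threshold:
            group.append(group[best])
        else:
            group.append(idx)
    return group

def merge_tokens(tokens: List[List[float]], group: List[int]) -> List[List[float]]:
    """Average the tokens of each group; output is ordered by group id."""
    sums: dict = {}
    counts: dict = {}
    for tok, g in zip(tokens, group):
        if g not in sums:
            sums[g] = [0.0] * len(tok)
            counts[g] = 0
        sums[g] = [s + x for s, x in zip(sums[g], tok)]
        counts[g] += 1
    return [[s / counts[g] for s in sums[g]] for g in sorted(sums)]

if __name__ == "__main__":
    # 2x2 grid of 4-channel tokens (row-major), made-up values for illustration.
    toks = [[1, -1, 1, 1], [2, -3, 0.5, 4], [-1, 1, -1, -1], [-2, 2, -1, 1]]
    groups = local_merge_map(toks, width=2, threshold=0.75)
    print(groups)                      # [0, 0, 2, 2]
    print(merge_tokens(toks, groups))  # [[1.5, -2.0, 0.75, 2.5], [-1.5, 1.5, -1.0, 0.0]]
```

Raising `threshold` to 1.0 leaves the last token unmerged, so the same input keeps three tokens instead of two: in this sketch the amount of merging follows the threshold and the image content rather than a fixed count.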

Main Figure:

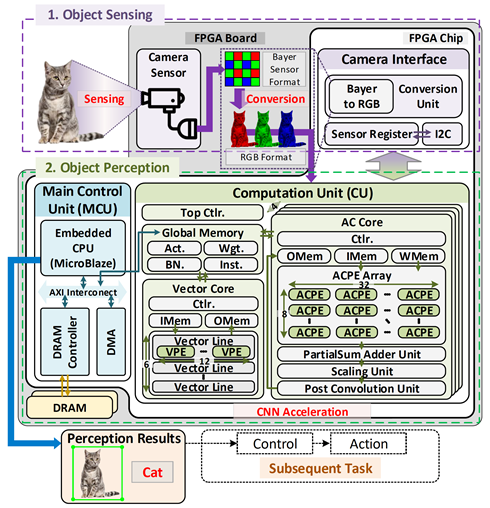

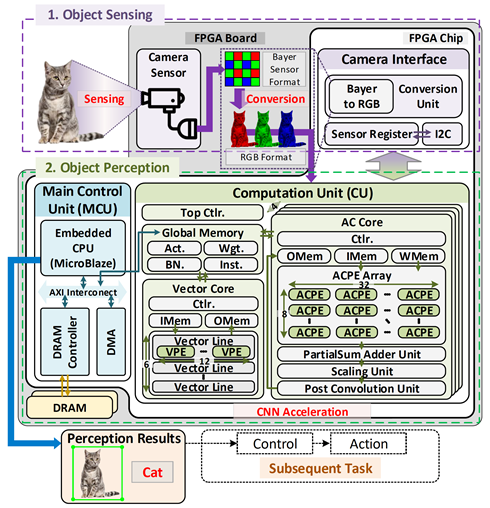

Title: ACane: An Efficient FPGA-based Embedded Vision Platform with Accumulation-as-Convolution Packing for Autonomous Mobile Robots

Venue: ASP-DAC 2024

Abstract: Convolutional Neural Networks (CNNs) have been extensively deployed on autonomous mobile robots in recent years, and embedded platforms based on field-programmable gate arrays (FPGAs) with digital signal processors (DSPs) effectively exploit low-precision quantization through DSP-packing methods to implement large CNN models. However, DSP packing is limited in how much it can improve computational performance because of the zero bits that are needed to prevent bit contamination of output operands. In this paper, we propose ACane, a compact FPGA-based vision platform for autonomous mobile robots built on a novel DSP-packing technique called accumulation-as-convolution packing, which effectively packs low-bit values into a single DSP while boosting convolution operations. ACane also applies optimized data mapping and dataflow to improve the computational parallelism of DSP packing. ACane achieves the highest DSP efficiency (1.465 GOPS/DSP) and energy efficiency (361.8 GOPS/W), which are 1.98-8.32× and 4.03-25.5× higher, respectively, than state-of-the-art FPGA-based vision works.
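The zero-bit limitation mentioned above can be illustrated with conventional DSP packing; this is not ACane's accumulation-as-convolution packing, which the abstract does not detail. In the Python sketch below, several unsigned low-bit activations are packed into one wide operand, `shift` bits apart, so a single multiply by a shared weight yields every lane's product at once. Accumulating several such products requires enough zero (guard) bits per lane that no lane's sum carries into its neighbour. Unsigned operands, the lane layout, and the helper names are assumptions for illustration.

```python
from typing import List

# Conventional DSP-packing illustration (assumed unsigned operands); not ACane's scheme.

def pack(values: List[int], shift: int) -> int:
    """Place each unsigned value in its own lane, `shift` bits apart."""
    word = 0
    for i, v in enumerate(values):
        if v < 0:
            raise ValueError("this sketch only handles unsigned operands")
        word |= v << (i * shift)
    return word

def unpack(word: int, count: int, shift: int) -> List[int]:
    """Read `count` lanes of width `shift` back out of a packed word."""
    mask = (1 << shift) - 1
    return [(word >> (i * shift)) & mask for i in range(count)]

def packed_dot(lanes: List[List[int]], weights: List[int], shift: int) -> List[int]:
    """Compute one dot product per lane with a single multiply per weight.

    lanes[j][k] is activation k of lane j; all lanes share `weights`.
    Results are correct only while every lane's sum stays below 2**shift;
    otherwise carries contaminate the next lane.
    """
    acc = 0
    for k, w in enumerate(weights):
        packed = pack([lane[k] for lane in lanes], shift)
        acc += packed * w  # one wide multiply-accumulate serves every lane
    return unpack(acc, len(lanes), shift)

def min_shift(act_bits: int, weight_bits: int, terms: int) -> int:
    """Smallest lane width that can hold a sum of `terms` unsigned products."""
    max_sum = terms * ((1 << act_bits) - 1) * ((1 << weight_bits) - 1)
    return max_sum.bit_length()

if __name__ == "__main__":
    lanes = [[1, 2, 3, 4], [5, 6, 7, 8]]
    w = [1, 2, 3, 4]
    s = min_shift(4, 4, len(w))     # 10 bits per lane for 4-bit x 4-bit, 4 terms
    print(packed_dot(lanes, w, s))  # [30, 70]
    # Too few guard bits: lane 0's true sum (900) spills into lane 1.
    print(packed_dot([[15] * 4, [0] * 4], [15] * 4, 8))  # [132, 3]
```

The wider `shift` required for longer accumulations is the zero-bit overhead: every guard bit is a multiplier input bit that carries no useful operand, which caps how many lanes fit in one DSP.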

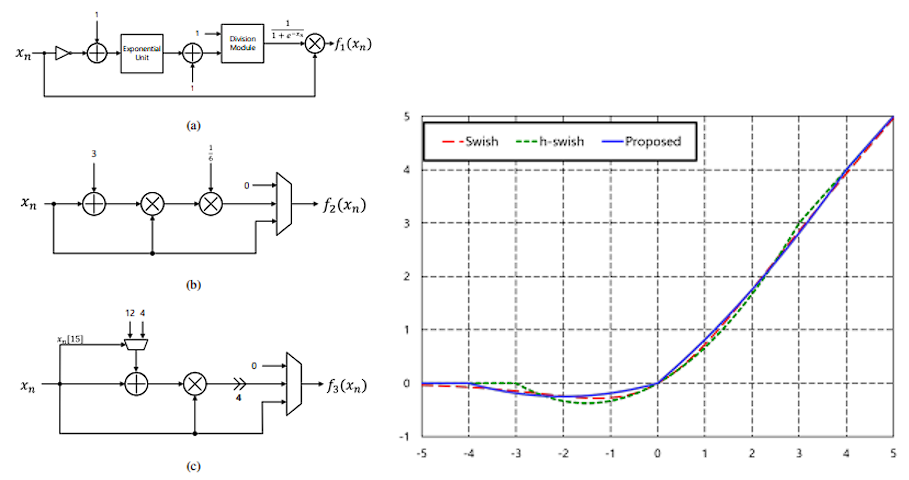

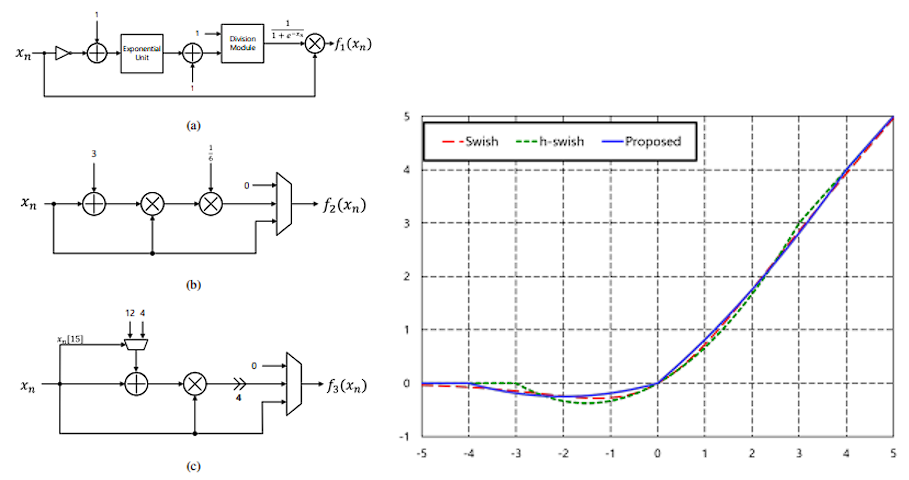

Kangjoon Choi, Sungho Kim, Jeongmin Kim, and In-Cheol Park, “Hardware-friendly Approximation for Swish Activation and its Implementation,” IEEE Transactions on Circuits and Systems II: Express Briefs, Apr. 2024

Abstract: This paper addresses the challenges associated with implementing the Swish activation function in hardware. While Swish exhibits improved training performance over the ReLU activation function, its computational complexity makes hardware implementation difficult. To mitigate this drawback, an approximation called h-swish was introduced to reduce the computational complexity; however, there was still room for improvement. This paper proposes a novel hardware-friendly approximation of Swish and its implementation, which demonstrates significant improvements over h-swish in terms of delay, area, and power consumption.
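For reference, Swish is `x * sigmoid(x)`, and the h-swish approximation (introduced with MobileNetV3) is `x * ReLU6(x + 3) / 6`, which replaces the exponential and division with an add, a clamp, and a constant multiply. The Python sketch below compares the two numerically; it does not reproduce the new approximation proposed in this paper, whose form the abstract does not give, and the helper names are illustrative.

```python
import math

def swish(x: float) -> float:
    """Swish: x * sigmoid(x); needs an exponential and a division."""
    return x / (1.0 + math.exp(-x))

def relu6(x: float) -> float:
    """Clamp to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)

def h_swish(x: float) -> float:
    """h-swish: piecewise approximation x * ReLU6(x + 3) / 6."""
    return x * relu6(x + 3.0) / 6.0

def max_abs_error(lo: float = -8.0, hi: float = 8.0, steps: int = 1601) -> float:
    """Largest |swish - h_swish| over a uniform grid on [lo, hi]."""
    worst = 0.0
    for i in range(steps):
        x = lo + (hi - lo) * i / (steps - 1)
        worst = max(worst, abs(swish(x) - h_swish(x)))
    return worst

if __name__ == "__main__":
    for x in (-4.0, -3.0, -1.0, 0.0, 1.0, 3.0, 4.0):
        print(f"x={x:+.1f}  swish={swish(x):+.4f}  h-swish={h_swish(x):+.4f}")
    print(f"max |error| on [-8, 8]: {max_abs_error():.3f}")  # about 0.142, near x = +/-3
```

The largest gap sits at the breakpoints x = ±3, where h-swish switches between its linear, quadratic, and zero segments; that residual error is the kind of headroom a better hardware-friendly approximation can target.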

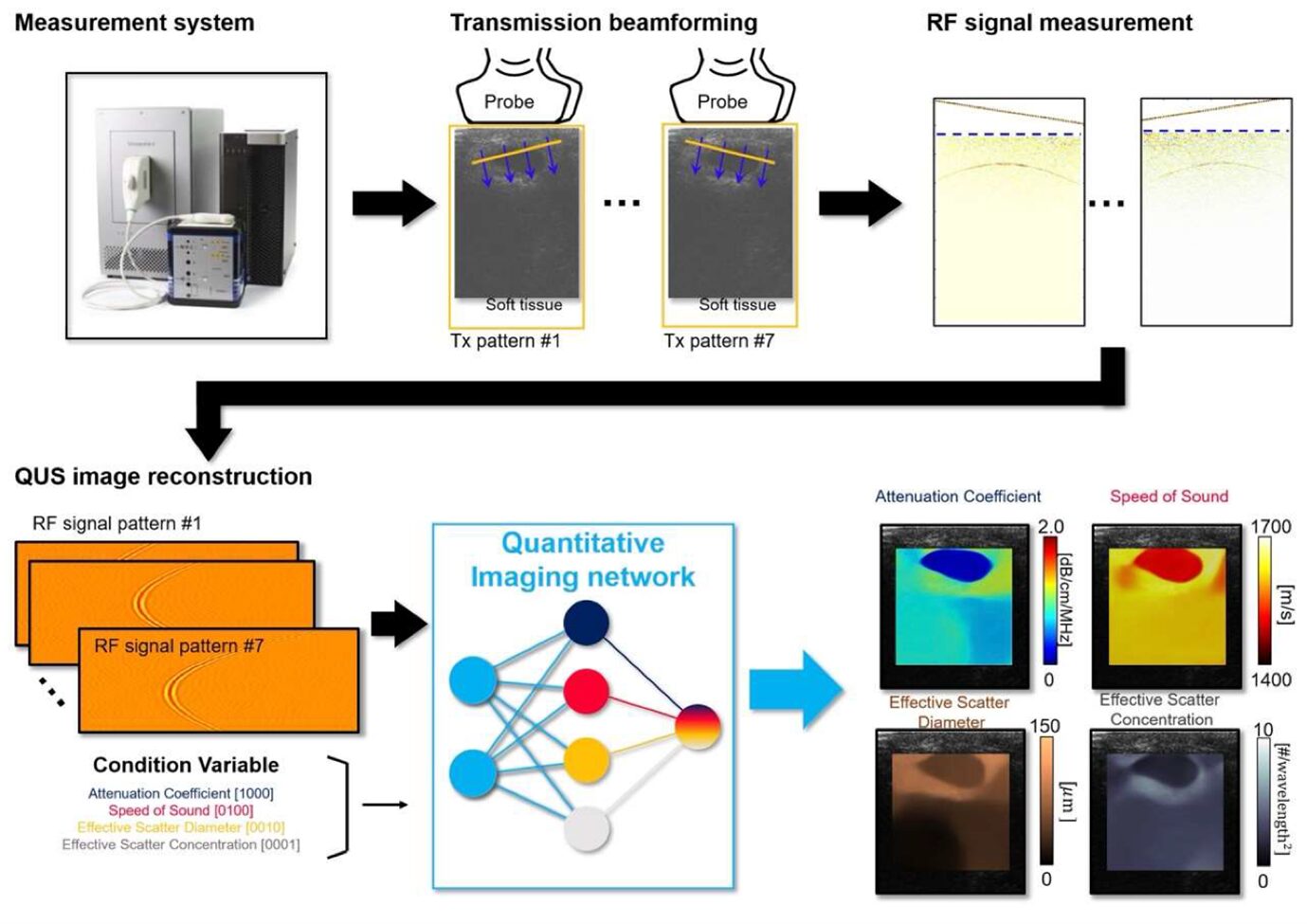

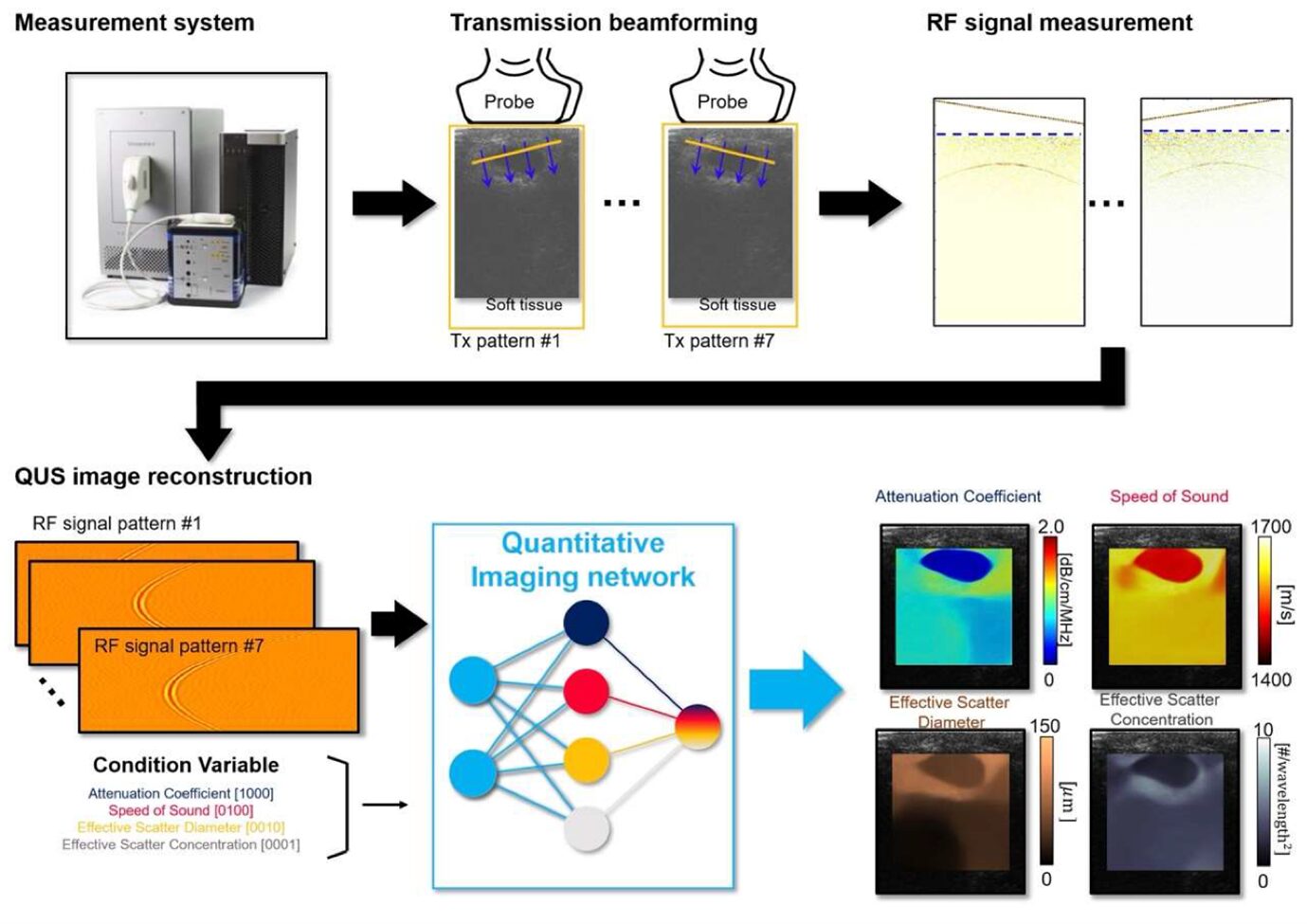

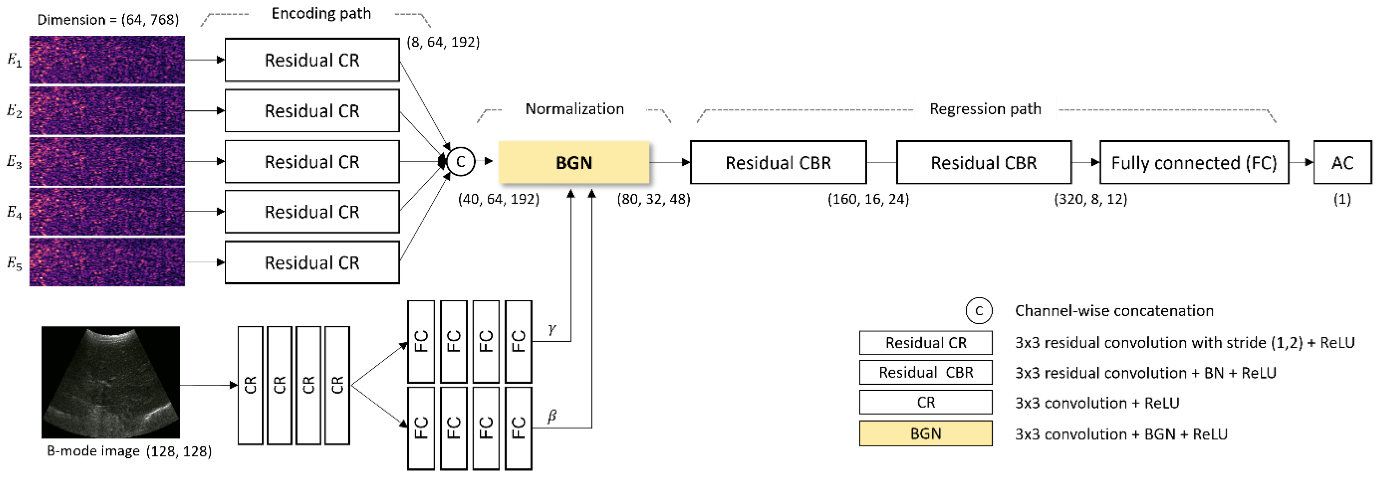

Hyuksool Kwon*, Seok-Hwan Oh*, Myeong-Gee Kim, Youngmin Kim, Guil Jung, Hyeon-Jik Lee, Sang-Yun Kim, Hyeon-Min Bae, “Artificial Intelligence-Enhanced Quantitative Ultrasound for Breast Cancer: Pilot Study on Quantitative Parameters and Biopsy Outcomes”, Diagnostics, June 2024.

Abstract: Traditional B-mode ultrasound has difficulties distinguishing benign from malignant breast lesions. It appears that Quantitative Ultrasound (QUS) may offer advantages. We examined the QUS imaging system’s potential, utilizing parameters like Attenuation Coefficient (AC), Speed of Sound (SoS), Effective Scatterer Diameter (ESD), and Effective Scatterer Concentration (ESC) to enhance diagnostic accuracy. B-mode images and radiofrequency signals were gathered from breast lesions. These parameters were processed and analyzed by a QUS system trained on a simulated acoustic dataset and equipped with an encoder-decoder structure. Fifty-seven patients were enrolled over six months. Biopsies served as the diagnostic ground truth. AC, SoS, and ESD showed significant differences between benign and malignant lesions (p < 0.05), but ESC did not. A logistic regression model was developed, demonstrating an area under the receiver operating characteristic curve of 0.90 (95% CI: 0.78, 0.96) for distinguishing between benign and malignant lesions. In conclusion, the QUS system shows promise in enhancing diagnostic accuracy by leveraging AC, SoS, and ESD. Further studies are needed to validate these findings and optimize the system for clinical use.

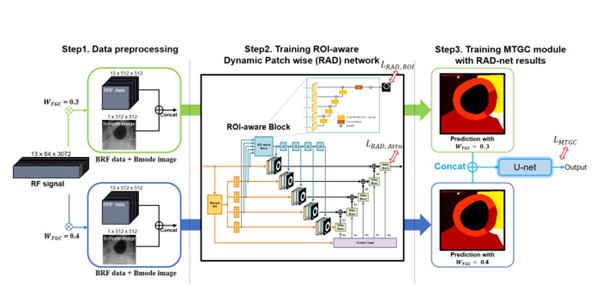

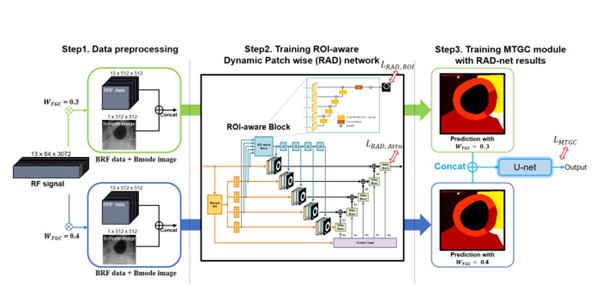

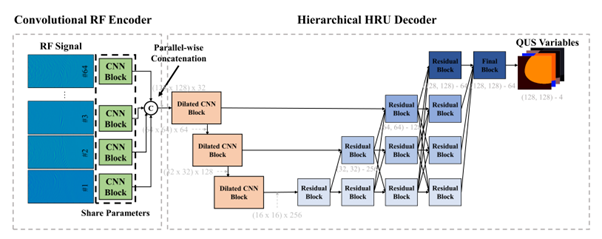

Guil Jung, Youngmin Kim, Hyeon-Jik Lee, Seok-Hwan Oh, Myeong-Gee Kim, Hyuk-Sool Kwon, Hyeon-Min Bae, “Myocardium Tissue Characteristics Quantification in Echocardiography”, IEEE International Symposium on Biomedical Imaging (ISBI), May 2024.

Abstract: Echocardiography is a preemptive imaging modality for assessing cardiac conditions in emergencies. However, its operator-dependent nature presents critical limitations. To address these challenges, we introduce a learning-based quantitative ultrasound (QUS) system that reconstructs tissue biomechanical properties over a broad and deep area using a sector probe for echocardiography. An ROI-aware dynamic patch-wise network efficiently decodes the received data by applying distinct weights according to the position of the left ventricle wall. In addition, the proposed MTGC module compensates for errors in reconstructing attenuation coefficients that stem from depth-dependent variations in signal attenuation caused by tissue heterogeneity. In the ablation study, the proposed network improved performance by 2.34% over the baseline, and we validated its effectiveness in diagnosing heart disease through phantom and ex-vivo tests. These experiments show the potential for a new diagnostic paradigm based on quantitative indices in echocardiography.

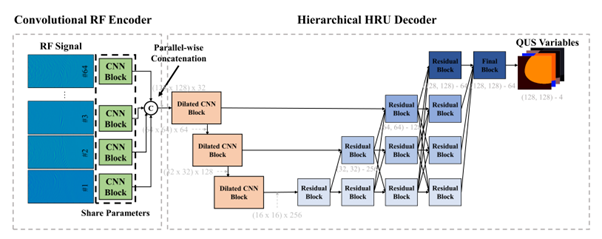

Youngmin Kim, Myeong-Gee Kim, Seok-Hwan Oh, Guil Jung, Hyeon-Jik Lee, Hyuk-Sool Kwon, Hyeon-Min Bae, “Quantitative Ultrasound Imaging Using Conventional Multi-Scanline Beamforming”, IEEE International Symposium on Biomedical Imaging (ISBI), May 2024.

Abstract: Recent studies have introduced quantitative ultrasound (QUS) to extract tissue acoustic properties from pulse-echo data, employing neural network models to extract pathological features from raw radio-frequency (RF) signals. Nonetheless, implementing QUS on widely deployed ultrasound equipment faces two significant challenges. First, the TX beam pattern required for QUS may differ from the pattern optimized for B-mode imaging, requiring additional steps such as a firmware update. Second, variation in the number of transducer elements and their corresponding regions of interest (ROIs) requires retraining for each transducer. This paper presents a QUS imaging technique based on multi-scanline transmission (MST) beamforming, which is commonly employed in conventional ultrasound equipment. Additionally, we propose a unique network architecture and training data augmentation techniques that leverage MST’s distinctive properties. Furthermore, we demonstrate the effectiveness of the proposed QUS approach in untrained environments, making it readily applicable to clinical use.

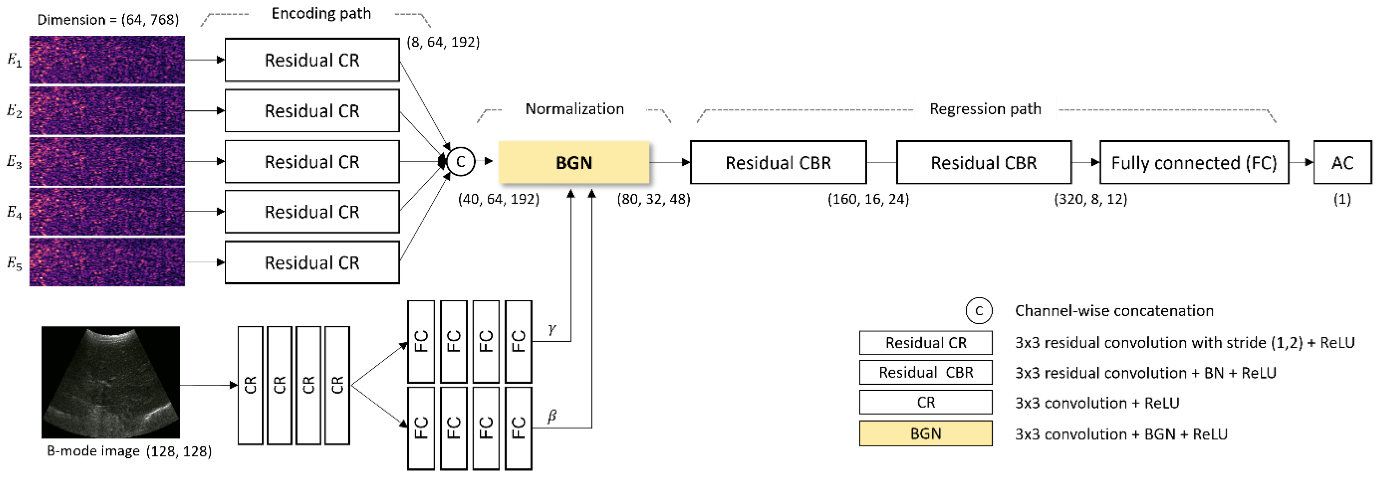

Hyuksool Kwon*, Myeong-Gee Kim*, Seok-Hwan Oh, Youngmin Kim, Guil Jung, Hyeon-Jik Lee, Sang-Yun Kim, Hyeon-Min Bae, “Application of Quantitative Ultrasonography and Artificial Intelligence for Assessing Severity of Fatty Liver: A Pilot Study”, Diagnostics, Feb. 2024.

Abstract: Non-alcoholic fatty liver disease (NAFLD), which is prevalent among people with conditions such as obesity and diabetes, is globally significant. Existing ultrasound diagnostic methods, though widely used, often lack accuracy and precision, necessitating innovative solutions such as AI. This study aims to validate an AI-enhanced quantitative ultrasound (QUS) algorithm for NAFLD severity assessment and to compare its performance with Magnetic Resonance Imaging Proton Density Fat Fraction (MRI-PDFF), a conventional diagnostic tool. A single-center cross-sectional pilot study was conducted. Liver fat content was estimated using the AI-enhanced quantitative ultrasound attenuation coefficient (QUS-AC) of Barreleye Inc., obtained with an AI-based QUS algorithm, and two conventional ultrasound techniques: the FibroTouch Ultrasound Attenuation Parameter (UAP) and Canon Attenuation Imaging (ATI). The results were compared with MRI-PDFF values, and the intraclass correlation coefficient (ICC) was also assessed. QUS-AC correlated significantly with MRI-PDFF, with an R value of 0.95, whereas ATI and UAP showed lower correlations with MRI-PDFF, with R values of 0.73 and 0.51, respectively. In addition, the ICC for QUS-AC was 0.983 for individual observations, compared with 0.76 and 0.39 for ATI and UAP, respectively. Our findings suggest that AC with AI-enhanced QUS could serve as a valuable tool for the non-invasive diagnosis of NAFLD.

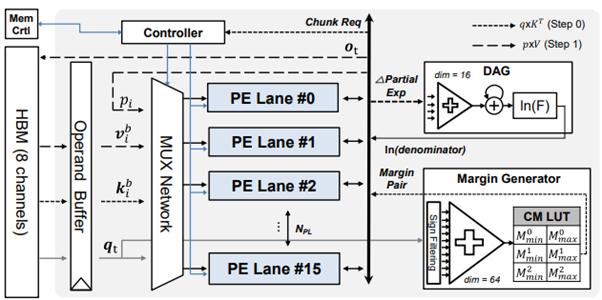

Junyoung Park, Myeonggu Kang, Yunki Han, Yanggon Kim, Jaekang Shin, Lee-Sup Kim, “Token-Picker: Accelerating Attention in Text Generation with Minimized Memory Transfer via Probability Estimation,” IEEE/ACM Design Automation Conference, 2024

Abstract: The attention mechanism in text generation is memory-bound due to its sequential nature. Therefore, off-chip memory accesses should be minimized for faster execution. Although previous methods addressed this by pruning unimportant tokens, they fall short in selectively removing tokens with near-zero attention probabilities in each instance. Our method estimates the probability before the softmax function, effectively removing low-probability tokens and achieving a 12.1x pruning ratio without fine-tuning. Additionally, we present a hardware design that supports seamless on-demand off-chip access. Our approach reduces memory accesses by 2.6x, leading to an average 2.3x speedup and a 2.4x improvement in energy efficiency.
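One simple way to decide, before the softmax is evaluated, that a token's attention probability must be small is the bound p_i = exp(s_i) / sum_j exp(s_j) <= exp(s_i - s_max), which holds because the denominator contains exp(s_max). The Python sketch below prunes every token whose bound falls below a threshold, so the value row of a pruned token is never read. This bound is an illustrative assumption, not Token-Picker's probability estimator, which the abstract does not describe; the function names and threshold are hypothetical.

```python
import math
from typing import List, Sequence

# Illustrative softmax-bound pruning; NOT Token-Picker's actual estimator.

def select_tokens(scores: Sequence[float], threshold: float) -> List[int]:
    """Keep tokens whose softmax probability could reach `threshold`.

    Since sum_j exp(s_j) >= exp(s_max), p_i <= exp(s_i - s_max); a token with
    s_i < s_max + ln(threshold) is guaranteed to have p_i < threshold.
    """
    cutoff = max(scores) + math.log(threshold)
    return [i for i, s in enumerate(scores) if s >= cutoff]

def attend(scores: Sequence[float], values: Sequence[Sequence[float]],
           keep: List[int]) -> List[float]:
    """Softmax-weighted sum of value rows, reading only the kept tokens."""
    m = max(scores[i] for i in keep)
    weights = {i: math.exp(scores[i] - m) for i in keep}
    total = sum(weights.values())
    dim = len(values[keep[0]])
    return [sum(weights[i] * values[i][d] for i in keep) / total for d in range(dim)]

if __name__ == "__main__":
    scores = [0.0, 10.0, 9.0, -5.0]          # made-up attention scores
    values = [[1.0], [2.0], [3.0], [4.0]]    # made-up value rows
    keep = select_tokens(scores, 1e-3)
    print(keep)  # [1, 2]
    full = attend(scores, values, list(range(len(scores))))
    pruned = attend(scores, values, keep)
    print(full, pruned)  # nearly identical outputs, half the value rows read
```

Because the bound needs only the maximum score, the keep/drop decision can be made before any value rows are fetched; a real design would also have to bound the cost of finding that maximum.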

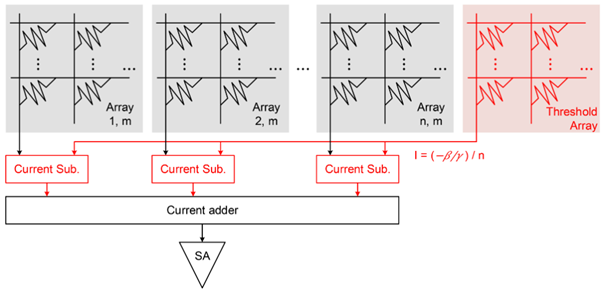

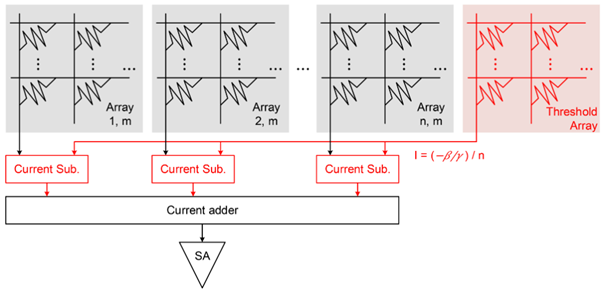

Hyeonuk Kim, Youngbeom Jung, Lee-Sup Kim, “ADC-Free ReRAM-Based In-Situ Accelerator for Energy-Efficient Binary Neural Networks,” IEEE Transactions on Computers (Volume: 73, Issue: 2, February 2024)

Abstract: With the ever-increasing parameter size of deep learning models, conventional ASIC-based accelerators in mobile environments suffer from a tight energy budget due to limited memory capacity and frequent data movement. Binary neural networks (BNNs) deployed in ReRAM-based in-situ accelerators provide a promising solution, and various related architectures have been proposed recently. However, their performance is largely compromised by the tremendous cost of domain conversion via analog-to-digital converters (ADCs), which are essential for mixed-signal processing in ReRAM. This article identifies two root causes of the need for such ADCs and proposes effective solutions to address them. First, we minimize redundant operations in BNNs and reduce the number of ReRAM arrays with ADCs by approximately half. We also propose a partial-sum range adjustment technique based on layer remapping to deal with the remaining ADCs. Proper handling of the partial-sum distribution allows ReRAM-based in-situ processing without domain conversion, completely bypassing the need for ADCs. Experimental results show that the proposed architecture achieves a 3.44x speedup and 91.5% energy savings, making it an attractive solution for on-device AI at the edge.
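In a BNN, weights and activations are +1 or -1, so a dot product reduces to counting sign agreements: with +1 encoded as bit 1 and -1 as bit 0, a·b = 2·popcount(XNOR(a, b)) - n. In a ReRAM crossbar these partial sums arise in the analog domain, and digitizing them is what the abstract attributes the ADC cost to. The Python sketch below shows only the digital equivalent of this operation and its partial-sum range of [-n, n]; it does not model the paper's redundancy elimination or layer-remapping technique, and the helper names are hypothetical.

```python
from typing import Sequence

# Digital XNOR-popcount view of a binary dot product (illustration only).

def encode(vec: Sequence[int]) -> int:
    """Encode a +/-1 vector as bits: +1 -> 1, -1 -> 0 (bit i holds element i)."""
    bits = 0
    for i, v in enumerate(vec):
        if v not in (-1, 1):
            raise ValueError("BNN operands must be +1 or -1")
        if v == 1:
            bits |= 1 << i
    return bits

def xnor_popcount_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two encoded +/-1 vectors of length n via XNOR + popcount."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask  # 1 wherever the two signs match
    matches = bin(agree).count("1")
    return 2 * matches - n             # always within [-n, n]

if __name__ == "__main__":
    a = [1, -1, 1, 1]
    b = [1, 1, -1, 1]
    print(xnor_popcount_dot(encode(a), encode(b), len(a)))  # 0
    print(sum(x * y for x, y in zip(a, b)))                 # 0, the same result
```

Because the result only takes values in [-n, n] in steps of 2, the range of partial sums a crossbar column must represent is known in advance, which is the quantity a partial-sum range adjustment would operate on.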

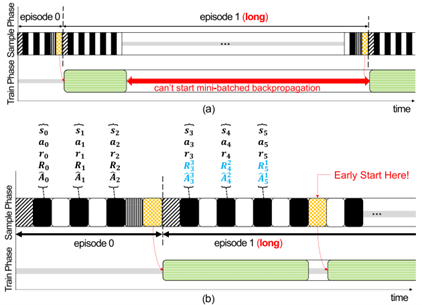

Yang-Gon Kim, Yun-Ki Han, Jae-Kang Shin, Jun-Kyum Kim, Lee-Sup Kim, “Accelerating Deep Reinforcement Learning via Phase-Level Parallelism for Robotics Applications,” IEEE Computer Architecture Letters (Volume: 23, Issue: 1, Jan.-June 2024)

Abstract: Deep Reinforcement Learning (DRL) plays a critical role in controlling future intelligent machines such as robots and drones. Continually retrained on newly arriving real-world data, DRL provides optimal autonomous control solutions that adapt to ever-changing environments. However, DRL repeats inference and training, both of which are computationally expensive on resource-constrained mobile/embedded platforms. Even worse, DRL's unique execution pattern causes severe hardware underutilization. To overcome this inefficiency, we propose Train Early Start, a new execution pattern for building efficient DRL algorithms. Train Early Start parallelizes inference and training, hiding the serialized performance bottleneck and dramatically improving hardware utilization. Compared with a state-of-the-art mobile SoC, Train Early Start achieves a 1.42x speedup and a 1.13x improvement in energy efficiency.