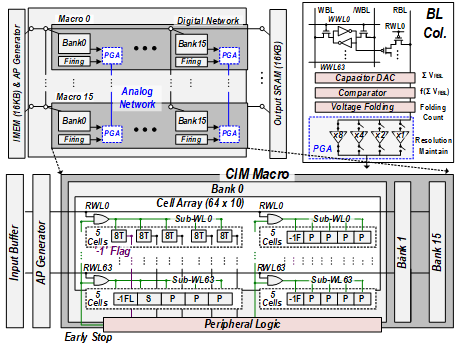

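Previous computing-in-memory (CIM) processors have not achieved energy efficiency above 100 TOPS/W, because they must drive multiple word lines (WLs) for parallel processing and need many high-precision ADCs for high accuracy. Some processors relied heavily on weight sparsity to gain energy efficiency, but in practical cases (e.g., an ImageNet task with ResNet-18) sparsity is limited to under 30%, which caps the achievable gain.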

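This paper proposes an energy-efficient neuromorphic computing-in-memory (CIM) processor with four key features. Most-significant-bit (MSB) word skipping is proposed to reduce bit-line (BL) activity, and early stopping is proposed to lower BL activity further. In addition, mixed-mode firing is proposed for aggregation across multiple macros, and voltage folding is proposed to extend the dynamic range. As a result, the proposed CIM processor achieves world-leading energy efficiency of 62.1 TOPS/W (I=4b, W=8b) and 310.4 TOPS/W (I=4b, W=1b).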

Related papers:

- Kim, et al. “Neuro-CIM: A 310.4TOPS/W Neuromorphic Computing-in-Memory Processor with Low WL/BL activity and Digital-Analog Mixed-mode Neuron Firing”, Symposium on VLSI Circuits (S. VLSI), Jun. 2022

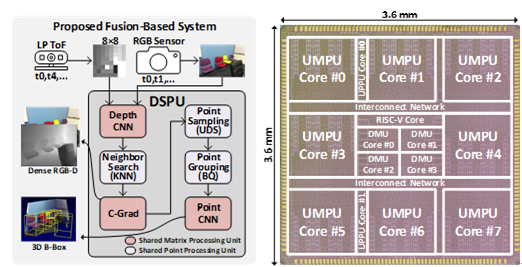

A low-latency, low-power system-on-chip for dense RGB-D acquisition and 3D bounding-box extraction, the DSPU, is proposed. The DSPU produces accurate dense RGB-D data through CNN-based monocular depth estimation and sensor fusion with a low-power ToF sensor. Furthermore, it runs a 3D point-cloud-based neural network for 3D bounding-box extraction. The architecture of the DSPU accelerates the system by alleviating its data-intensive and computation-intensive operations. As a result, the DSPU achieves real-time end-to-end RGB-D acquisition and 3D bounding-box extraction at 281.6 mW.

Related papers:

- Im, Dongseok, et al. “DSPU: A 281.6 mW Real-Time Depth Signal Processing Unit for Deep Learning-Based Dense RGB-D Data Acquisition with Depth Fusion and 3D Bounding Box Extraction in Mobile Platforms.” 2022 IEEE International Solid-State Circuits Conference (ISSCC). Vol. 65. IEEE, 2022.

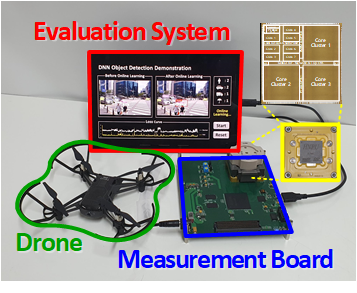

This paper presents HNPU, an energy-efficient DNN training processor based on algorithm-hardware co-design. The HNPU supports a stochastic dynamic fixed-point representation and a layer-wise adaptive precision searching unit for low-bit-precision training. It additionally exploits slice-level reconfigurability and sparsity to maximize efficiency in both DNN inference and training. An adaptive-bandwidth reconfigurable accumulation network enables reconfigurable DNN allocation and maintains high core utilization across various bit-precision conditions. Fabricated in a 28 nm process, the HNPU achieves at least 5.9× higher energy efficiency and 2.5× higher area efficiency in actual DNN training than previous state-of-the-art on-chip learning processors.
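As a rough illustration of what a stochastic dynamic fixed-point format involves, the sketch below quantizes a group of values to signed integers that share one power-of-two scale, rounding each value up or down at random with probability equal to its fractional part. It is a generic sketch under stated assumptions, not the HNPU's implementation: the function names and the rule used to pick the shared exponent are hypothetical.

```python
import math
import random


def stochastic_round(x: float, rng: random.Random) -> int:
    """Round x down or up at random so that the expected result equals x."""
    lower = math.floor(x)
    return lower + (1 if rng.random() < x - lower else 0)


def quantize_group(values, bits, rng):
    """Map floats to signed `bits`-bit integers sharing one power-of-two scale.

    Returns (integers, exponent); each value is approximated by
    integer * 2**exponent. The exponent rule is an assumption for illustration.
    """
    qmax = 2 ** (bits - 1) - 1
    qmin = -(2 ** (bits - 1))
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0] * len(values), 0
    # Smallest exponent for which the largest magnitude still fits in range.
    exponent = math.ceil(math.log2(max_abs / qmax))
    scale = 2.0 ** exponent
    ints = [min(qmax, max(qmin, stochastic_round(v / scale, rng))) for v in values]
    return ints, exponent


def dequantize_group(ints, exponent):
    """Recover approximate float values from the shared-exponent integers."""
    return [i * 2.0 ** exponent for i in ints]


rng = random.Random(0)  # seeded only to make the example repeatable
ints, exponent = quantize_group([0.8, -0.31, 0.05, 0.002], bits=8, rng=rng)
approx = dequantize_group(ints, exponent)
```

Because the rounding is unbiased, small gradient-like values are not systematically flushed to zero, which is the usual motivation for stochastic rounding in low-precision training.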

Related papers:

- Han, Donghyeon, et al. “HNPU: An adaptive DNN training processor utilizing stochastic dynamic fixed-point and active bit-precision searching.” IEEE Journal of Solid-State Circuits 56.9 (2021): 2858-2869.

The authors propose a heterogeneous floating-point (FP) computing architecture that maximizes energy efficiency by optimizing exponent processing and mantissa processing separately. The proposed exponent-computing-in-memory (ECIM) architecture and mantissa-free-exponent-computing (MFEC) algorithm reduce the power consumption of both the memory and the FP MAC while resolving the limitations of previous FP computing-in-memory processors. In addition, a bfloat16 DNN training processor with the proposed features and sparsity exploitation support is implemented and fabricated in 28 nm CMOS technology. It achieves 13.7 TFLOPS/W energy efficiency while supporting FP operations with a CIM architecture.
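To see why exponent and mantissa processing can be handled by separate datapaths at all, the sketch below splits two bfloat16 operands into their fields and multiplies them with an integer addition on the exponents and an integer multiplication on the significands. It only illustrates the number format; it is not the ECIM circuit or the MFEC algorithm, the function names are hypothetical, and it assumes normal, finite, nonzero inputs that are exactly representable in bfloat16.

```python
import struct


def bfloat16_fields(x: float):
    """Return (sign, biased_exponent, mantissa) of x truncated to bfloat16."""
    bits32 = struct.unpack(">I", struct.pack(">f", x))[0]
    bits16 = bits32 >> 16          # keep the top 16 bits (truncation, no rounding)
    sign = bits16 >> 15
    exponent = (bits16 >> 7) & 0xFF
    mantissa = bits16 & 0x7F
    return sign, exponent, mantissa


def multiply_split(a: float, b: float) -> float:
    """Multiply two normal bfloat16 values with separate exponent/mantissa paths."""
    sa, ea, ma = bfloat16_fields(a)
    sb, eb, mb = bfloat16_fields(b)
    # Exponent path: one integer addition (the bias of 127 is removed once).
    exponent = ea + eb - 127
    # Mantissa path: integer product of the 8-bit significands (hidden 1 restored).
    significand = (0x80 | ma) * (0x80 | mb)   # represents significand / 2**14
    sign = -1.0 if sa ^ sb else 1.0
    return sign * (significand / 2 ** 14) * 2.0 ** (exponent - 127)


product = multiply_split(1.5, 2.0)
```

The exponent path needs only narrow integer adders, while the multiplier is confined to the mantissa path, which is what makes optimizing the two independently plausible.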

Related papers:

- Lee et al., “ECIM: Exponent Computing in Memory for an Energy-Efficient Heterogeneous Floating-Point DNN Training Processor,” in IEEE Micro, Jan 2022

- Lee et al., “An Energy-efficient Floating-Point DNN Processor using Heterogeneous Computing Architecture with Exponent-Computing-in-Memory”, 2021 IEEE Hot Chips 33 Symposium (HCS), 2021

- Lee et al., “A 13.7 TFLOPS/W Floating-point DNN Processor using Heterogeneous Computing Architecture with Exponent-Computing-in-Memory,” 2021 Symposium on VLSI Circuits, 2021

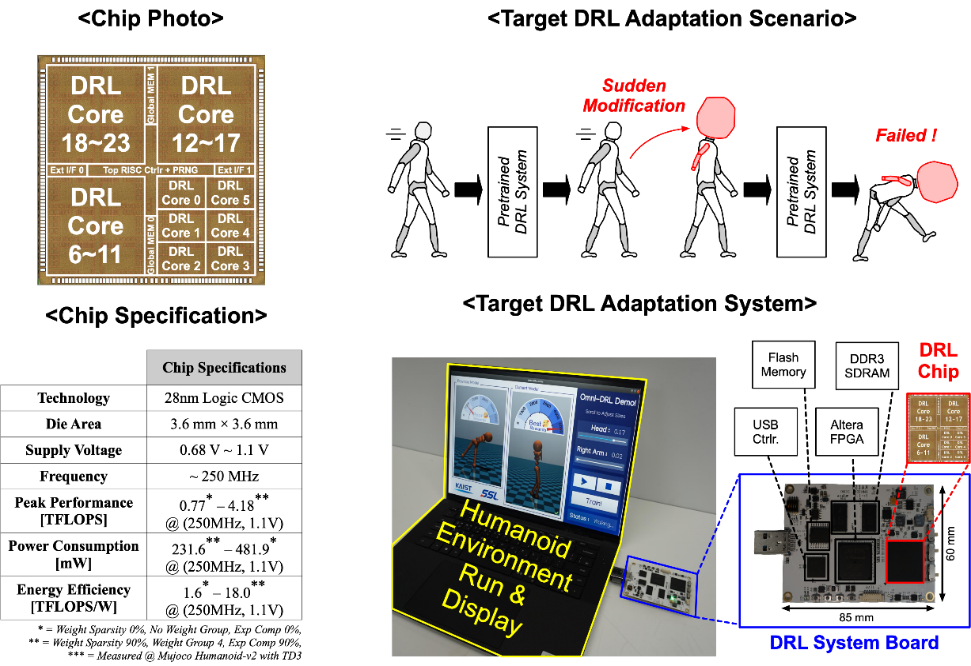

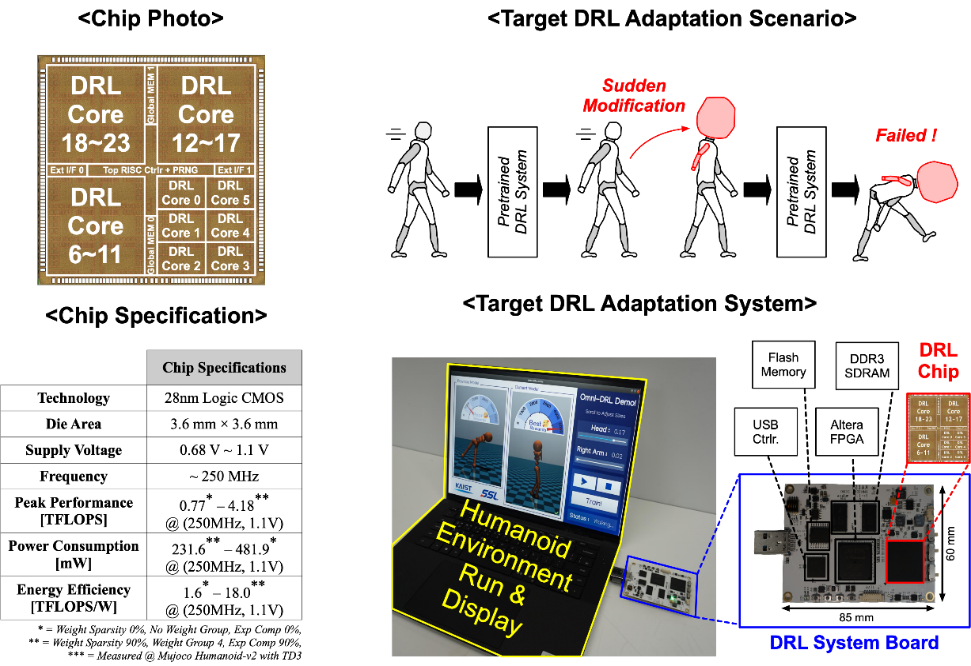

We present an energy-efficient deep reinforcement learning (DRL) processor, OmniDRL, for DRL training on edge devices. The need for on-device DRL training is growing because DRL can adapt to each individual user. However, the massive amount of external and internal memory access limits the implementation of DRL training on resource-constrained platforms. OmniDRL proposes four key features that reduce external memory access by compressing as much data as possible and reduce internal memory access by processing the compressed data directly. Group-sparse training (GST) enables a high weight compression ratio in every DRL iteration. A group-sparse training core is proposed to take full advantage of the compressed weights from GST. Exponent mean delta encoding additionally compresses the exponents of both weights and feature maps. A world-first on-chip sparse weight transposer enables DRL training on compressed weights without an off-chip transposer. OmniDRL is fabricated in 28 nm CMOS technology and occupies a 3.6 × 3.6 mm² die area. It achieves 7.42 TFLOPS/W energy efficiency when training a robot agent (MuJoCo HalfCheetah, TD3), which is 2.4× higher than the previous state-of-the-art.
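The idea behind compressing exponents as deltas from a mean can be sketched as follows: values in the same tensor tend to have similar magnitudes, so storing one shared mean exponent plus small per-value differences needs fewer bits than storing every exponent in full. This is a minimal sketch of that general idea, not OmniDRL's actual encoding; the function names, the rounding of the mean, and the bit-width rule are assumptions made for illustration.

```python
import math


def exponents_of(values):
    """Binary exponents e such that v = m * 2**e with 0.5 <= |m| < 1."""
    return [math.frexp(v)[1] for v in values]


def encode_mean_delta(exponents):
    """Return (mean, deltas) where each exponent equals mean + delta."""
    mean = round(sum(exponents) / len(exponents))
    return mean, [e - mean for e in exponents]


def decode_mean_delta(mean, deltas):
    """Invert encode_mean_delta exactly."""
    return [mean + d for d in deltas]


def signed_bits_needed(deltas):
    """Smallest two's-complement width that can hold every delta."""
    bits = 1
    while min(deltas) < -(1 << (bits - 1)) or max(deltas) > (1 << (bits - 1)) - 1:
        bits += 1
    return bits


weights = [0.011, 0.02, 0.015, 0.03]      # made-up values of similar magnitude
exps = exponents_of(weights)
mean, deltas = encode_mean_delta(exps)
width = signed_bits_needed(deltas)         # compare with the 8-bit exponent field
```

The encoding is lossless for the exponent field, so the saving depends entirely on how tightly the exponents cluster around their mean.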

Related papers:

- Lee et al., “OmniDRL: An Energy-Efficient Deep Reinforcement Learning Processor With Dual-Mode Weight Compression and Sparse Weight Transposer,” in IEEE Journal of Solid-State Circuits, April 2022

- Lee et al., “OmniDRL: An Energy-Efficient Mobile Deep Reinforcement Learning Accelerators with Dual-mode Weight Compression and Direct Processing of Compressed Data”, 2021 IEEE Hot Chips 33 Symposium (HCS), 2021

- Lee et al., “OmniDRL: A 29.3 TFLOPS/W Deep Reinforcement Learning Processor with Dual-mode Weight Compression and On-chip Sparse Weight Transposer,” 2021 Symposium on VLSI Circuits, 2021

- Lee et al., “Low-power Autonomous Adaptation System with Deep Reinforcement Learning,” 2022 AICAS, 2022

- Lee et al., “Energy-Efficient Deep Reinforcement Learning Accelerator Designs for Mobile Autonomous Systems,” 2021 AICAS, 2021

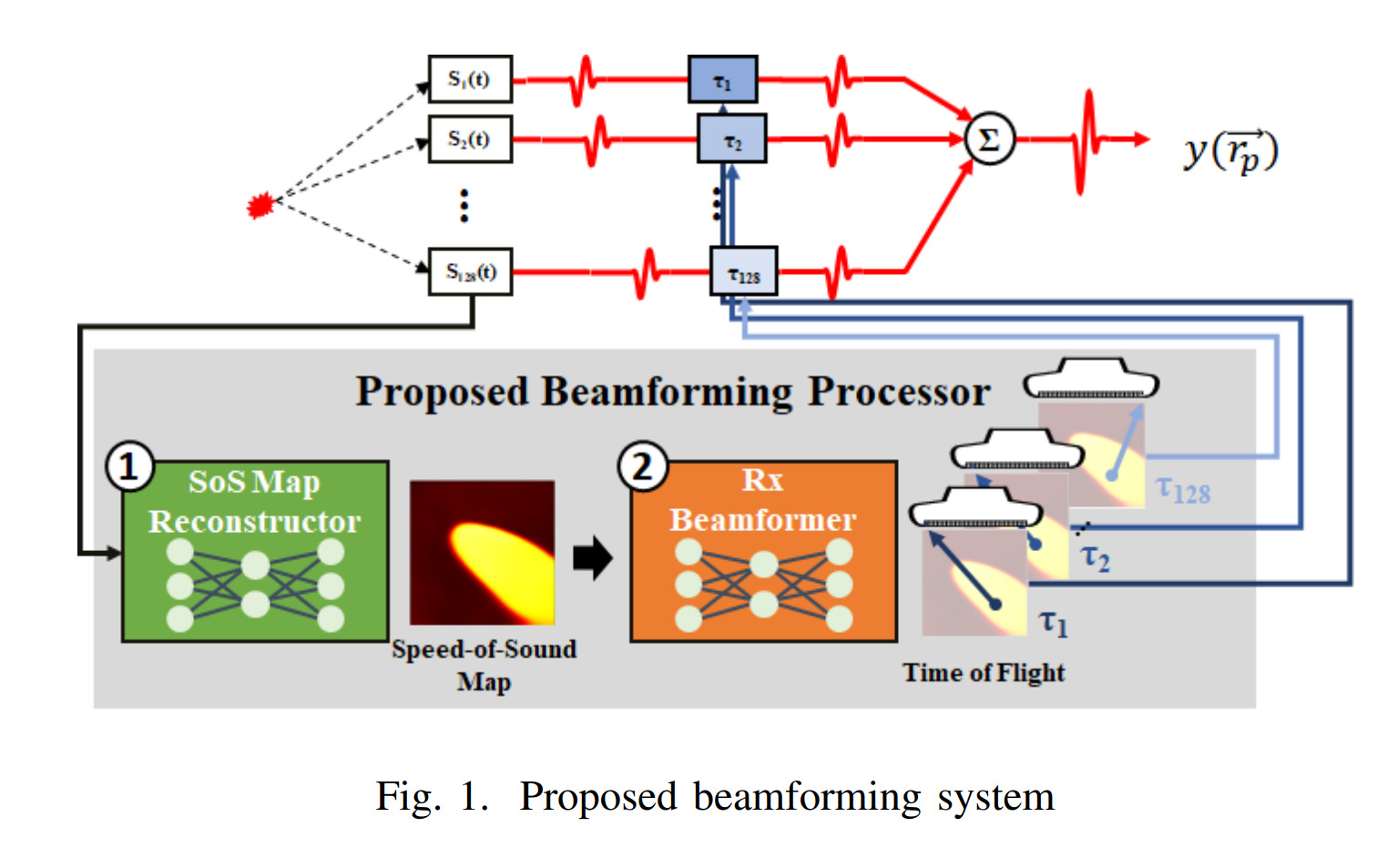

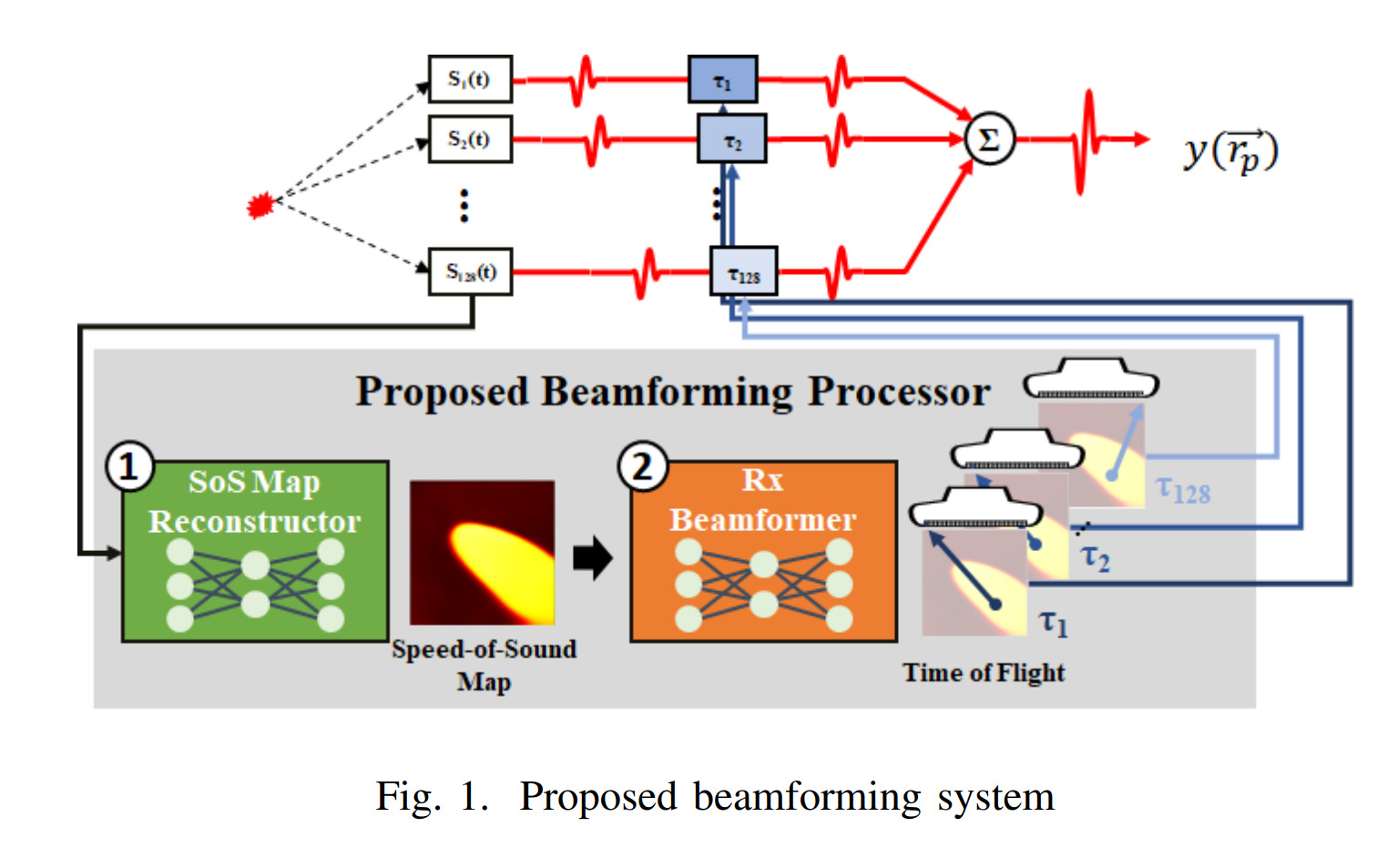

Abstract: The conventional delay-and-sum algorithm assumes that the target object is composed of substances with an identical speed of sound (SoS), i.e., 1540 m/s, and applies the corresponding delays to the received RF signals to synthesize output images. However, this assumption compromises image resolution because body tissues are inhomogeneous. In this paper, we propose an SoS-adaptive Rx beamforming method that generates high-resolution ultrasonic images. A neural network (NN) approach is adopted to reconstruct the SoS distribution and to determine the accurate time-of-flight (ToF) of each channel from the generated SoS map.
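The effect of a wrong SoS assumption on conventional delay-and-sum can be seen in a toy example. The sketch below covers only the conventional fixed-SoS baseline, not the proposed NN-based method; the array geometry, sampling rate, plane-wave transmit model, and all function names are assumptions chosen for illustration.

```python
import math


def round_trip_sample(px, pz, x_el, c, fs):
    """Sample index of the echo from (px, pz) received at element x_el.

    Assumes a plane-wave transmit that reaches depth pz at time pz / c.
    """
    t = (pz + math.hypot(px - x_el, pz)) / c
    return round(t * fs)


def delay_and_sum(rf, element_x, fs, c, px, pz):
    """Sum each channel's RF sample at its computed delay for pixel (px, pz)."""
    total = 0.0
    for channel, x_el in zip(rf, element_x):
        idx = round_trip_sample(px, pz, x_el, c, fs)
        if 0 <= idx < len(channel):
            total += channel[idx]
    return total


def simulate_point_echo(element_x, fs, c_true, sx, sz, n_samples):
    """Idealized RF data: one unit impulse per channel from a point scatterer."""
    rf = [[0.0] * n_samples for _ in element_x]
    for channel, x_el in zip(rf, element_x):
        idx = round_trip_sample(sx, sz, x_el, c_true, fs)
        if 0 <= idx < n_samples:
            channel[idx] = 1.0
    return rf


element_x = [(i - 3.5) * 0.003 for i in range(8)]   # 8 elements, 3 mm pitch
fs = 40e6                                            # 40 MHz sampling
rf = simulate_point_echo(element_x, fs, c_true=1480.0, sx=0.0, sz=0.02, n_samples=2048)
matched = delay_and_sum(rf, element_x, fs, 1480.0, 0.0, 0.02)     # correct SoS
mismatched = delay_and_sum(rf, element_x, fs, 1540.0, 0.0, 0.02)  # fixed 1540 m/s
```

With the matched SoS every channel contributes coherently at the scatterer position, whereas the fixed 1540 m/s delays miss the echoes in this idealized impulse model. Real RF pulses have finite length, so the loss in practice is a blurring rather than a complete dropout.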

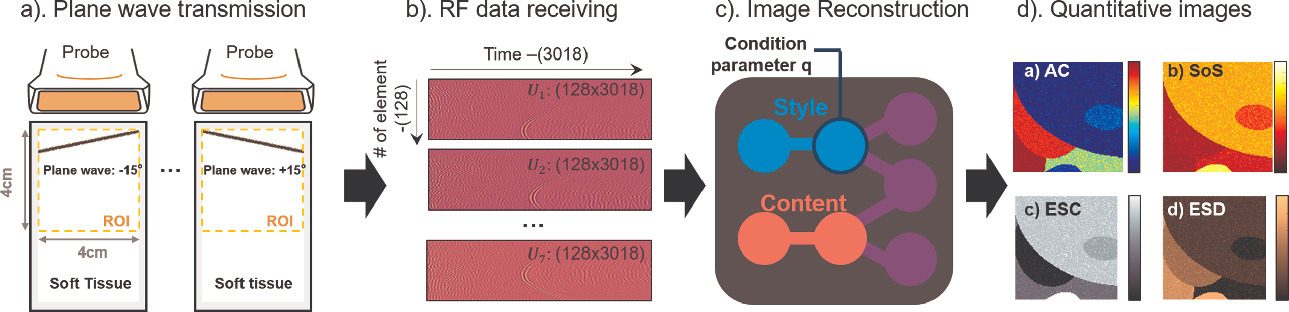

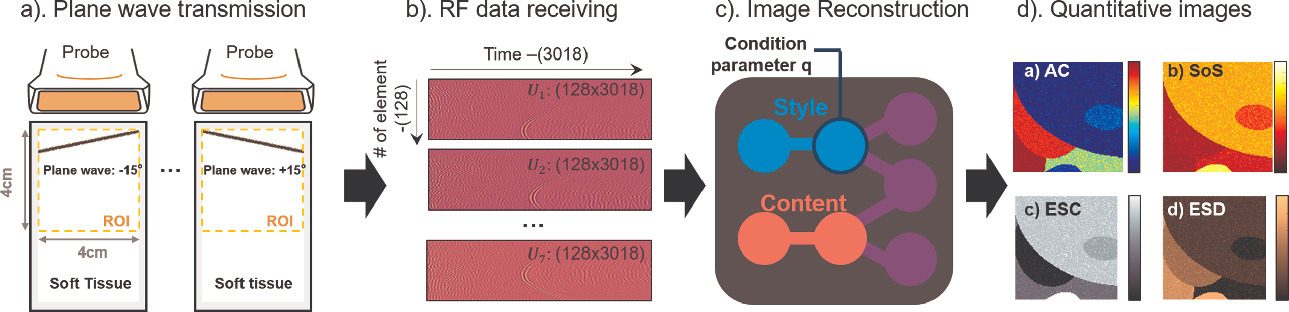

Abstract: In this paper, we present a scalable lesion-quantifying neural network based on b-mode-to-quantitative neural style transfer. Quantitative tissue characteristics have great potential in diagnostic ultrasound because pathological changes cause variations in biomechanical properties. The proposed system simultaneously provides four clinically critical quantitative tissue images (sound speed, attenuation coefficient, effective scatterer diameter, and effective scatterer concentration) by applying quantitative style information to structurally accurate b-mode images. The proposed system was evaluated through numerical simulation and through phantom and ex-vivo measurements. The numerical simulation shows that the proposed framework outperforms the baseline model as well as existing state-of-the-art methods while significantly reducing the number of parameters per quantitative variable. In phantom and ex-vivo studies, the proposed BQI-Net achieves sufficient sensitivity and specificity in identifying and classifying cancerous lesions.

Myeong-Gee Kim, Seok-Hwan Oh, Youngmin Kim, Hyuksool Kwon, Hyeon-Min Bae, “Learning-based attenuation quantification in abdominal ultrasound”, International Conference on Medical Image Computing & Computer Assisted Intervention (MICCAI), Sept. 2021

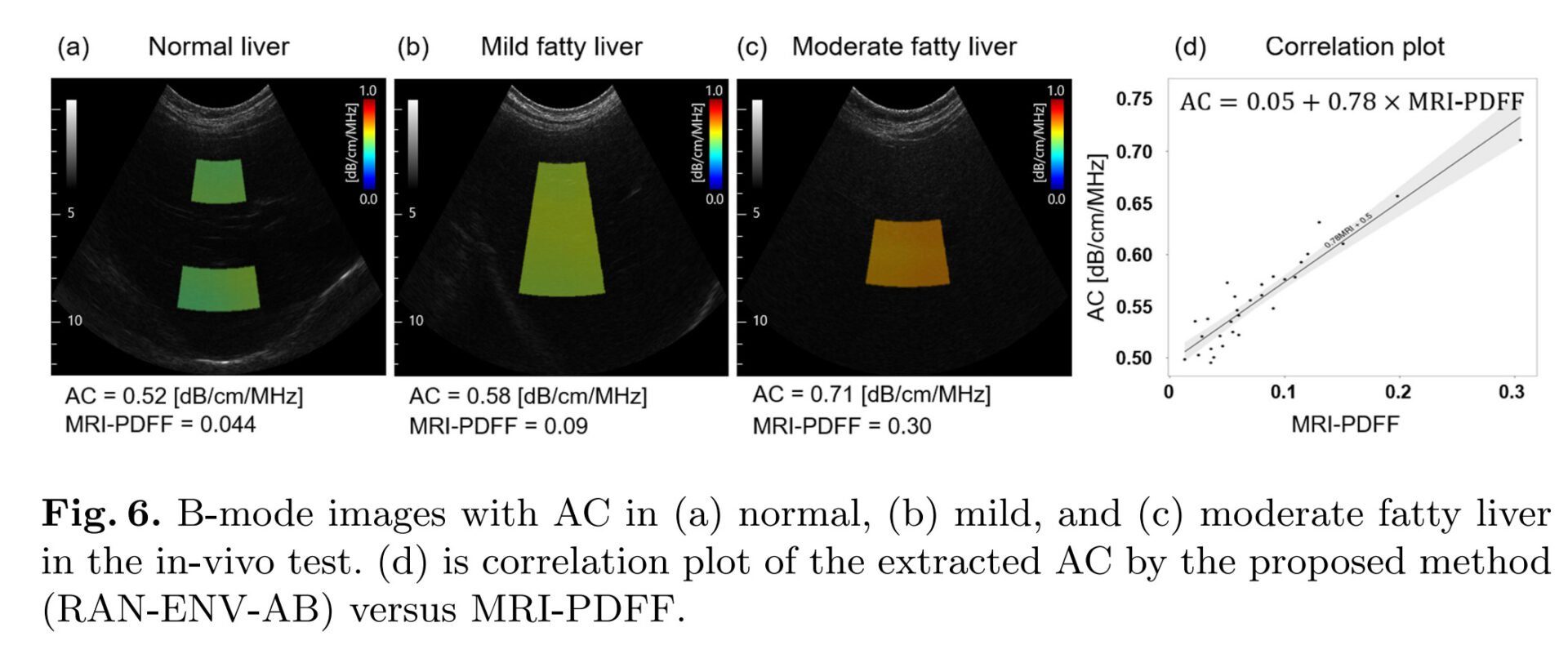

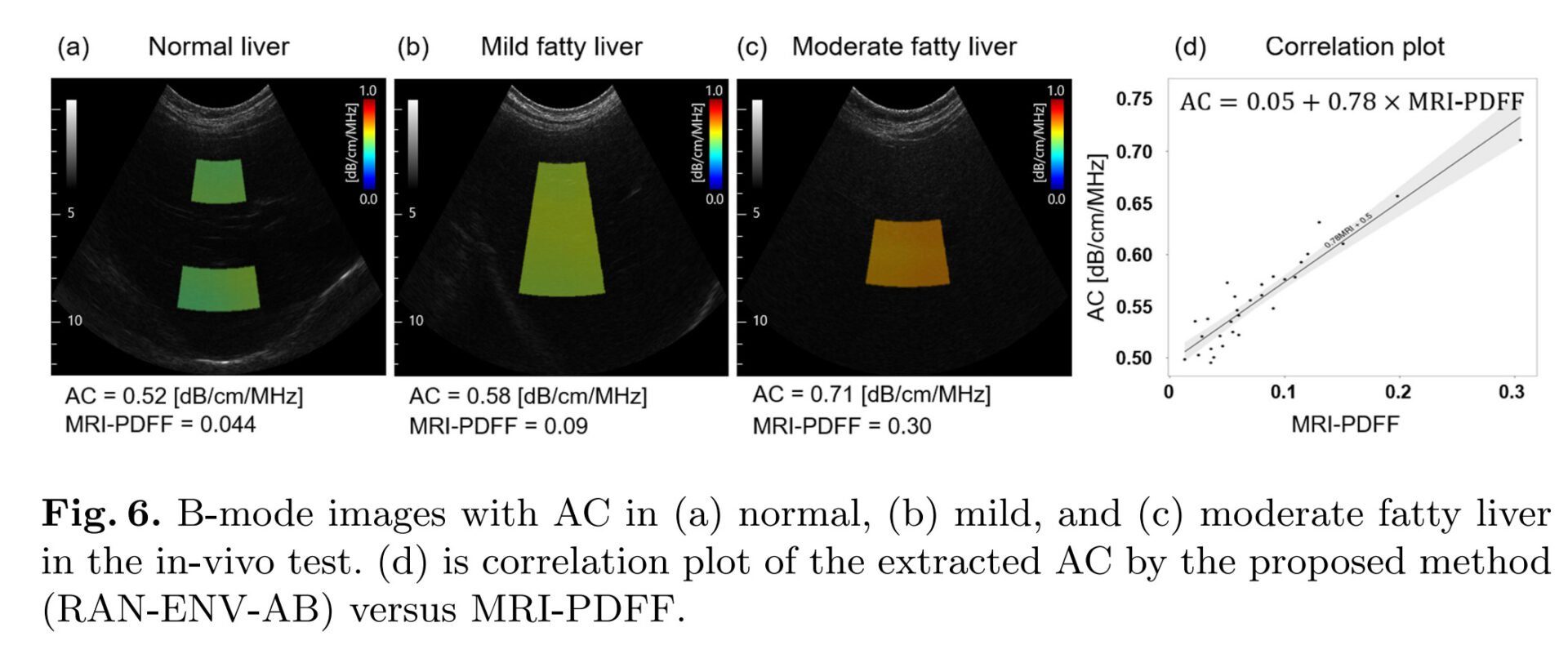

Abstract: The attenuation coefficient (AC) of tissue in medical ultrasound has great potential as a quantitative biomarker due to its high sensitivity to pathological properties. In particular, AC is emerging as a new quantitative biomarker for diagnosing and quantifying hepatic steatosis. In this paper, a learning-based technique to quantify AC from pulse-echo data obtained through a single convex probe is presented. In the proposed method, ROI adaptive transmit beam focusing (TxBF) and envelope detection schemes are employed to increase the estimation accuracy and noise resilience, respectively. In addition, the proposed network is designed to extract accurate AC of the target region considering attenuation/sound speed/scattering of the propagating waves in the vicinities of the target region. The accuracy of the proposed method is verified through simulation and phantom tests. In addition, clinical pilot studies show that the estimated liver AC values using the proposed method are correlated strongly with the fat fraction obtained from magnetic resonance imaging (R² = 0.89, p < 0.001). Such results indicate the clinical validity of the proposed learning-based AC estimation method for diagnosing hepatic steatosis.

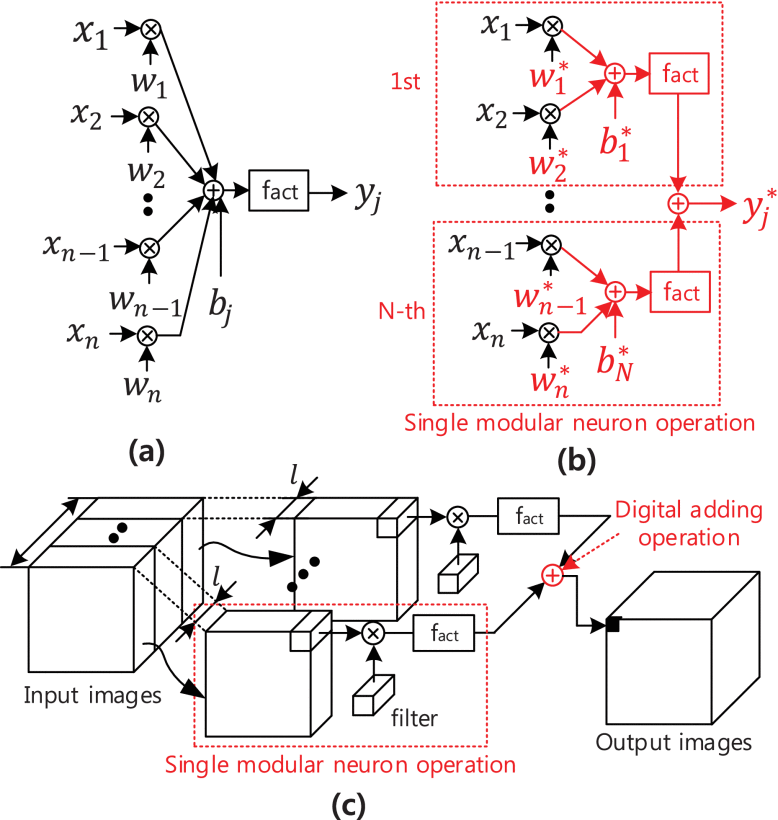

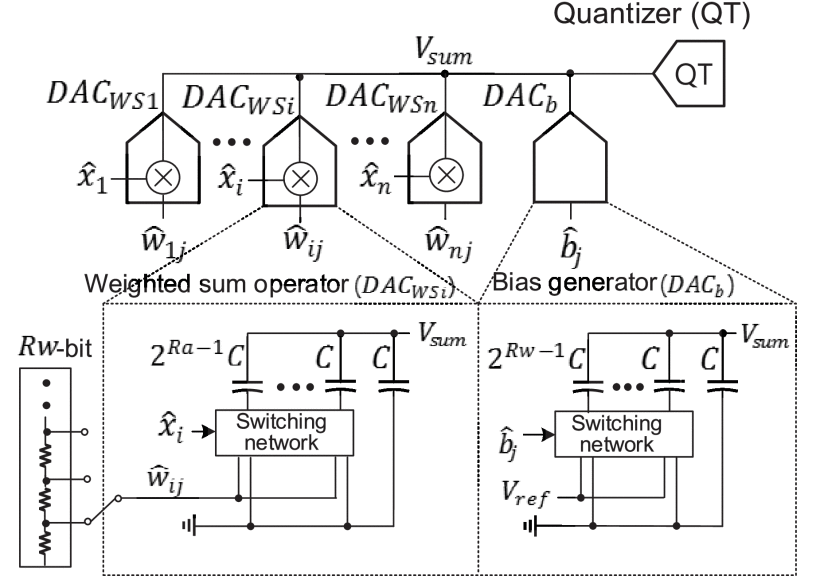

“Compact Mixed-Signal Convolutional Neural Network Using a Single Modular Neuron”, IEEE Transactions on Circuits and Systems I: Regular Papers (Volume: 67, Issue: 12, Dec. 2020)

Abstract:

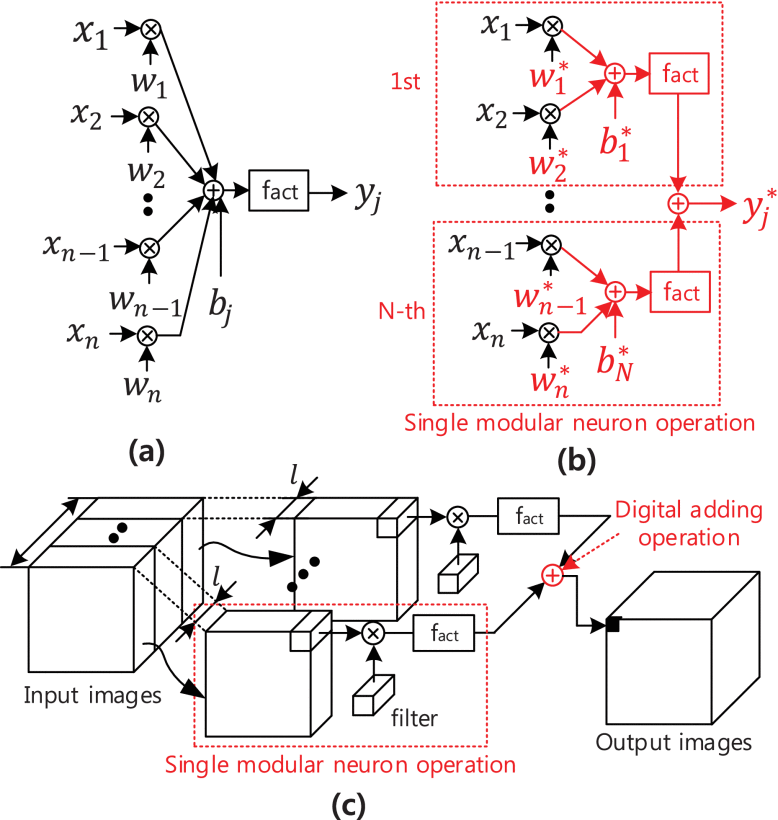

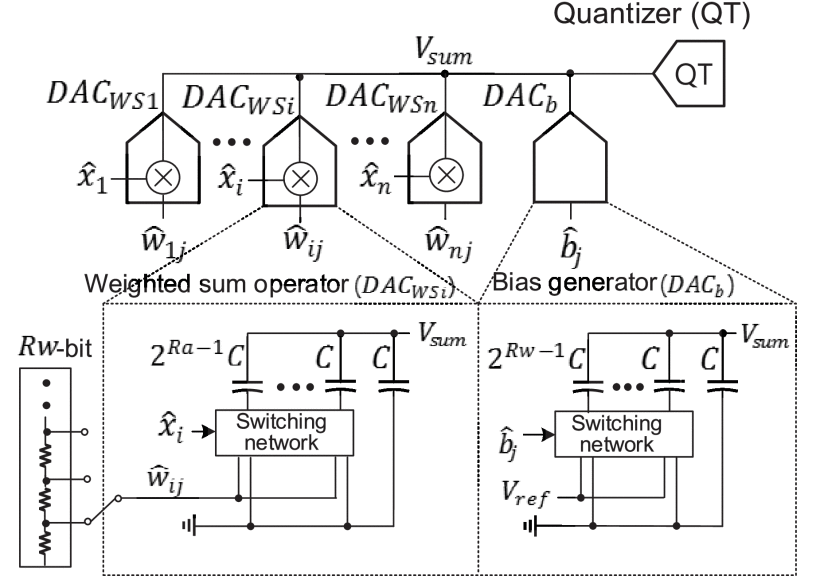

This paper demonstrates a compact mixed-signal (MS) convolutional neural network (CNN) design procedure by proposing an MS modular neuron unit that alleviates analog-circuit-related design issues such as noise. In the first step of the proposed procedure, we design a CNN in software with a minimized number of channels in each layer while meeting the target network performance, which yields low representational and computational cost. Then, the network is reconstructed and retrained with a single modular neuron that is used recursively for the entire network, maximizing hardware efficiency with a fixed number of parameters that account for signal attenuation. In the last step, the parameters of the network are quantized to a level implementable by MS neurons. We designed networks for MNIST and CIFAR-10 and achieved compact CNNs with a single MS neuron, with 97% accuracy for MNIST and 85% accuracy for CIFAR-10, whose representational and computational costs are at least two times smaller than those of prior works. The estimated energy per classification of the hardware network for CIFAR-10 with a single MS neuron, designed with optimum noise and matching requirements, is 0.5 μJ, which is five times smaller than that of its digital counterpart.

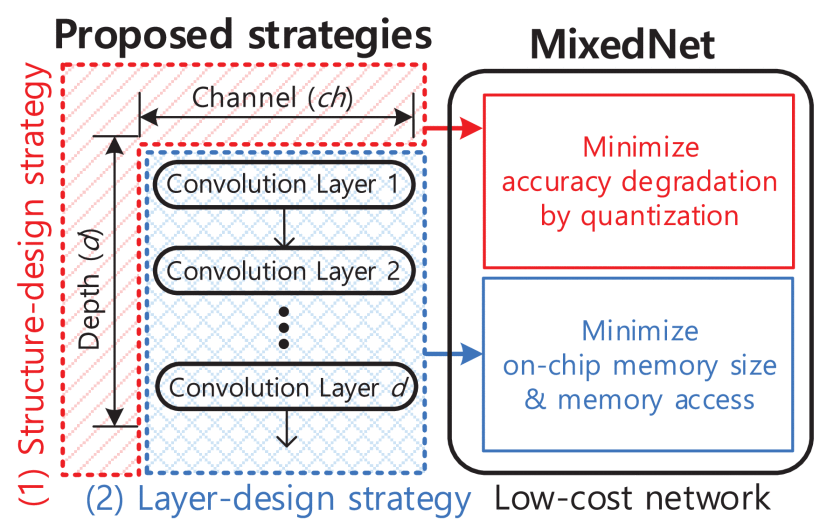

This paper proposes design strategies for a low-cost quantized neural network. To prevent quantization from degrading classification accuracy, a structure-design strategy that uses a large number of channels rather than deep layers is proposed. In addition, a squeeze-and-excitation (SE) layer is adopted to enhance the performance of the quantized network. Through quantitative analysis and simulations of the quantized key convolution layers of ResNet and MobileNets, a low-cost layer-design strategy for building a neural network is proposed. With this strategy, a low-cost network referred to as MixedNet is constructed. A 4-bit quantized MixedNet example reduces on-chip memory size by 60% and memory accesses by 53% with negligible classification accuracy degradation compared with conventional networks, while achieving classification accuracy of approximately 73% for CIFAR-100 and 93% for CIFAR-10.