Title: A Full HD 60 fps CNN Super Resolution Processor with Selective Caching based Layer Fusion for Mobile Devices

Authors: Ju-Hyoung Lee, Dong-Joo Shin, Jin-Su Lee, Jin-Mook Lee, Sang-Hoon Kang, and Hoi-Jun Yoo

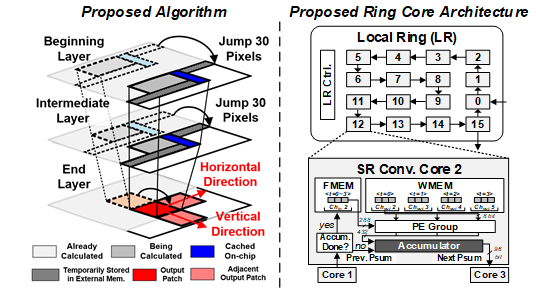

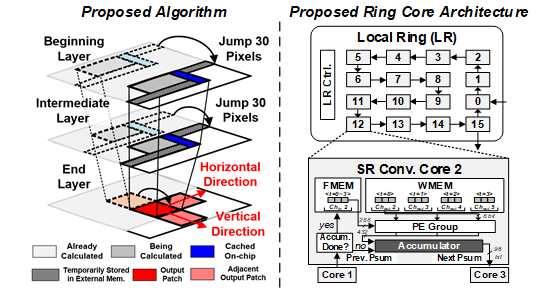

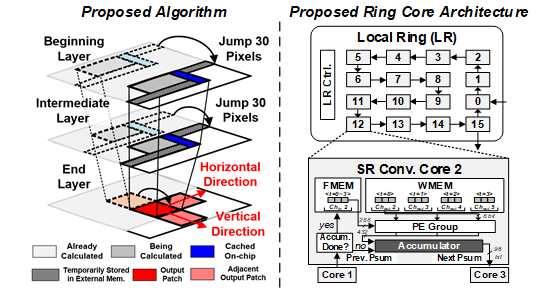

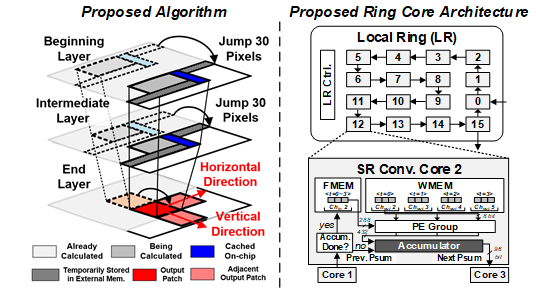

Recently, super-resolution algorithms based on convolutional neural networks (SR-CNN) have been broadly utilized to give mobile devices a better user experience (UX) through video quality enhancement or far-object recognition. However, SR-CNN’s distinct architecture makes it hard to meet the high throughput requirement on conventional hardware that targets classification CNNs. This is because the intermediate feature maps of SR do not shrink as they pass through the layers, whereas a classification CNN’s feature maps shrink due to pooling or strided convolutions. Because of the huge amount of feature-map data, SR-CNN requires more external memory access (EMA), a larger on-chip memory footprint, and a heavier computation workload than a classification CNN.
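The difference in feature-map volume can be illustrated with a rough sketch. The layer configurations and the 960×540 input below are hypothetical and are not taken from the paper; the helper `feature_map_sizes` is introduced only for this illustration.

```python
def feature_map_sizes(height, width, layers):
    """Return the number of activations produced by each conv layer.

    `layers` is a list of (out_channels, stride) pairs. All values used
    below are hypothetical and are not taken from the paper.
    """
    sizes = []
    for out_channels, stride in layers:
        # A stride of s shrinks each spatial dimension by a factor of s
        # (ceiling division, as with "same" padding).
        height = -(-height // stride)
        width = -(-width // stride)
        sizes.append(out_channels * height * width)
    return sizes


# Hypothetical 4-layer networks applied to a 960x540 input.
sr_like = [(32, 1), (32, 1), (32, 1), (32, 1)]            # resolution preserved
classifier_like = [(32, 2), (64, 2), (128, 2), (256, 2)]  # strided downsampling

sr_sizes = feature_map_sizes(540, 960, sr_like)
cls_sizes = feature_map_sizes(540, 960, classifier_like)
print("SR-like activations per layer:        ", sr_sizes)
print("classifier-like activations per layer:", cls_sizes)
```

In the SR-like case every layer produces the same number of activations, while in the classifier-like case the count falls layer by layer even though the channel count grows.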

In this work, we propose a high-throughput SR-CNN processor that minimizes EMA and on-chip memory footprint with three key features: 1) a selective caching based layer fusion (SCLF) algorithm to reduce the overall memory cost (the product of on-chip memory size and EMA), 2) a memory compaction scheme to further reduce the on-chip memory footprint, and 3) a cyclic ring core architecture to increase PE utilization for SCLF. As a result, the implemented processor achieves a throughput of 60 frames per second when generating full HD images.

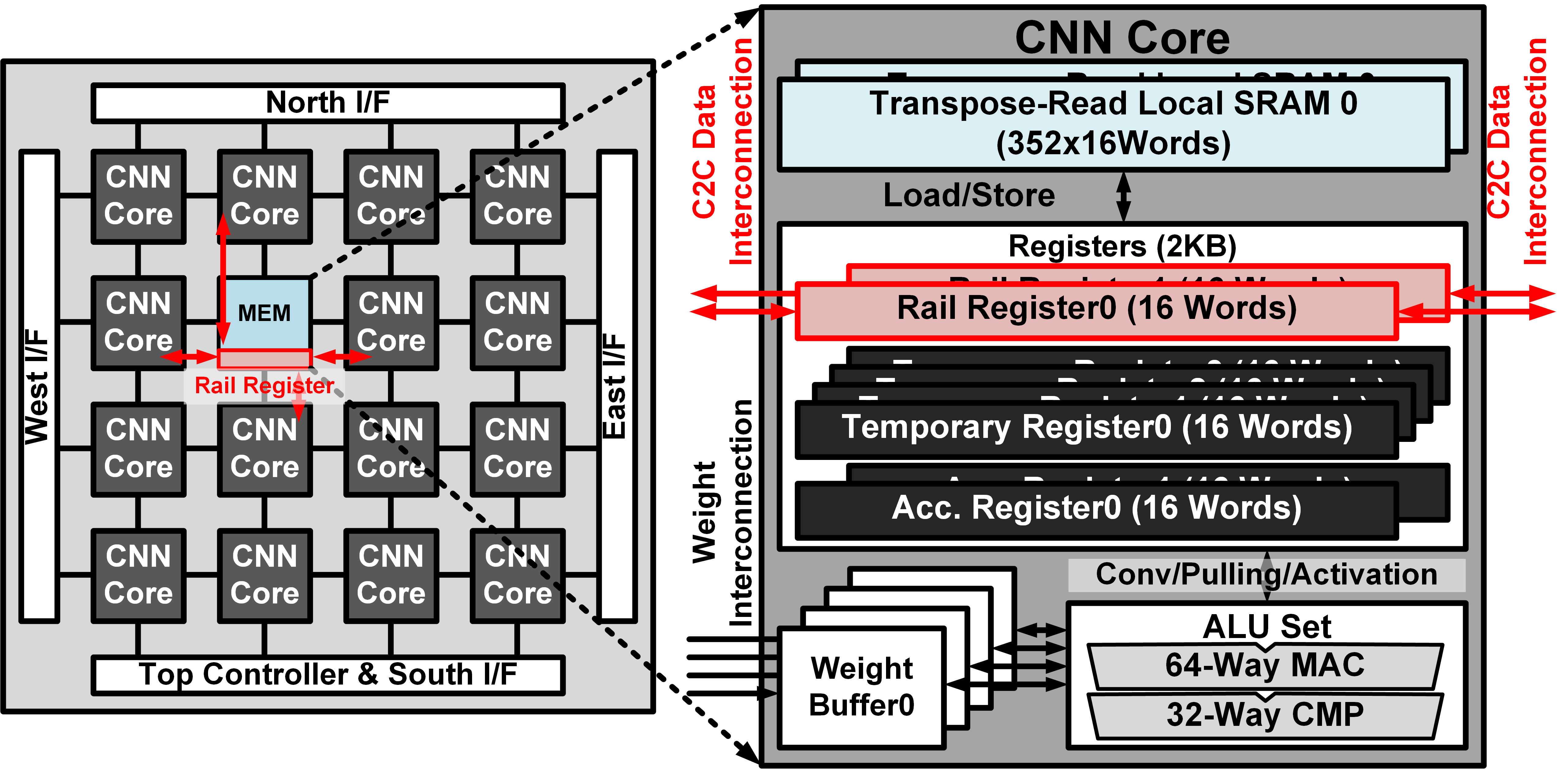

Figure 1. An illustration of the proposed SR computing algorithm & proposed ring core architecture

Title: 1.32 TOPS/W Energy Efficient Deep Neural Network Learning Processor with Direct Feedback Alignment based Heterogeneous Core Architecture

Authors: Dong-Hyeon Han, Jin-Su Lee, Jin-Mook Lee and Hoi-Jun Yoo

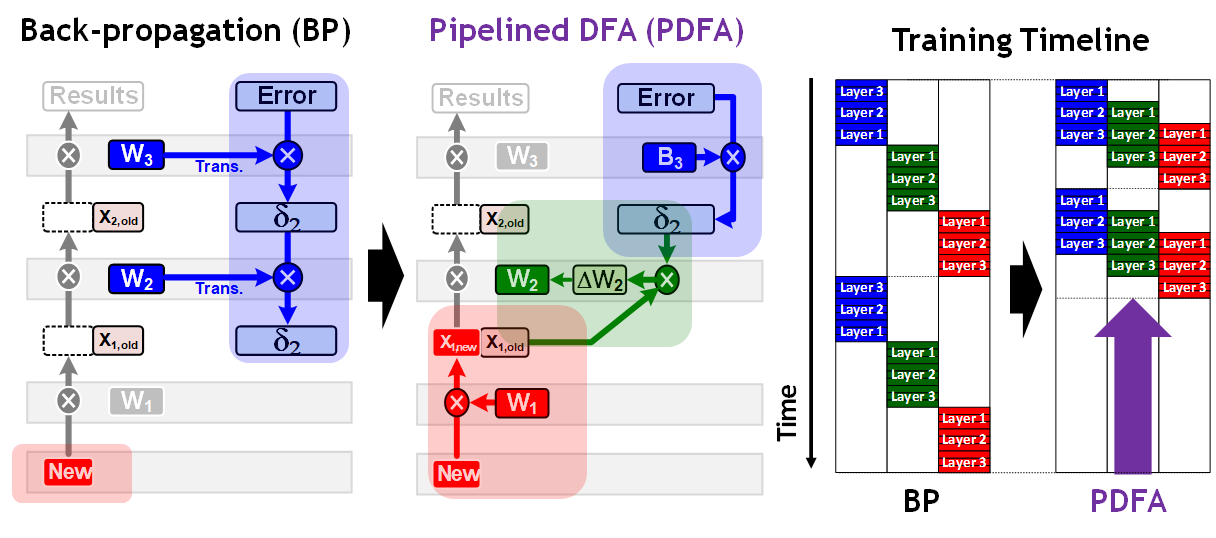

An energy efficient deep neural network (DNN) learning processor is proposed using direct feedback alignment (DFA).

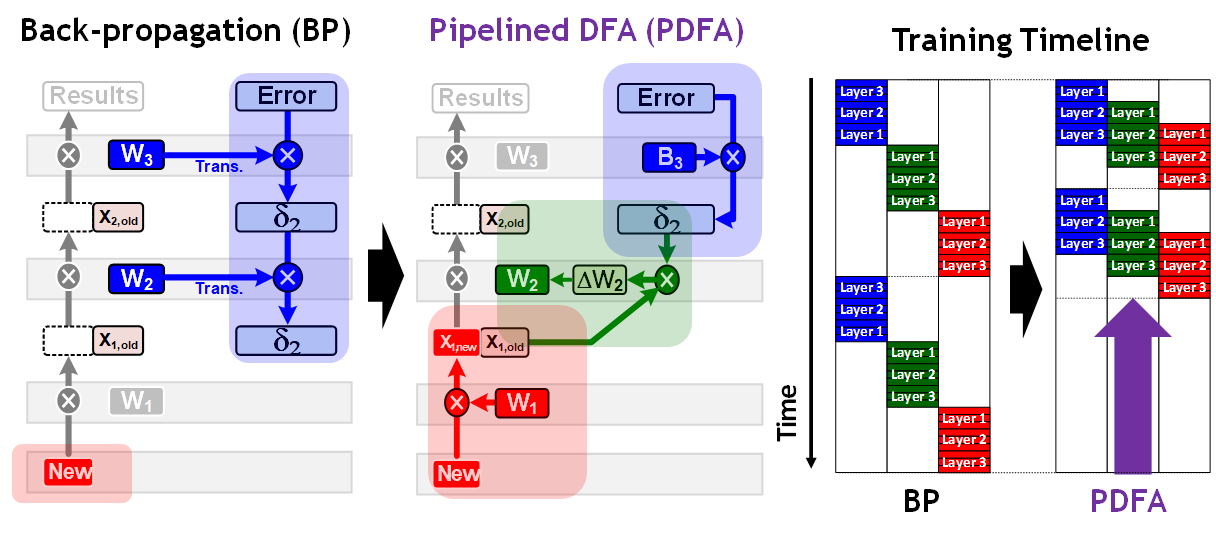

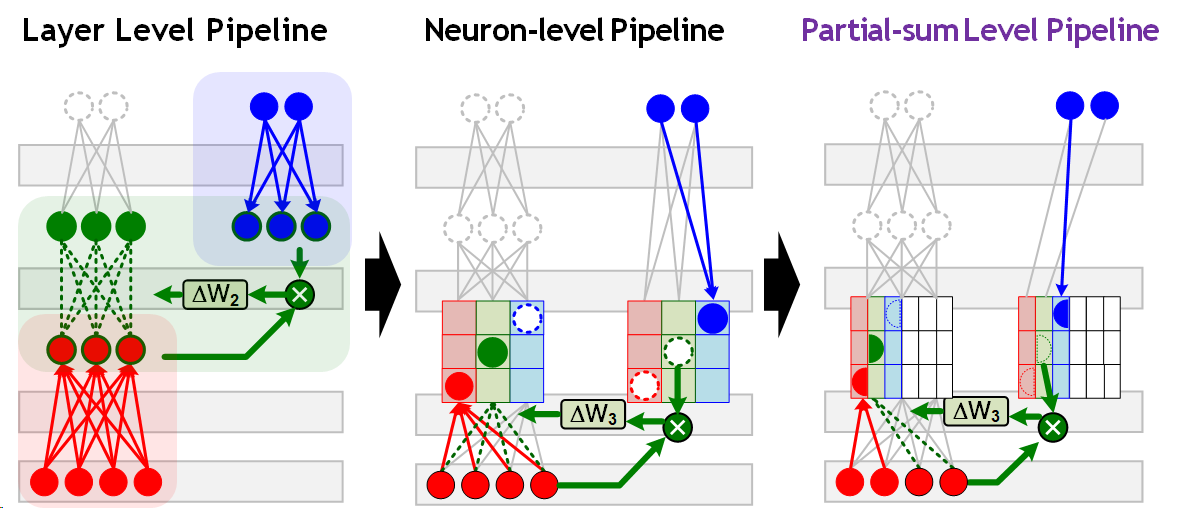

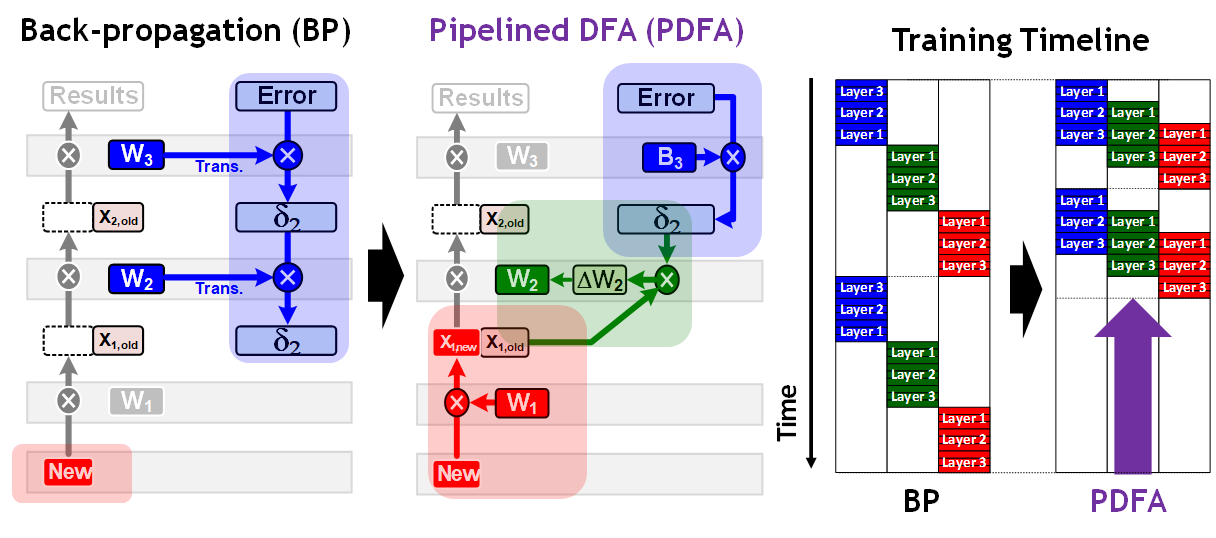

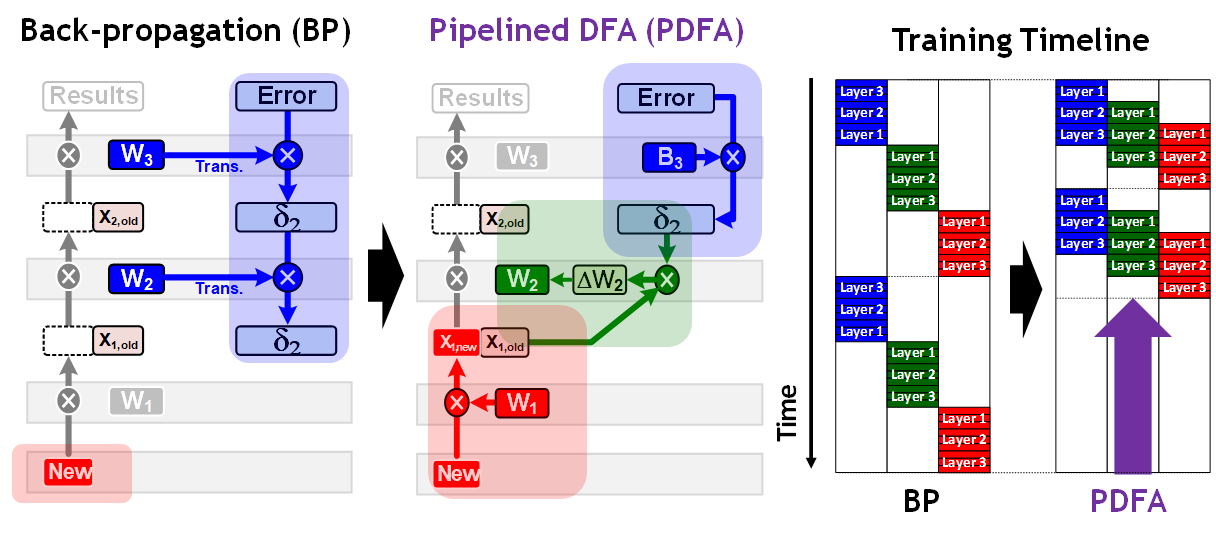

The proposed processor achieves 2.2× faster learning speed than previous learning processors by using pipelined DFA (PDFA). Since the computation direction of back-propagation (BP) is the reverse of inference, the gradient of the 1st layer cannot be generated until the errors have propagated from the last layer to the 1st layer. In contrast, the proposed processor applies DFA, which can propagate the errors directly from the last layer. This means that PDFA can propagate errors during the next inference computation, and that the weight update of the 1st layer does not need to wait for error propagation through all the layers. To enhance the energy efficiency by 38.7%, the heterogeneous learning core (LC) architecture is optimized with an 11-stage pipelined data path. It shows 2× longer data reuse than conventional BP. Furthermore, the direct error propagation core (DEPC) uses random number generators (RNGs) to remove the external memory access (EMA) caused by error propagation (EP) and improves the energy efficiency by 19.9%.
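The general idea of direct feedback alignment can be sketched in a few lines of plain Python. This is a minimal software illustration, not the processor's data path: the network sizes, learning rate, toy sample, and all function names are assumptions made for the example. Each hidden layer receives the output error through its own fixed random matrix, so no layer waits for the error to pass through the layers after it.

```python
import math
import random

random.seed(0)


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows, cols, scale=0.5):
    return [[random.uniform(-scale, scale) for _ in range(cols)]
            for _ in range(rows)]


def forward(weights, x):
    """Return activations of every layer (tanh hidden layers, linear output)."""
    acts = [x]
    for i, w in enumerate(weights):
        z = matvec(w, acts[-1])
        is_last = i == len(weights) - 1
        acts.append(z if is_last else [math.tanh(v) for v in z])
    return acts


def dfa_step(weights, feedback, x, target, lr=0.05):
    """One DFA update in place; returns the squared-error loss before the update."""
    acts = forward(weights, x)
    err = [y - t for y, t in zip(acts[-1], target)]  # output error
    for i, w in enumerate(weights):
        if i == len(weights) - 1:
            delta = err  # the output layer uses the true error
        else:
            # DFA: project the output error straight to this layer through a
            # fixed random matrix instead of chaining through the transposed
            # weights of the later layers, as BP would.
            projected = matvec(feedback[i], err)
            delta = [p * (1.0 - a * a) for p, a in zip(projected, acts[i + 1])]
        # Each layer's delta depends only on `err` and its own activations,
        # so these updates do not depend on one another.
        for r, d in enumerate(delta):
            for c, a in enumerate(acts[i]):
                w[r][c] -= lr * d * a
    return 0.5 * sum(e * e for e in err)


# Hypothetical toy setup: a 2-6-6-1 network trained on a single sample.
sizes = [2, 6, 6, 1]
weights = [rand_matrix(sizes[i + 1], sizes[i]) for i in range(3)]
feedback = [rand_matrix(sizes[i + 1], sizes[-1]) for i in range(2)]  # fixed, never trained
losses = [dfa_step(weights, feedback, [0.5, -0.3], [1.0]) for _ in range(200)]
print("first loss:", losses[0], "last loss:", losses[-1])
```

Because the feedback matrices are fixed random values, hardware could regenerate them on the fly instead of fetching them from memory, which appears to be the role the abstract assigns to the RNGs in the DEPC.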

The proposed PDFA-based learning processor is evaluated on an object tracking (OT) application, where it achieves a throughput of 34.4 frames per second (FPS) with 1.32 TOPS/W energy efficiency.

Figure 1. Back-propagation vs Pipelined DFA

Figure 2. Layer Level vs Neuron-level vs Partial-sum Level Pipeline

Figure 3. Overall Architecture of Proposed Processor

Title: LNPU: A 25.3TFLOPS/W Sparse Deep-Neural-Network Learning Processor with Fine-Grained Mixed Precision of FP8-FP16

Authors: Jin-Su Lee, Ju-Hyoung Lee, Dong-Hyeon Han, Jin-Mook Lee, Gwang-Tae Park, and Hoi-Jun Yoo

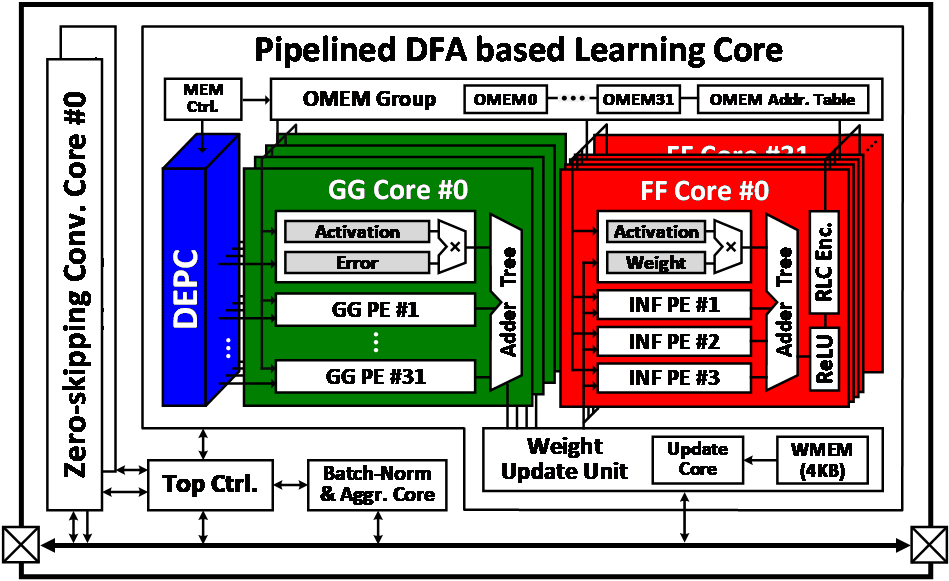

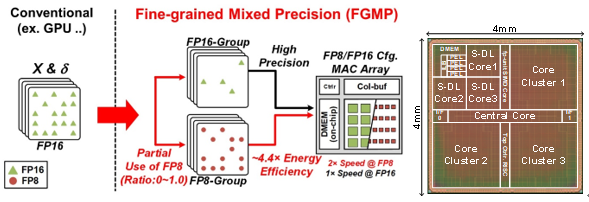

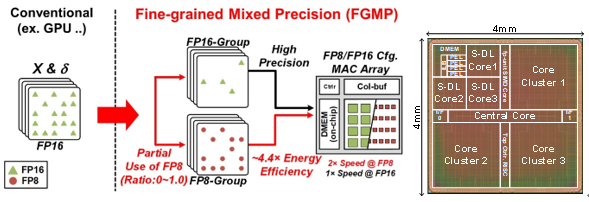

Recently, deep neural network (DNN) hardware accelerators have been reported for energy-efficient deep learning (DL) acceleration. Most previous DNN inference accelerators had their DNN parameters trained on a cloud server using public datasets and then downloaded to the device to implement AI. However, local DNN learning with domain-specific and private data is required to adapt to various users’ preferences on edge or mobile devices. Since edge and mobile devices have only limited computation capability and run on battery power, an energy-efficient DNN learning processor is necessary. In this paper, we present an energy-efficient on-chip learning accelerator. Its data precision is optimized with fine-grained mixed precision (FGMP) of FP8-FP16, which maintains the training accuracy while reducing external memory access (EMA) and enhancing throughput. In addition, sparsity is exploited with intra-channel as well as inter-channel accumulation to support the 3 DNN learning steps with higher throughput and energy efficiency. Also, an input load balancer (ILB) is integrated to improve PE utilization under the unbalanced amount of input data caused by irregular sparsity. External memory access is reduced by 38.9% and energy efficiency is improved 2.08 times for ResNet-18 training. The fabricated chip occupies 16 mm² in 65 nm CMOS, and its energy efficiency is 3.48 TFLOPS/W (FP8) at 0.0% sparsity and 25.3 TFLOPS/W (FP8) at 90% sparsity.
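The benefit of exploiting sparsity can be pictured with a minimal zero-skipping dot product. This is only a software sketch of the general idea, not the chip's accumulation scheme or load balancer; the function name `sparse_dot` and the sample data are hypothetical.

```python
def sparse_dot(inputs, weights):
    """Dot product that skips zero inputs.

    Returns (result, number of multiply-accumulates actually performed).
    """
    total = 0.0
    macs = 0
    for x, w in zip(inputs, weights):
        if x == 0.0:
            continue  # a zero input contributes nothing, so no MAC is issued
        total += x * w
        macs += 1
    return total, macs


# Hypothetical input vector with 90% zeros.
inputs = [0.0, 0.0, 0.0, 1.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
weights = [0.2, -0.1, 0.4, 2.0, 0.3, -0.7, 0.9, 0.5, -0.2, 0.1]
result, macs = sparse_dot(inputs, weights)
print(result, macs, "of", len(inputs), "MACs performed")
```

For reference, the reported figures correspond to a ratio of 25.3 / 3.48 ≈ 7.3× between 90% and 0% sparsity.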

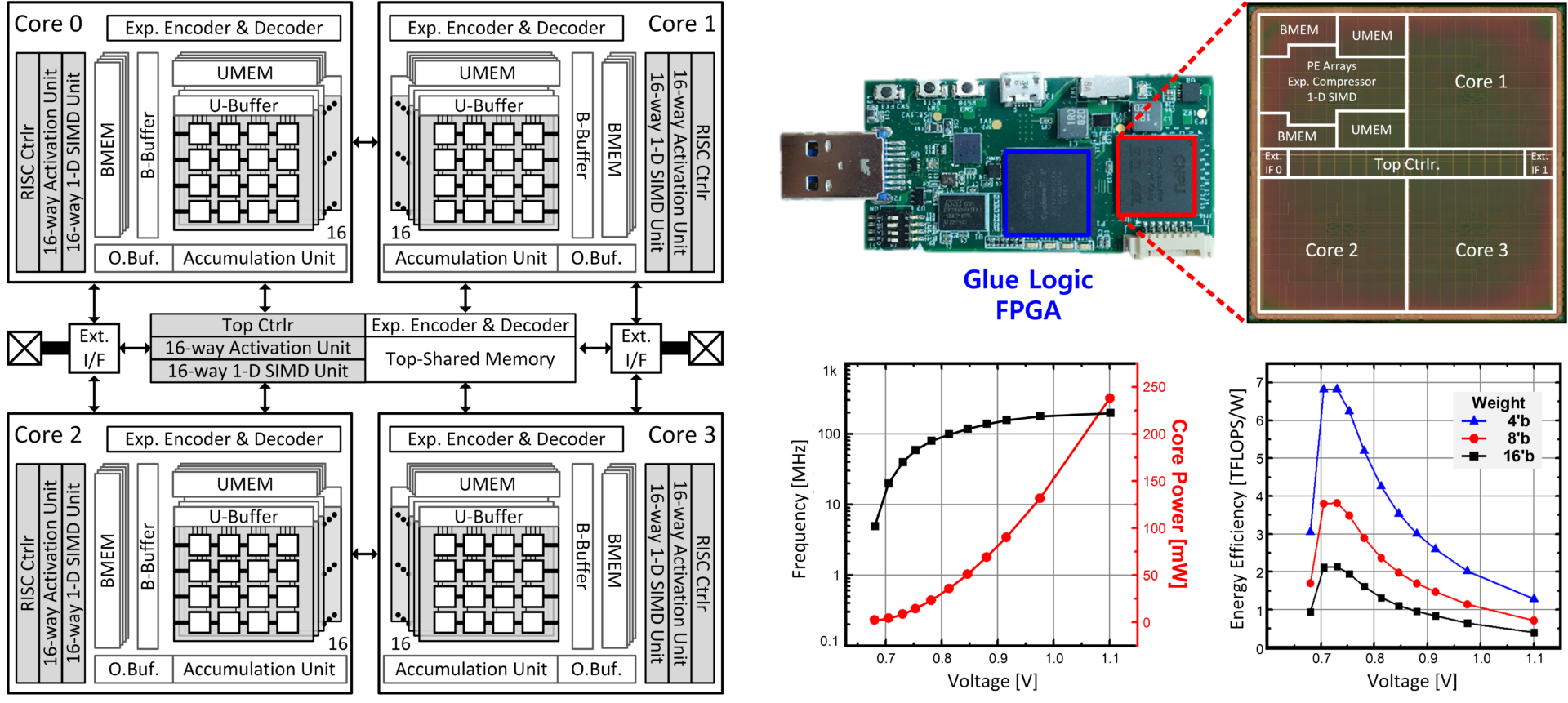

Title: A 2.1TFLOPS/W Mobile Deep RL Accelerator with Transposable PE Array and Experience Compression

Authors: Chang-Hyeon Kim, Sang-Hoon Kang, Dong-Joo Shin, Sung-Pill Choi, Young-Woo Kim and Hoi-Jun Yoo

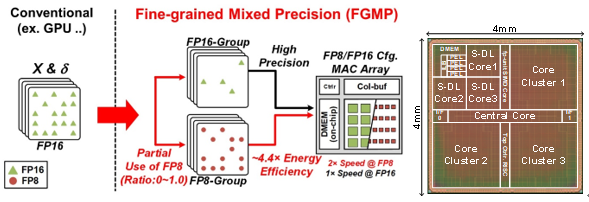

Recently, deep neural networks (DNNs) have been actively used for action control so that an autonomous system, such as a robot, can perform human-like behaviors and operations. Unlike recognition tasks, action control requires real-time operation, and remote learning on a server reached over a network is too slow. Learning techniques such as reinforcement learning (RL) are needed to determine and select the correct robot behavior locally. In this paper, we propose a deep RL (DRL) accelerator with a transposable PE array and an experience compressor to realize real-time DRL operation of autonomous agents in dynamic environments. It supports on-chip data compression and decompression, with which ~10,000 DRL experiences can be compressed by 65%. It also enables adaptive data reuse for inference and training, which reduces power and peak memory bandwidth by 31% and 41%, respectively. The proposed DRL accelerator is fabricated in 65 nm CMOS technology and occupies a 4×4 mm² die area. It is the first fully trainable DRL processor, and it achieves 2.16 TFLOPS/W energy efficiency at 0.73 V with 16b weights at 50 MHz.
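One way to picture why a transposable array helps is that inference needs y = Wx while back-propagation needs Wᵀδ over the same weights. The sketch below keeps a single copy of W and reads it row-wise or column-wise. It is an assumption-laden software analogy, not the tPE array's actual design; the class name `TransposableWeights` is hypothetical.

```python
class TransposableWeights:
    """Single stored copy of W that serves both W @ x and W^T @ delta."""

    def __init__(self, rows):
        self.w = [list(r) for r in rows]  # stored once, never transposed

    def forward(self, x):
        # Inference direction: y = W x, reading the storage row by row.
        return [sum(wij * xj for wij, xj in zip(row, x)) for row in self.w]

    def backward(self, delta):
        # Training direction: g = W^T delta, reading the same storage
        # column by column instead of keeping a transposed copy.
        n_rows, n_cols = len(self.w), len(self.w[0])
        return [sum(self.w[i][j] * delta[i] for i in range(n_rows))
                for j in range(n_cols)]


layer = TransposableWeights([[1, 2, 3], [4, 5, 6]])
print(layer.forward([1, 0, -1]))   # uses W
print(layer.backward([1, 1]))      # uses W^T without a second copy
```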

Fig. 1. DRL Accelerator with tPE Array, Implementation & Measurement Results

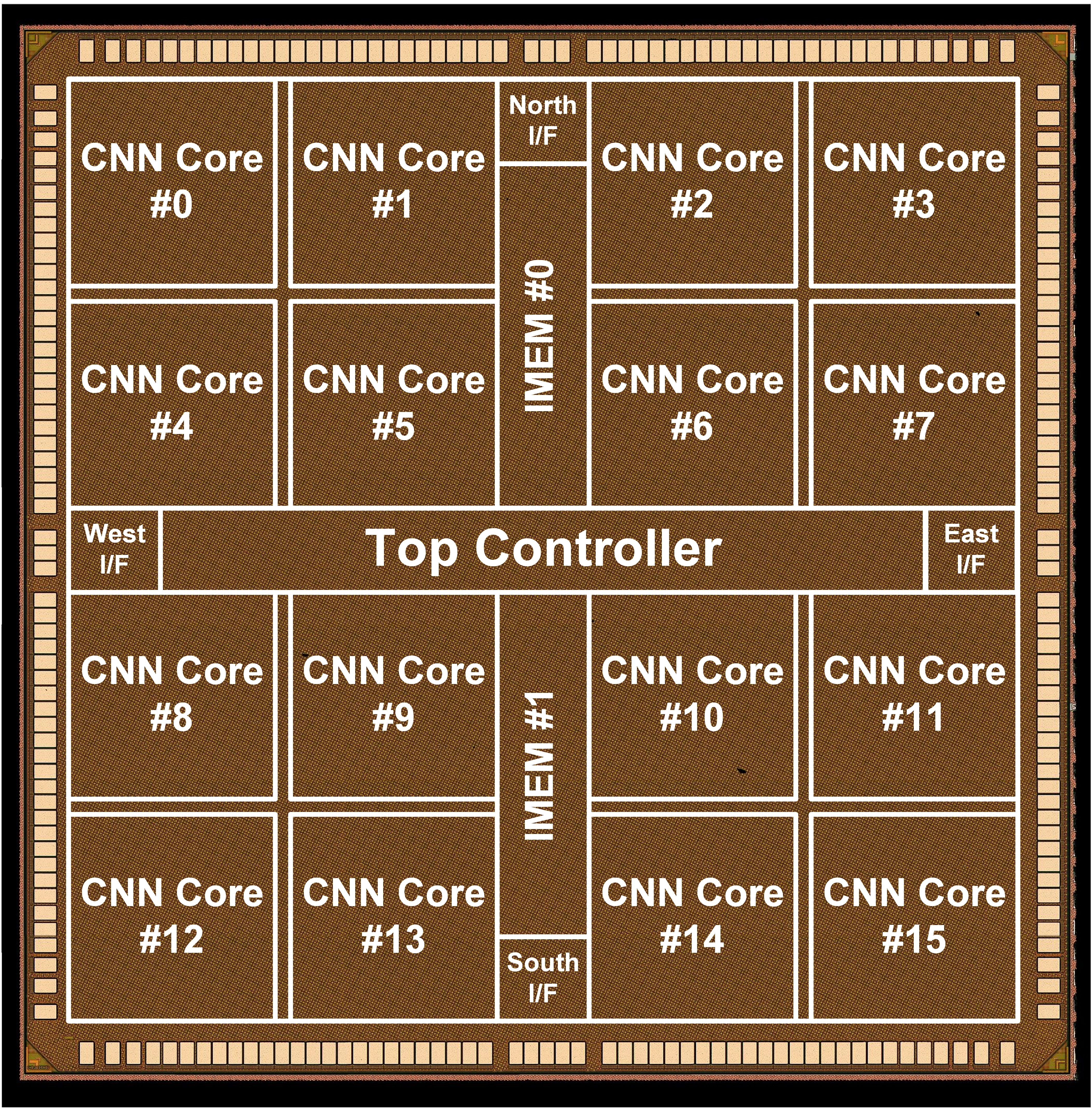

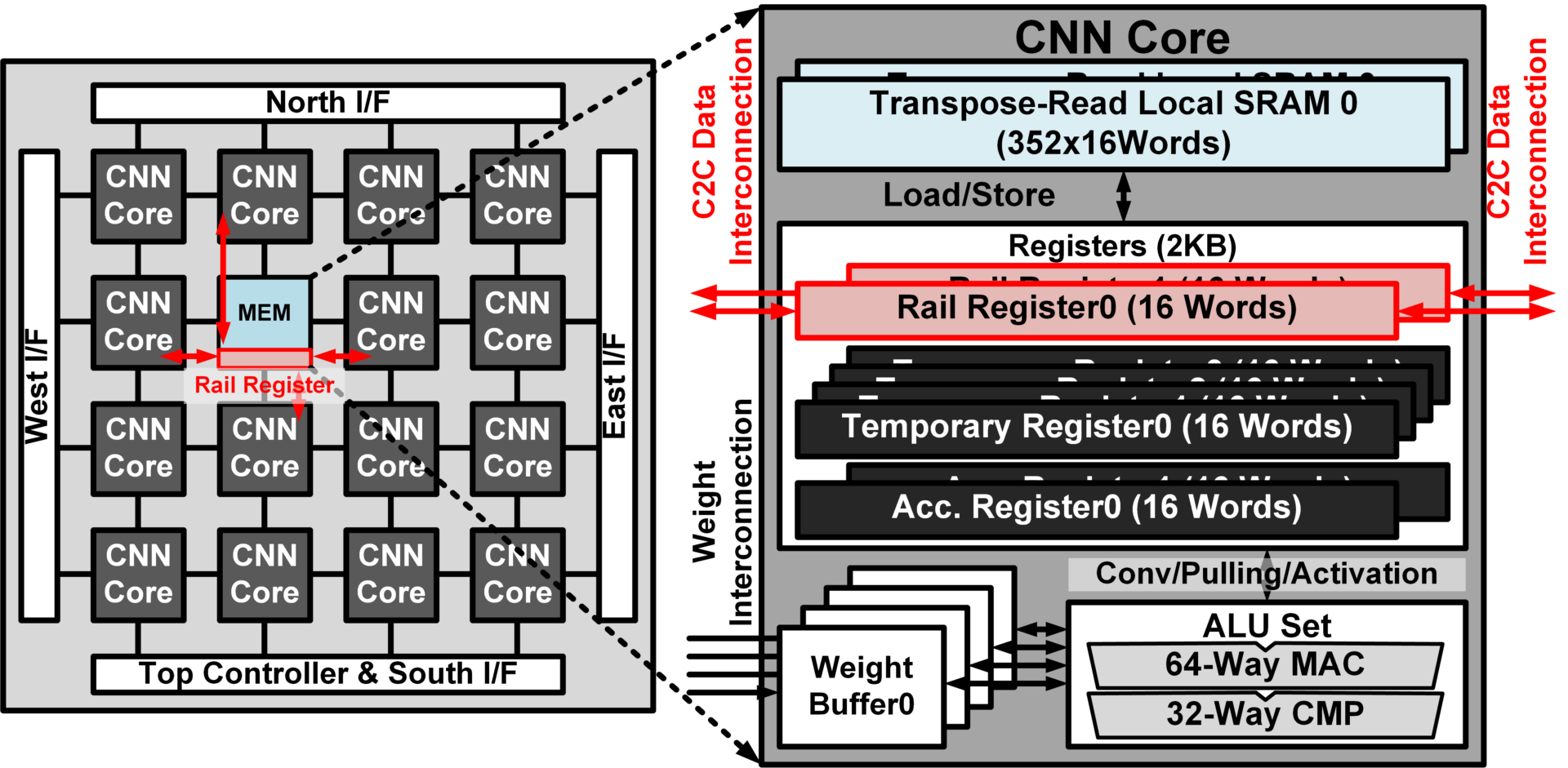

Title: CNNP-v2: An Energy Efficient Memory-Centric Convolutional Neural Network Processor Architecture

Authors: Sung-Pill Choi, Kyeong-Ryeol Bong, Dong-Hyeon Han, and Hoi-Jun Yoo

An energy-efficient memory-centric convolutional neural network (CNN) processor architecture is proposed for smart devices such as wearable or Internet of Things (IoT) devices. To achieve energy-efficient processing, it has 2 key features. First, 1-D shift convolution PEs with a fully distributed memory architecture achieve 3.1 TOPS/W energy efficiency. Compared with conventional architectures, even though it has 1024 massively parallel MAC units, it achieves high energy efficiency by scaling the voltage down to 0.46 V thanks to its fully locally routed design. Second, a fully configurable 2-D mesh core-to-core interconnection supports input features of various sizes to maximize utilization. The proposed architecture is evaluated on a 16 mm² chip fabricated in a 65 nm CMOS process, and it performs real-time face recognition with only 9.4 mW at 10 MHz and 0.48 V.

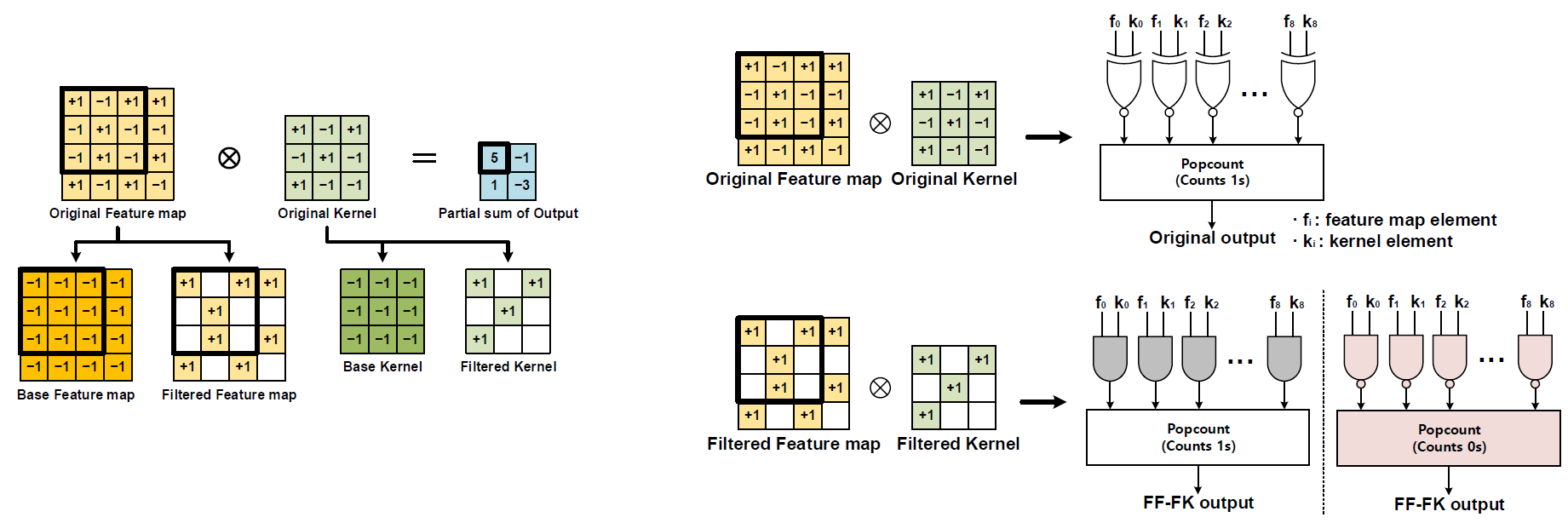

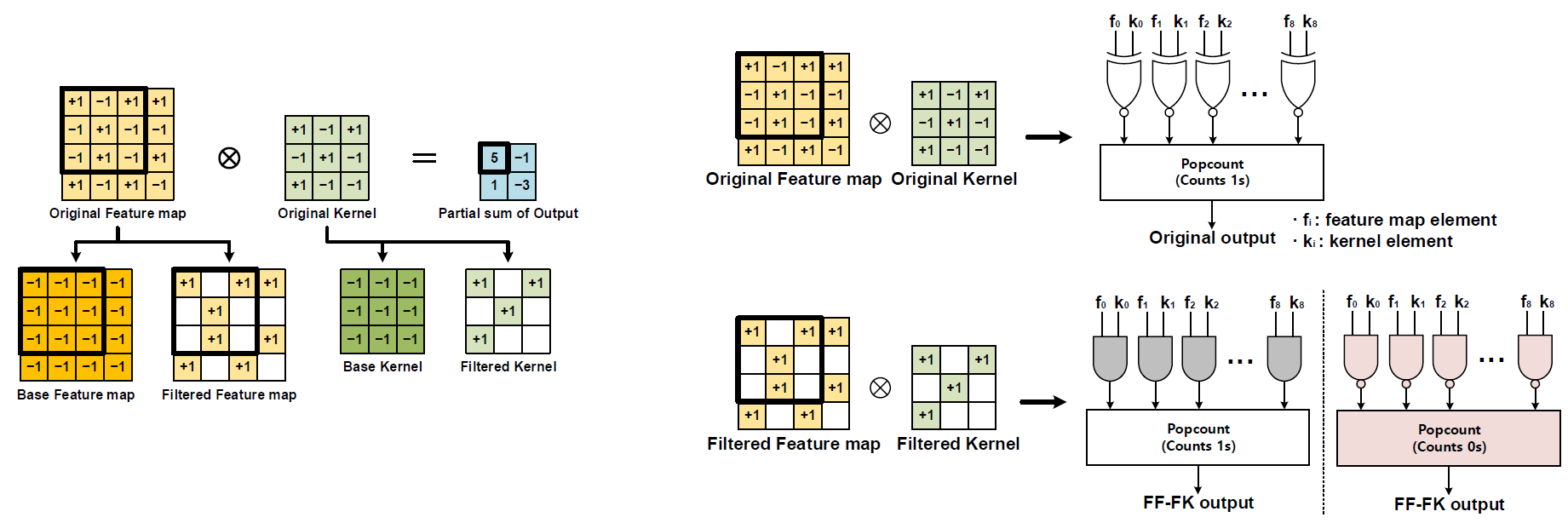

Title: NAND-Net: Minimizing Computational Complexity of In-Memory Processing for Binary Neural Networks

Authors: Hyeon-Uk Kim, Jae-Hyeong Sim, Yeong-Jae Choi, Lee-Sup Kim

Popular deep learning technologies suffer from memory bottlenecks, which significantly degrade energy efficiency, especially in mobile environments. In-memory processing for binary neural networks (BNNs) has emerged as a promising solution to mitigate such bottlenecks, and various relevant works have been presented accordingly. However, their performance is severely limited by the overheads induced by modifying conventional memory architectures. To alleviate this performance degradation, we propose NAND-Net, an efficient architecture that minimizes the computational complexity of in-memory processing for BNNs. Based on the observation that BNNs contain many redundancies, we decomposed each convolution into sub-convolutions and eliminated the unnecessary operations. In the remaining convolution, each binary multiplication (bitwise XNOR) is replaced by a bitwise NAND operation, which can be implemented without any bit cell modifications. This NAND operation further brings an opportunity to simplify the subsequent binary accumulations (popcounts). We reduced the operation cost of those popcounts by exploiting the data patterns of the NAND outputs. Compared to the prior state-of-the-art designs, NAND-Net achieves 1.04-2.4x speedup and 34-59% energy saving, making it a suitable solution for efficient in-memory processing for BNNs.
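That a NAND can stand in for XNOR in a binary dot product can be checked with a short sketch. It uses a generic algebraic identity, not NAND-Net's sub-convolution decomposition, which the abstract does not spell out. With +1/-1 values encoded as bits 1/0 in n-bit words a and b, the dot product equals 2·popcount(XNOR(a, b)) − n, which can be rewritten as 5n − 2·popcount(a) − 2·popcount(b) − 4·popcount(NAND(a, b)). The function names are hypothetical.

```python
def popcount(x):
    """Number of set bits in a non-negative integer."""
    return bin(x).count("1")


def dot_xnor(a, b, n):
    """Binary dot product of two n-bit words (+1 -> 1, -1 -> 0) via XNOR."""
    mask = (1 << n) - 1
    return 2 * popcount(~(a ^ b) & mask) - n


def dot_nand(a, b, n):
    """Same dot product computed from a bitwise NAND plus per-operand popcounts.

    popcount(a) and popcount(b) depend on one operand each, so they could be
    computed once and reused across many dot products.
    """
    mask = (1 << n) - 1
    nand = ~(a & b) & mask
    return 5 * n - 2 * popcount(a) - 2 * popcount(b) - 4 * popcount(nand)


a, b, n = 0b1011, 0b0110, 4
print(dot_xnor(a, b, n), dot_nand(a, b, n))
```

Both functions assume that a and b fit in n bits.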

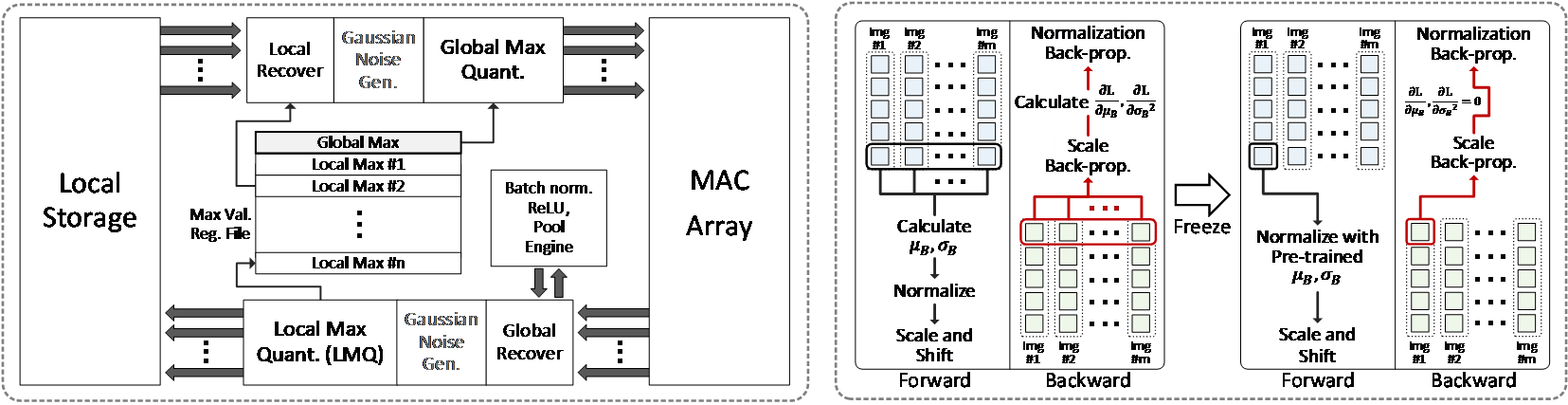

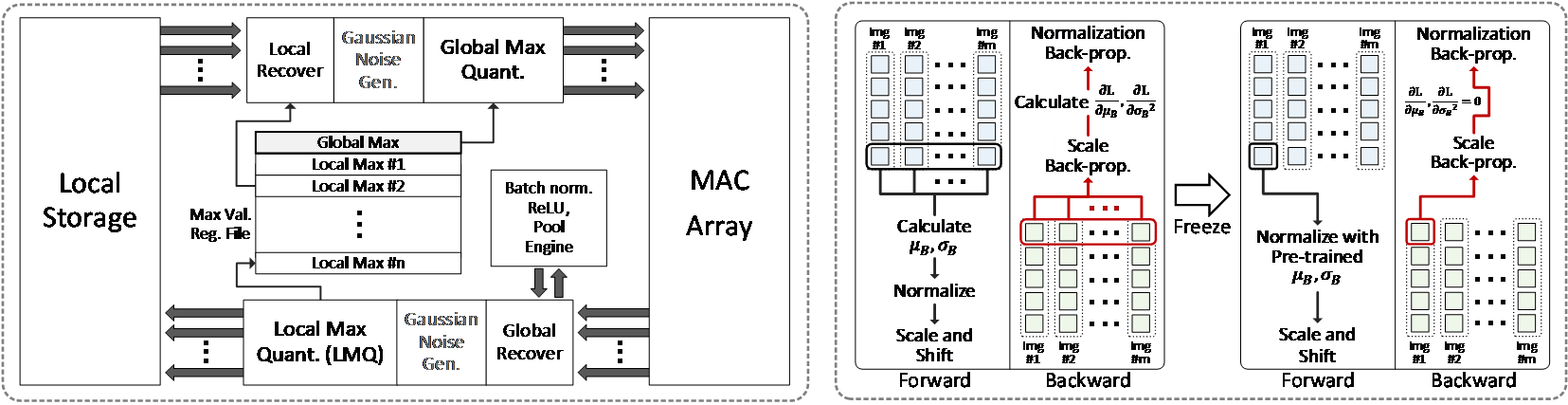

Title: An Optimized Design Technique of Low-bit Neural Network Training for Personalization on IoT Devices

Authors: Seung-Kyu Choi, Jae-Kang Shin, Yeong-Jae Choi, and Lee-Sup Kim

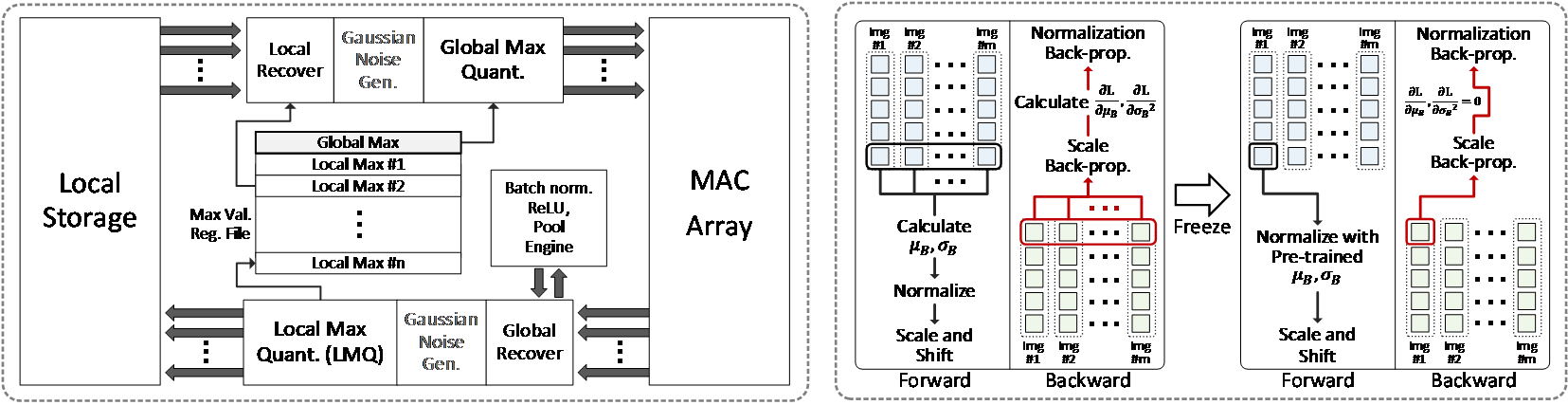

Personalization by incremental learning has become essential for IoT devices to enhance the performance of deep learning models trained with global datasets. To avoid massive transmission traffic in the network, on-device learning is necessary. We propose a software/hardware co-design technique that builds an energy-efficient low-bit trainable system: (1) software optimizations by local low-bit quantization and computation freezing to minimize the on-chip storage requirement and computational complexity, and (2) hardware design of a bit-flexible multiply-and-accumulate (MAC) array that shares the same resources in inference and training. Our scheme reduces on-chip buffer storage by 99.2% and achieves 12.8x higher peak energy efficiency compared to previous trainable accelerators.

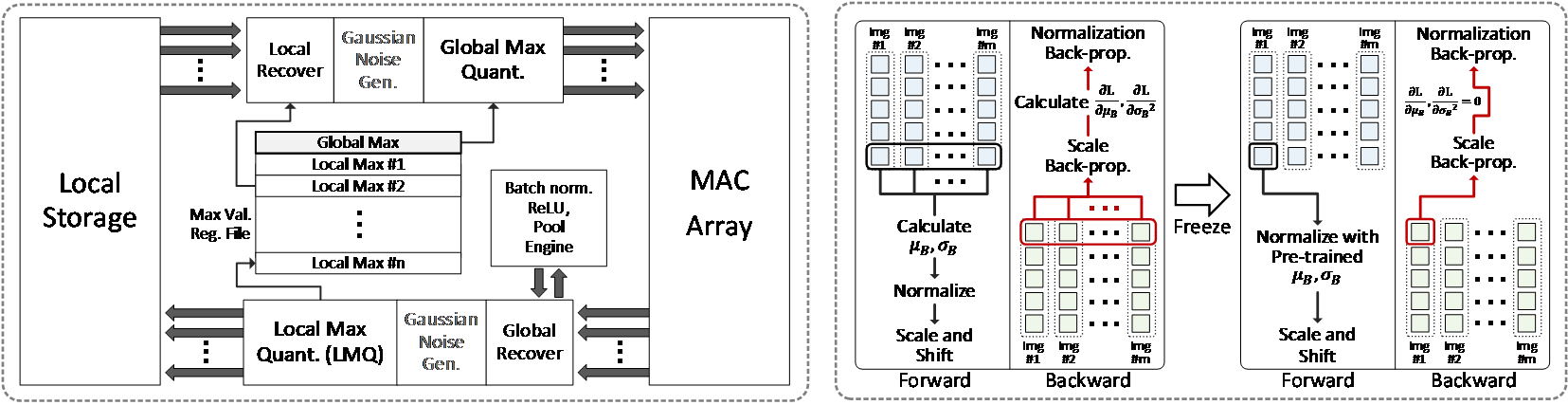

Figure 1. Optimizations to operate multiply-and-accumulate for CNN training in fixed-point based MAC units

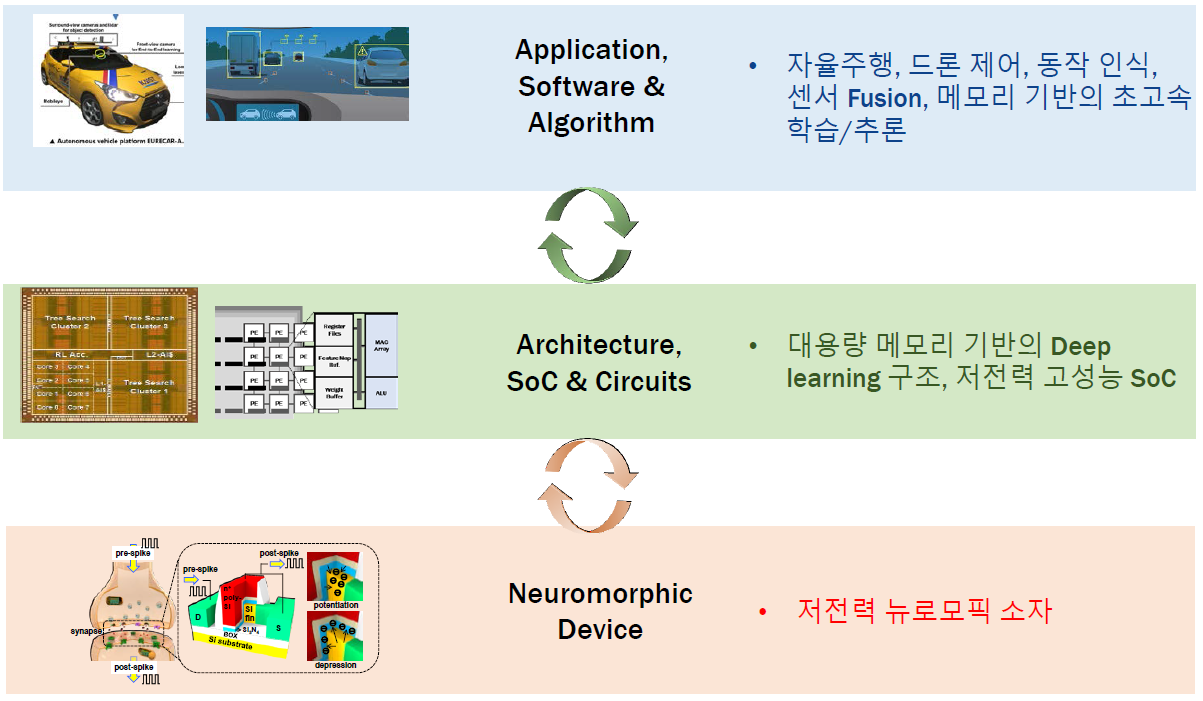

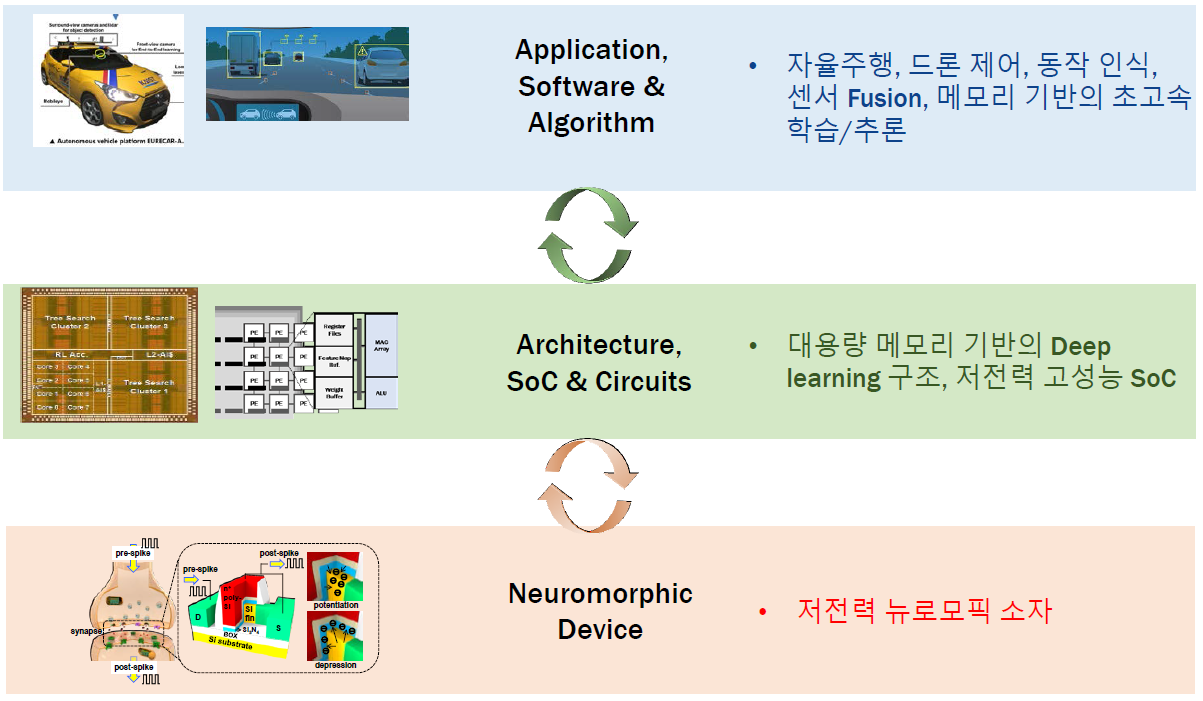

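SK hynix and KAIST held a signing ceremony for the "Next-Generation AI Semiconductor System Research Center" on November 30, 2018, at the KAIST main campus in Daejeon (Center Director: Prof. Seong-Hwan Cho (조성환)).

The Next-Generation AI Semiconductor System Research Center aims to develop AI semiconductor systems in preparation for the Fourth Industrial Revolution. Specialists from SK hynix and from 10 KAIST laboratories in the fields of sensors, algorithms, machine learning SoCs, and devices plan to participate jointly.

At the opening ceremony, SK hynix and KAIST shared detailed research plans centered on developing new semiconductor devices optimized for AI, developing machine learning accelerator chips, and developing application technologies for autonomous driving of vehicles, drones, and the like. Through joint operation of the center, they also plan to build a cooperative framework based on the mutual exchange and training of research personnel.

Through this agreement, SK hynix, building on its solid position in the memory field, has gained an opportunity to work with KAIST to lead the development of core non-memory technologies for the Fourth Industrial Revolution and to open up new markets.

The establishment of this center is expected to benefit both academia and industry in Korea in terms of systematic technology development and the training of specialists in the AI field.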