Professor Euijong Whang's Research Team Develops a Fair Active Learning Technique

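Professor Euijong Whang's research team in the School of Electrical Engineering has developed a fair active learning technique based on multi-armed bandits (MAB). The work was a collaboration among Ki Hyun Tae (Ph.D. student, first author), Jaeyoung Park (Ph.D. student), and, from Georgia Tech's computer science department, Professor Kexin Rong and Ph.D. student Hantian Zhang.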

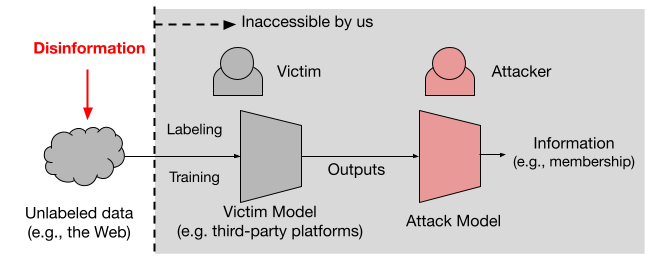

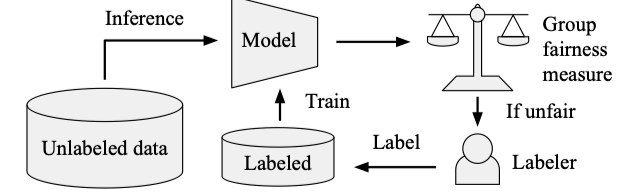

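As artificial intelligence becomes widespread and is applied to hiring and healthcare systems, AI fairness is becoming as important as high model accuracy. Observing that a main cause of unfairness is the biased data used to train AI models, the team set out to mitigate model unfairness through data labeling in settings where unlabeled data is plentiful.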

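Because data labeling is generally expensive, many active learning techniques have been proposed to prioritize the most useful data. Existing techniques, however, focus only on maximizing model accuracy, and techniques that also account for fairness remain understudied. The team's technique, FALCON, is designed to improve fairness metrics as well as accuracy.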

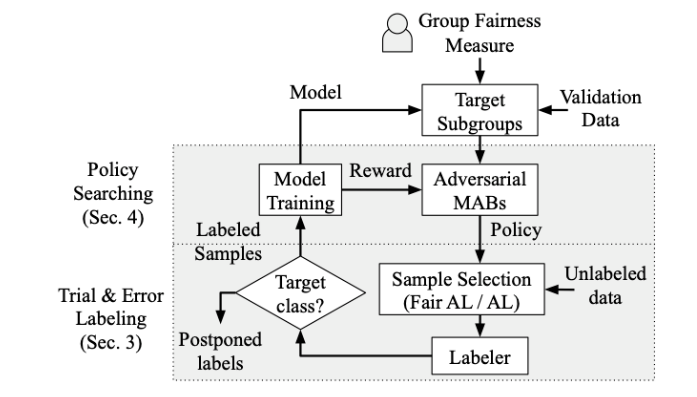

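FALCON improves fairness using two main techniques. First, it uses a trial-and-error strategy: data that could worsen the model's fairness is not used for training and is postponed. Second, it uses an adversarial MAB to select the data best suited to improving the model's fairness. The results will be presented at VLDB 2024, one of the most prestigious conferences in the database field.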
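The two ideas described here, postponing unhelpful data and choosing among options with an adversarial bandit, can be illustrated with a minimal sketch. This is not FALCON's implementation. It uses EXP3, a standard adversarial MAB algorithm, as a stand-in, since the article does not say which bandit algorithm is used. The names `Exp3`, `label_with_postponement`, `oracle`, and `hurts_fairness` are hypothetical, and the reward is assumed to be a fairness improvement scaled to the range [0, 1].

```python
import math
import random


class Exp3:
    """EXP3, a standard adversarial multi-armed bandit (illustrative stand-in)."""

    def __init__(self, n_arms, gamma=0.1, rng=None):
        self.n_arms = n_arms
        self.gamma = gamma                 # exploration rate in (0, 1]
        self.weights = [1.0] * n_arms
        self.rng = rng or random.Random(0)

    def probabilities(self):
        # Mix the weight-proportional distribution with uniform exploration.
        total = sum(self.weights)
        return [(1 - self.gamma) * w / total + self.gamma / self.n_arms
                for w in self.weights]

    def select(self):
        probs = self.probabilities()
        return self.rng.choices(range(self.n_arms), weights=probs, k=1)[0]

    def update(self, arm, reward):
        # reward is assumed to lie in [0, 1]; it is importance-weighted by the
        # probability with which the arm was played.
        p = self.probabilities()[arm]
        self.weights[arm] *= math.exp(self.gamma * (reward / p) / self.n_arms)


def label_with_postponement(candidates, oracle, hurts_fairness):
    """Trial-and-error step: label each candidate, then postpone the ones that
    the (hypothetical) hurts_fairness predicate flags instead of training on them."""
    accepted, postponed = [], []
    for x in candidates:
        y = oracle(x)                      # ask the labeler for the true label
        target = postponed if hurts_fairness(x, y) else accepted
        target.append((x, y))
    return accepted, postponed


if __name__ == "__main__":
    # Hypothetical setup: three candidate selection policies act as arms, and
    # only arm 2 yields a fairness gain.
    bandit = Exp3(n_arms=3)
    for _ in range(200):
        arm = bandit.select()
        fairness_gain = 1.0 if arm == 2 else 0.0
        bandit.update(arm, fairness_gain)
    print(bandit.probabilities())

    accepted, postponed = label_with_postponement(
        [1, 2, 3, 4], oracle=lambda x: x % 2, hurts_fairness=lambda x, y: y == 0)
    print(accepted, postponed)
```

In this sketch the bandit gradually shifts probability toward whichever arm has produced fairness gains, while the uniform exploration term keeps every arm playable in case the rewards change over time.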

More details on this research are available on the lab website (https://sites.google.com/view/whanglab/di-lab).

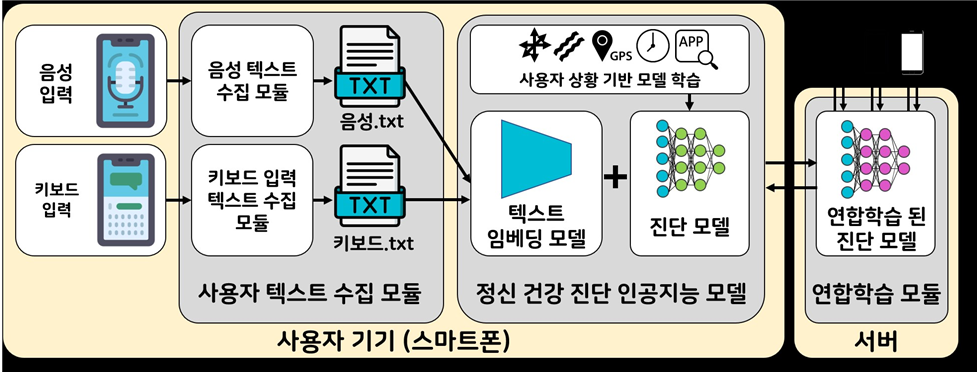

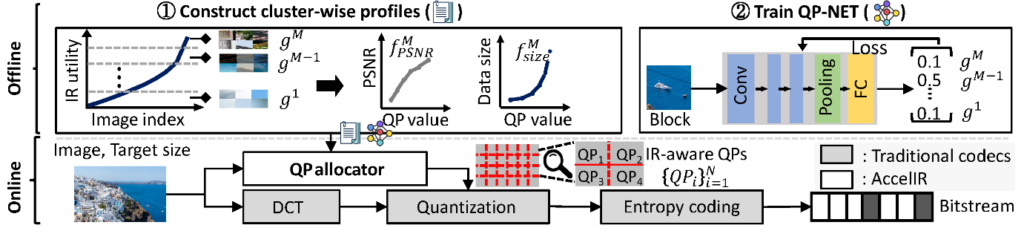

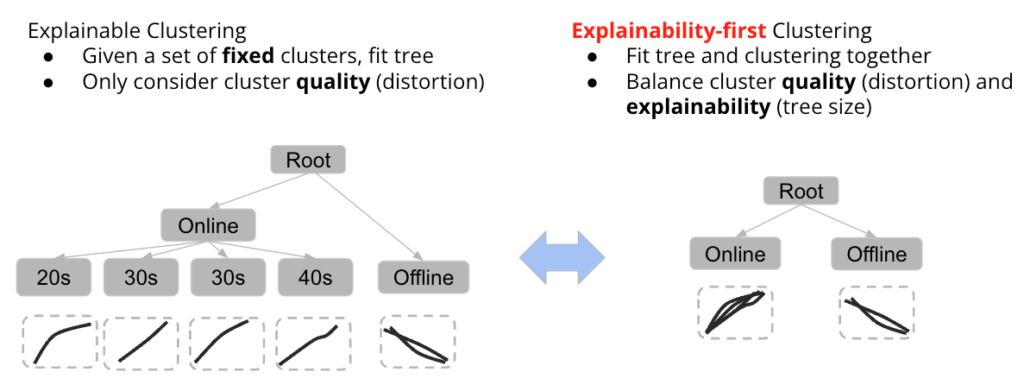

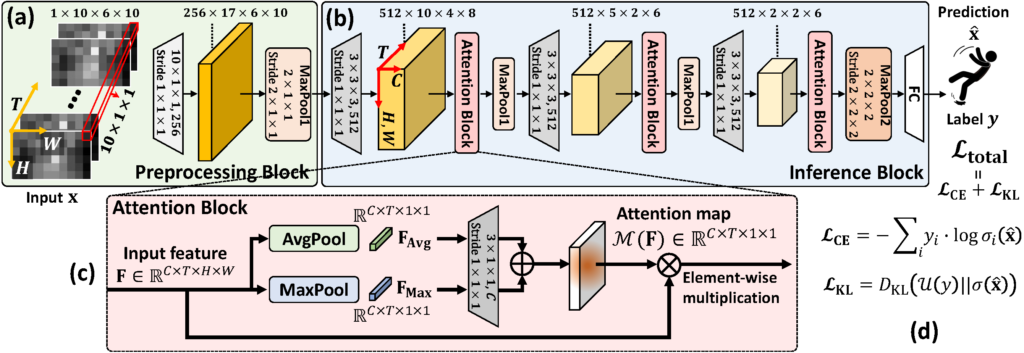

[Figure 1. Fair active learning]

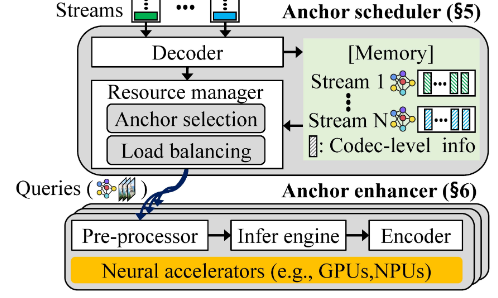

[Figure 2. FALCON workflow]