A research team led by Professor Junmo Kim from the Department of Electrical Engineering at KAIST has developed an innovative AI technology that can envision and understand how images change, similar to how humans imagine transformations like rotation or recoloring. This breakthrough goes beyond simply analyzing images, enabling the AI to comprehend and express the processes involved in transforming visual data. The technology holds promise for diverse applications, including medical imaging, autonomous driving, and robotics, where precision and adaptability are essential.

AI That Understands How Images Change, Like Humans

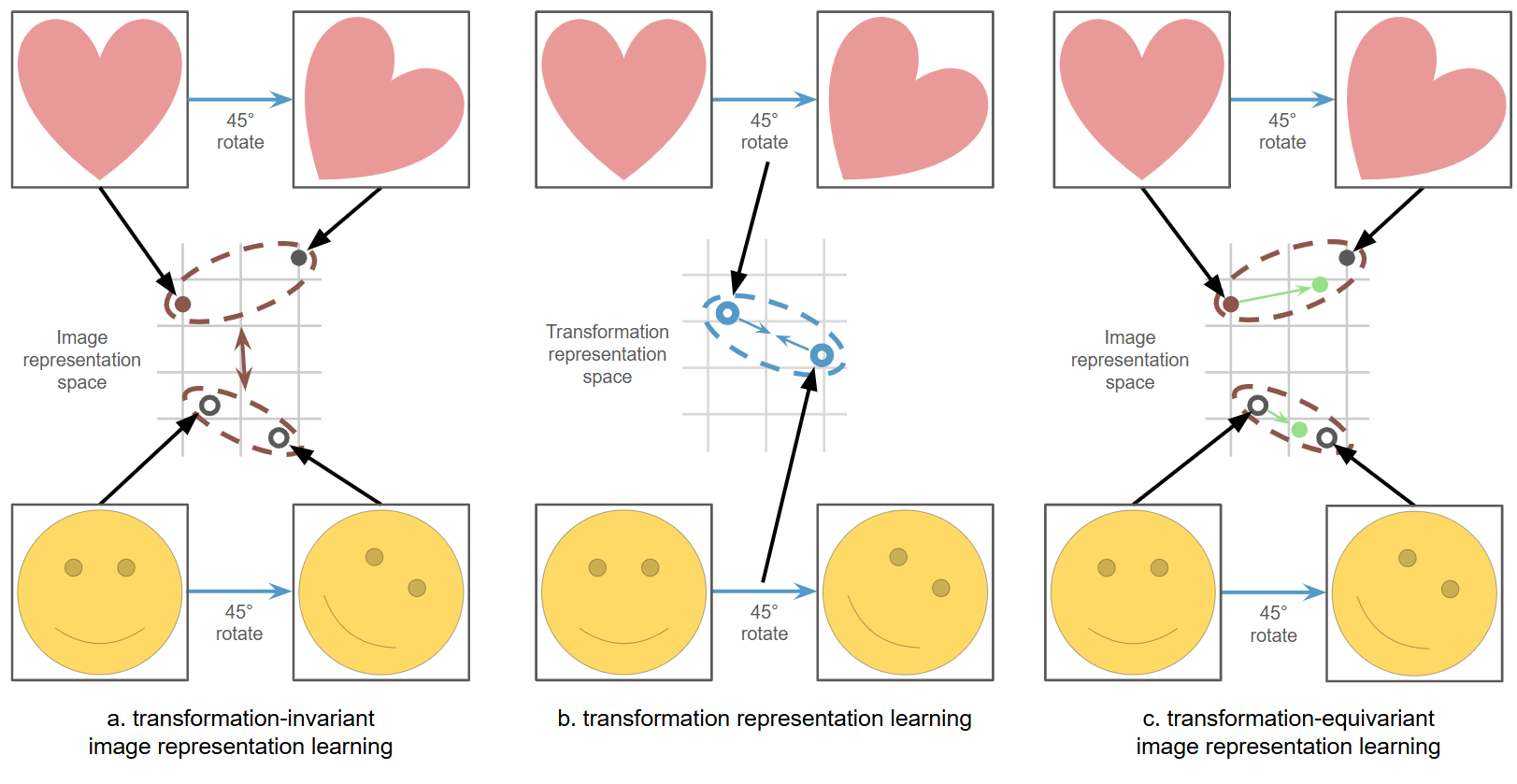

The newly developed technology, Self-supervised Transformation Learning (STL), focuses on enabling AI to learn how images transform. STL operates without relying on human-provided labels; instead, it learns transformations by comparing original images with their transformed versions. It independently recognizes changes such as “This has been rotated” or “The color has changed.” This process parallels the way humans observe, imagine, and interpret variations in visual data.
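
The idea of learning a transformation from an image pair rather than from a label can be illustrated with a small sketch. This is not the team's implementation: the hand-written `encode` function stands in for a learned neural encoder, and `brighten`, `encode`, and `transformation_code` are hypothetical names chosen for illustration. The sketch only shows that a description of the change can be read off by comparing the features of the original and the transformed image, and that in this toy setting the description does not depend on which image was used.

```python
def brighten(img, delta):
    """Add a constant to every pixel of an image given as a list of rows."""
    return [[p + delta for p in row] for row in img]


def encode(img):
    """Toy stand-in for a learned encoder (an assumption, not the STL model).

    Returns (mean intensity, left-minus-right contrast).
    """
    half = len(img[0]) // 2
    count = sum(len(row) for row in img)
    total = sum(p for row in img for p in row)
    left = sum(p for row in img for p in row[:half])
    right = sum(p for row in img for p in row[half:])
    return (total / count, (left - right) / count)


def transformation_code(original, transformed):
    """Describe the change by comparing the two feature vectors.

    No label such as "brightened by 8" is passed in; the pair is enough.
    """
    before, after = encode(original), encode(transformed)
    return tuple(b - a for a, b in zip(before, after))


# Two unrelated toy images.
img_a = [[0, 10], [20, 30]]
img_b = [[5, 5], [40, 2]]

# The same transformation applied to different images gives the same code.
code_a = transformation_code(img_a, brighten(img_a, 8))
code_b = transformation_code(img_b, brighten(img_b, 8))

# A different transformation gives a different code.
code_other = transformation_code(img_a, brighten(img_a, 3))

print(code_a == code_b, code_a == code_other)
```

In a real system the encoder is trained rather than hand-written, and the transformations are far richer than a brightness shift; the sketch only conveys why image pairs can replace human-provided labels.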

Overcoming the Limitations of Conventional Methods

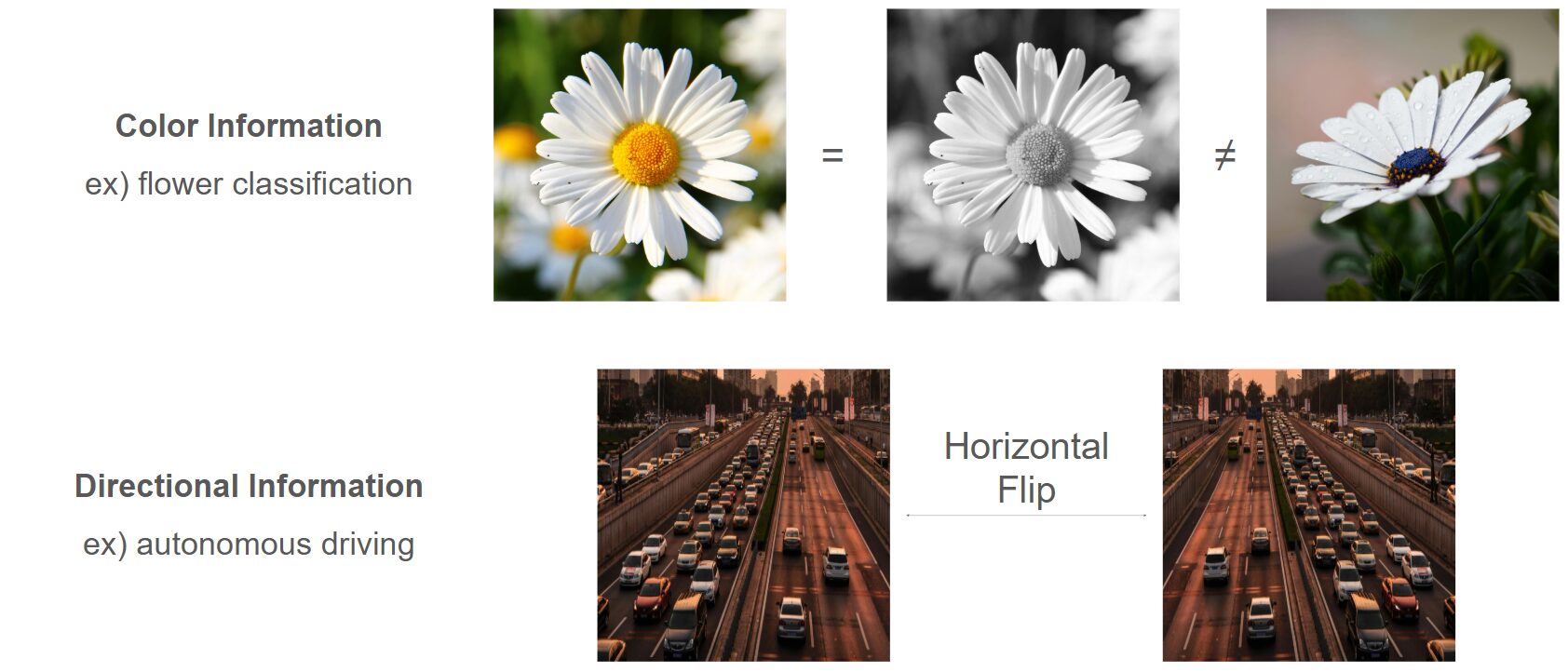

Traditional AI systems often struggle with subtle transformations, focusing primarily on capturing large, overarching features while ignoring finer details. This limitation becomes a significant challenge in scenarios where precise understanding of intricate changes is crucial.

STL addresses this gap by learning to encode even the smallest transformations in an image into its feature space, a conceptual map representing the relationships between different data points. Rather than ignoring these changes, STL incorporates them into its feature representations, enabling more accurate and nuanced outcomes.
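
The difference between ignoring a transformation and incorporating it into the feature space can be made concrete with a toy example. The sketch below is an illustration under stated assumptions, not the STL method: both feature functions are hand-written, and the names `invariant_features`, `equivariant_features`, and `rotate_features` are hypothetical. The first set of features is identical before and after a rotation, so the change is lost. The second set changes in a predictable way, so the rotation remains visible in the features; this property is what the paper's title calls equivariance.

```python
def rotate90(img):
    """Rotate a square image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def invariant_features(img):
    """Sorted pixel values: identical before and after rotation."""
    return sorted(p for row in img for p in row)


def equivariant_features(img):
    """Mean of each quadrant, in clockwise order: TL, TR, BR, BL."""
    n = len(img)
    half = n // 2
    first, second = range(0, half), range(half, n)

    def mean(rows, cols):
        vals = [img[r][c] for r in rows for c in cols]
        return sum(vals) / len(vals)

    return [
        mean(first, first),    # top-left
        mean(first, second),   # top-right
        mean(second, second),  # bottom-right
        mean(second, first),   # bottom-left
    ]


def rotate_features(feats):
    """Feature-space counterpart of rotate90: shift quadrants one step clockwise."""
    return feats[-1:] + feats[:-1]


img = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
rotated = rotate90(img)

# Invariant features cannot tell the two images apart.
print(invariant_features(rotated) == invariant_features(img))

# Equivariant features change, and the change mirrors the rotation itself.
print(equivariant_features(rotated) == rotate_features(equivariant_features(img)))
```

Here the feature-space counterpart of the rotation is written by hand. The article describes STL as learning to represent such transformations from data instead.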

For example, STL excels at recognizing specific alterations, such as random cropping, brightness adjustments, and color modifications, achieving performance improvements of up to 42% over conventional methods. It is particularly adept at handling complex transformations that were previously difficult for AI to manage.

Smarter AI for Broader Applications

What sets STL apart is its ability to not only understand visual content but also learn and represent transformations themselves. This capability allows STL to detect subtle changes in medical images, such as CT scans, and better interpret diverse conditions in autonomous driving. By incorporating transformations into its understanding, STL can deliver safer and more precise results across various applications.

Toward Human-Like Understanding

“STL represents a significant leap forward in AI technology, closely mirroring the way humans perceive and interpret changes in images,” said Professor Junmo Kim. “This approach has the potential to drive innovations in fields such as healthcare, robotics, and self-driving cars, where understanding transformations is critical.”

The research, with KAIST PhD candidate Jaemyung Yu as first author, was presented at NeurIPS 2024, one of the world’s leading AI conferences, under the title “Self-supervised Transformation Learning for Equivariant Representations.” It was supported by the Ministry of Science and ICT through the Institute of Information and Communications Technology Planning and Evaluation (IITP) as part of the SW StarLab program (No. RS-2024-00439020, Development of Sustainable Real-time Multimodal Interactive Generative AI).