We thrive to be the world’s top IT powerhouse.

Our mission is to lead innovations in information technology, create lasting impact, and educate next-generation leaders of the world.

AI and machine learning are a key thrust in EE research

AI/machine learning efforts are already a big part of ongoing research in all six divisions (Computer, Communication, Signal, Wave, Circuit, and Device) of KAIST EE.


Jae-Woong Jeong’s Team Develops Phase-Change Metal Ink

Kyeongha Kwon’s Team Enables Battery-Free CO₂ Monitoring

Yongdae Kim · Insu Yun’s Team Uncovers Risks in Mandatory KSA Tools

Wins Global Robotics Challenge

Hyunchul Shim’s Team Wins 3rd Place at A2RL Autonomous Drone Racing Competition

develops a simulation framework called vTrain

Achieves Human-Level Tactile Sensing with Breakthrough Pressure Sensor

Seungwon Shin’s Team Validates Cyber Risks of LLMs

Wearable Carbon Dioxide Sensor

Highlights

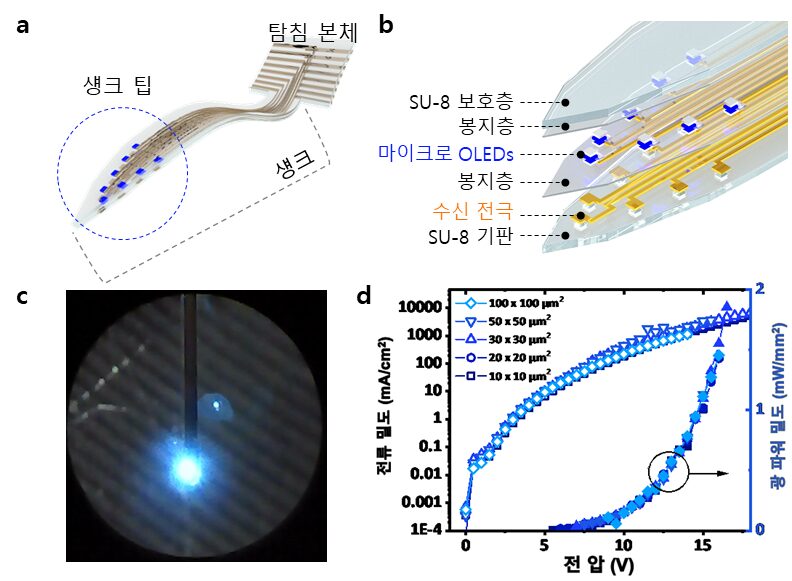

However, the devices’ electrical properties degrade easily in the presence of moisture or water, which has limited their use as implantable bioelectronics. Furthermore, optimizing the high-resolution integration process on thin, flexible probes remained a challenge.

To address this, the team enhanced the operational reliability of OLEDs in moist, oxygen-rich environments and minimized tissue damage during implantation. They patterned an ultrathin, flexible encapsulation layer* composed of aluminum oxide and parylene-C (Al₂O₃/parylene-C) at widths of 260–600 micrometers (μm) to maintain biocompatibility.

*Encapsulation layer: A barrier that completely blocks oxygen and water molecules from the external environment, ensuring the longevity and reliability of the device.

When integrating the high-resolution micro OLEDs, the researchers also used parylene-C, the same biocompatible material as the encapsulation layer, to maintain flexibility and safety. To eliminate electrical interference between adjacent OLED pixels and spatially separate them, they introduced a pixel define layer (PDL), enabling the independent operation of eight micro OLEDs.

Furthermore, they precisely controlled the residual stress and thickness in the multilayer film structure of the device, ensuring its flexibility even in biological environments. This optimization allowed the probe to be inserted without bending and without external shuttles or needles, minimizing mechanical stress during implantation.

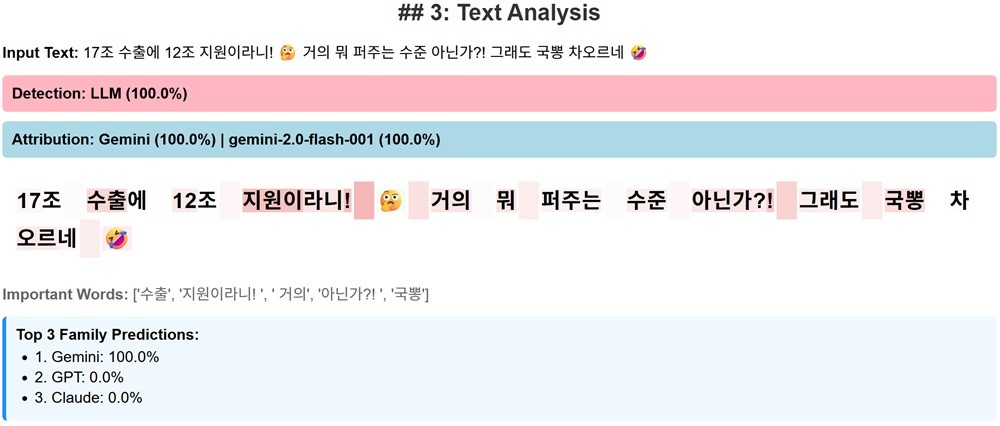

As generative AI technology advances, so do concerns about its potential misuse in manipulating online public opinion. Although detection tools for AI-generated text have been developed previously, most were built on long, standardized English texts and therefore perform poorly on short (51 characters on average), colloquial Korean news comments. A research team from KAIST has made headlines by developing the first technology to detect AI-generated comments in Korean.

A research team led by Professor Yongdae Kim from KAIST’s School of Electrical Engineering, in collaboration with the National Security Research Institute, has developed XDAC, the world’s first system for detecting AI-generated comments in Korean.

Recent generative AI can adjust sentiment and tone to match the context of a news article and can automatically produce hundreds of thousands of comments within hours, enabling large-scale manipulation of public discourse. Based on the pricing of OpenAI’s GPT-4o API, generating a single comment costs approximately 1 KRW. At this rate, producing the roughly 200,000 comments posted daily on major news platforms would cost only about 200,000 KRW (approx. USD 150) per day. With publicly available LLMs running on one’s own GPU infrastructure, massive volumes of comments can be generated at virtually no cost.
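The cost estimate above is simple arithmetic, and a minimal sketch makes it easy to rerun with other volumes or prices. The per-comment cost and daily volume are the figures quoted in the article. The exchange rate is an assumption derived from the article's own "200,000 KRW ≈ USD 150" conversion, and the function name is hypothetical.

```python
def daily_generation_cost_krw(comments_per_day: int, cost_per_comment_krw: float) -> float:
    """Total API cost in KRW for generating the given number of comments per day."""
    return comments_per_day * cost_per_comment_krw


# Figures quoted in the article.
COMMENTS_PER_DAY = 200_000
COST_PER_COMMENT_KRW = 1.0

# Assumption: exchange rate implied by the article's "200,000 KRW ≈ USD 150".
ASSUMED_KRW_PER_USD = 200_000 / 150

cost_krw = daily_generation_cost_krw(COMMENTS_PER_DAY, COST_PER_COMMENT_KRW)
cost_usd = cost_krw / ASSUMED_KRW_PER_USD
print(f"{cost_krw:,.0f} KRW per day (about USD {cost_usd:.0f})")
```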

The team conducted a human evaluation to see whether people could distinguish AI-generated comments from human-written ones. Of 210 comments tested, participants mistook 67% of AI-generated comments for human-written, while only 73% of genuine human comments were correctly identified. In other words, even humans find it difficult to accurately tell AI comments apart. Moreover, AI-generated comments scored higher than human comments in relevance to article context (95% vs. 87%) and fluency (71% vs. 45%), and showed a lower perceived bias rate (33% vs. 50%).

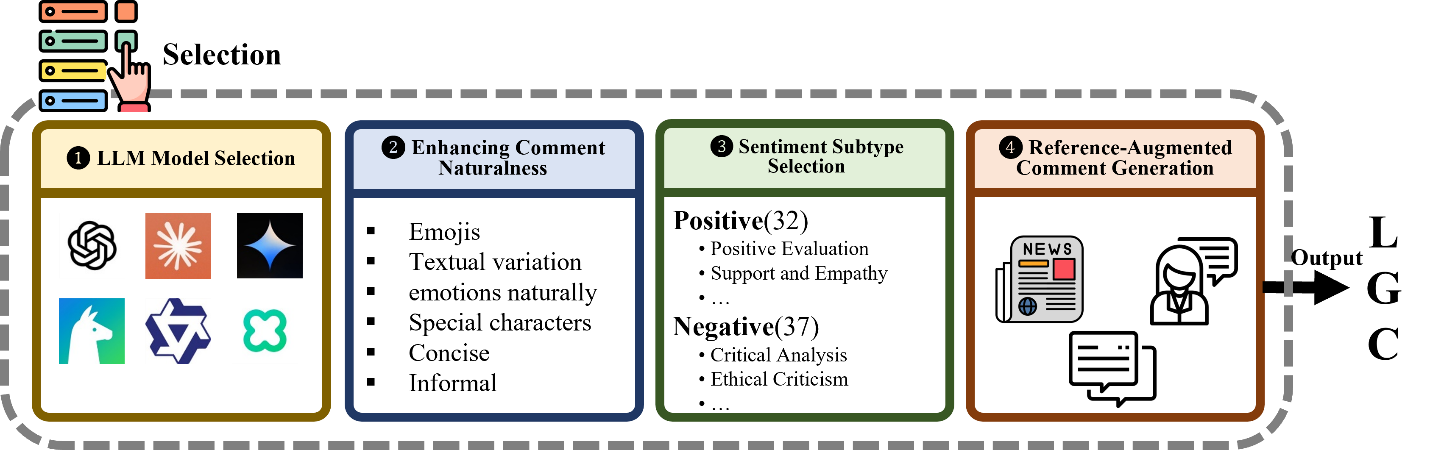

Until now, AI-generated text detectors have relied on long, formal English prose and fail to perform well on brief, informal Korean comments. Such short comments lack sufficient statistical features and abound in nonstandard colloquial elements, such as emojis, slang, and repeated characters, to which existing models do not generalize well. Additionally, realistic datasets of Korean AI-generated comments have been scarce, and simple prompt-based generation methods produced limited diversity and authenticity.

To overcome these challenges, the team developed an AI comment generation framework that employs four core strategies: 1) leveraging 14 different LLMs, 2) enhancing naturalness, 3) fine-grained emotion control, and 4) reference-based augmented generation, to build a dataset mirroring real user styles. A subset of this dataset has been released as a benchmark. By applying explainable AI (XAI) techniques to a precise linguistic analysis, they uncovered linguistic and stylistic features unique to AI-generated comments.

For example, AI-generated comments tended to use formal expressions like “것 같다” (“it seems”) and “에 대해” (“about”), along with a high frequency of conjunctions, whereas human commentators favored repeated characters (ㅋㅋㅋㅋ), emotional interjections, line breaks, and special symbols.

In the use of special characters, AI models predominantly employed globally standardized emojis, while real humans incorporated culturally specific characters including Korean consonants (ㅋ, ㅠ, ㅜ) and symbols (ㆍ, ♡, ★, •).

Notably, 26% of human comments included formatting characters (line breaks, multiple spaces), compared to just 1% of AI-generated ones. Similarly, repeated-character usage (e.g. ㅋㅋㅋㅋ, ㅎㅎㅎㅎ, etc.) appeared in 52% of human comments but only 12% of AI comments.

XDAC captures these distinctions to boost detection accuracy. It transforms formatting characters (line breaks, spaces) and normalizes repeated-character patterns into machine-readable features. It also learns each LLM’s unique linguistic fingerprint, enabling it to identify which model generated a given comment.
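As a rough illustration of the kind of preprocessing described above, the following sketch turns line breaks, multiple spaces, and repeated-character runs into explicit marker tokens. It is not XDAC's actual implementation. The token names, the three-character threshold for a "repeated" run, and both function names are assumptions chosen for the example.

```python
import re

# Hypothetical marker tokens; the paper's actual feature encoding may differ.
NEWLINE_TOKEN = "<NL>"
MULTISPACE_TOKEN = "<SP>"
REPEAT_TOKEN = "<REP>"

# Assumption: a "repeated-character pattern" is the same non-space character 3+ times in a row.
_REPEAT_RUN = re.compile(r"(\S)\1{2,}")
_MULTISPACE = re.compile(r" {2,}")


def surface_features(text: str) -> dict:
    """Flag the formatting cues that the article says are typical of human comments."""
    return {
        "has_line_break": "\n" in text,
        "has_multi_space": bool(_MULTISPACE.search(text)),
        "has_repeated_chars": bool(_REPEAT_RUN.search(text)),
    }


def normalize_comment(text: str) -> str:
    """Rewrite formatting characters and repeated runs as explicit tokens."""
    text = text.replace("\r\n", "\n")
    # Collapse each long run to one character plus a marker, e.g. "ㅋㅋㅋㅋ" -> "ㅋ<REP>".
    text = _REPEAT_RUN.sub(lambda m: m.group(1) + REPEAT_TOKEN, text)
    text = _MULTISPACE.sub(MULTISPACE_TOKEN, text)
    return text.replace("\n", NEWLINE_TOKEN)


human_like = "ㅋㅋㅋㅋ 대박\n진짜  웃김"
print(surface_features(human_like))
print(normalize_comment(human_like))
```

Making these cues explicit keeps them visible to a downstream classifier even if its tokenizer would otherwise drop whitespace or split long character runs inconsistently.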

With these optimizations, XDAC achieves a 98.5% F1 score in detecting AI-generated comments, a 68% improvement over previous methods, and records an 84.3% F1 score in identifying the specific LLM used for generation.
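For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision and recall. The sketch below computes it from raw counts. The counts in the usage lines are made-up illustrative numbers, not results from the paper.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Harmonic mean of precision and recall, computed from confusion counts."""
    predicted_positive = true_positives + false_positives
    actual_positive = true_positives + false_negatives
    precision = true_positives / predicted_positive if predicted_positive else 0.0
    recall = true_positives / actual_positive if actual_positive else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Illustrative counts only (not taken from the XDAC evaluation).
example_f1 = f1_score(true_positives=90, false_positives=10, false_negatives=10)
print(f"F1 = {example_f1:.3f}")
```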

Professor Yongdae Kim emphasized, “This study is the world’s first to detect short comments written by generative AI with high accuracy and to attribute them to their source model. It lays a crucial technical foundation for countering AI-based public opinion manipulation.”

The team also notes that XDAC’s detection capability may have a deterrent effect, much like sobriety checkpoints, drug testing, or CCTV installation: its mere existence can reduce the incentive to misuse AI.

Platform operators can deploy XDAC to monitor and respond to suspicious accounts or coordinated manipulation attempts, with strong potential for expansion into real-time surveillance systems or automated countermeasures.

The core contribution of this work is the XAI-driven detection framework. It has been accepted to the main conference of ACL 2025, the premier venue in natural language processing, taking place on July 27th.

※Paper Title:

XDAC: XAI-Driven Detection and Attribution of LLM-Generated News Comments in Korean

※Full Paper:

https://github.com/airobotlab/XDAC/blob/main/paper/250611_XDAC_ACL2025_camera_ready.pdf

This research was conducted under the supervision of Professor Yongdae Kim at KAIST, with Senior Researcher Wooyoung Go (NSR and PhD candidate at KAIST) as the first author, and Professors Hyoungshick Kim (Sungkyunkwan University) and Alice Oh (KAIST) as co-authors.

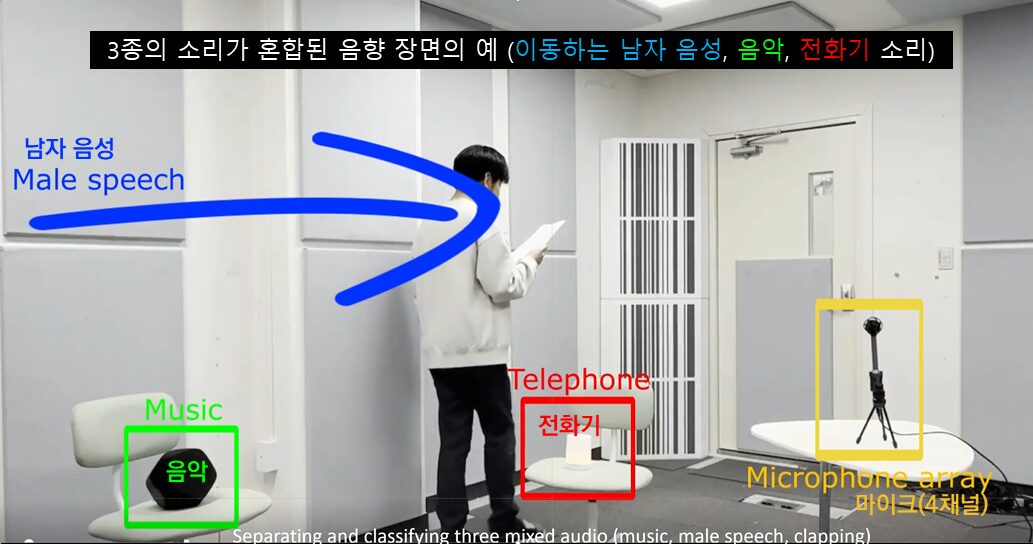

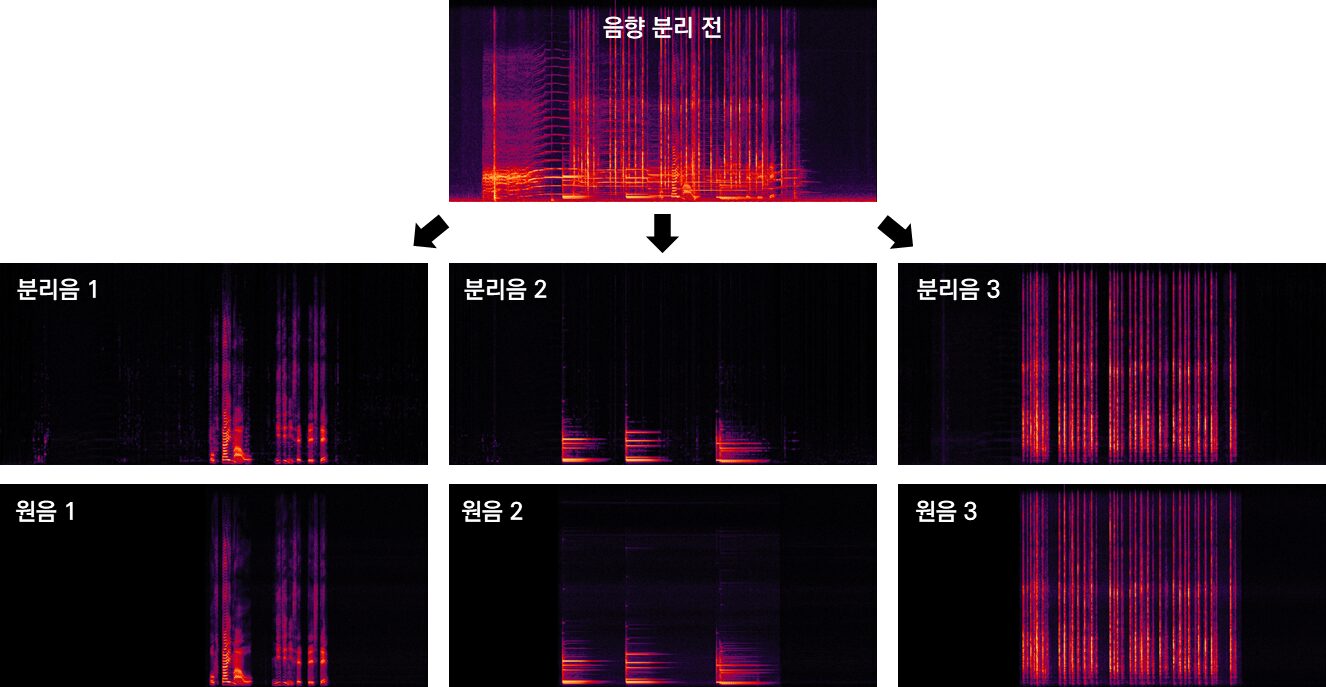

Acoustic source separation and classification is a key next-generation AI technology for early detection of anomalies in drone operations, piping faults, or border surveillance, and for enabling spatial audio editing in AR/VR content production.

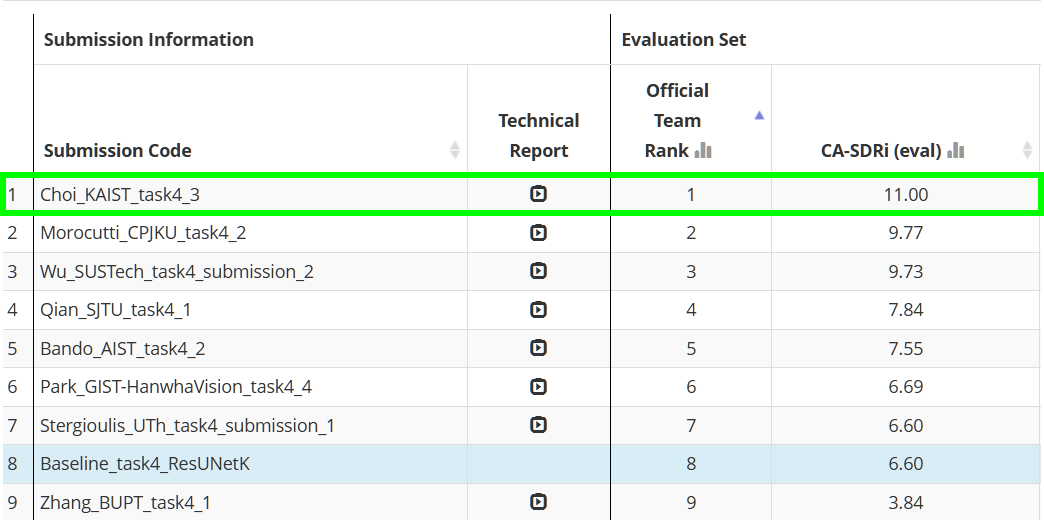

Professor Jung-Woo Choi’s research team from the School of Electrical Engineering won first place in the “Spatial Semantic Segmentation of Sound Scenes” task of the “IEEE DCASE Challenge 2025.”

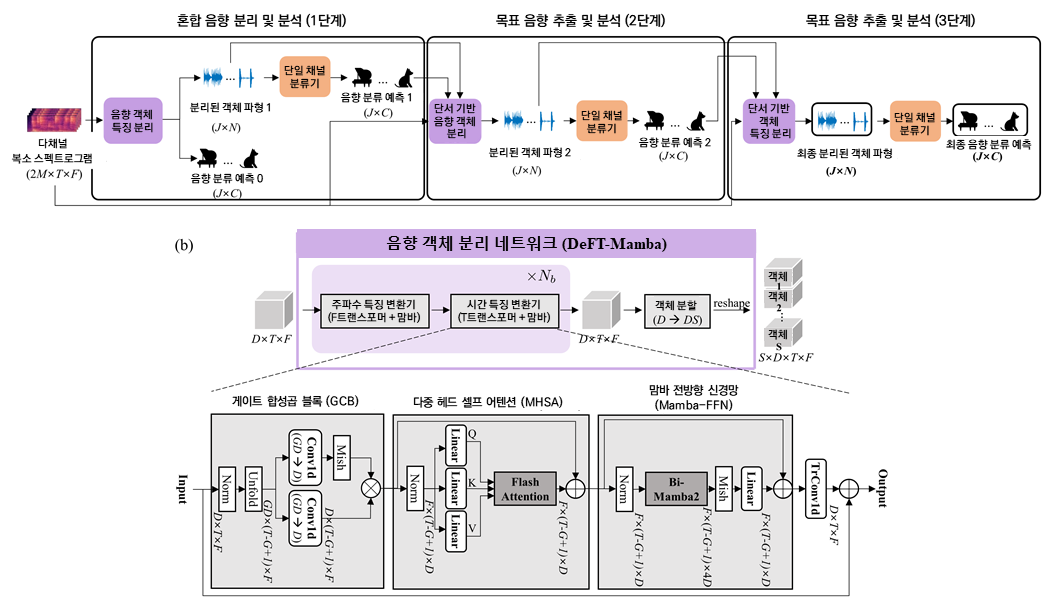

This year’s challenge featured 86 teams competing across six tasks. In their first-ever participation, KAIST’s team ranked first in Task 4: Spatial Semantic Segmentation of Sound Scenes—a highly demanding task requiring the analysis of spatial information in multi-channel audio signals with overlapping sound sources. The goal was to separate individual sounds and classify them into 18 predefined categories. The team, composed of Dr. Dongheon Lee, integrated MS-PhD student Younghoo Kwon, and MS student Dohwan Kim, will present their results at the DCASE Workshop in Barcelona this October.

Earlier this year, Dr. Dongheon Lee developed a state-of-the-art sound source separation AI combining Transformer and Mamba architectures. For the challenge, the team, led by Younghoo Kwon, established a chain-of-inference architecture: a first stage separates waveforms and identifies source types, and the next stage refines the estimate by using the estimated waveforms and classes as clues for target signal extraction.

This chain-of-inference approach is inspired by the human auditory scene analysis mechanism, which isolates individual sounds by focusing on incomplete clues such as sound type, rhythm, or direction.

In the evaluation metric CA-SDRi (Class-aware Signal-to-distortion Ratio improvement)*, the team was the only participant to achieve a double-digit improvement of 11 dB, demonstrating their technical excellence.

*CA-SDRi (Class-aware Signal-to-distortion Ratio improvement) measures how much clearer and less distorted the target sound is compared with the original mix.
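To make the idea of an "improvement in dB" concrete, here is a minimal sketch of plain SDR improvement (SDRi): the SDR of the separated estimate minus the SDR of the unprocessed mixture, both measured against the clean target. It does not model the class-aware part of CA-SDRi, and the toy signals and function names are assumptions for illustration only.

```python
import math


def sdr_db(reference, estimate):
    """Signal-to-distortion ratio in dB: 10 * log10(||s||^2 / ||s - s_hat||^2).

    Assumes the estimate is not identical to the reference (non-zero error).
    """
    signal_power = sum(s * s for s in reference)
    error_power = sum((s - e) ** 2 for s, e in zip(reference, estimate))
    return 10.0 * math.log10(signal_power / error_power)


def sdr_improvement_db(reference, mixture, estimate):
    """How many dB the separated estimate gains over the raw mixture."""
    return sdr_db(reference, estimate) - sdr_db(reference, mixture)


# Toy signals: a clean target plus an interfering source.
target = [1.0, -1.0, 1.0, -1.0]
interference = [0.5, 0.5, -0.5, -0.5]
mixture = [t + i for t, i in zip(target, interference)]
# Pretend a separator removed 90% of the interference amplitude.
estimate = [t + 0.1 * i for t, i in zip(target, interference)]

print(f"SDRi = {sdr_improvement_db(target, mixture, estimate):.1f} dB")
```

Because the scale is logarithmic, every additional 10 dB corresponds to a tenfold reduction in residual distortion power relative to the target.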

Professor Choi remarked, “I am proud that the world-leading acoustic separation AI models our team has developed over the past three years have now received formal recognition. Despite the greatly increased difficulty and the limited development window due to other conference schedules and final exams, each member demonstrated focused research that led to first place.”

The “IEEE DCASE Challenge 2025” was held online, with submissions accepted from April 1st to June 15th and results announced on June 30th. Since its inception in 2013 under the IEEE Signal Processing Society, the challenge has served as a global stage for AI models in the acoustic field.


This research was supported by the National Research Foundation of Korea’s Mid-Career Researcher Program and STEAM Research Project, funded by the Ministry of Education, and the Future Defense Research Center, funded by the Defense Acquisition Program Administration and the Agency for Defense Development.

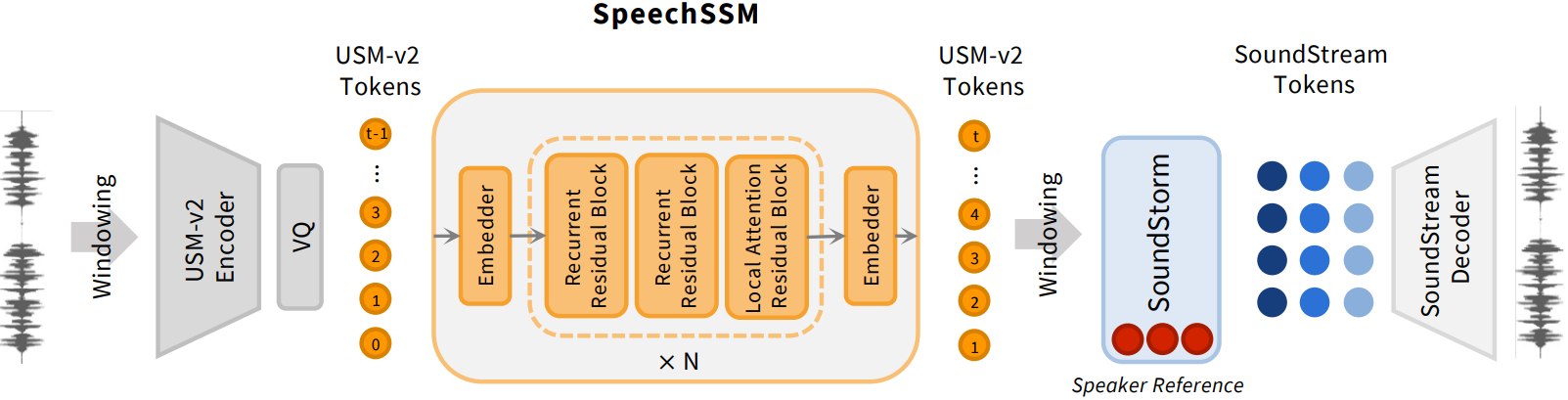

Recently, Spoken Language Models (SLMs) have been spotlighted as a next-generation technology that surpasses the limitations of text-based language models by learning human speech without text to understand and generate linguistic and non-linguistic information. However, existing models showed significant limitations in generating the long-duration content required for podcasts, audiobooks, and voice assistants. Now, a KAIST researcher has succeeded in overcoming these limitations by developing ‘SpeechSSM,’ which enables consistent and natural speech generation without time constraints.

Ph.D. candidate Se Jin Park from Professor Yong Man Ro’s research team in the School of Electrical Engineering has developed ‘SpeechSSM,’ a spoken language model capable of generating long-duration speech.

A major advantage of Spoken Language Models (SLMs) is their ability to process speech directly, without intermediate text conversion. This lets them leverage the unique acoustic characteristics of human speakers and generate high-quality speech rapidly, even in large-scale models.

However, existing models faced difficulties in maintaining semantic and speaker consistency for long-duration speech due to increased ‘speech token resolution’ and memory consumption when capturing very detailed information by breaking down speech into fine fragments.

To solve this problem, Se Jin Park developed ‘SpeechSSM,’ a spoken language model using a Hybrid State-Space Model, designed to efficiently process and generate long speech sequences.

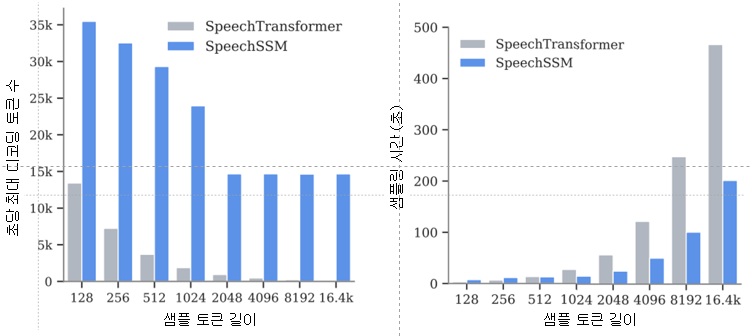

This model employs a ‘hybrid structure’ that alternately places ‘attention layers’ focusing on recent information and ‘recurrent layers’ that remember the overall narrative flow (long-term context). This allows the story to flow smoothly without losing coherence even when generating speech for a long time. Furthermore, memory usage and computational load do not increase sharply with input length, enabling stable and efficient learning and the generation of long-duration speech.

SpeechSSM effectively processes unbounded speech sequences by dividing speech data into short, fixed units (windows), processing each unit independently, and then combining them to create long speech.
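The windowing idea described above can be sketched in a few lines: split a long token sequence into fixed-size windows, process each window on its own, and concatenate the results. This is only an illustration of the general technique, not SpeechSSM's implementation. The non-overlapping windows, the integer "tokens", and the function names are all assumptions.

```python
from typing import Callable, List, Sequence


def split_into_windows(tokens: Sequence[int], window_size: int) -> List[Sequence[int]]:
    """Cut a sequence into consecutive fixed-size windows (the last one may be shorter)."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    return [tokens[i:i + window_size] for i in range(0, len(tokens), window_size)]


def process_in_windows(
    tokens: Sequence[int],
    window_size: int,
    process_window: Callable[[Sequence[int]], List[int]],
) -> List[int]:
    """Apply process_window to each window independently and join the outputs in order."""
    output: List[int] = []
    for window in split_into_windows(tokens, window_size):
        output.extend(process_window(window))
    return output


# Stand-in for a per-window model call: here it simply doubles each token.
def toy_decoder(window: Sequence[int]) -> List[int]:
    return [2 * t for t in window]


long_sequence = list(range(10))
print(split_into_windows(long_sequence, 4))
print(process_in_windows(long_sequence, 4, toy_decoder))
```

Because each call only ever sees one window, peak memory depends on the window size rather than on the total sequence length.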

Additionally, in the speech generation phase, it uses a ‘Non-Autoregressive’ audio synthesis model (SoundStorm), which rapidly generates multiple parts at once instead of slowly creating one character or one word at a time, enabling the fast generation of high-quality speech.

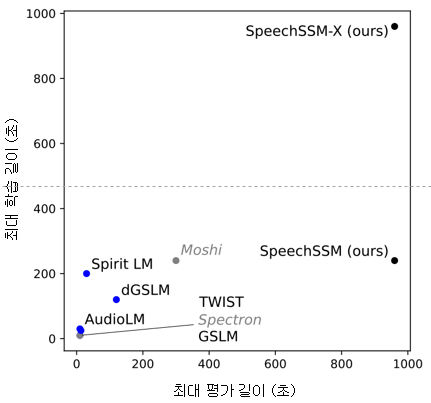

Whereas existing models were typically evaluated on short speech samples of about 10 seconds, Se Jin Park created new evaluation tasks for speech generation based on a self-built benchmark dataset, ‘LibriSpeech-Long,’ which supports evaluating generated speech of up to 16 minutes.

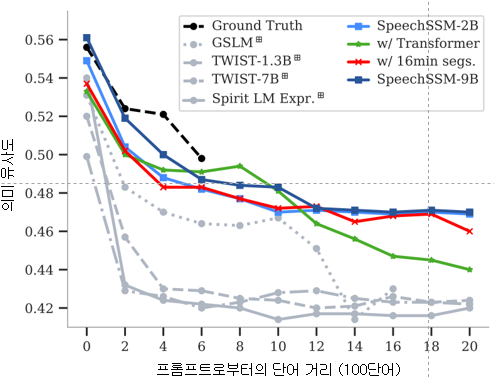

In contrast to PPL (Perplexity), an existing speech model evaluation metric that only indicates grammatical correctness, she proposed new evaluation metrics such as ‘SC-L (semantic coherence over time)’ to assess content coherence over time, and ‘N-MOS-T (naturalness mean opinion score over time)’ to evaluate naturalness over time, enabling more effective and precise evaluation.

Whereas conventional SLMs have been trained and evaluated on sequences of up to 200 seconds in length, SpeechSSM can be trained and evaluated on speech of up to 16 minutes. While the proposed model can theoretically generate speech of infinite length with constant memory usage, the experiments were limited to 16 minutes for evaluation purposes.

Through these new evaluations, it was confirmed that speech generated by SpeechSSM consistently featured the specific individuals mentioned in the initial prompt, and that new characters and events unfolded in a natural, contextually consistent manner despite the long duration. This contrasts sharply with existing models, which tended to lose their topic and fall into repetition during long-duration generation.

Ph.D. candidate Se Jin Park explained, “Existing spoken language models had limitations in long-duration generation, so our goal was to develop a spoken language model capable of generating long-duration speech for actual human use.” She added, “This research achievement is expected to greatly contribute to various types of voice content creation and voice AI fields like voice assistants, by maintaining consistent content in long contexts and responding more efficiently and quickly in real time than existing methods.”

This research, with Se Jin Park as the first author, was conducted in collaboration with Google DeepMind and is scheduled to be presented as an oral presentation at ICML (International Conference on Machine Learning) 2025 on July 16th.

- Paper Title: Long-Form Speech Generation with Spoken Language Models

- DOI: 10.48550/arXiv.2412.18603

Ph.D. candidate Se Jin Park has demonstrated outstanding research capabilities as a member of Professor Yong Man Ro’s MLLM (multimodal large language model) research team, through her work integrating vision, speech, and language. Her achievements include a spotlight paper presentation at 2024 CVPR (Computer Vision and Pattern Recognition) and an Outstanding Paper Award at 2024 ACL (Association for Computational Linguistics).

For more information, you can refer to the publication and accompanying demo: SpeechSSM Publications.

Three Ph.D. students from EE—Kyeongha Rho (advisor: Joon Son Chung), Seokjun Park (advisor: Jinseok Choi), and Juntaek Lim (advisor: Minsoo Rhu)—have been selected as recipients of the 2nd Presidential Science Scholarship for Graduate Students.

Kyeongha Rho is conducting research on multimodal self-supervised learning, as well as multimodal perception and generation models. Seokjun Park is currently researching optimization techniques for low-power beamforming in satellite and multi-access systems for next-generation 6G wireless communications, as well as predictive beamforming utilizing artificial intelligence in integrated sensing and communication (ISAC) systems. Juntaek Lim focuses on developing high-performance, secure computing systems by integrating security across both hardware and software.

The Presidential Science Scholarship for Graduate Students is a new initiative launched last year by the Korea Student Aid Foundation to foster world-class research talent in science and engineering. Final awardees receive a certificate of scholarship in the name of the President, along with financial support—KRW 1.5 million per month (KRW 18 million annually) for master’s students and KRW 2 million per month (KRW 24 million annually) for Ph.D. students.

This year’s selection process for the scholarship was highly competitive, with 2,355 applicants vying for 120 spots, resulting in a competition ratio of approximately 20:1.

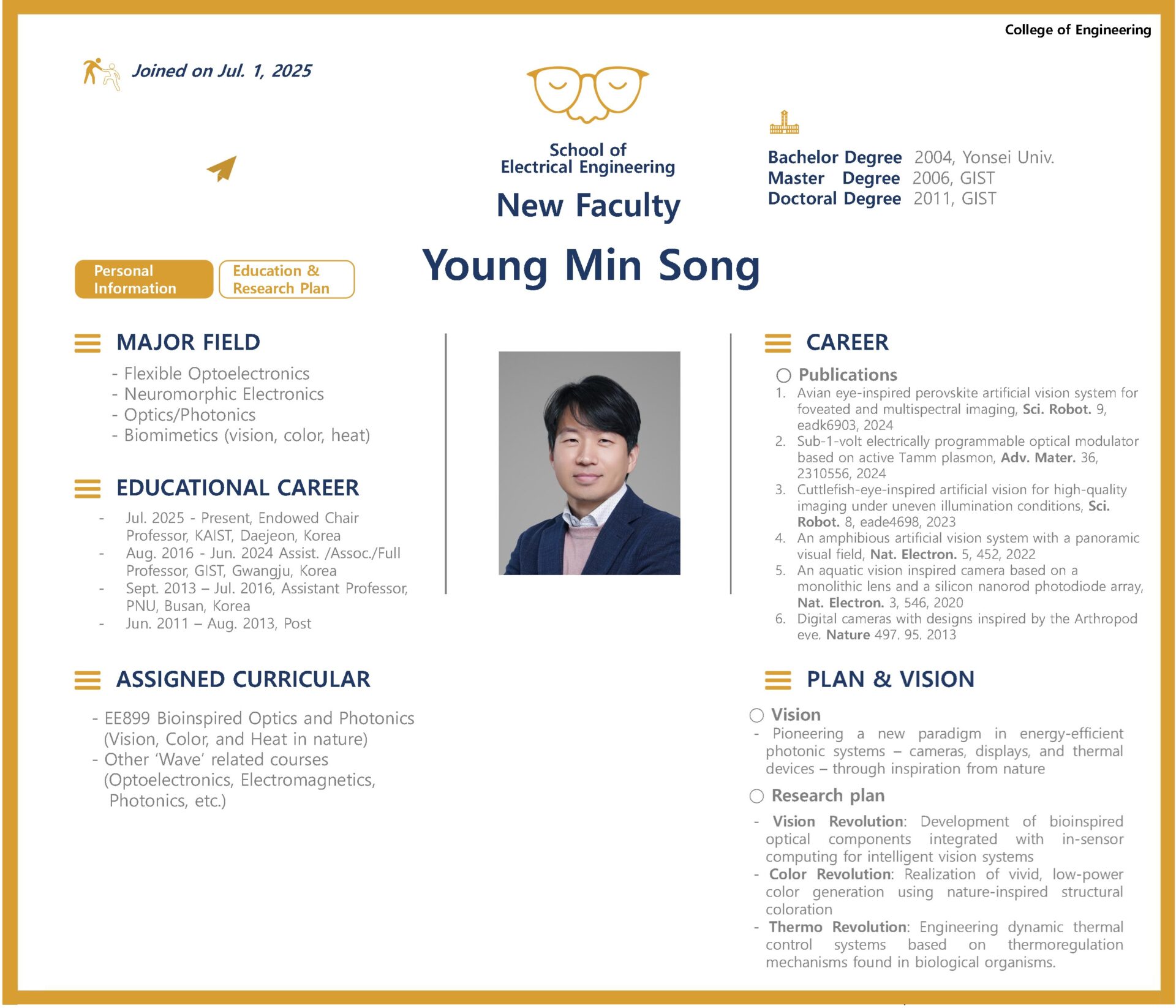

We are pleased to announce that Professor Young Min Song will be joining the KAIST School of Electrical Engineering as of July 1, 2025. Congratulations!

Professor Song’s temporary office is located in Room 1410, Saenel-dong (E3-4). His primary research areas include flexible optoelectronic devices and nanophotonics. He is actively working on biomimetic cameras for intelligent robotics, opto-neuromorphic devices and systems, nanophotonics-based reflective displays, and radiative cooling devices through infrared control. For more details on his research, please visit his homepage.

Homepage: https://www.ymsong.net

Click here to read Professor Song’s recent interview in Nature

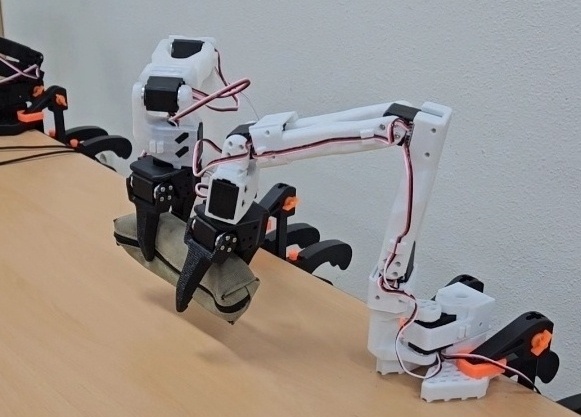

‘Team ACE’ from Professor Dong Eui Chang’s lab in our department achieved outstanding results by winning a Third Prize at the ‘Hugging Face LeRobot Worldwide Hackathon’, held over three days from June 14 to 16.

Composed of Seokjoon Kwon (Master’s Program, Team Leader), Hee-Deok Jang (Ph.D. Program), Hojun Kwon (Master’s Program), Guining Pertin (Master’s Program), and Kyeongdon Lee (Master’s Program) from Professor Dong Eui Chang’s lab, ‘Team ACE’ developed a VLA-based collaborative robot object transfer system and placed 20th out of more than 600 teams worldwide, earning a Third Prize (awarded to teams ranked 6th-24th). The team also received the KIRIA President’s Award (awarded by the Korea Institute for Robot Industry Advancement) from the local organizing committee in Daegu, South Korea.

‘Hugging Face’ is a U.S.-based AI startup known as one of the world’s largest platforms for artificial intelligence, offering widely used machine learning libraries such as Transformers and Datasets. More recently, the company has also been actively providing AI resources for robotics applications.

Hugging Face regularly hosts global hackathons that bring together researchers and students from around the world to compete and collaborate on innovative AI-driven solutions.

This year’s ‘LeRobot Worldwide Hackathon’ gathered over 2,500 AI and robotics experts from 45 countries. Participants were challenged to freely propose and implement solutions to real-world problems in industry and everyday life by applying technologies such as VLA (Vision Language Action) models and reinforcement learning to robotic arms.

Through their achievement in the competition, ‘Team ACE’ was recognized for their technical excellence and creativity by both the global robotics community and experts in South Korea.

The team’s performance at the competition drew considerable attention from local media and was actively reported in regional news outlets.

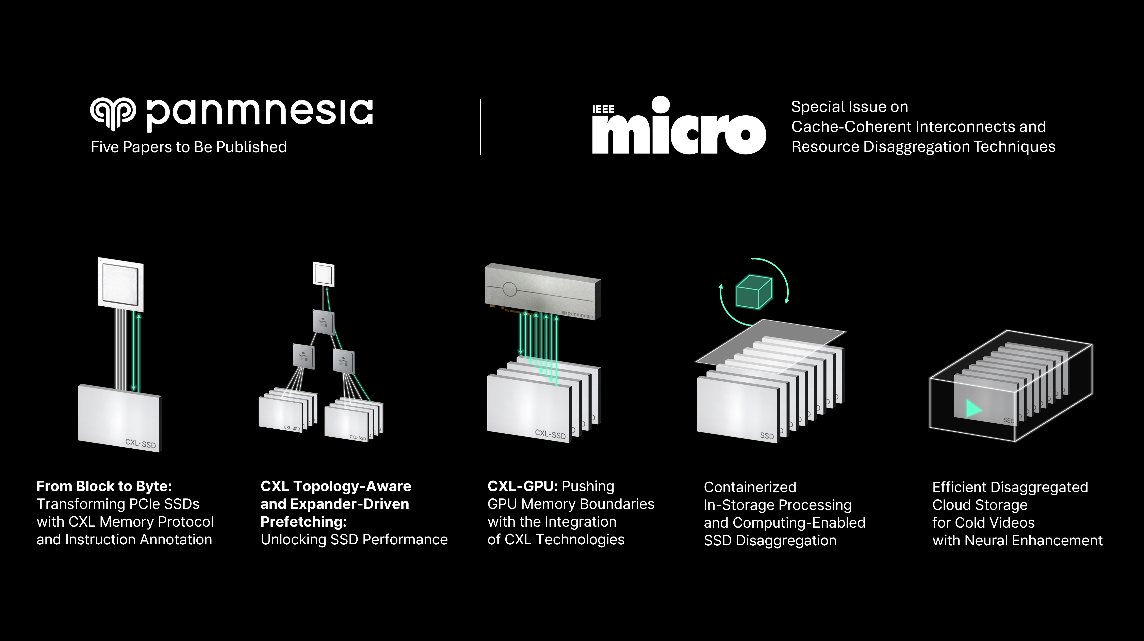

Professor Myoungsoo Jung’s research team will present its work on next-generation interconnect and semiconductor technologies in IEEE Micro, a leading journal in computer architecture.

IEEE Micro, established in 1981, is a bimonthly publication featuring recent advances in computer architecture and semiconductor technologies. Professor Jung’s team will present a total of five papers in the upcoming May-June issue on “Cache Coherent Interconnects and Resource Disaggregation Technology”.

Among them, three papers focus on applying Compute Express Link (CXL) to storage systems. In particular, the team introduces solutions to improve the performance of CXL-SSD, a concept for which Professor Jung proposed a practical implementation in early 2022. These technologies enable memory expansion through large-capacity SSDs while providing performance comparable to DRAM.

The team also explored storage architectures incorporating In-Storage Processing (ISP), which performs computation directly inside the storage pool. By processing data within storage, this approach reduces data movement and thereby improves efficiency in large-scale applications such as large language models (LLMs).

These papers, conducted in collaboration with the faculty-led startup Panmnesia, will be published through IEEE Micro’s official website and in its regular print issue.

- Early Access Link #1: From Blocks to Byte: Transforming PCIe SSDs with CXL Memory Protocol and Instruction Annotation

- Early Access Link #2: CXL Topology-Aware and Expander-Driven Prefetching: Unlocking SSD Performance

- Early Access Link #3: CXL-GPU: Pushing GPU Memory Boundaries with the Integration of CXL Technologies

- Early Access Link #4: Containerized In-Storage Processing and Computing-Enabled SSD Disaggregation

- Early Access Link #5: Efficient Disaggregated Cloud Storage for Cold Videos with Neural Enhancement