EE Professor Kim Joo-Young Develops a Core AI Semiconductor for ChatGPT with a 2.4-Fold Improvement in Price Efficiency

ChatGPT, released by OpenAI, has captured global attention, and everyone is closely watching the changes this technology will bring about.

This technology is based on large language models (LLMs), which are artificial intelligence (AI) models of unprecedented scale compared to conventional AI.

However, the operation of these models requires a significant number of high-performance GPUs, leading to astronomical computing costs.

KAIST (President: Lee Kwang-Hyung) announced that a research team led by EE Professor Kim Joo-Young has successfully developed an AI semiconductor that efficiently accelerates the inference operations of large language models, which play a crucial role in ChatGPT.

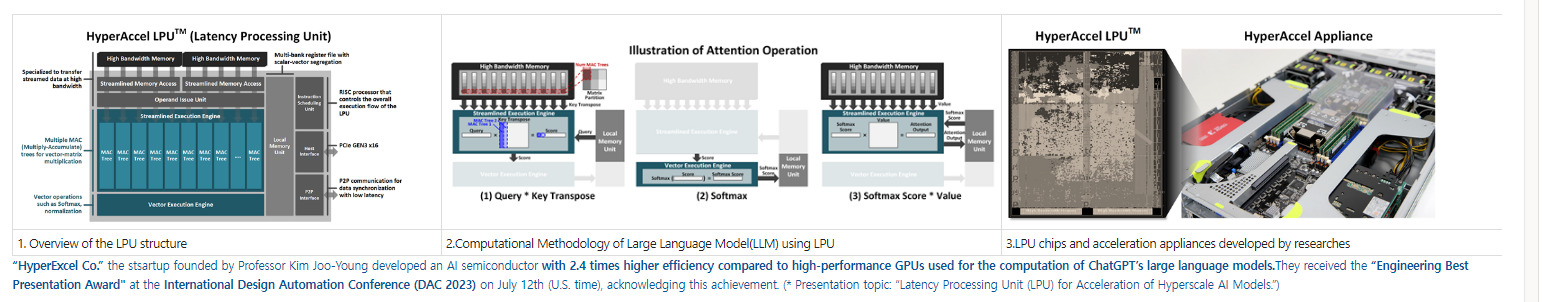

The developed AI semiconductor, named the ‘Latency Processing Unit (LPU),’ efficiently accelerates the inference operations of large language models. It incorporates a high-speed computing engine capable of maximizing memory bandwidth utilization and performing all necessary inference computations rapidly.

Additionally, it comes equipped with built-in networking capabilities, making it easily expandable with multiple accelerators. An acceleration appliance server based on the LPU achieved up to 50% higher performance and an approximately 2.4 times better performance-to-price ratio compared to a supercomputer based on the industry-leading high-performance GPU, the NVIDIA A100.
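To make the relationship between the two reported figures concrete, the short sketch below works out the relative price they would imply. It assumes, purely for illustration, that the "up to 50%" performance gain and the "approximately 2.4 times" performance-to-price gain describe the same configuration, which the announcement does not state. The function name `implied_cost_ratio` is hypothetical.

```python
def implied_cost_ratio(perf_ratio: float, perf_per_price_ratio: float) -> float:
    """Relative price implied by a performance ratio and a performance-per-price ratio.

    Since perf_per_price = perf / price, it follows that price = perf / perf_per_price.
    """
    return perf_ratio / perf_per_price_ratio


# Figures quoted in the announcement, both relative to the A100-based system.
# Assumption: both numbers refer to the same LPU configuration.
perf_ratio = 1.5            # "up to 50% higher performance"
perf_per_price_ratio = 2.4  # "approximately 2.4 times better performance-to-price ratio"

cost_ratio = implied_cost_ratio(perf_ratio, perf_per_price_ratio)
print(f"Implied relative price: {cost_ratio:.3f}")
```

Under that assumption, the LPU-based server would cost roughly five-eighths as much as the GPU-based system it was compared against. This is only a back-of-the-envelope reading of the published ratios, not a reported price.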

This advancement holds the potential to replace high-performance GPUs in data centers that are experiencing a rapid surge in demand for generative AI services. The research was conducted by HyperExcel Co., founded by Professor Kim Joo-Young, and received the “Engineering Best Presentation Award” at the International Design Automation Conference (DAC 2023) held in San Francisco on July 12th (U.S. time).

DAC is a prestigious international conference in the field of semiconductor design, particularly showcasing global semiconductor design technologies related to Electronic Design Automation (EDA) and Semiconductor Intellectual Property (IP).

DAC attracts participation from renowned semiconductor design companies such as Intel, NVIDIA, AMD, Google, Microsoft, Samsung, and TSMC, as well as top universities including Harvard, MIT, and Stanford.

Among the world’s notable semiconductor technologies, Professor Kim’s team stands out as the sole recipient of an award for AI semiconductor technology tailored for large language models.

This award recognizes, on the global stage, their AI semiconductor solution as a groundbreaking means of drastically reducing the substantial costs associated with inference operations for large language models.

Professor Kim stated, “With the new processor ‘LPU’ for future large AI computations, I intend to pioneer the global market and take a lead over big tech companies in terms of technological prowess.”
