Highlights


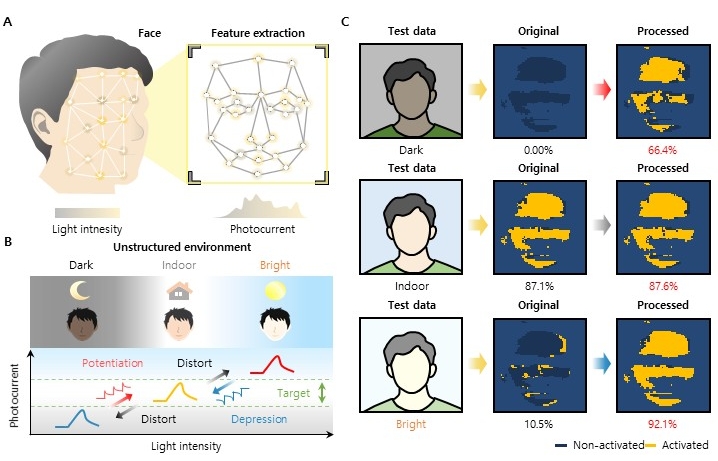

Our school’s research team has developed a next-generation image sensor that can autonomously adapt to drastic changes in illumination without any external image-processing pipeline. The technology is expected to be applicable to autonomous vehicles, intelligent robotics, security and surveillance, and other vision-centric systems.

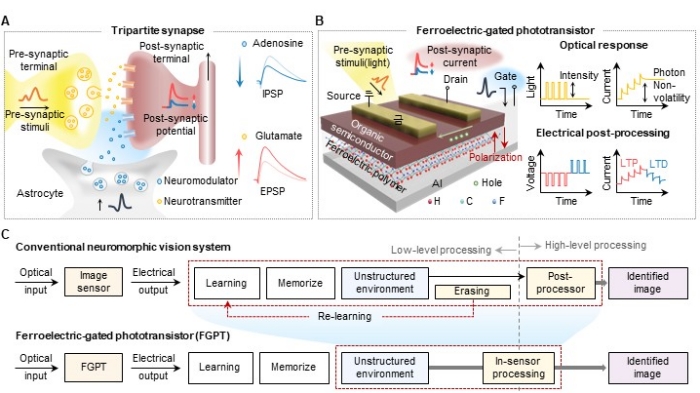

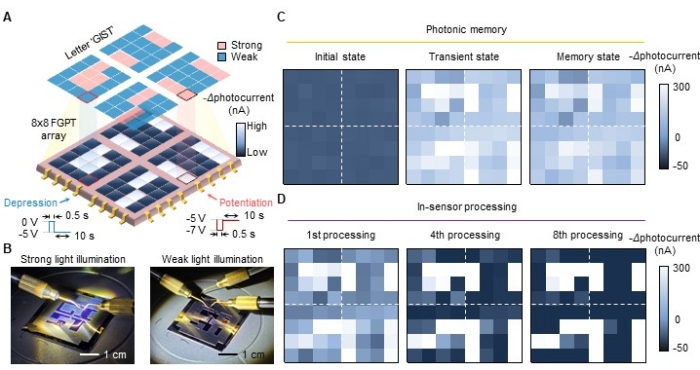

In this joint work, KAIST School of Electrical Engineering Professor Young Min Song and GIST Professor Dong-Ho Kang designed a ferroelectric-based optoelectronic device inspired by the brain’s neural architecture. The device integrates light sensing, memory (recording), and in-sensor processing within a single element, enabling a new class of image sensors.

As demand grows for “Visual AI,” there is an urgent need for high-performance visual sensors that operate robustly across diverse environments. Conventional CMOS-based image sensors* process each pixel’s signal independently; when scene brightness changes abruptly, they are prone to saturation, overexposure, or underexposure, leading to information loss.

*CMOS (complementary metal-oxide semiconductor) image sensors are fabricated using semiconductor processes and are widely used in digital cameras, smartphones, and other consumer electronics.

In particular, they struggle to adapt instantly to extremes such as day/night transitions, strong backlighting, or rapid indoor-outdoor changes, often requiring separate calibration or post-processing of the captured data.

To address these limitations, the team designed a ferroelectric-based image sensor that draws on biological neural structures and learning principles to remain adaptive under extreme environmental variation. By controlling the ferroelectric polarization state, the device can retain sensed optical information for extended periods and selectively amplify or suppress it. As a result, it performs contrast enhancement, illumination compensation, and noise suppression on-sensor, eliminating the need for complex post-processing. The team demonstrated stable face recognition across day/night and indoor/outdoor conditions solely via in-sensor processing, without reconstructing training datasets or performing additional training to handle unstructured environments.

The proposed device is also highly compatible with established AI training algorithms such as convolutional neural networks (CNNs).

CNNs are deep-learning architectures specialized for 2D data such as images and videos, which extract features through convolution operations and perform classification. They are widely used in visual tasks including face recognition, autonomous driving, and medical image analysis.
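As a rough illustration of the convolution operation mentioned above, the following minimal sketch slides a small kernel over a toy image in plain Python. The image, the kernel values, and the function name `conv2d_valid` are assumptions chosen for illustration; they are not taken from the research, and a real CNN learns its kernels during training rather than using hand-picked ones.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most CNN libraries, the kernel is not flipped (cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Toy 4x4 image: dark left half, bright right half (hypothetical data).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds where brightness increases from left to right.
edge_kernel = [[-1.0, 1.0]]

feature_map = conv2d_valid(image, edge_kernel)
for row in feature_map:
    print(row)
```

The feature map is nonzero only in the column where the dark and bright halves meet, which is the sense in which a convolution "extracts a feature" from the image.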

Professor Young Min Song commented, “This study expands ferroelectric devices, traditionally used as electrical memory, into the domains of neuromorphic vision and in-sensor computing. Going forward, we plan to advance this platform into next-generation vision systems capable of precisely sensing and processing wavelength, polarization, and phase of light.”

This research was supported by the Mid-career Researcher Program of the Ministry of Science and ICT and the National Research Foundation of Korea (NRF). The results were published online in the international journal “Advanced Materials” on July 28th.

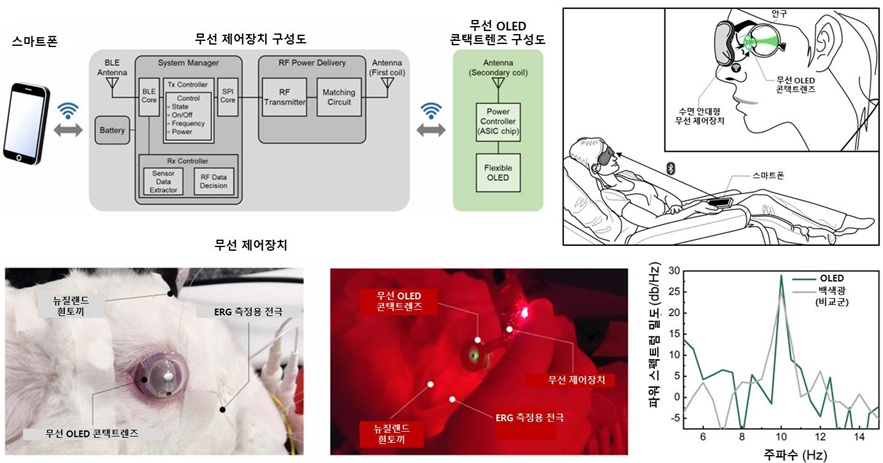

Electroretinography (ERG) is an ophthalmic diagnostic method used to determine whether the retina is functioning normally. It is widely employed for diagnosing hereditary retinal diseases or assessing retinal function decline.

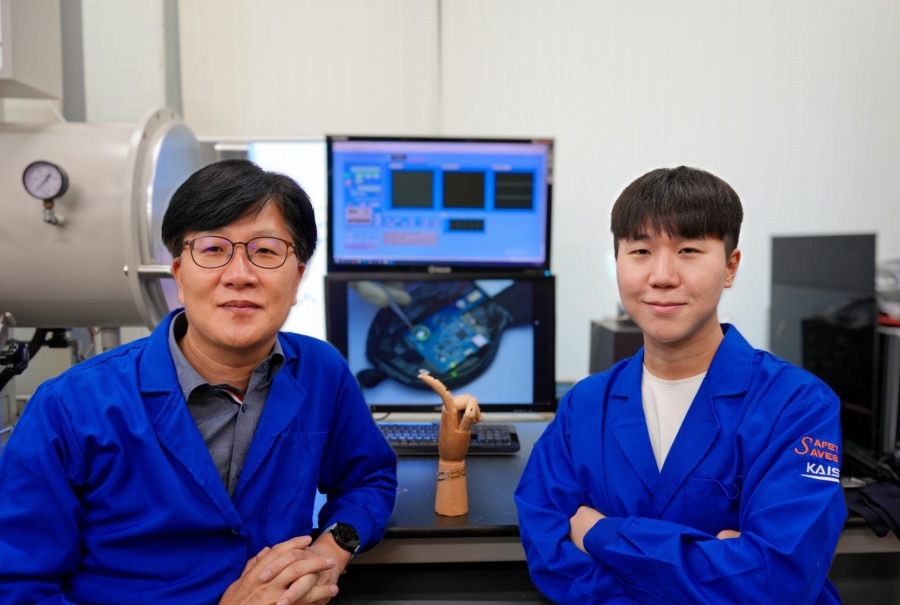

A team of Korean researchers has developed a next-generation wireless ophthalmic diagnostic technology that replaces the existing stationary, darkroom-based retinal testing method by incorporating an “ultrathin OLED” into a contact lens. This breakthrough is expected to have applications in diverse fields such as myopia treatment, ocular biosignal analysis, augmented-reality (AR) visual information delivery, and light-based neurostimulation.

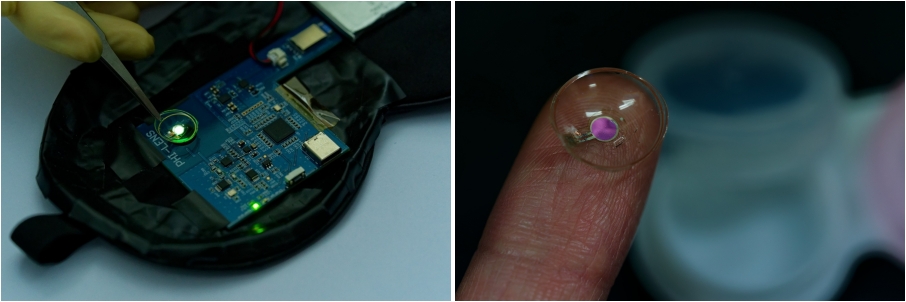

A research team led by Professor Seunghyup Yoo from the School of Electrical Engineering, in collaboration with Professor Se Joon Woo of Seoul National University Bundang Hospital, Professor Sei Kwang Hahn of POSTECH, the CEO of PHI Biomed Co., and the Electronics and Telecommunications Research Institute under the National Research Council of Science & Technology, has developed the world’s first wireless, contact lens-based wearable retinal diagnostic platform using organic light-emitting diodes (OLEDs).

This technology enables ERG simply by wearing the lens, eliminating the need for large specialized light sources and dramatically simplifying the conventional, complex ophthalmic diagnostic environment.

Traditionally, ERG requires the use of a stationary Ganzfeld device in a dark room, where patients must keep their eyes open and remain still during the test. This setup imposes spatial constraints and can lead to patient fatigue and compliance challenges.

To overcome these limitations, the joint research team integrated an ultrathin flexible OLED (approximately 12.5 μm thick, or 6–8 times thinner than a human hair) into a contact lens electrode for ERG. They also equipped it with a wireless power receiving antenna and a control chip, completing a system capable of independent operation.

For power transmission, the team adopted a wireless power transfer method using a 433 MHz resonant frequency suitable for stable wireless communication. This was also demonstrated in the form of a wireless controller embedded in a sleep mask, which can be linked to a smartphone, further enhancing practical usability.

While most smart contact lens–type light sources developed for ocular illumination have used inorganic LEDs, these rigid devices emit light almost from a single point, which can lead to excessive heat accumulation and thus limit the usable light intensity. In contrast, OLEDs are areal light sources and were shown to induce retinal responses even under low luminance conditions. In this study, under a relatively low luminance* of 126 nits, the OLED contact lens successfully induced stable ERG signals, producing diagnostic results equivalent to those obtained with existing commercial light sources.

*Luminance: A value indicating how brightly a surface or screen emits light; for reference, the luminance of a smartphone screen is about 300–600 nits (can exceed 1000 nits at maximum).

Animal tests confirmed that the surface temperature of a rabbit’s eye wearing the OLED contact lens remained below 27°C, avoiding corneal heat damage, and that the light-emitting performance was maintained even in humid environments—demonstrating its effectiveness and safety as an ERG diagnostic tool in real clinical settings.

Professor Seunghyup Yoo stated that “integrating the flexibility and diffusive light characteristics of ultrathin OLEDs into a contact lens is a world-first attempt,” and that “this research can help expand smart contact lens technology into on-eye optical diagnostic and phototherapeutic platforms, contributing to the advancement of digital healthcare technology.”

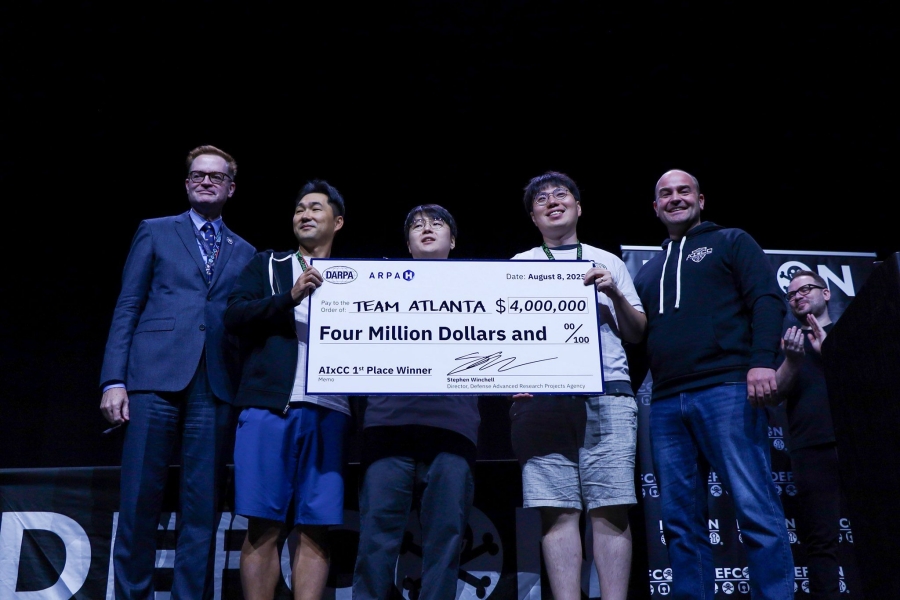

Professor Insu Yun’s research team at KAIST’s School of Electrical Engineering joined Samsung Research, POSTECH, and the Georgia Institute of Technology to form “Team Atlanta,” which won first place in the AI Cyber Challenge (AIxCC) hosted by the U.S. Defense Advanced Research Projects Agency (DARPA) at the world’s largest hacking conference, DEF CON 33, held in Las Vegas on August 8 (local time).

Led by Taesoo Kim of Samsung Research and Georgia Institute of Technology, Team Atlanta earned USD 4 million (approx. KRW 5.5 billion) in prize money, proving the excellence of AI-based autonomous cyber defense technology on the global stage.

The AI Cyber Challenge (AIxCC) is a two-year global competition jointly organized by DARPA and the U.S. Advanced Research Projects Agency for Health (ARPA-H). It challenges teams to use AI-based Cyber Reasoning Systems (CRS) to automatically analyze, detect, and fix software vulnerabilities. The total prize pool is USD 29.5 million, with USD 4 million awarded to the final winner.

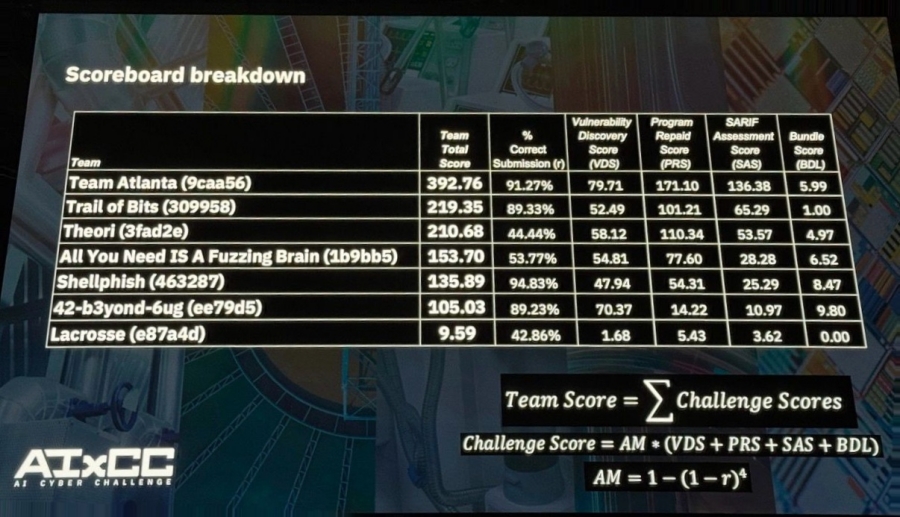

In the final round, Team Atlanta scored 392.76 points, beating second-place Trail of Bits by more than 170 points to secure a decisive victory.

The Cyber Reasoning System (CRS) developed by Team Atlanta successfully detected various types of vulnerabilities and patched many of them in real time during the competition. Among the 70 artificially injected vulnerabilities in the final, the seven finalist teams detected an average of 77% and patched 61% of them. In addition, they discovered 18 previously unknown vulnerabilities in real-world software, demonstrating the potential of AI security technology.

All CRS technologies, including that of the winning team, will be made open source and are expected to be used to strengthen the security of critical infrastructure such as hospitals, water systems, and power grids.

Professor Insu Yun said, “I am very pleased with this tremendous achievement. This victory demonstrates that Korea’s cybersecurity research has reached the highest global standards, and it was meaningful to showcase the capabilities of Korean researchers on the world stage. We will continue research that combines AI and security technologies to safeguard the digital safety of both our nation and the global community.”

KAIST President Kwang Hyung Lee stated, “This victory is another proof that KAIST is a global leader in the convergence of future cybersecurity and artificial intelligence. We will continue to provide full support so that our researchers can compete confidently on the world stage and achieve outstanding results.”

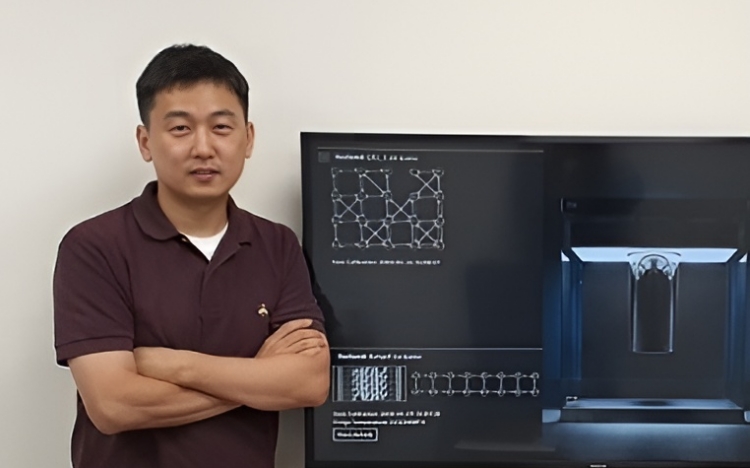

For the first time in the world, a Korean research team has devised and experimentally validated a “Measurement-protection (MP)” theory that enables stable quantum key distribution (QKD) without any measurement calibration.

Professor Joonwoo Bae’s team from our School, in collaboration with the Quantum Communications Laboratory at the Electronics and Telecommunications Research Institute (ETRI), has developed a new technology that enables stable quantum communication in moving environments such as satellites, ships, and drones.

Quantum communication is a high-precision technology that transmits information via the quantum states of light, but in wireless, moving environments it has suffered from severe instability due to weather and surrounding environmental changes. In particular, in rapidly changing settings like the sky, sea, or air, reliably delivering quantum states has been extremely challenging.

This research is significant because it overcomes those limitations and opens up the possibility of exchanging quantum information stably even while in motion. The technology is expected to be applicable to secure communications between satellites and ground stations, as well as to drone and maritime communications.

Quantum key distribution (QKD) is a technology that uses the principles of quantum mechanics to distribute cryptographic keys that are fundamentally immune to eavesdropping. Existing QKD protocols required repeated recalibration of the receiver’s measurement devices whenever the channel conditions changed.

However, in this work the team proved that, with only simple local operations, stable key distribution is possible regardless of channel conditions. The theory was developed by Professor Bae’s group, and the experiments were carried out by ETRI researchers.

To generate single-photon pulses, the researchers used a 100 MHz light source: a vertical-cavity surface-emitting laser (VCSEL). A VCSEL is a type of semiconductor laser whose beam is emitted vertically from the top surface of the chip.

They emulated a long-distance free-space link with up to 30 dB loss over a 10 m path and inserted various polarization noise to simulate a wireless environment. Even under these harsh conditions, they confirmed that quantum transmission and measurement remained reliable. Both the transmitter and receiver were equipped with three waveplates each to implement the required local operations.

As a result, they demonstrated that an MP-based QKD system can raise the system’s maximum tolerable quantum bit error rate (QBER), the fraction of transmitted qubits received in error, to as high as 20.7%, compared with conventional approaches.

In other words, if the received QBER is below 20.7%, stable quantum key distribution is possible without any measurement calibration. This establishes the foundation for implementing reliable quantum communication across a variety of noisy channel environments. The team believes this achievement can be applied to scenarios similar to satellite-ground links.
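The threshold check described above can be sketched in a few lines. This is a minimal illustration, assuming the QBER is estimated by directly comparing a sample of sent and received bits; the function names and the example bit strings are hypothetical, and only the 20.7% figure comes from the article.

```python
MAX_TOLERABLE_QBER = 0.207  # threshold reported in the article


def qber(sent_bits, received_bits):
    """Return the fraction of positions where the received bit differs from the sent bit."""
    if not sent_bits or len(sent_bits) != len(received_bits):
        raise ValueError("bit sequences must be non-empty and of equal length")
    errors = sum(s != r for s, r in zip(sent_bits, received_bits))
    return errors / len(sent_bits)


def key_distribution_possible(error_rate, threshold=MAX_TOLERABLE_QBER):
    """True when the measured error rate is below the tolerable threshold."""
    return error_rate < threshold


# Hypothetical sample of ten compared bits, two of which were flipped in transit.
sent = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]
received = [0, 1, 0, 0, 1, 0, 1, 1, 1, 0]

rate = qber(sent, received)
print(f"QBER = {rate:.1%}, key distribution possible: {key_distribution_possible(rate)}")
```

Real systems estimate the QBER from a much larger sample of sifted key bits; the ten-bit example only shows how the ratio and the comparison are formed.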

The study was published on June 25 in the IEEE’s prestigious communications journal, “Journal on Selected Areas in Communications”, with ETRI’s Heasin Ko and KAIST’s Spiros Kechrimparis serving as co-first authors.

Professor Bae commented, “This result will be a decisive turning point in bringing reliable quantum-secure communication into practical reality, even under complex environments.”

This research was supported by the Ministry of Science and ICT and the Institute for Information & Communications Technology Planning & Evaluation (IITP) through the “Core Technology Development for Quantum Internet,” “ETRI R&D Support Project,” “Quantum Cryptography Communication Industry Expansion and Next-Generation Technology Development Project,” “Quantum Cryptography Communication Integration and Transmission Technology Advancement Project,” and “SW Computing Industrial Core Technology Development Project”; by the National Research Foundation of Korea through the “Quantum Common-Base Technology Development Project” and “Mid-Career Researcher Program”; and as part of the Future Space Education Center initiative of the Korea Aerospace Agency.

Sihyun Joo, a graduate of our School’s Artificial Intelligence & Machine Learning Lab (U-AIM) under the supervision of Professor Chang D. Yoo, has been promoted to Executive Director at Hyundai Motor Group, the youngest person in that role.

Mr. Joo obtained his master’s degree in artificial intelligence and machine learning from the U-AIM Lab in February 2011. Following graduation, he joined Hyundai Motor Group, where he has served as the Robotics Intelligence Software Team Leader (Principal Researcher). Throughout his career, he has demonstrated outstanding research capabilities and leadership, significantly contributing to the advancement of robotics and software technologies within the group. This recent promotion recognizes the deep trust and respect he has earned within the organization.

His current research focuses on AI-driven software technologies for robotic intelligence, particularly vision and voice-based AI models. He has implemented and validated various robotic systems and services such as indoor and outdoor delivery, patrol, and factory maintenance, while also working towards enhancing the mass production readiness of these technologies. Earlier in his career, he successfully developed deep learning and machine learning-based gesture and handwriting recognition technologies, which were effectively integrated into production vehicles.

Mr. Joo is expected to play a crucial role in strengthening Hyundai Motor Group’s competitiveness in robotics and AI technologies.

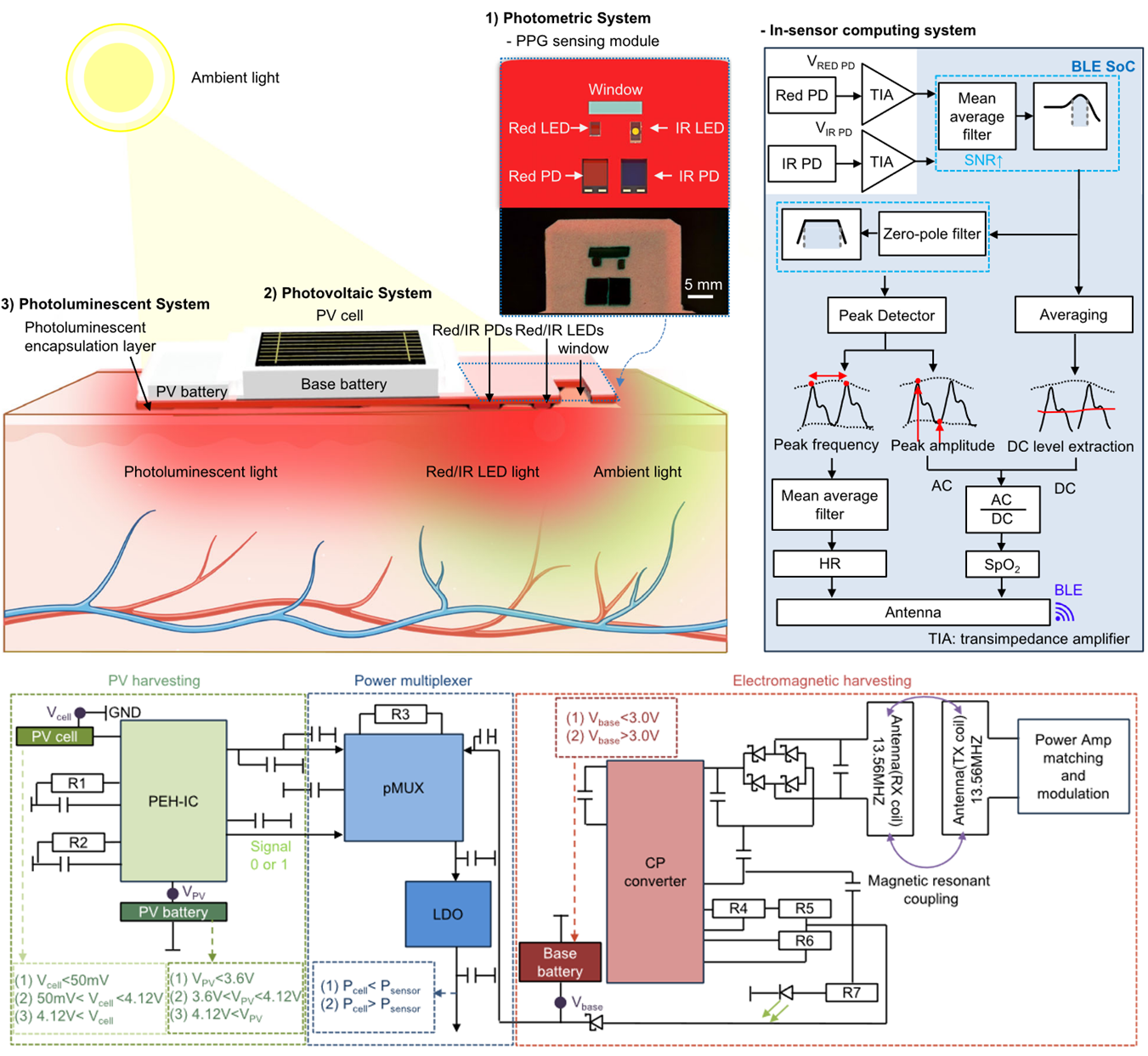

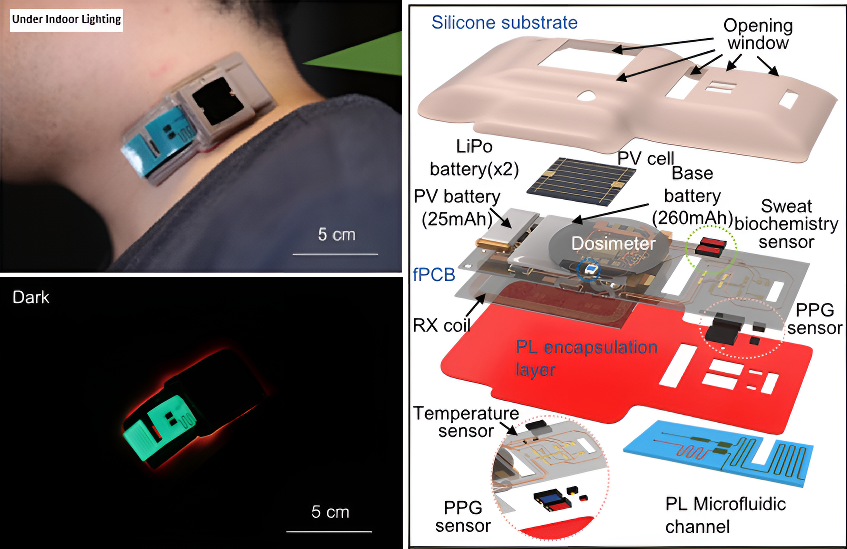

Miniaturization and weight reduction of medical wearable devices for continuous health monitoring such as heart rate, blood oxygen saturation, and sweat component analysis remain major challenges. In particular, optical sensors consume a significant amount of power for LED operation and wireless transmission, requiring heavy and bulky batteries. To overcome these limitations, KAIST EE researchers have developed a next-generation wearable platform that enables 24-hour continuous measurement by using ambient light as an energy source and optimizing power management according to the power environment.

The first core technology, the Photometric Method, is a technique that adaptively adjusts LED brightness depending on the intensity of the ambient light source. By combining ambient natural light with LED light to maintain a constant total illumination level, it automatically dims the LED when natural light is strong and brightens it when natural light is weak.

Whereas conventional sensors had to keep the LED on at a fixed brightness regardless of the environment, this technology optimizes LED power in real time according to the surrounding environment. Experimental results showed that it reduced power consumption by as much as 86.22% under sufficient lighting conditions.
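The dimming rule behind the Photometric Method can be sketched as follows. This is a minimal illustration, assuming light levels are expressed in arbitrary common units and that the LED simply makes up the shortfall between ambient light and a fixed target; the function name, the target value, and the LED limit are hypothetical and are not taken from the paper.

```python
def led_level(ambient, target, led_max):
    """Return the LED contribution needed so that ambient + LED reaches `target`.

    The result is clamped to [0, led_max]: the LED switches off when ambient
    light alone meets the target and never exceeds its maximum output.
    """
    shortfall = target - ambient
    return min(max(shortfall, 0.0), led_max)


TARGET = 100.0   # desired total illumination (arbitrary units, assumed)
LED_MAX = 100.0  # maximum LED output (arbitrary units, assumed)

levels = {
    ambient: led_level(ambient, TARGET, LED_MAX)
    for ambient in (0.0, 40.0, 100.0, 150.0)
}
for ambient, level in levels.items():
    print(f"ambient={ambient:6.1f} -> LED={level:6.1f}")
```

In darkness the LED runs at full output, and it is driven less as ambient light rises, which is where the power saving under bright conditions comes from.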

The second is the Photovoltaic Method using high-efficiency multijunction solar cells. This goes beyond simple solar power generation to convert light in both indoor and outdoor environments into electricity. In particular, the adaptive power management system automatically switches among 11 different power configurations based on ambient conditions and battery status to achieve optimal energy efficiency.

The third innovative technology is the Photoluminescent Method. By mixing strontium aluminate microparticles* into the sensor’s silicone encapsulation structure, light from the surroundings is absorbed and stored during the day and slowly released in the dark. As a result, after being exposed to 500 W/m² of sunlight for 10 minutes, continuous measurement is possible for 2.5 minutes even in complete darkness.

*Strontium aluminate microparticles: A photoluminescent material used in glow-in-the-dark paint or safety signs, which absorbs light and emits it in the dark for an extended time.

These three technologies work complementarily—during bright conditions, the first and second methods are active, and in dark conditions, the third method provides additional support—enabling 24-hour continuous operation.

The research team applied this platform to various medical sensors to verify its practicality. The photoplethysmography sensor monitors heart rate and blood oxygen saturation in real time, allowing early detection of cardiovascular diseases. The blue light dosimeter accurately measures blue light, which causes skin aging and damage, and provides personalized skin protection guidance. The sweat analysis sensor uses microfluidic technology to simultaneously analyze salt, glucose, and pH in sweat, enabling real-time detection of dehydration and electrolyte imbalances.

Additionally, introducing in-sensor data computing significantly reduced wireless communication power consumption. Previously, all raw data had to be transmitted externally, but now only the necessary results are calculated within the sensor and transmitted, reducing data transmission requirements from 400 B/s to 4 B/s, a 100-fold decrease.
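The arithmetic behind the 100-fold reduction can be illustrated with a small sketch. The sample format here is an assumption made only so the numbers match the article: one second of raw data is modeled as 100 samples of 4 bytes each, and the "result" computed in the sensor is modeled as a single 4-byte value (a plain mean standing in for whatever quantity the real device computes).

```python
import struct


def raw_payload(samples):
    """Pack every raw sample as a 4-byte little-endian float."""
    return struct.pack(f"<{len(samples)}f", *samples)


def summary_payload(samples):
    """Pack only one computed result (here, the mean) as a 4-byte float."""
    mean = sum(samples) / len(samples)
    return struct.pack("<f", mean)


# Hypothetical one-second window of 100 sensor readings.
samples = [0.5 + 0.01 * (i % 10) for i in range(100)]

raw = raw_payload(samples)          # what would be sent without in-sensor computing
summary = summary_payload(samples)  # what is sent with in-sensor computing
reduction = len(raw) // len(summary)

print(f"raw: {len(raw)} B/s, summary: {len(summary)} B/s, reduction: {reduction}x")
```

Because radio transmission is typically among the most power-hungry operations in a wearable, sending one value instead of a hundred directly cuts the energy spent on communication.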

To validate performance, the research team tested the device on healthy adult subjects in four different environments: bright indoor lighting, dim lighting, infrared lighting, and complete darkness. The results showed measurement accuracy equivalent to that of commercial medical devices in all conditions. A mouse model experiment confirmed accurate blood oxygen saturation measurement in hypoxic conditions.

Professor Kyeongha Kwon of KAIST, who led the research, stated, “This technology will enable 24-hour continuous health monitoring, shifting the medical paradigm from treatment-centered to prevention-centered,” further stating that “cost savings through early diagnosis as well as strengthened technological competitiveness in the next-generation wearable healthcare market are anticipated.”

This research was published on July 1 in the international journal Nature Communications, with Do Yun Park, a doctoral student in the AI Semiconductor Graduate Program, as co–first author.

※ Paper title: Adaptive Electronics for Photovoltaic, Photoluminescent and Photometric Methods in Power Harvesting for Wireless and Wearable Sensors

※ DOI: https://doi.org/10.1038/s41467-025-60911-1

※ URL: https://www.nature.com/articles/s41467-025-60911-1

This research was supported by the National Research Foundation of Korea (Outstanding Young Researcher Program and Regional Innovation Leading Research Center Project), the Ministry of Science and ICT and Institute of Information & Communications Technology Planning & Evaluation (IITP) AI Semiconductor Graduate Program, and the BK FOUR Program (Connected AI Education & Research Program for Industry and Society Innovation, KAIST EE).

Our department’s Professor Young Min Song, in collaboration with Professor Hyeon‑Ho Jeong’s research team at GIST School of EECS, has developed a replication‑impossible security authentication technology based on nature‑inspired nanophotonic structures.

This technology can be easily embedded into physical products such as ID cards or QR codes and, being visually indistinguishable from existing items, provides strong tamper‑proof protection without compromising design. It holds broad potential for applications requiring genuine‑product authentication, including premium consumer goods, pharmaceuticals, and electronics.

Until now, anti‑tampering measures like QR codes and barcodes have been limited by their ease of replication and the difficulty of assigning truly unique identifiers to each item. A recently spotlighted solution is the physically unclonable function (PUF)*, which leverages the natural randomness arising during manufacturing to grant each device a unique physical signature, thereby enhancing security and authentication reliability.

However, existing PUF technologies, while achieving randomness and uniqueness, have struggled with color consistency control and are easily identified (and thus attacked) from the outside.

* Physically Unclonable Function (PUF): A technique that uses physical variations formed during the manufacturing process to generate a unique authentication key. Because these variations are inherently random and unclonable, even if the authentication data is stolen, constructing the exact hardware for authentication is effectively impossible.

In response, the research team turned its attention to the unique phenomenon of structural color* observed in natural organisms. For example, the wings of butterflies, feathers of birds, and leaves of seaweed all contain nanoscale structures arranged in a form of quasi‑order*, a pattern that is neither completely ordered nor entirely random. These structures appear uniformly colored to the naked eye, but internally contain subtle randomness that enables survival functions such as camouflage, communication, and predator evasion.

* Quasi‑order: A structural arrangement that is neither fully ordered nor fully disordered. In nature, nano‑scale elements are arranged in a pattern that blends order with randomness—found, for example, in butterfly wings, seaweed leaves, and bird feathers—producing uniform color at a macroscopic scale while embedding unique optical features.

* Structural Color: Color produced not by pigments but by nano‑meter‑scale structures that interact with light, commonly seen in living organisms. Classic examples include the iridescent wings of butterflies and the feathers of peacocks.

The researchers drew inspiration from these natural phenomena. They deposited a thin dielectric layer of HfO₂ onto a metallic mirror and then used electrostatic self‑assembly to arrange gold nanoparticles (tens of nanometers in size) into a quasi‑ordered plasmonic metasurface*. Visually, this nanostructure exhibits a uniform reflection color; under a high‑magnification optical microscope, however, each region reveals a distinct random scattering pattern, an “optical fingerprint*,” that is impossible to replicate.

* Plasmonic Metasurface: An ultrathin optical structure comprising precisely arranged metallic nano‑elements that exploit surface plasmon resonance to locally enhance electromagnetic fields, enabling far more compact and precise light–matter interaction than conventional optics.

* Optical Fingerprint: A unique pattern of reflection, scattering, and interference produced when light interacts with a micro‑ or nano‑scale structure. Because these patterns arise from random structural variations that cannot be exactly duplicated, they serve as a practically unclonable security feature.

The team confirmed that leveraging these nano‑scale stochastic patterns enhances PUF performance compared to conventional approaches.

In a hypothetical hacking scenario where an attacker attempts to recreate the device, the time required to decrypt the optical fingerprint would exceed the age of the Earth, rendering replication virtually impossible. Through demonstration experiments on pharmaceuticals, semiconductors, and QR codes, the researchers validated the technology’s practical industrial applicability.

Analysis of over 500 generated PUF keys showed an average bit‑value distribution of 0.501, which is remarkably close to the ideal balance of 0.5, and an average inter‑key Hamming distance of 0.494, demonstrating high uniqueness and reliability. Additionally, the scattering patterns remained stable under various environmental stresses, including high temperature, high humidity, and friction, confirming excellent durability.
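The two statistics quoted above, bit-value distribution and inter-key Hamming distance, can be computed as in the following sketch. The keys here are pseudo-random stand-ins generated in software, and the key length and key count are assumptions; the sketch shows only how the metrics are defined, not how the team extracted keys from scattering patterns.

```python
import random
from itertools import combinations


def uniformity(key):
    """Fraction of 1-bits in a key; 0.5 means zeros and ones are balanced."""
    return sum(key) / len(key)


def hamming_fraction(key_a, key_b):
    """Normalized Hamming distance: fraction of bit positions where two keys differ."""
    return sum(a != b for a, b in zip(key_a, key_b)) / len(key_a)


# Stand-in keys: 50 pseudo-random 256-bit keys (sizes chosen for illustration).
rng = random.Random(0)
keys = [[rng.randint(0, 1) for _ in range(256)] for _ in range(50)]

avg_uniformity = sum(uniformity(k) for k in keys) / len(keys)

pairs = list(combinations(keys, 2))
avg_inter_hd = sum(hamming_fraction(a, b) for a, b in pairs) / len(pairs)

print(f"average bit-value distribution: {avg_uniformity:.3f}")
print(f"average inter-key Hamming distance: {avg_inter_hd:.3f}")
```

For ideally random and mutually independent keys both values approach 0.5, which is why figures of 0.501 and 0.494 indicate that the keys are balanced and hard to predict from one another.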

Professor Young Min Song emphasized, “Whereas conventional security labels can be deformed by even minor damage, our technology secures both structural stability and unclonability. In particular, by separating visible color information from the invisible unique‑key information, it offers a new paradigm in security authentication.”

Professor Hyeon‑Ho Jeong added, “By reproducing structures in which order and disorder coexist in nature through nanotechnology, we have created optical information that appears identical externally yet is fundamentally unclonable. This technology can serve as a powerful anti‑counterfeiting measure across diverse fields, from premium consumer goods to pharmaceutical authentication and even national security.”

This work, guided by Professor Young Min Song (KAIST School of Electrical Engineering) and Professor Hyeon‑Ho Jeong (GIST School of EECS), and carried out by Gyurin Kim, Doeun Kim, JuHyeong Lee, Juhwan Kim, and Se‑Yeon Heo, was supported by the Ministry of Science and ICT and the National Research Foundation’s Early‑Career Research Program, the Regional Innovation Mega Project in R&D Special Zones, and the GIST‑MIT AI International Collaboration Project.

The results were published online on July 8, 2025, in the international journal Nature Communications.

* Paper title: Quasi‑ordered plasmonic metasurfaces with unclonable stochastic scattering for secure authentication

With recent advancements in artificial intelligence’s ability to understand both language and visual information, there is growing interest in Physical AI, AI systems that can comprehend high-level human instructions and perform physical tasks such as object manipulation or navigation in the real world. Physical AI integrates large language models (LLMs), vision-language models (VLMs), reinforcement learning (RL), and robot control technologies, and is expected to become a cornerstone of next-generation intelligent robotics.

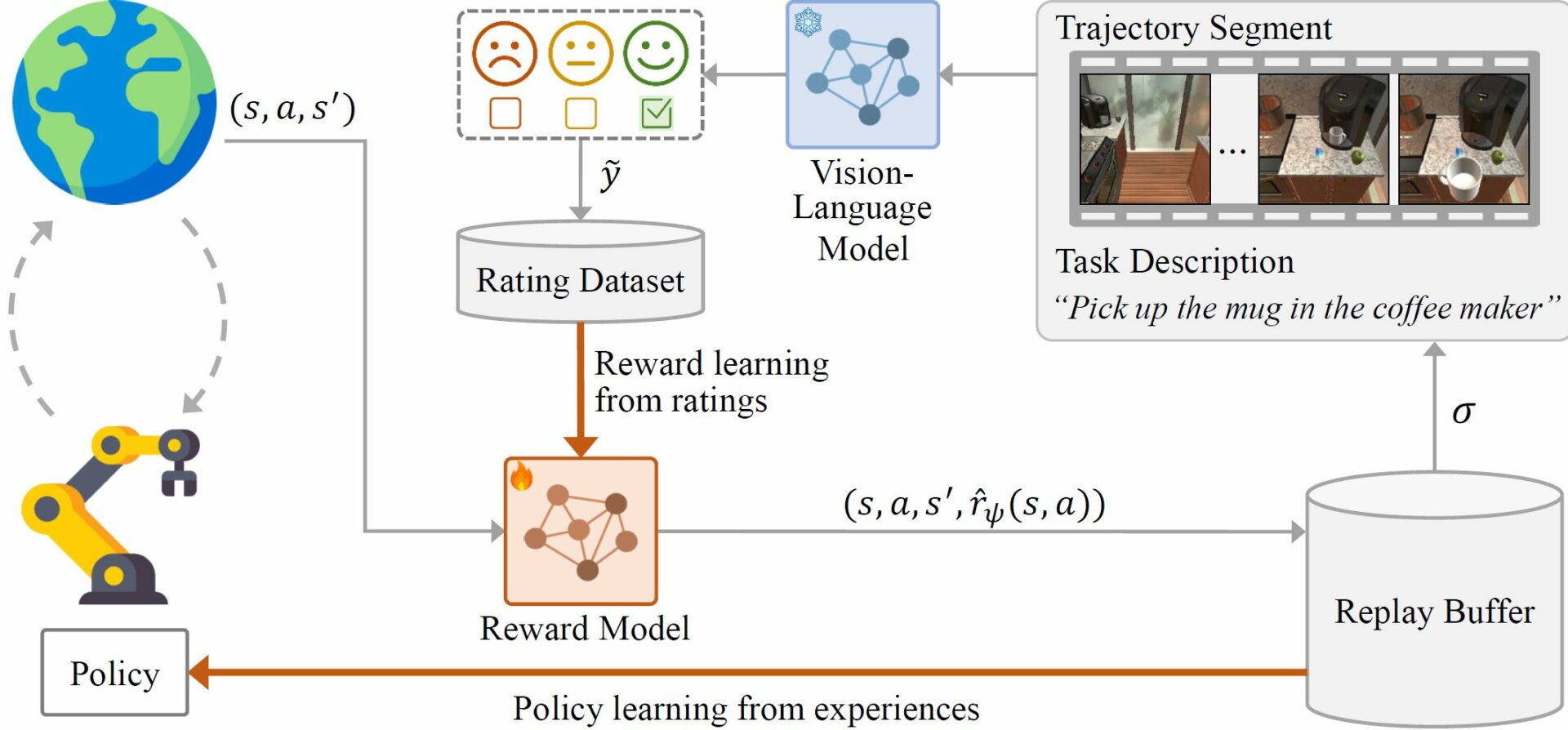

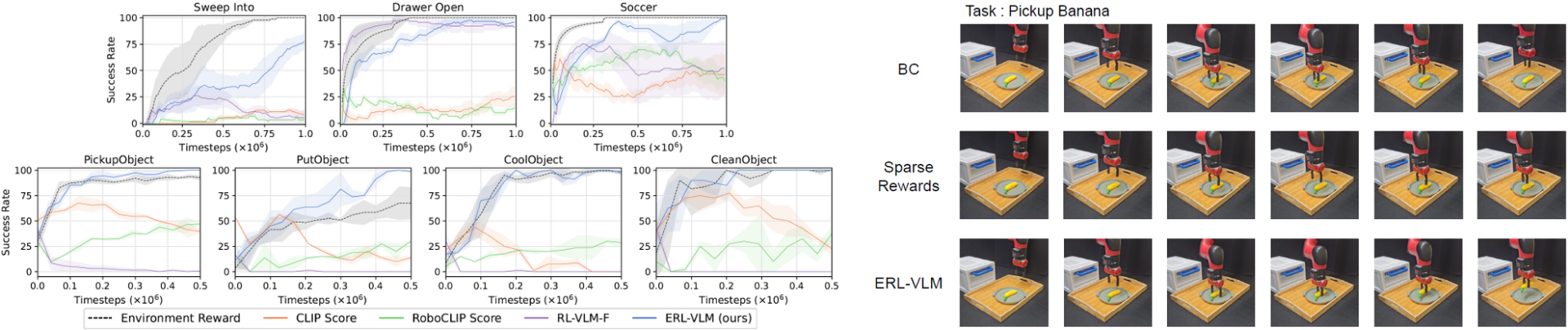

To advance research in Physical AI, an EE research team led by Professor Chang D. Yoo (U-AIM: Artificial Intelligence & Machine Learning Lab) has developed two novel reinforcement learning frameworks leveraging large vision-language models. The first, introduced in ICML 2025, is titled ERL-VLM (Enhancing Rating-based Learning to Effectively Leverage Feedback from Vision-Language Models). In this framework, a VLM provides absolute rating-based feedback on robot behavior, which is used to train a reward function. That reward is then used to learn a robot control AI model. This method removes the need for manually crafting complex reward functions and enables the efficient collection of large-scale feedback, significantly reducing the time and cost required for training.

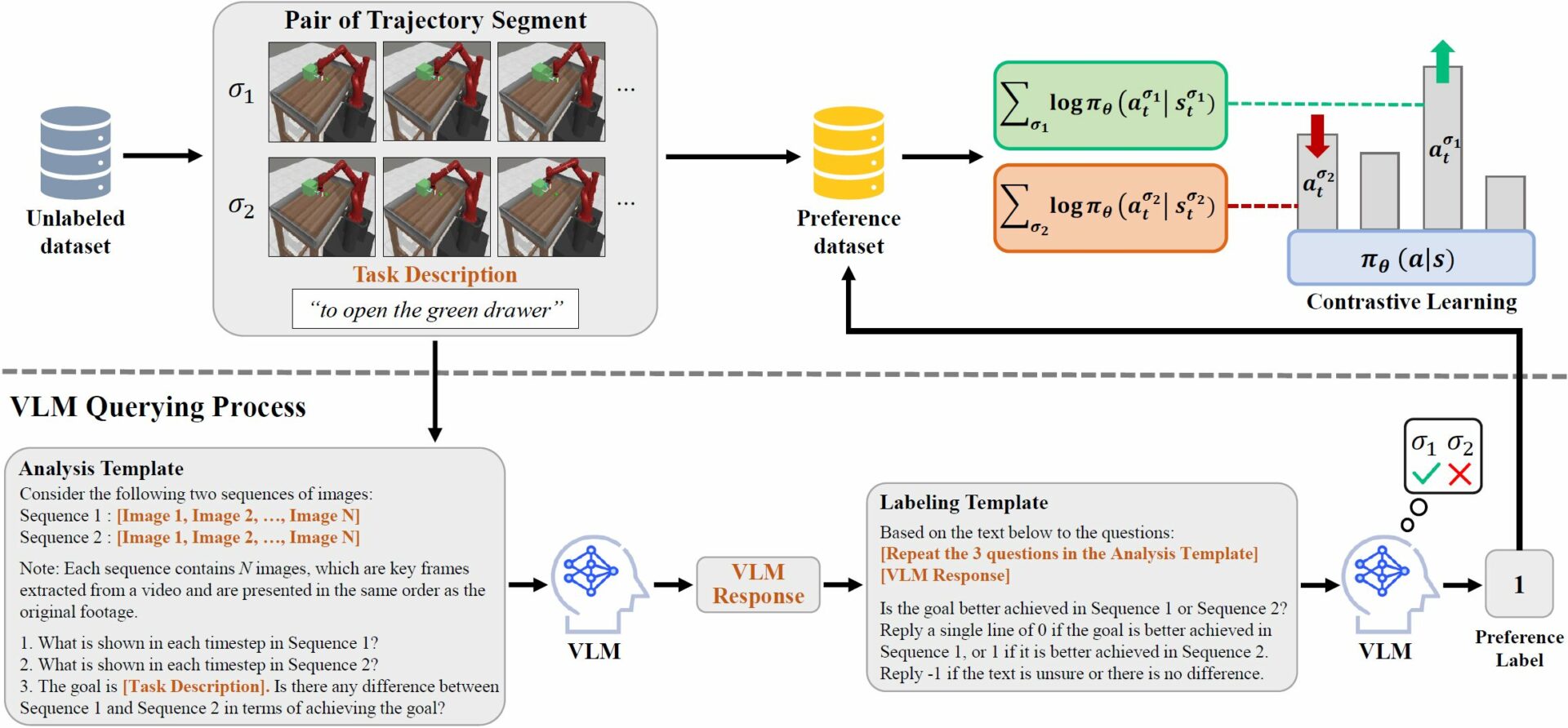

The second, published in IROS 2025, is titled PLARE (Preference-based Learning from Vision-Language Model without Reward Estimation). Unlike previous approaches, PLARE skips reward modeling entirely and instead uses pairwise preference feedback from a VLM to directly train the robot control AI model. This makes the training process simpler and more computationally efficient, without compromising performance.
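To make the idea of learning from pairwise preferences without a reward model more concrete, here is a generic sketch of a Bradley-Terry style preference loss. The article does not give PLARE's actual objective, so this is not the authors' formulation: the function name, the `beta` parameter, and the use of a per-trajectory policy score (for example, a summed action log-likelihood) are all assumptions made for illustration.

```python
import math


def preference_loss(score_preferred, score_rejected, beta=1.0):
    """Negative log-probability that the preferred trajectory wins.

    Computes -log(sigmoid(beta * (score_preferred - score_rejected)))
    in a numerically stable form.
    """
    margin = beta * (score_preferred - score_rejected)
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical policy scores for two trajectory segments that a VLM compared.
agrees = preference_loss(score_preferred=2.0, score_rejected=0.0)
disagrees = preference_loss(score_preferred=0.0, score_rejected=2.0)
undecided = preference_loss(score_preferred=1.0, score_rejected=1.0)

print(f"policy agrees with VLM:    {agrees:.3f}")
print(f"policy disagrees with VLM: {disagrees:.3f}")
print(f"policy is indifferent:     {undecided:.3f}")
```

Minimizing a loss of this shape pushes the policy to score the VLM-preferred behavior higher than the rejected one, so the preference labels shape the policy directly and no separate reward function has to be fitted first.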

Both frameworks demonstrated superior performance not only in simulation environments but also in real-world experiments using physical robots, achieving higher success rates and more stable behavior than existing methods—thereby verifying their practical applicability.

This research provides a more efficient and practical approach to enabling robots to understand and act upon human language instructions by leveraging large vision-language models, bringing us a step closer to the realization of Physical AI. Moving forward, Professor Chang D. Yoo’s team plans to continue advancing research in robot control, vision-language-based interaction, and scalable feedback learning to further develop key technologies in Physical AI.