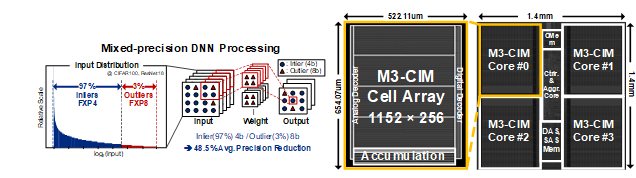

A mixed-mode Computing-in-Memory (CIM) processor for mixed-precision Deep Neural Network (DNN) processing is proposed. Because previous CIM processors handle multi-bit data bit-serially, they could not exploit the energy-efficient computation of mixed-precision DNNs. This paper proposes an energy-efficient mixed-mode CIM processor with two key features: 1) Mixed-Mode Mixed-precision CIM (M3-CIM), which improves energy efficiency by 55.46%, and 2) Digital-CIM for in-memory MAC operations, which increases the throughput of M3-CIM. The proposed CIM processor was simulated in 28nm CMOS technology and occupies 1.96 mm². It achieves a state-of-the-art energy efficiency of 161.6 TOPS/W with 72.8% accuracy on ImageNet (ResNet50).
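
To illustrate what bit-serial processing of multi-bit data means, here is a generic bit-serial multiply-accumulate (MAC) sketch in Python. Each cycle consumes one bit of every input, and the resulting partial sum is shifted by that bit's position and accumulated, so the number of cycles grows with the input bit width. This is a textbook-style illustration under stated assumptions (unsigned inputs, signed integer weights, a hypothetical function name `bit_serial_mac`). It is not the M3-CIM or Digital-CIM circuit described in the paper.

```python
from typing import Sequence


def bit_serial_mac(inputs: Sequence[int], weights: Sequence[int],
                   input_bits: int) -> tuple[int, int]:
    """Hypothetical helper: compute sum(x * w) one input bit plane per cycle.

    Assumes unsigned inputs that fit in `input_bits` bits and signed weights.
    Returns (dot_product, cycles_used).
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    if any(x < 0 or x >= (1 << input_bits) for x in inputs):
        raise ValueError("each input must be an unsigned value of input_bits bits")

    acc = 0
    cycles = 0
    for b in range(input_bits):  # process bit planes LSB first
        # Partial sum for bit plane b: add each weight whose input has bit b set.
        partial = sum(w for x, w in zip(inputs, weights) if (x >> b) & 1)
        # Weight the partial sum by 2**b and accumulate.
        acc += partial << b
        cycles += 1
    return acc, cycles
```

For example, `bit_serial_mac([3, 1, 2], [2, -1, 4], 2)` returns `(13, 2)`, while running the same data at 8-bit input precision returns `(13, 8)`: the result is identical, but the cycle count scales with the bit width the hardware is configured to process.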

Related papers:

Wooyoung Jo, Sangjin Kim, Juhyoung Lee, Soyeon Um, Zhiyong Li, and Hoi-jun Yoo, “A 161.6 TOPS/W Mixed-mode Computing-in-Memory Processor for Energy-Efficient Mixed-Precision Deep Neural Networks”, Int’l Symp. on Circuits and Systems (ISCAS), May 2022.