Title: A 26.55TOPS/W Explainable AI Processor with Dynamic Workload Allocation and Heat Map Compression/Pruning

Venue: 2023 IEEE Custom Integrated Circuits Conference (CICC)

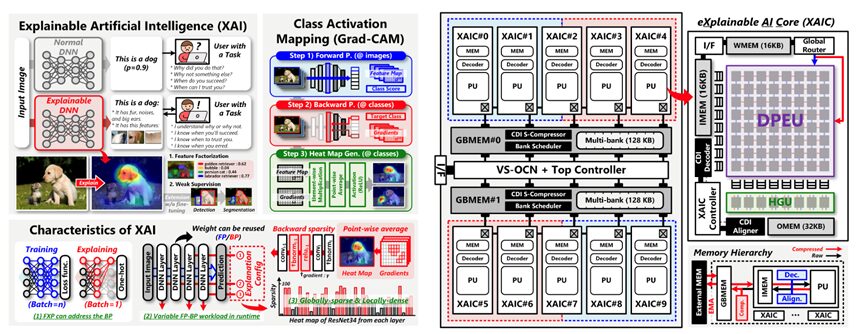

Abstract: Explainable AI aims to provide a clear, human-understandable explanation of a model's decision, thereby building more reliable systems. However, the explanation task differs from the well-known inference and training processes because it involves interaction with the user. Consequently, existing inference and training accelerators are inefficient when processing explainable AI on edge devices. This paper introduces the explainable processing unit (EPU), the first hardware accelerator designed for explainable AI workloads. The EPU uses a novel data compression format for the output heat maps and intermediate gradients, which improves overall system performance by reducing both memory footprint and external memory access. Its sparsity-free computing core handles input sparsity efficiently with negligible control overhead, boosting throughput by up to 9.48x. The EPU also employs dynamic workload scheduling with a customized on-chip network for the distinct inference and explanation tasks, maximizing internal data reuse and thereby reducing external memory access by 63.7%. Furthermore, the EPU incorporates point-wise gradient pruning, which, combined with the proposed compression format, reduces the size of heat maps by a factor of 7.01x. Finally, the EPU chip, fabricated in a 28nm CMOS process, achieves state-of-the-art area and energy efficiency of 112.3 GOPS/mm² and 26.55 TOPS/W, respectively.
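The abstract does not specify the pruning rule or the compression format, so the following is only a rough sketch of why pruning and a sparse encoding reinforce each other. It assumes a simple magnitude threshold for the point-wise pruning and a generic bitmap-plus-non-zeros encoding; neither is claimed to be the EPU's actual scheme, and the function names, the threshold, and the 8-bit value width are all hypothetical.

```python
def prune_pointwise(values, threshold):
    """Zero every element whose magnitude is below the threshold (assumed rule)."""
    return [v if abs(v) >= threshold else 0 for v in values]


def compress_bitmap(values):
    """Generic sparse encoding: one mask bit per element plus the non-zero values."""
    mask = [1 if v != 0 else 0 for v in values]
    nonzeros = [v for v in values if v != 0]
    return mask, nonzeros


def decompress_bitmap(mask, nonzeros):
    """Rebuild the dense list by placing non-zeros where the mask bit is set."""
    remaining = iter(nonzeros)
    return [next(remaining) if bit else 0 for bit in mask]


def compressed_bits(mask, nonzeros, value_bits=8):
    """Storage cost in bits: the mask plus value_bits per stored non-zero."""
    return len(mask) + value_bits * len(nonzeros)


# Toy flattened heat map with made-up values, for illustration only.
heat_map = [0, 3, -1, 0, 42, 0, 2, -57, 0, 1, 0, 0, 19, -2, 0, 0]

pruned = prune_pointwise(heat_map, threshold=4)
mask, nonzeros = compress_bitmap(pruned)

dense_bits = 8 * len(heat_map)
ratio = dense_bits / compressed_bits(mask, nonzeros)
print(f"non-zeros kept: {len(nonzeros)}, compression ratio: {ratio:.2f}x")
```

Pruning removes the small-magnitude entries, so the encoder stores fewer values while the mask cost stays fixed. The ratio on this toy input says nothing about the 7.01x figure reported for the chip.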

Main Figure: