Title: AdapTiV: Sign-Similarity based Image-Adaptive Token Merging for Vision Transformer Acceleration

Venue: MICRO 2024

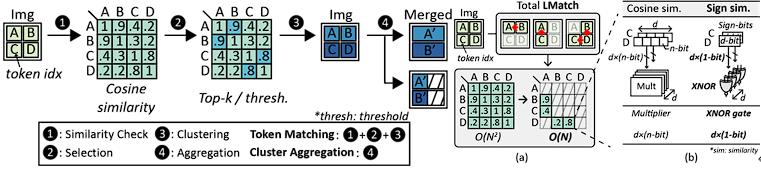

Abstract: The advent of Vision Transformers (ViTs) has brought a leap in computer-vision performance by leveraging self-attention mechanisms. However, the computational efficiency of ViTs is limited by the quadratic complexity of self-attention and by redundancy among image tokens. To address these issues, token merging strategies have been explored that reduce the input size by merging similar tokens. Nonetheless, token merging in practice degrades latency for two reasons: inefficient computation and a fixed merge rate. This paper introduces AdapTiV, a novel hardware-software co-designed accelerator that speeds up ViTs through image-adaptive token merging, addressing both challenges. Following the design philosophy of reducing the overhead of token merging and concealing its latency within the Layer Normalization (LN) process, AdapTiV incorporates three algorithmic innovations: Local Matching, which restricts the search space for token merging and thereby reduces computational complexity; Sign Similarity, which simplifies the calculation of similarity between tokens; and Dynamic Merge Rate, which enables image-adaptive token merging. The hardware component that supports these algorithms, the Adaptive Token Merging Engine, employs Sign-Driven Scheduling to conceal the overhead of token merging effectively. The engine integrates a Sign Similarity Computing Unit, which calculates the similarity between tokens using the newly introduced similarity metric; a Sign Scratchpad, a lightweight, image-width-sized memory that stores previous tokens; a Sign Scratchpad Managing Unit, which controls the Sign Scratchpad; and a Token Integration Map, which facilitates efficient, image-adaptive token merging.
Our evaluations demonstrate that AdapTiV achieves average speedups of 309.4×, 18.4×, 89.8×, and 6.3× and energy-efficiency improvements of 262.1×, 21.5×, 496.6×, and 11.2× over edge CPUs, edge GPUs, server CPUs, and server GPUs, respectively, while keeping the accuracy loss below 1% without additional training.
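The abstract names Local Matching, Sign Similarity, and Dynamic Merge Rate without giving their exact formulas. The Python sketch below illustrates one plausible reading, and every detail is an assumption rather than the paper's definition: sign similarity is taken as the fraction of channels whose signs agree; local matching only compares a token with its left and upper neighbours (the tokens an image-width-sized buffer such as the Sign Scratchpad would still hold during a row-major scan); the merge rate is "dynamic" because a fixed similarity threshold, not a fixed merge count, decides which tokens merge, so the number of surviving tokens depends on the image; and merged tokens are simply averaged. The function names `sign_bits`, `sign_similarity`, `build_merge_map`, and `merge_tokens` and the `threshold` parameter are hypothetical.

```python
from typing import Dict, List

Token = List[float]


def sign_bits(token: Token) -> List[int]:
    # Assumed convention: 1 for non-negative values, 0 for negative values.
    return [1 if x >= 0 else 0 for x in token]


def sign_similarity(a: List[int], b: List[int]) -> float:
    # Assumed metric: fraction of channels whose sign bits agree
    # (i.e. 1 - normalized Hamming distance between sign vectors).
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return matches / len(a)


def build_merge_map(tokens: List[Token], width: int, threshold: float) -> List[int]:
    """Map each token index (row-major order) to the index of the token it merges into.

    Hypothetical local matching: only the left and upper neighbours are candidates.
    A token whose best sign similarity reaches `threshold` joins that neighbour's group,
    so the number of merges varies with image content.
    """
    signs = [sign_bits(t) for t in tokens]
    target = list(range(len(tokens)))  # initially every token represents itself
    for i in range(len(tokens)):
        candidates = []
        if i % width != 0:
            candidates.append(i - 1)      # left neighbour in the same row
        if i >= width:
            candidates.append(i - width)  # neighbour in the previous row
        if not candidates:
            continue
        best = max(candidates, key=lambda j: sign_similarity(signs[i], signs[j]))
        if sign_similarity(signs[i], signs[best]) >= threshold:
            # target[best] already points at a group representative, since
            # earlier tokens were resolved before token i was visited.
            target[i] = target[best]
    return target


def merge_tokens(tokens: List[Token], target: List[int]) -> List[Token]:
    # Average the members of each group (assumed merge rule), ordered by representative.
    groups: Dict[int, List[Token]] = {}
    for i, rep in enumerate(target):
        groups.setdefault(rep, []).append(tokens[i])
    merged = []
    for rep in sorted(groups):
        members = groups[rep]
        merged.append([sum(m[k] for m in members) / len(members) for k in range(len(members[0]))])
    return merged


if __name__ == "__main__":
    # A 2x2 "image" of 4-channel tokens: the top row and bottom row each share sign patterns.
    toks = [[1, 2, -1, 3], [0.5, 1, -2, 1], [-1, -1, 1, -1], [-2, -3, 2, -1]]
    m = build_merge_map(toks, width=2, threshold=0.75)
    print(m)                          # -> [0, 0, 2, 2]
    print(len(merge_tokens(toks, m)))  # -> 2
```

With a uniform image every token would fall into a single group, while a highly varied image would keep most tokens, which is the image-adaptive behaviour a threshold-based rule gives compared with merging a fixed number of tokens per layer.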

Main Figure: