Title: JNPU: A 1.04TFLOPS Joint-DNN Training Processor with Speculative Cyclic Quantization and Triple Heterogeneity on Microarchitecture / Precision / Dataflow

Venue: ESSCIRC 23

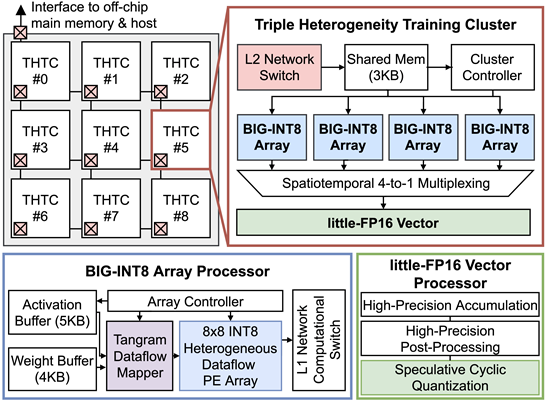

Abstract: This paper presents JNPU, a 1.04TFLOPS joint-DNN accelerator that can run joint-DNN (MobileNet + GoogLeNet) models simultaneously at 245FPS (inference) and 1.26TFLOPS/W (training). It proposes speculative cyclic quantization, which enables integer-dominant operations and reduces external memory access by 87.5%. Its tangram dataflow mapper provides optimized sets of heterogeneous stationary types for both forward and backward propagation, improving efficiency by up to 71.6%. Lastly, its novel processing cluster leverages triple heterogeneity across INT8 arrays and an FP16 vector processor, saving 56.3% of computing area and 26.9% of computing power.

Main Figure: