Prasetiyo, Adiwena Putra, and Joo-Young Kim, “Morphling: A Throughput-Maximized TFHE-based Accelerator using Transform-domain Reuse,” IEEE HPCA 2024

Abstract:

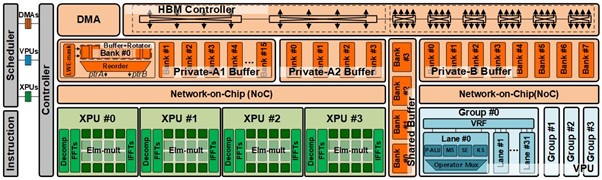

Fully Homomorphic Encryption (FHE) has become an increasingly important aspect of modern computing, particularly for preserving privacy in cloud computing by enabling computation directly on encrypted data. Despite its potential, FHE poses major challenges, including huge computational and memory requirements. The bootstrapping operation, which is essential in the Torus-FHE (TFHE) scheme in particular, involves intensive computation characterized by an enormous number of polynomial multiplications. For instance, performing a single bootstrapping at the 128-bit security level requires more than 10,000 polynomial multiplications. Our in-depth analysis reveals that domain-transform operations, i.e., Fast Fourier Transforms (FFTs), account for up to 88% of these operations, making them the bottleneck of the TFHE system. To address these challenges, we propose Morphling, an accelerator architecture that combines a 2D systolic array with strategic use of transform-domain reuse in order to reduce the overhead of domain transforms in TFHE. This novel approach reduces the number of required domain-transform operations by up to 83.3%, allowing more computational cores in a given die area. In addition, we optimize its microarchitecture for end-to-end TFHE operation with a merge-split pipelined FFT for efficient domain-transform operation, a double-pointer method for high-throughput polynomial rotation, and a specialized buffer design. Furthermore, we introduce custom instructions for tiling, batching, and scheduling of multiple ciphertext operations. This facilitates software-hardware co-optimization, effectively mapping high-level applications such as an XG-Boost classifier, a neural network, and VGG-9. As a result, Morphling, with four 2D systolic arrays and four vector units with transform-domain reuse, occupies 74.79 mm² of die area and consumes 53.00 W in a 28 nm process. It achieves a throughput of up to 147,615 bootstrappings per second, demonstrating improvements of 3440× over the CPU, 143× over the GPU, and 14.7× over the state-of-the-art TFHE accelerator. It can run various deep learning models with sub-second latency.
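
To make the idea of transform-domain reuse concrete, the following is a minimal NumPy sketch, not the Morphling dataflow itself. It assumes polynomials modulo X^n + 1 with small integer coefficients and a sum of k polynomial products as the workload. The function names (`forward`, `inverse`, `sum_products_per_product`, `sum_products_reuse`) and the transform counters are hypothetical and exist only for illustration. The sketch compares running an inverse transform after every product with accumulating the products in the transform domain and running a single inverse transform at the end.

```python
import numpy as np

def forward(p):
    """Twisted FFT: coefficients -> transform domain for arithmetic mod X^n + 1."""
    n = len(p)
    twist = np.exp(1j * np.pi * np.arange(n) / n)
    return np.fft.fft(np.asarray(p) * twist)

def inverse(P):
    """Inverse of forward(); rounds back to integer coefficients."""
    n = len(P)
    untwist = np.exp(-1j * np.pi * np.arange(n) / n)
    return np.rint((np.fft.ifft(P) * untwist).real).astype(np.int64)

def negacyclic_naive(a, b):
    """Schoolbook product mod X^n + 1, used only as a reference."""
    n = len(a)
    c = np.zeros(n, dtype=np.int64)
    for i in range(n):
        for j in range(n):
            if i + j < n:
                c[i + j] += a[i] * b[j]
            else:
                c[i + j - n] -= a[i] * b[j]  # X^n wraps around as -1
    return c

def sum_products_per_product(xs, ys):
    """Leave the transform domain after every product (no reuse)."""
    acc = np.zeros(len(xs[0]), dtype=np.int64)
    transforms = 0
    for x, y in zip(xs, ys):
        acc += inverse(forward(x) * forward(y))
        transforms += 3  # two forward transforms, one inverse
    return acc, transforms

def sum_products_reuse(xs, ys):
    """Accumulate in the transform domain; one inverse transform at the end."""
    acc = np.zeros(len(xs[0]), dtype=np.complex128)
    transforms = 0
    for x, y in zip(xs, ys):
        acc += forward(x) * forward(y)
        transforms += 2  # two forward transforms, no inverse yet
    return inverse(acc), transforms + 1

rng = np.random.default_rng(0)
xs = rng.integers(-8, 8, size=(4, 16))
ys = rng.integers(-8, 8, size=(4, 16))
result_a, count_a = sum_products_per_product(xs, ys)
result_b, count_b = sum_products_reuse(xs, ys)
print(np.array_equal(result_a, result_b), count_a, count_b)
```

Both variants return the same coefficients, but the second performs fewer transforms because the transform is linear and the sum can stay in the transform domain. The savings reported in the abstract come from the paper's own architecture and are not reproduced by this toy count.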