Heo, Jaehoon, et al. “SP-PIM: A Super-Pipelined Processing-In-Memory Accelerator With Local Error Prediction for Area/Energy-Efficient On-Device Learning.” IEEE Journal of Solid-State Circuits (2024).

Abstract: On-device learning (ODL) is crucial for edge devices as it restores machine learning (ML) model accuracy in changing environments. However, implementing ODL on battery-limited devices faces challenges due to large intermediate data generation and frequent processor-memory data movement, causing significant power consumption. To address this, some edge ML accelerators use processing-in-memory (PIM), but they still suffer from high latency, power overheads, and incomplete handling of data sparsity during training. This paper presents SP-PIM, a high-throughput super-pipelined PIM accelerator that overcomes these limitations. SP-PIM implements multi-level pipelining based on local error prediction (EP), increasing training speed by 7.31× and reducing external memory access by 59.09%. It exploits activation and error sparsity with an optimized PIM macro. Fabricated using 28-nm CMOS technology, SP-PIM achieves a training speed of 8.81 epochs/s, showing state-of-the-art area (560.6 GFLOPS/mm²) and power efficiency (22.4 TFLOPS/W). A cycle-level simulator further demonstrates SP-PIM’s scalability and efficiency.
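As a reading aid, the headline figures in the abstract can be converted into more intuitive quantities. The sketch below is only unit arithmetic on the numbers quoted above; the function names are hypothetical, and it assumes the efficiency figure is a peak value, so the energy per operation is a best-case estimate rather than a measured average.

```python
# Figures quoted in the abstract (not independently measured here).
EPOCHS_PER_SECOND = 8.81          # reported training speed
EXT_MEM_REDUCTION = 0.5909        # reported reduction in external memory access
POWER_EFF_TFLOPS_PER_W = 22.4     # reported power efficiency


def seconds_per_epoch(epochs_per_second: float) -> float:
    """Invert a throughput in epochs/s to get the time for one epoch."""
    return 1.0 / epochs_per_second


def remaining_access_fraction(reduction: float) -> float:
    """Fraction of external memory accesses left after a fractional reduction."""
    return 1.0 - reduction


def femtojoules_per_flop(tflops_per_watt: float) -> float:
    """Energy per floating-point operation implied by an efficiency in TFLOPS/W.

    1 TFLOPS/W = 1e12 FLOP per joule, so energy per FLOP is 1 / (x * 1e12) J.
    Multiplying by 1e15 converts joules to femtojoules.
    """
    return 1e15 / (tflops_per_watt * 1e12)


if __name__ == "__main__":
    print(f"time per epoch: {seconds_per_epoch(EPOCHS_PER_SECOND) * 1e3:.1f} ms")
    print(f"external accesses remaining: {remaining_access_fraction(EXT_MEM_REDUCTION):.2%}")
    print(f"energy per FLOP: {femtojoules_per_flop(POWER_EFF_TFLOPS_PER_W):.1f} fJ")
```

Under these assumptions, 8.81 epochs/s corresponds to roughly 114 ms per epoch, and a 59.09% reduction leaves about 41% of the original external memory accesses.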

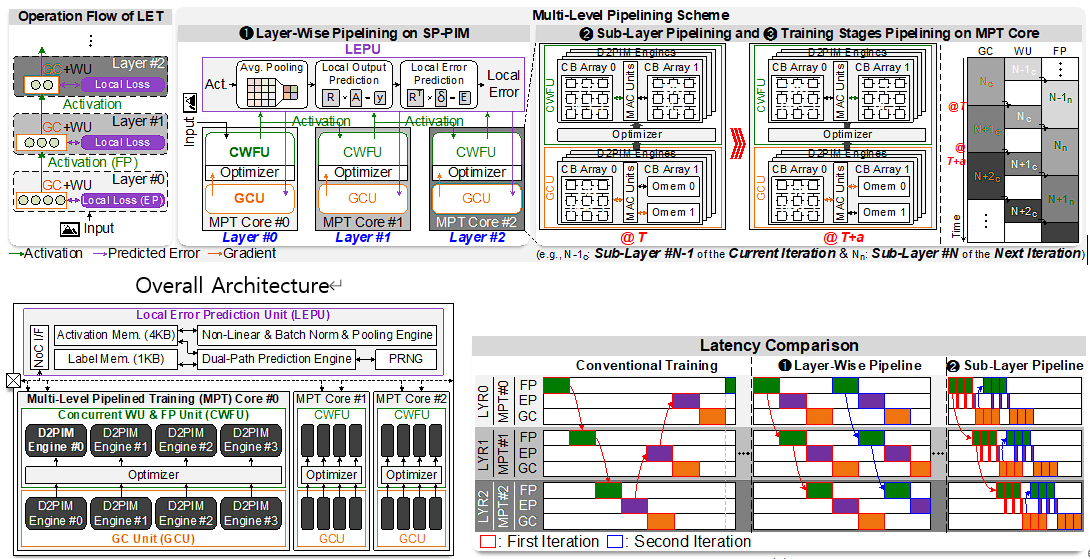

Main Figure