Jae-Min Park, Won-Jun Jang, Tae-Hyun Oh, Si-Hyeon Lee, “Overcoming Client Data Deficiency in Federated Learning by Exploiting Unlabeled Data on the Server,” IEEE Access, Sep. 2024

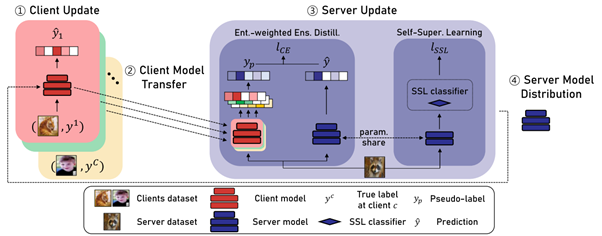

Abstract: Federated Learning (FL) is a distributed machine learning paradigm in which multiple clients jointly train a server model. In practice, clients often possess limited data and are not always available for simultaneous participation in FL, which can lead to data deficiency. This data deficiency degrades the entire learning process. To address this, we propose Federated learning with entropy-weighted ensemble Distillation and Self-supervised learning (FedDS). FedDS effectively handles situations with limited data per client and few clients. The key idea is to exploit the unlabeled data available on the server when aggregating the client models into a server model. We distill the multiple client models into a server model as an ensemble. To robustly weigh the quality of the source pseudo-labels from the client models, we propose an entropy weighting method and show a favorable tendency: our method assigns higher weights to more accurate predictions. Furthermore, we jointly leverage a separate self-supervised loss to improve the generalization of the server model. We demonstrate the effectiveness of FedDS both empirically and theoretically. For CIFAR-10, our method shows an improvement over FedAVG of 12.54% in the data-deficient regime, and of 17.16% and 23.56% in the more challenging noisy-label and Byzantine-client scenarios, respectively. For CIFAR-100 and ImageNet-100, our method shows an improvement over FedAVG of 18.68% and 15.06% in the data-deficient regime, respectively.
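
The entropy-weighting idea in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: the abstract does not state the exact weighting formula, so the softmax over negative entropies used here is an assumption, and the function names `softmax` and `entropy_weighted_ensemble` are hypothetical. The sketch only shows the general tendency described above, where a client that predicts with lower entropy on an unlabeled server sample contributes more to that sample's pseudo-label.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def entropy_weighted_ensemble(client_probs, eps=1e-12):
    """Combine client predictions on unlabeled server data.

    client_probs: array of shape (K, N, C) holding class probabilities
        from K client models for N unlabeled samples over C classes.
    Returns (pseudo_labels, weights) with shapes (N, C) and (K, N).

    ASSUMPTION: weights are a softmax over negative entropies across
    clients. The abstract does not specify the paper's exact formula.
    """
    p = np.asarray(client_probs, dtype=float)
    # Shannon entropy of each client's prediction for each sample: (K, N)
    entropy = -(p * np.log(p + eps)).sum(axis=-1)
    # Lower entropy gives a higher weight; weights sum to 1 over clients
    weights = softmax(-entropy, axis=0)
    # Weighted average of client predictions per sample: (N, C)
    pseudo_labels = (weights[..., None] * p).sum(axis=0)
    return pseudo_labels, weights
```

In a distillation step, the server model would then be trained to match `pseudo_labels` on the unlabeled data (for example with a cross-entropy or KL loss), alongside the separate self-supervised loss mentioned in the abstract. Those training details are not given here and are left out of the sketch.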

Main figure