Abstract

Cache coherence interconnects have recently emerged to integrate CPUs, accelerators, and memory components into a unified, heterogeneous computing domain. These interconnect technologies ensure data coherency between CPU memory and device-attached private memory, creating a new paradigm of globally shared memory and network space. Among several efforts to establish such connectivity, including Gen-Z [1] and Cache Coherent Interconnect for Accelerators (CCIX) [2], Compute Express Link (CXL) has become the first open interconnect protocol capable of supporting diverse processors and device endpoints. With the absorption of Gen-Z, CXL stands out as a promising interconnect interface due to its high-speed coherence control and seamless compatibility with the widely adopted PCIe standard. This makes it particularly advantageous for a wide range of datacenter-scale hardware, including CPUs, GPUs, FPGAs, and domain-specific ASICs. Furthermore, the CXL consortium has highlighted its potential for memory disaggregation, enabling pooling of DRAM and byte-addressable persistent memory.

Main Figure

Abstract

Eating disorders (ED) are complex mental health conditions that require long-term management and support. Recent advancements in large language model (LLM)-based chatbots offer the potential to assist individuals in receiving immediate support. Yet, concerns remain about their reliability and safety in sensitive contexts such as ED. We explore the opportunities and potential harms of using LLM-based chatbots for ED recovery. We observe the interactions between 26 participants with ED and an LLM-based chatbot, WellnessBot, designed to support ED recovery, over 10 days. We found that participants felt empowered in recovery by discussing ED-related stories with the chatbot, which served as a personal yet social avenue. However, we also identified harmful chatbot responses, particularly concerning for individuals with ED, that went unnoticed partly due to participants’ unquestioning trust in the chatbot’s reliability. Based on these findings, we provide design implications for safe and effective LLM-based interventions in ED management.

Main Figure

Abstract

The rapid growth of video-sharing platforms has driven immense storage demands, with disaggregated cloud storage emerging as a scalable and reliable solution. However, because the cost of cloud storage grows in proportion to capacity and storage duration, managing large-scale video data cost-efficiently is difficult. This is particularly critical for cold videos, which constitute the majority of video data but are accessed infrequently. To address this challenge, this paper proposes Neural Cloud Storage (NCS), leveraging content-aware super-resolution (SR) powered by deep neural networks. By reducing the resolution of cold videos, NCS decreases file sizes while preserving perceptual quality, optimizing the cost trade-offs in multi-tiered disaggregated storage. This approach extends the cost-efficiency benefits to a greater range of cold videos and achieves up to a 21.2% reduction in total cost of ownership (TCO), providing a scalable, cost-effective solution for video storage.

Main Figure

Abstract

Facts evolve over time, making it essential for Large Language Models (LLMs) to handle time-sensitive factual knowledge accurately and reliably. While factual Time-Sensitive Question-Answering (TSQA) tasks have been widely studied, existing benchmarks often rely on manual curation or a small, fixed set of predefined templates, which restricts scalable and comprehensive TSQA evaluation. To address these challenges, we propose TDBench, a new benchmark that systematically constructs TSQA pairs by harnessing temporal databases and database techniques such as temporal SQL and functional dependencies. We also introduce a fine-grained evaluation metric called time accuracy, which assesses the validity of time references in model explanations alongside traditional answer accuracy to enable a more reliable TSQA evaluation. Extensive experiments on contemporary LLMs show how TDBench enables scalable and comprehensive TSQA evaluation while reducing the reliance on human labor, complementing existing Wikipedia/Wikidata-based TSQA evaluation approaches by enabling LLM evaluation on application-specific data and seamless multi-hop question generation.

Main Figure

Abstract

Recommendation systems are crucial for personalizing user experiences on online platforms. While Deep Learning Recommendation Models (DLRMs) have been the state of the art for nearly a decade, their scalability is limited, as model quality scales poorly with compute. Recently, there have been research efforts applying the Transformer architecture to recommendation systems, and the Hierarchical Sequential Transaction Unit (HSTU), an encoder architecture, has been proposed to address scalability challenges. Although HSTU-based generative recommenders show significant potential, they have received little attention from computer architects. In this paper, we analyze the inference process of HSTU-based generative recommenders and perform an in-depth characterization of the model. Our findings indicate that the attention mechanism is a major performance bottleneck. We further discuss promising research directions and optimization strategies that can potentially enhance the efficiency of HSTU models.

Main Figure

Abstract

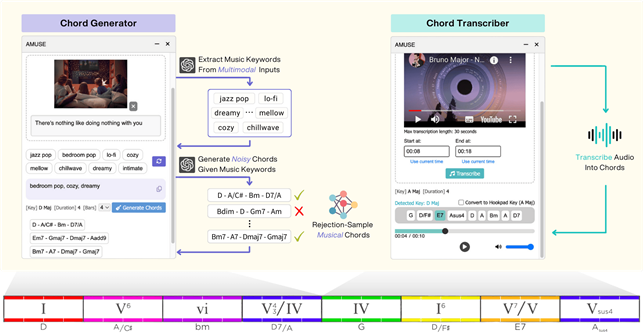

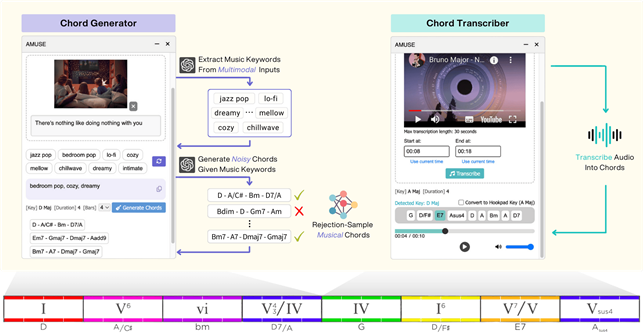

Songwriting is often driven by multimodal inspirations, such as imagery, narratives, or existing music, yet songwriters remain unsupported by current music AI systems in incorporating these multimodal inputs into their creative processes. We introduce Amuse, a songwriting assistant that transforms multimodal (image, text, or audio) inputs into chord progressions that can be seamlessly incorporated into songwriters’ creative process. A key feature of Amuse is its novel method for generating coherent chords that are relevant to music keywords in the absence of datasets with paired examples of multimodal inputs and chords. Specifically, we propose a method that leverages multimodal LLMs to convert multimodal inputs into noisy chord suggestions and uses a unimodal chord model to filter the suggestions. A user study with songwriters shows that Amuse effectively supports transforming multimodal ideas into coherent musical suggestions, enhancing users’ agency and creativity throughout the songwriting process.

Main Figure

Abstract

Multi-criteria (MC) recommender systems, which utilize MC rating information for recommendation, are increasingly widespread in various e-commerce domains. However, MC recommendation using training-based collaborative filtering must consider multiple ratings rather than the single rating of its single-criterion counterparts, which often poses practical challenges in achieving state-of-the-art performance along with scalable model training. To solve this problem, we propose CA-GF, a training-free MC recommendation method, which is built upon criteria-aware graph filtering for efficient yet accurate MC recommendations. Specifically, we first construct an item–item similarity graph using an MC user-expansion graph. Next, we design CA-GF with two key components: 1) criterion-specific graph filtering, where the optimal filter for each criterion is found using various types of polynomial low-pass filters, and 2) criteria preference-infused aggregation, where the smoothed signals from each criterion are aggregated. We demonstrate that CA-GF is (a) efficient: offering an extremely fast runtime of less than 0.2 seconds even on the largest benchmark dataset, (b) accurate: outperforming benchmark MC recommendation methods, achieving substantial accuracy gains of up to 24% compared to the best competitor, and (c) interpretable: providing interpretations for the contribution of each criterion to the model prediction based on visualizations.
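
The two components described above can be illustrated with a minimal sketch. It is not the CA-GF implementation: it assumes one user-by-item rating matrix per criterion, builds a plain normalized item-item graph from each matrix (rather than the MC user-expansion graph), uses one fixed polynomial filter for every criterion, and aggregates with hand-picked weights. All function names, coefficients, and weights are hypothetical.

```python
def matmul(a, b):
    """Plain-Python matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def item_similarity(ratings):
    """Symmetrically normalized item-item graph D^-1/2 (R^T R) D^-1/2."""
    rt = [list(col) for col in zip(*ratings)]
    gram = matmul(rt, ratings)
    deg = [sum(row) or 1.0 for row in gram]  # avoid dividing by zero for unrated items
    n = len(gram)
    return [[gram[i][j] / (deg[i] * deg[j]) ** 0.5 for j in range(n)] for i in range(n)]


def low_pass(signal, sim, coeffs):
    """Apply the polynomial filter sum_k coeffs[k] * sim^(k+1) to a row signal."""
    out = [0.0] * len(signal)
    term = signal
    for a in coeffs:
        term = matmul([term], sim)[0]  # one more hop of smoothing over the graph
        out = [o + a * t for o, t in zip(out, term)]
    return out


def recommend(ratings_by_criterion, user, coeffs, weights):
    """Filter each criterion separately, then take a weighted sum of the scores."""
    n_items = len(ratings_by_criterion[0][0])
    scores = [0.0] * n_items
    for ratings, w in zip(ratings_by_criterion, weights):
        sim = item_similarity(ratings)
        smoothed = low_pass(ratings[user], sim, coeffs)
        scores = [s + w * x for s, x in zip(scores, smoothed)]
    return scores


# Toy data: 3 users x 3 items for two criteria (assumed values, 0 = unrated).
overall = [[5, 4, 0], [4, 5, 0], [3, 0, 0]]
quality = [[4, 4, 0], [5, 3, 0], [2, 0, 0]]
scores = recommend([overall, quality], user=2, coeffs=[1.0, 0.5], weights=[0.6, 0.4])
```

No training step is involved: the scores come only from matrix products over the rating data, which is why such filtering can run quickly. In this toy data, user 2 has rated only item 0, and item 1 receives a positive score because other users co-rated it with item 0.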

Main Figure

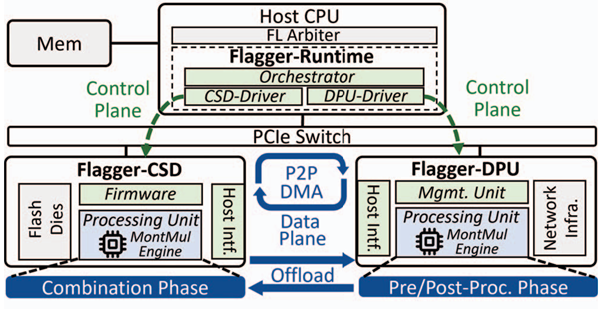

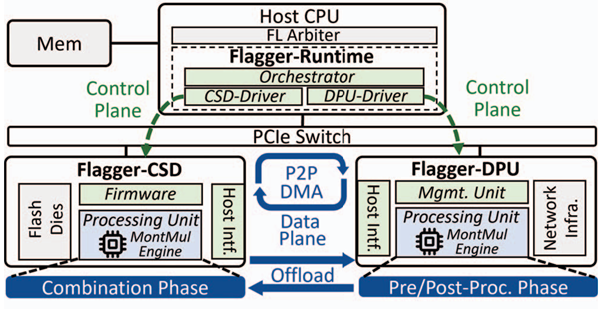

Abstract: Cross-silo federated learning (FL) leverages homomorphic encryption (HE) to obscure the model updates from the clients. However, HE poses the challenges of complex cryptographic computations and inflated ciphertext sizes. As cross-silo FL scales to accommodate larger models and more clients, the overheads of HE can overwhelm a CPU-centric aggregator architecture, including excessive network traffic, enormous data volume, intricate computations, and redundant data movements. Tackling these issues, we propose Flagger, an efficient and high-performance FL aggregator. Flagger meticulously integrates the data processing unit (DPU) with computational storage drives (CSD), employing these two distinct near-data processing (NDP) accelerators as a holistic architecture to collaboratively enhance FL aggregation. With the delicate delegation of complex FL aggregation tasks, we build Flagger-DPU and Flagger-CSD to exploit both in-network and in-storage HE acceleration to streamline FL aggregation. We also implement Flagger-Runtime, a dedicated software layer, to coordinate NDP accelerators and enable direct peer-to-peer data exchanges, markedly reducing data migration burdens. Our evaluation results reveal that Flagger expedites the aggregation in FL training iterations by 436% on average, compared with traditional CPU-centric aggregators.
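
To make the aggregation workload concrete, the following is a minimal sketch of additively homomorphic aggregation. The abstract does not name an HE scheme; Paillier is assumed here purely for illustration, with toy-sized primes and integer-quantized updates, and all names are hypothetical. It shows the aggregator combining encrypted client updates without decrypting them, and that each ciphertext lives modulo N², roughly twice the bit width of the modulus N.

```python
import math
import random

# Toy Paillier parameters (illustrative only; real keys use far larger primes).
P, Q = 1009, 1013
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # valid because the generator is fixed to g = N + 1


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N under the toy public key."""
    while True:
        r = random.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return ((1 + m * N) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    """Recover the plaintext with the toy private key (LAM, MU)."""
    return ((pow(c, LAM, N2) - 1) // N) * MU % N


def aggregate(ciphertexts):
    """Add the underlying plaintexts by multiplying ciphertexts modulo N^2."""
    total = encrypt(0)
    for c in ciphertexts:
        total = (total * c) % N2
    return total


# Three clients each submit one quantized model-update value (assumed data).
client_updates = [3, 5, 7]
encrypted = [encrypt(u) for u in client_updates]
summed = decrypt(aggregate(encrypted))
```

Every aggregated parameter costs a big-integer modular multiplication per client, and every ciphertext is larger than its plaintext, which is consistent with the computation and traffic overheads the abstract attributes to HE.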

Main figure:

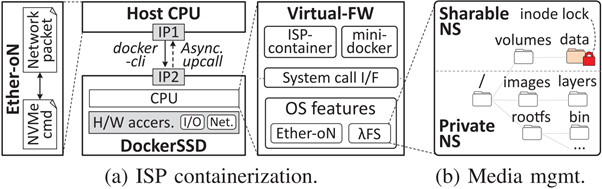

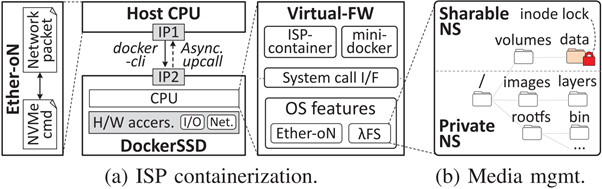

Abstract: Processing data in storage is an energy-efficient solution to examine massive datasets. However, a general incarnation of such a well-known task-offloading model in a real system has unfortunately been unsuccessful due to not only poor performance but also many practical challenges, such as limited processing capabilities and high vulnerabilities at the storage level. We propose DockerSSD, a fully flexible in-storage processing (ISP) model that can run a variety of applications near flash without source-level modification. Specifically, it enables lightweight OS-level virtualization in modern SSDs, which allows the storage intelligence to be well harmonized with existing computing environments and makes ISP even faster. Instead of developing a vendor-specific ISP to offload, DockerSSD can reuse existing Docker images, create containers as self-governing execution objects in storage, and process data directly where they reside in real time. To this end, we design a new communication method and virtual firmware that operate together to download Docker images and manage their container execution without changing the existing storage interface and runtime. We further accelerate ISP and reduce the execution latency by automating container-related network and I/O handling data paths in hardware. Our evaluation shows that DockerSSD is 2.0× faster than state-of-the-art ISP models for workloads with a high volume of system calls or file accesses. Moreover, it reduces power and energy consumption by 1.6× and 2.3×, respectively.

Main figure:

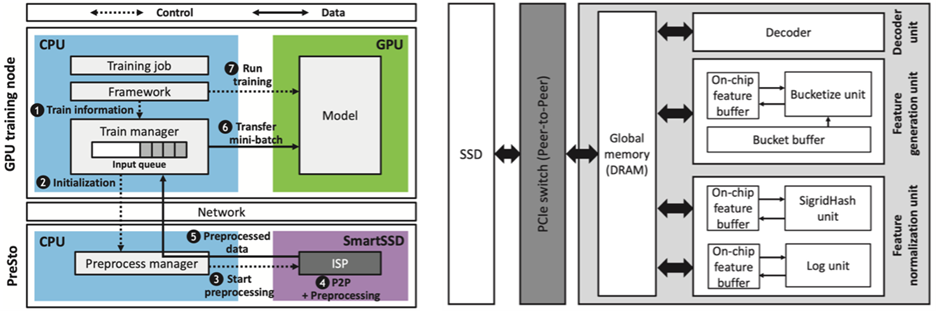

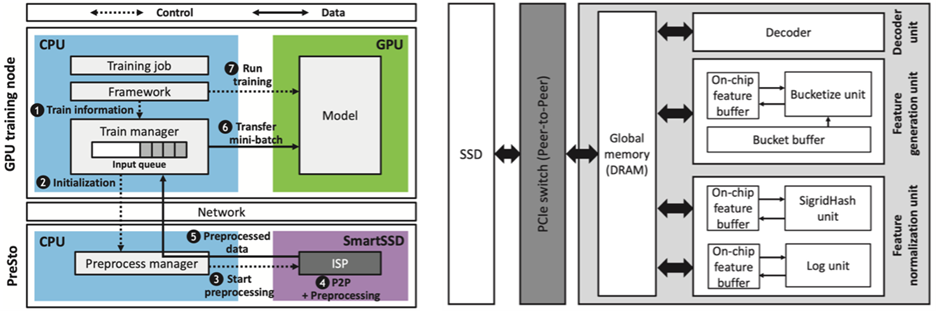

Abstract: Training recommendation systems (RecSys) faces several challenges as it requires the “data preprocessing” stage to preprocess a large amount of raw data and feed it to the GPU for training in a seamless manner. To sustain high training throughput, state-of-the-art solutions reserve a large fleet of CPU servers for preprocessing, which incurs substantial deployment cost and power consumption. Our characterization reveals that prior CPU-centric preprocessing is bottlenecked on feature generation and feature normalization operations as it fails to exploit the abundant inter-/intra-feature parallelism in RecSys preprocessing. PreSto is a storage-centric preprocessing system leveraging In-Storage Processing (ISP), which offloads the bottlenecked preprocessing operations to our ISP units. We show that PreSto outperforms the baseline CPU-centric system with a 9.6× speedup in end-to-end preprocessing time, 4.3× enhancement in cost-efficiency, and 11.3× improvement in energy-efficiency on average for production-scale RecSys preprocessing.

Main figure: