The research study authored by Daewoo Kim, Sangwoo Moon, David Hostallero, Wan Ju Kang, Taeyoung Lee, Kyunghwan Son, and Yung Yi was accepted at the 7th International Conference on Learning Representations (ICLR 2019).

Title: Learning to Schedule Communication in Multi-agent Reinforcement Learning

Authors: Daewoo Kim, Sangwoo Moon, David Hostallero, Wan Ju Kang, Taeyoung Lee, Kyunghwan Son, and Yung Yi

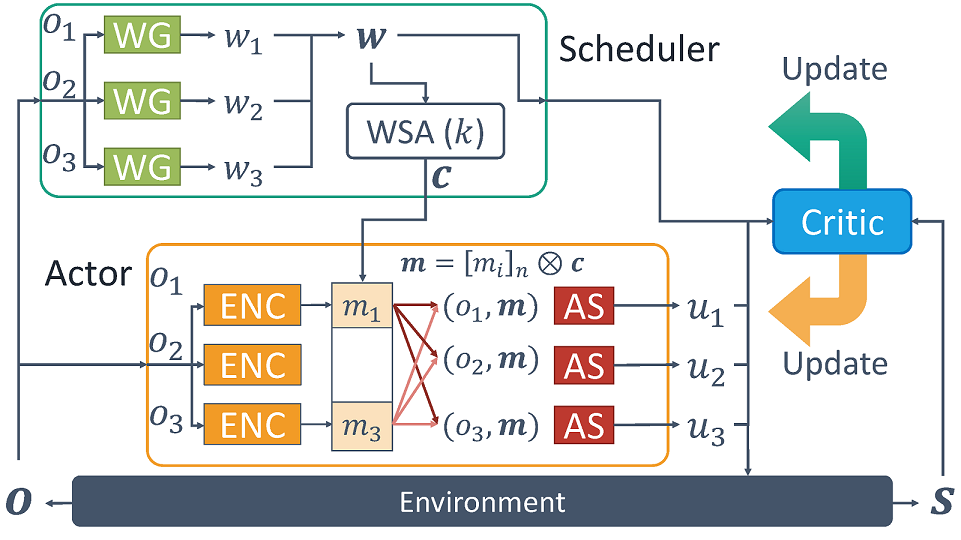

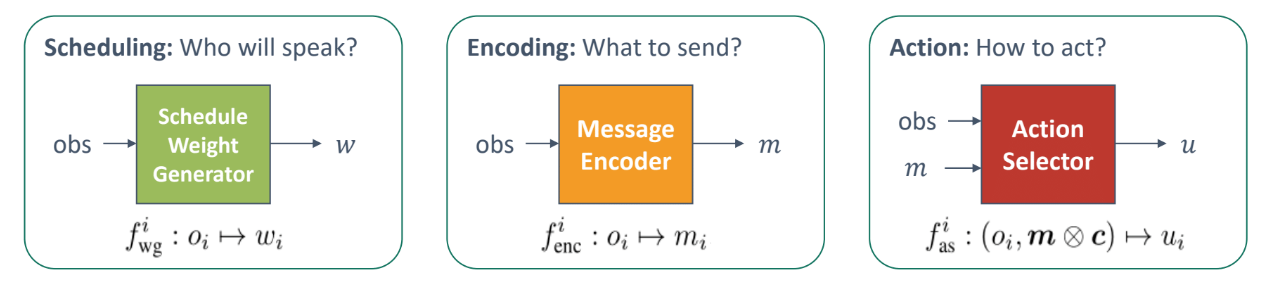

We present a first-of-its-kind study of communication-aided cooperative multi-agent reinforcement learning tasks with two realistic constraints on communication: (i) limited bandwidth and (ii) medium contention. Bandwidth limitation restricts the amount of information that can be exchanged by any agent accessing the medium for inter-agent communication, whereas medium contention limits the number of agents that can access the channel itself, as in state-of-the-art wireless networking standards such as 802.11. These constraints call for some form of scheduling. In that regard, we propose a multi-agent deep reinforcement learning framework, called SchedNet, in which agents learn how to schedule themselves, how to encode messages, and how to select actions based on received messages and on their own observations of the environment. SchedNet enables the agents to decide among themselves, in a distributed manner, who should be entitled to broadcast their encoded messages, by learning to gauge the importance of their (partially) observed information. We evaluate SchedNet against multiple baselines in two different applications, namely cooperative communication and navigation, and predator-prey. Our experiments show a non-negligible performance gap, ranging from 32% to 43%, between SchedNet and other mechanisms, such as those without communication and those with vanilla scheduling methods, e.g., round robin.
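To make the scheduling idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes each agent produces a scalar importance weight from its own observation and that only `k` agents may use the medium per step. The function names `top_k_schedule` and `round_robin_schedule`, and the example weights, are hypothetical; in SchedNet the weights would be learned, whereas here they are hard-coded.

```python
from typing import List, Sequence


def top_k_schedule(weights: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k agents with the largest weights.

    Assumption: a higher weight means the agent judges its observation
    to be more important to share.
    """
    if not 0 <= k <= len(weights):
        raise ValueError("k must be between 0 and the number of agents")
    # Rank agent indices by descending weight; ties go to the lower index.
    ranked = sorted(range(len(weights)), key=lambda i: (-weights[i], i))
    return sorted(ranked[:k])


def round_robin_schedule(n_agents: int, k: int, step: int) -> List[int]:
    """Baseline: grant the medium to k agents in a fixed rotating order,
    ignoring what the agents observe. Assumes k <= n_agents."""
    start = (step * k) % n_agents
    return sorted((start + j) % n_agents for j in range(k))


# Hypothetical weights for four agents; two may broadcast per step.
weights = [0.1, 0.7, 0.3, 0.9]
print(top_k_schedule(weights, k=2))          # [1, 3]
print(round_robin_schedule(4, k=2, step=1))  # [2, 3]
```

The contrast is that the weight-based rule adapts to what each agent currently observes, while round robin gives every agent the same share of the medium regardless of how useful its message would be.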