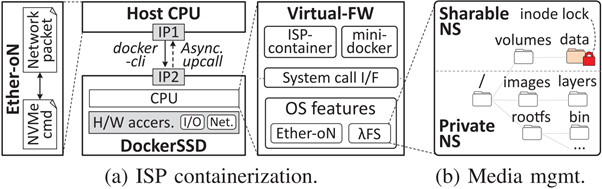

Abstract: Processing data in storage is an energy-efficient way to examine massive datasets. However, general implementations of this well-known task-offloading model in real systems have been unsuccessful, owing not only to poor performance but also to many practical challenges, such as limited processing capabilities and high vulnerability at the storage level. We propose DockerSSD, a fully flexible in-storage processing (ISP) model that can run a variety of applications near flash without source-level modification. Specifically, it enables lightweight OS-level virtualization in modern SSDs, which allows storage intelligence to harmonize with existing computing environments and makes ISP even faster. Instead of developing a vendor-specific ISP implementation to offload, DockerSSD can reuse existing Docker images, create containers as self-governing execution objects in storage, and process data in place in real time. To this end, we design a new communication method and virtual firmware that operate together to download Docker images and manage container execution without changing the existing storage interface or runtime. We further accelerate ISP and reduce execution latency by automating the container-related network and I/O handling data paths in hardware. Our evaluation shows that DockerSSD is 2.0× faster than state-of-the-art ISP models for workloads with a high volume of system calls or file accesses. Moreover, it reduces power and energy consumption by 1.6× and 2.3×, respectively.

Main figure: