Abstract

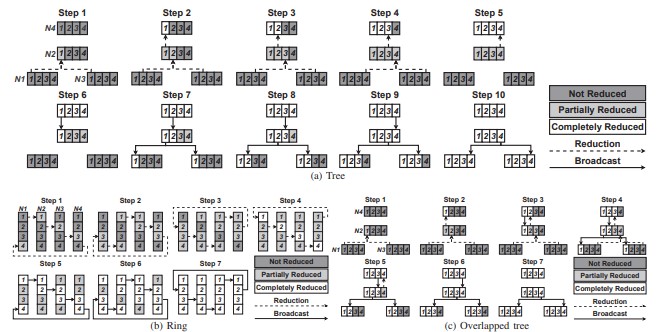

Training is an essential step in deep learning before network models can be deployed. To scale training, multiple GPUs are commonly used with data parallelism to exploit the additional GPU compute and memory capacity. However, one challenge to scalability is the collective communication between GPUs. In this work, we accelerate the AllReduce collective. AllReduce communication is often based on a logical topology (e.g., ring or tree algorithms) that is mapped onto a physical topology, i.e., the physical connectivity between the nodes. We propose a collective communication architecture that is aware of both the logical and the physical topology, which we refer to as C-Cube (Chaining Collective Communication with Computation). C-Cube exploits the opportunity to overlap, or chain, the different phases of collective communication with each other and with forward computation in a tree-algorithm AllReduce. We exploit the communication pattern of a logical tree topology to overlap the different phases of communication. Since ordering is maintained in the tree collective algorithm, we propose gradient queuing to chain communication with forward computation, which accelerates overall performance without affecting training accuracy. We also exploit the characteristics of the physical topology to further improve performance: we propose detour connections for collective communication and leverage the additional connectivity to enable a double-tree C-Cube implementation. We implement a C-Cube proof-of-concept on a real system (an 8-GPU NVIDIA DGX-1) and show that C-Cube improves both communication performance and overall performance compared to non-overlapped tree algorithms.
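To make the tree AllReduce and the chaining idea concrete, the following is a minimal, single-process sketch, not the C-Cube implementation. It assumes a heap-indexed binary tree (node 0 is the root), which is only one possible logical tree. The function names `tree_allreduce` and `allreduce_steps` are hypothetical. The step count is a simplified model that assumes each chunk takes one unit of time per tree hop and that links can carry reduce and broadcast traffic concurrently.

```python
def tree_allreduce(grads):
    """Sum per-GPU gradient vectors over a logical binary tree.

    Assumption: heap indexing, so the parent of node i is (i - 1) // 2.
    This runs serially in one process; no real GPU communication occurs.
    """
    n = len(grads)
    bufs = [list(g) for g in grads]  # copy so the inputs stay untouched

    # Reduce phase: partial sums flow from the leaves up to the root.
    # Visiting nodes in decreasing index order guarantees that a node has
    # already absorbed its children before it is added into its parent.
    for node in range(n - 1, 0, -1):
        parent = (node - 1) // 2
        bufs[parent] = [a + b for a, b in zip(bufs[parent], bufs[node])]

    # Broadcast phase: the root's total flows back down to every node.
    for node in range(1, n):
        parent = (node - 1) // 2
        bufs[node] = list(bufs[parent])

    return bufs


def allreduce_steps(num_gpus, num_chunks, chained):
    """Idealized step count for a pipelined tree AllReduce.

    Assumptions (not from the paper): unit time per chunk per hop, and the
    broadcast of a chunk may start as soon as that chunk reaches the root
    when `chained` is True.
    """
    depth = num_gpus.bit_length() - 1  # hops from deepest leaf to root
    if depth == 0:
        return 0  # a single GPU needs no communication
    if chained:
        # The last chunk reaches the root after depth + num_chunks - 1
        # steps and then needs `depth` more steps to reach the leaves.
        return 2 * depth + num_chunks - 1
    # Without chaining, the broadcast pipeline starts only after the
    # whole reduce pipeline has drained.
    return 2 * (depth + num_chunks - 1)
```

Under these assumptions, with 8 GPUs and 16 chunks, `allreduce_steps(8, 16, chained=False)` gives 36 steps and `allreduce_steps(8, 16, chained=True)` gives 21. This illustrates why overlapping the reduce and broadcast phases can shorten communication, although real gains depend on the physical topology and link bandwidth.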