Title: Extending Contrastive Learning to Unsupervised Redundancy Identification (Applied Sciences 2022)

Authors: Jegonwoo Ju (KAIST), Heechul Jung (Kyungpook National University), Junmo Kim (KAIST)

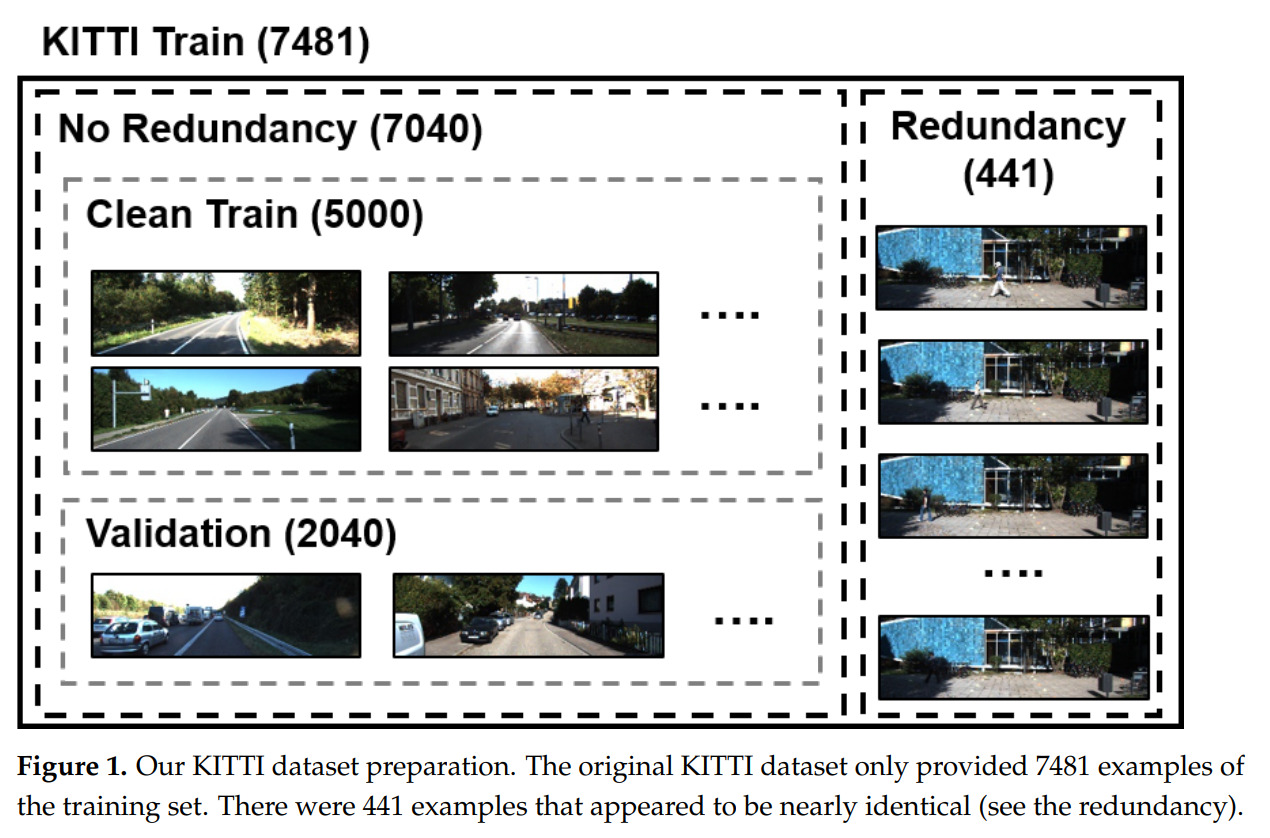

Abstract: Modern deep neural network (DNN)-based approaches have delivered strong performance on computer vision tasks; however, their data-hungry nature incurs a massive annotation cost. Hence, given a fixed budget and a pool of unlabeled examples, improving the quality of the examples to be annotated is an effective way to obtain good DNN generalization. One of the key issues that can hurt example quality is redundancy, in which most examples exhibit similar visual context (e.g., the same background). Redundant examples barely contribute to performance yet still incur annotation cost. Hence, identifying redundancy prior to annotation is a key step to avoid unnecessary cost. In this work, we proved that the coreset score based on cosine similarity (cossim) is effective for identifying redundant examples. This is because the collective magnitude of the gradient over redundant examples is large compared to that of the other examples. As a result, contrastive learning first attempts to reduce the loss on the redundant examples. Consequently, cossim for the redundancy set exhibited a high value (a low coreset score). We are the first to view redundancy identification in terms of gradient magnitude. In this way, we effectively removed redundant examples from two datasets (KITTI, BDD10K), resulting in better performance in detection and semantic segmentation.
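The relationship between cossim and the coreset score described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the score definition used here (one minus the highest cosine similarity to any other example), the function names, and the toy embeddings are all assumptions made for illustration. In the paper the embeddings would come from an encoder trained with contrastive learning; here they are hand-written 2-D vectors.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def coreset_scores(embeddings):
    """Assumed score: 1 - (highest cossim to any other example).

    A high cossim to some neighbour gives a low score, which marks
    the example as likely redundant.
    """
    scores = []
    for i, u in enumerate(embeddings):
        nearest = max(
            cosine_similarity(u, v)
            for j, v in enumerate(embeddings)
            if j != i
        )
        scores.append(1.0 - nearest)
    return scores


def select_for_annotation(embeddings, budget):
    """Keep the `budget` examples with the highest (least redundant) scores."""
    scores = coreset_scores(embeddings)
    ranked = sorted(range(len(embeddings)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:budget])


# Hypothetical embeddings: indices 0-2 are near-duplicates, 3 and 4 are distinct.
toy_embeddings = [
    [1.0, 0.0],
    [0.99, 0.05],
    [0.98, 0.02],
    [0.0, 1.0],
    [-1.0, 0.2],
]

scores = coreset_scores(toy_embeddings)
selected = select_for_annotation(toy_embeddings, budget=2)
print(selected)
```

With these toy vectors the three near-duplicates receive scores close to zero and are excluded from the annotation budget. Note that this one-shot ranking drops every member of a near-duplicate cluster, including a potential representative; a practical pipeline would likely need a greedy or thresholded selection instead.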