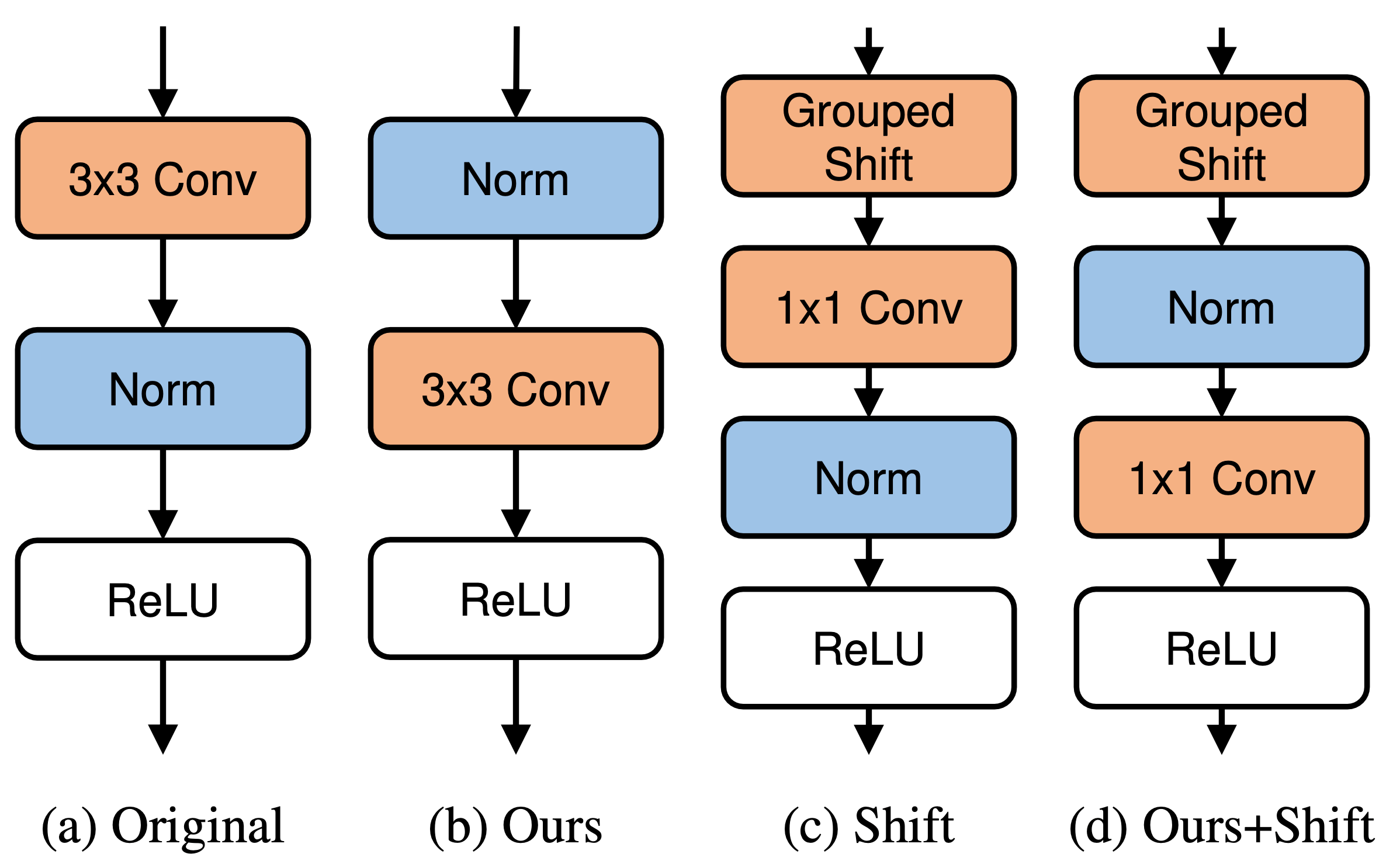

Title: Improving Generalization of Batch Whitening by Convolutional Unit Optimization

Authors: Yooshin Cho, Hanbyel Cho, Youngsoo Kim, and Junmo Kim

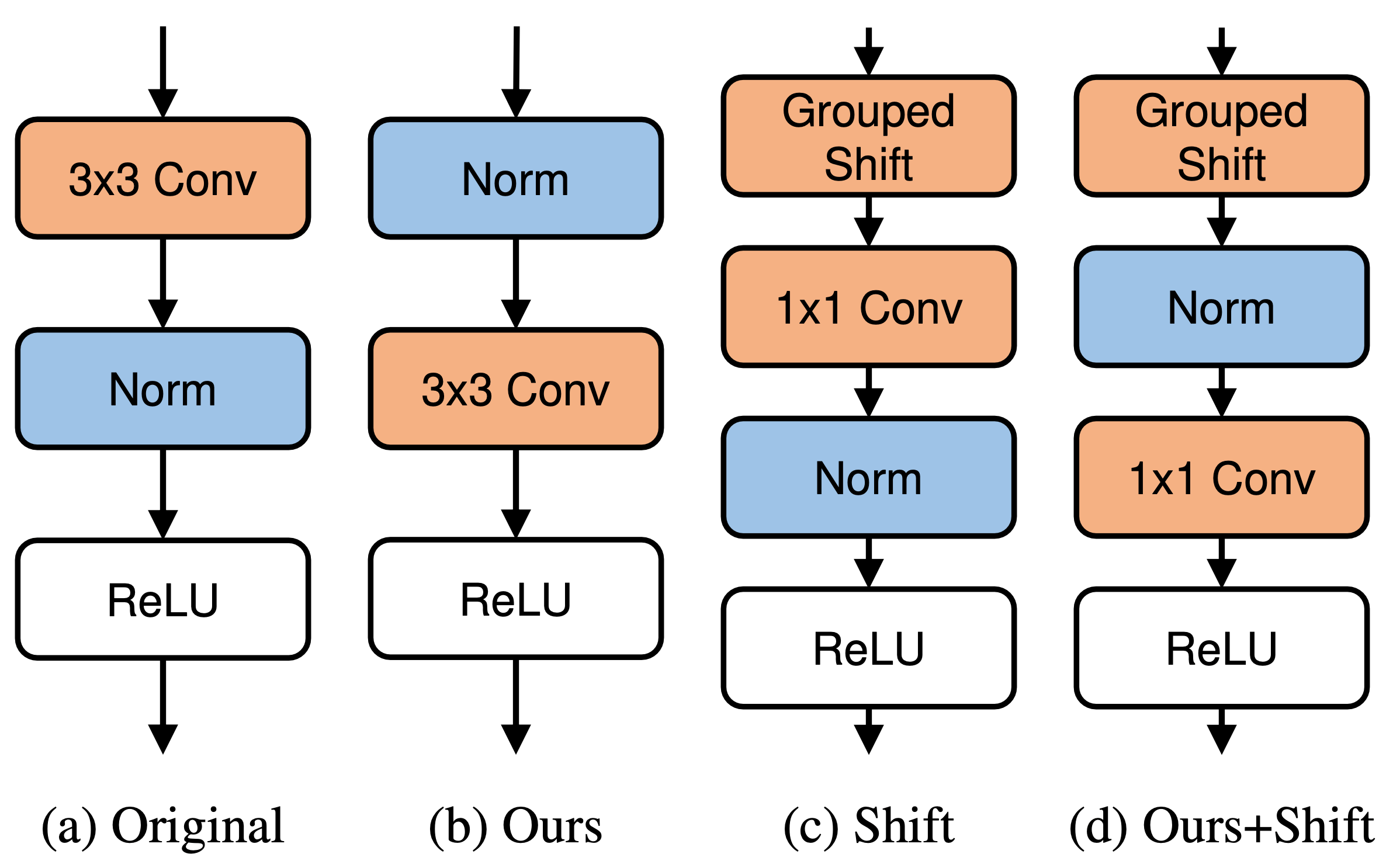

Batch Whitening is a technique that accelerates and stabilizes training by transforming input features to have zero mean (Centering) and unit variance (Scaling) and by removing linear correlation between channels (Decorrelation). In commonly used structures, which were empirically optimized with Batch Normalization, the normalization layer appears between the convolution and the activation function. Subsequent Batch Whitening studies have adopted the same structure without further analysis, even though Batch Whitening itself was analyzed on the premise that the input of a linear layer is whitened. To bridge this gap, we propose a new Convolutional Unit that is consistent with the theory, and our method generally improves the performance of Batch Whitening. Moreover, we show the inefficacy of the original Convolutional Unit by investigating the rank and correlation of features. Because our method can be employed with off-the-shelf whitening modules, we use Iterative Normalization (IterNorm), the state-of-the-art whitening module, and obtain significantly improved performance on five image classification datasets: CIFAR-10, CIFAR-100, CUB-200-2011, Stanford Dogs, and ImageNet. Notably, we verify that our method improves the stability and performance of whitening when using a large learning rate, group size, and iteration number.
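IterNorm avoids an eigendecomposition. It approximates the inverse square root of the trace-normalized covariance matrix with Newton's iteration. The sketch below shows only that whitening step, in plain Python, for a single group of channels. The function names, the `iters` and `eps` defaults, and the omission of grouping, learnable affine parameters and running statistics are simplifying assumptions. The sketch does not reproduce the proposed Convolutional Unit.

```python
def matmul(a, b):
    # Plain-Python matrix product of two matrices stored as lists of rows.
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def iternorm(x, iters=5, eps=1e-5):
    """Whiten x (d channels x m samples) IterNorm-style (simplified sketch).

    Centers each channel, builds the covariance, and approximates its
    inverse square root with Newton's iteration on the trace-normalized
    covariance. Grouping, affine parameters and running stats are omitted.
    """
    d, m = len(x), len(x[0])
    # Centering: subtract each channel's mean.
    xc = [[v - sum(row) / m for v in row] for row in x]
    # Covariance: Sigma = (1/m) Xc Xc^T + eps * I.
    xt = [list(col) for col in zip(*xc)]
    sigma = [[s / m + (eps if i == j else 0.0) for j, s in enumerate(r)]
             for i, r in enumerate(matmul(xc, xt))]
    # Trace normalization puts eigenvalues in (0, 1] so the iteration converges.
    tr = sum(sigma[i][i] for i in range(d))
    sigma_n = [[v / tr for v in r] for r in sigma]
    # Newton's iteration: P_k = 0.5 * (3 P_{k-1} - P_{k-1}^3 Sigma_N), P_0 = I.
    p = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
    for _ in range(iters):
        p3s = matmul(matmul(matmul(p, p), p), sigma_n)
        p = [[0.5 * (3 * p[i][j] - p3s[i][j]) for j in range(d)]
             for i in range(d)]
    # Whitening matrix: Sigma^{-1/2} is approximately P_T / sqrt(tr(Sigma)).
    w = [[v / tr ** 0.5 for v in r] for r in p]
    return matmul(w, xc)
```

The output's covariance approaches the identity as `iters` grows. Very small eigenvalues of the normalized covariance need more iterations.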

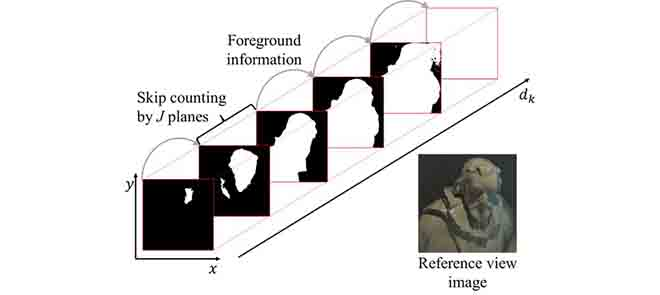

Title: Occlusion Handling by Successively Excluding Foregrounds for Light Field Depth Estimation Based on Foreground-Background Separation

Authors: Jae Young Lee, Rae-Hong Park, Junmo Kim

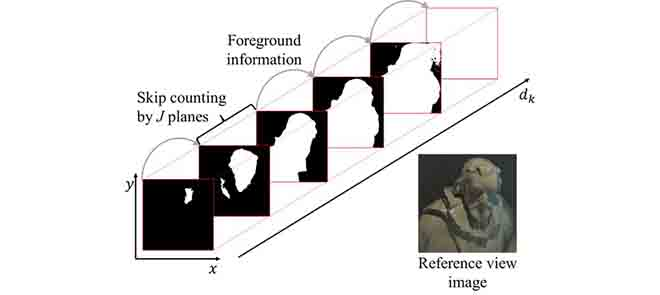

This paper proposes a depth from light field (DFLF) method that specifically deals with occlusion based on foreground-background separation (FBS). FBS-based methods infer disparity maps by accumulating binary maps that classify each pixel as foreground or background. Although the occlusion problem has been widely studied for cost-based methods, it has not yet been sufficiently addressed for FBS-based methods. We found that errors around occlusion boundaries in the disparity maps produced by FBS-based methods arise from foregrounds fattened by the light field reparameterization. To avoid fattened foregrounds, the foreground maps inferred in the front region along the disparity axis can be utilized in the back region during three-dimensional volume construction, which corresponds to cost volume construction in cost-based methods. FBS-based methods scan from front to back. Combined with this scanning, successively excluding the inferred foreground maps can effectively reduce errors around occlusion boundaries in the resulting disparity maps. On synthetic and real light field (LF) images, the proposed method shows reasonable performance compared with existing methods and better performance than existing FBS-based methods.
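The sketch below shows binary foreground maps being accumulated during front-to-back scanning. It is a toy that assumes one scalar disparity per pixel and uniformly spaced candidate planes. A hypothetical `classify` callback stands in for the FBS network. Here, exclusion only stops already-foreground pixels from being re-evaluated at planes further back. This simplifies how the paper reuses foreground maps during volume construction.

```python
def fbs_disparity(classify, planes, n_pixels):
    """Accumulate binary foreground maps into a per-pixel disparity.

    planes: candidate disparities, uniformly spaced, ordered front to back
    (largest first). classify(plane, active) is a hypothetical stand-in for
    the FBS network: it returns {pixel: bool} (True = foreground, i.e. in
    front of the plane) for the still-active pixels only.
    """
    step = planes[0] - planes[1]           # assumed uniform plane spacing
    active = set(range(n_pixels))          # pixels not yet labeled foreground
    count = [0] * n_pixels                 # accumulated binary foreground maps
    for plane in planes:                   # front-to-back scanning
        labels = classify(plane, active)
        # Successive exclusion: foreground pixels leave the active set and are
        # treated as foreground for every plane further back.
        active -= {p for p in active if labels[p]}
        for p in range(n_pixels):
            count[p] += int(p not in active)
    # A pixel counted c times first became foreground at plane index len - c.
    return [planes[-1] + step * (c - 1) if c else None for c in count]
```

With an oracle classifier that compares ground-truth disparities against each plane, the sketch recovers those disparities exactly. Pixels behind every plane stay unassigned (`None`).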

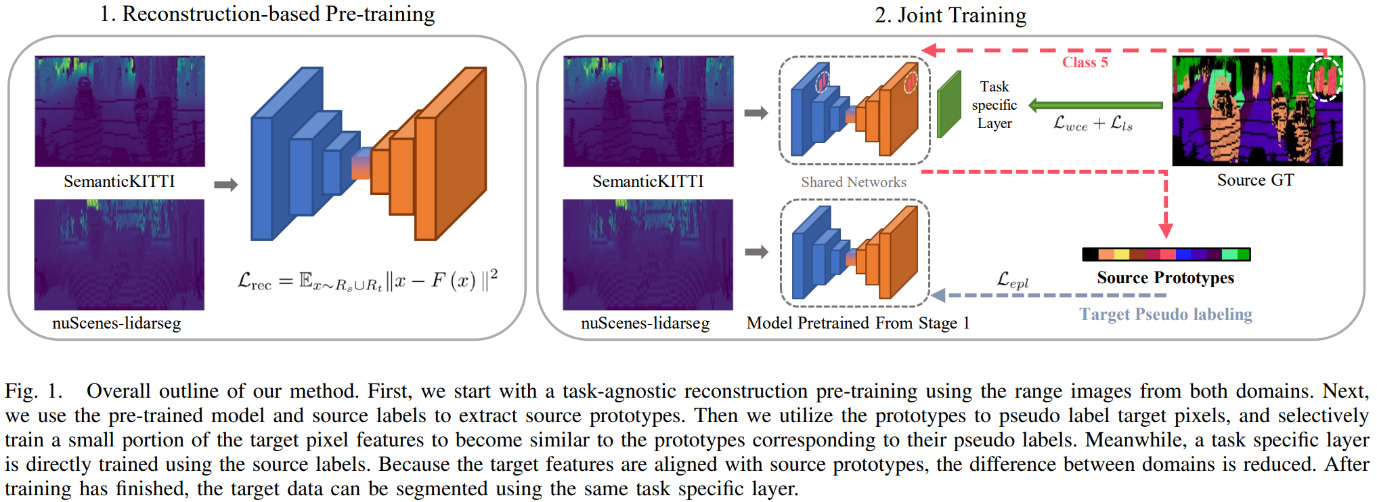

Title: Enhanced Prototypical Learning for Unsupervised Domain Adaptation in LiDAR Semantic Segmentation

Authors: Eojindl Yi, Juyoung Yang and Junmo Kim

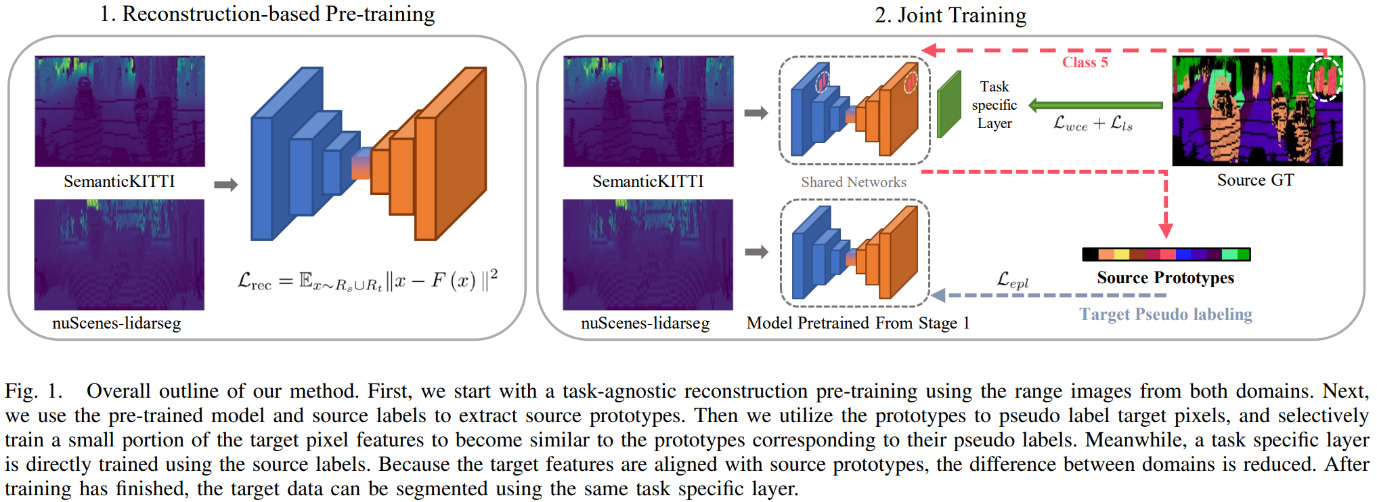

Abstract: In this paper, we propose an effective and efficient range image-based method for unsupervised domain adaptation (UDA) in LiDAR segmentation. The method uses class prototypes from the source domain to pseudo-label target domain pixels. This research direction has shown good performance in UDA for natural image semantic segmentation. Such approaches have not been considered for LiDAR scans because of the severe domain shift and the lack of a pre-trained feature extractor in the LiDAR segmentation setup. However, we show that proper strategies are sufficient to enable the use of prototypical approaches. These include reconstruction-based pre-training, enhanced prototypes, and selective pseudo-labeling based on distance to prototypes. We evaluate our method on recently proposed LiDAR segmentation UDA scenarios, where it achieves remarkable performance among contemporary methods.
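The sketch below illustrates prototype-based pseudo-labeling with distance-based selection. Three choices are assumptions made for illustration: a plain per-class mean as the prototype, Euclidean distance, and a single global `max_dist` threshold. The abstract does not specify the paper's enhanced prototypes or its actual selection rule.

```python
import math


def prototypes(features, labels):
    """Class prototype = mean feature of labeled source pixels (assumed)."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in s] for y, s in sums.items()}


def pseudo_label(target_features, protos, max_dist):
    """Nearest-prototype pseudo-labels; pixels far from every prototype
    are left unlabeled (None) instead of receiving a noisy label."""
    out = []
    for f in target_features:
        cls, d = min(((y, math.dist(f, p)) for y, p in protos.items()),
                     key=lambda t: t[1])
        out.append(cls if d <= max_dist else None)
    return out
```

Only the selected target pixels would then be used as pseudo-labeled training data. The ambiguous ones are skipped.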

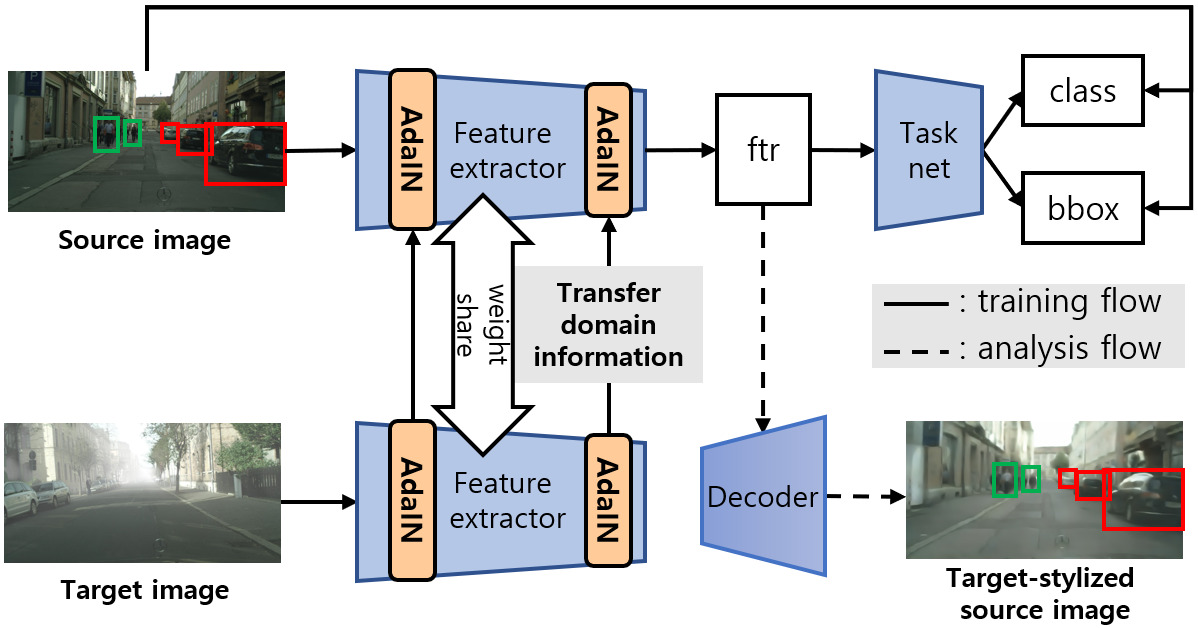

Title: Target-style-aware Unsupervised Domain Adaptation for Object Detection

Authors: Woo-han Yun, ByungOk Han, Jaeyeon Lee, Jaehong Kim, and Junmo Kim

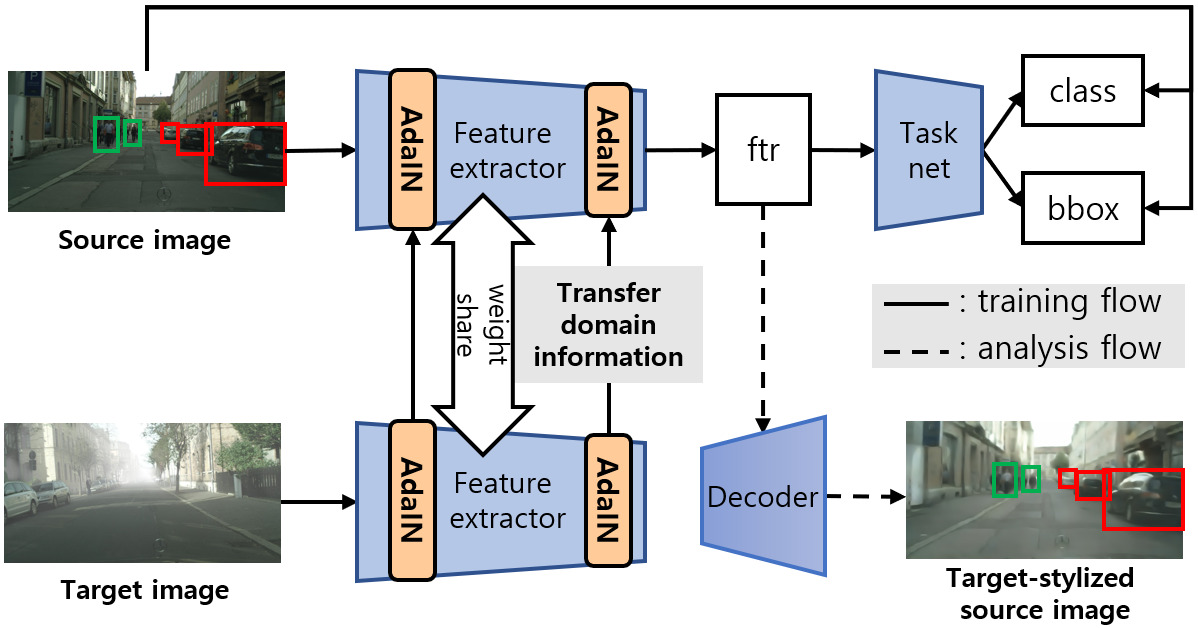

Vision modules running on mobility platforms, such as robots and cars, often face challenging situations such as domain shift, in which the distributions of training (source) data and test (target) data differ. Domain shift is caused by several variation factors, such as style, camera viewpoint, object appearance, object size, backgrounds, and scene layout. In this work, we propose an object detection training framework for unsupervised domain-style adaptation. The proposed framework transfers target-style information to source samples and simultaneously trains the detection network on these target-stylized source samples in an end-to-end manner. The detection network can therefore learn the target domain from them. The style is extracted from object areas obtained using pseudo-labels, so that it reflects the style of the object areas more than that of irrelevant backgrounds. We empirically verify that the proposed method improves detection accuracy in diverse domain shift scenarios using the Cityscapes, FoggyCityscapes, Sim10k, BDD100k, PASCAL, and Watercolor datasets.
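A common way to represent and transfer style is to match channel-wise feature means and standard deviations (AdaIN). The sketch below assumes that technique, which the abstract does not name. It computes the target statistics only over pixels inside a mask, which would be derived from pseudo-labeled object boxes. The flat list-of-channels layout and the function names are hypothetical.

```python
import statistics


def masked_style(feat, mask):
    """Per-channel (mean, std) over pixels where mask is True.

    feat: list of channels, each a flat list of pixel values.
    mask: flat list of booleans marking pseudo-labeled object pixels.
    """
    style = []
    for ch in feat:
        vals = [v for v, m in zip(ch, mask) if m]
        style.append((statistics.fmean(vals), statistics.pstdev(vals)))
    return style


def adain(src_feat, style, eps=1e-5):
    """Re-normalize each source channel to the target (mean, std)."""
    out = []
    for ch, (mu_t, sd_t) in zip(src_feat, style):
        mu_s, sd_s = statistics.fmean(ch), statistics.pstdev(ch)
        out.append([(v - mu_s) / (sd_s + eps) * sd_t + mu_t for v in ch])
    return out
```

Restricting the statistics to object pixels keeps large background regions from dominating the transferred style.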

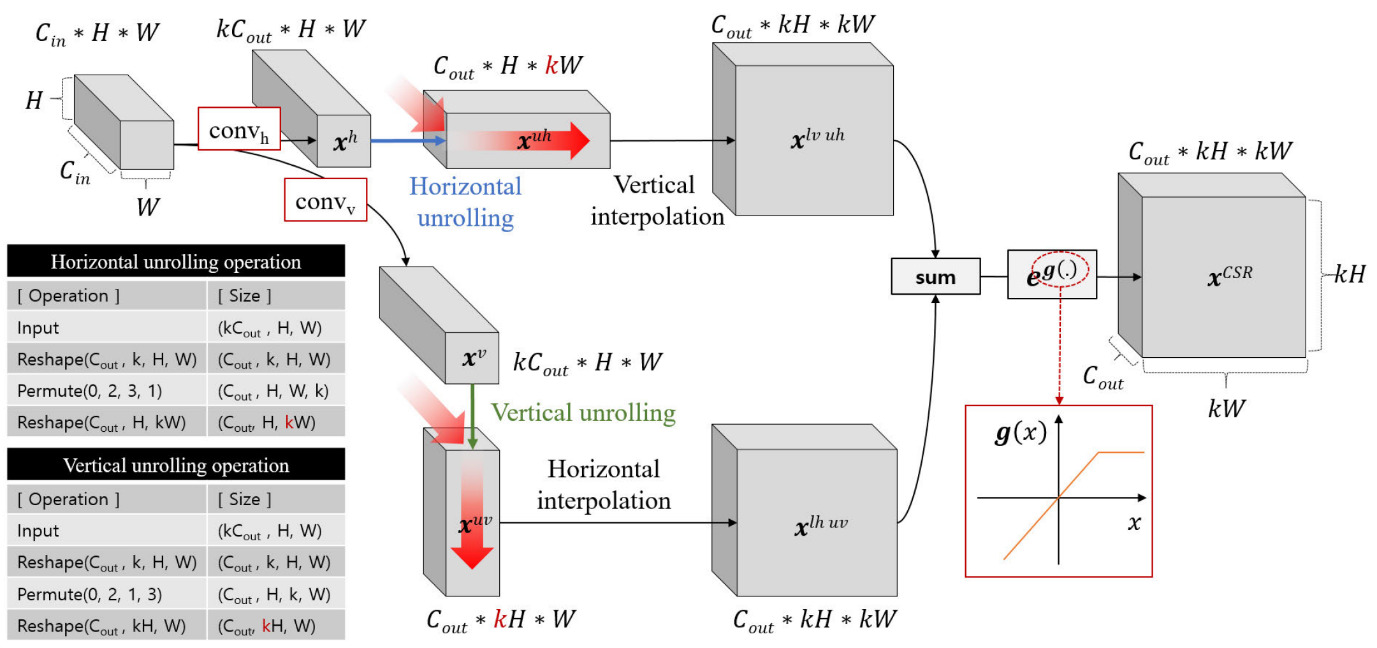

Title: Cocktail Glass Network: Fast Depth Estimation Using Channel to Space Unrolling

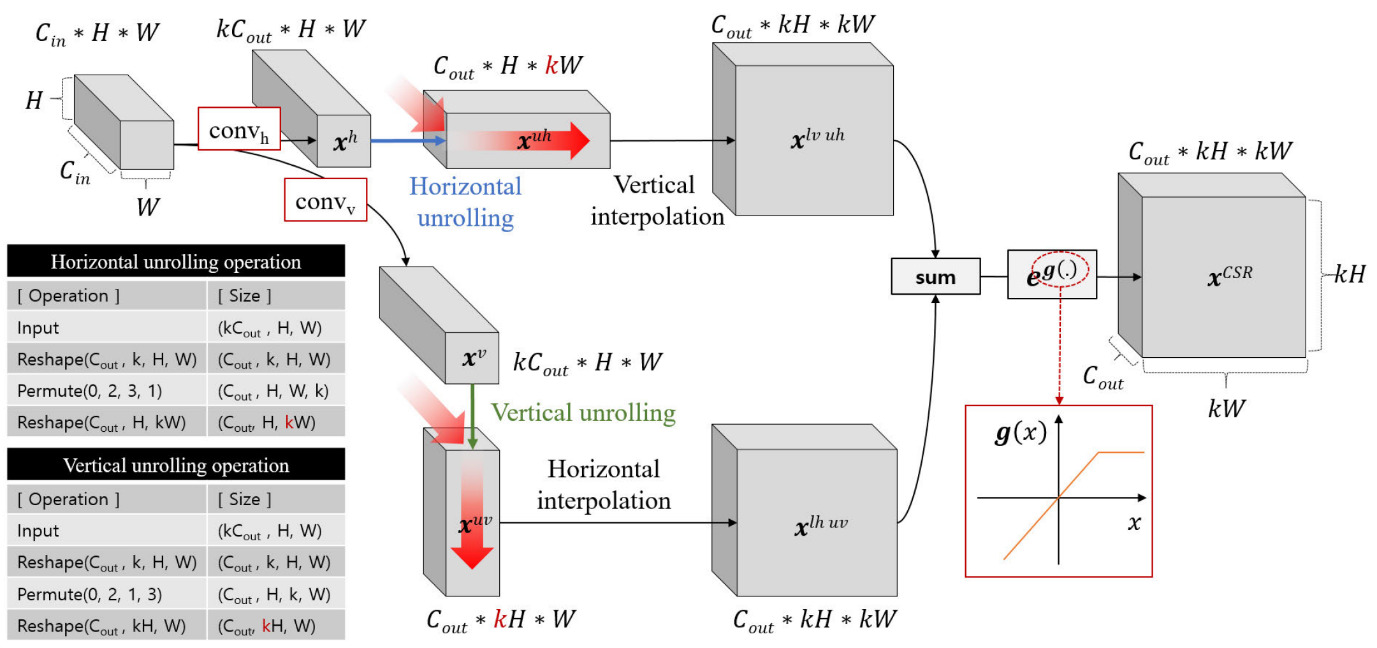

Authors: Jung-Jae Yu, Jong-Gook Ko, and Junmo Kim

Depth estimation from a single input image can be used in applications such as robotics and autonomous driving. Recently, depth-estimation networks with UNet encoder/decoder structures have been widely used. In these decoders, operations are repeated to gradually increase the image resolution while decreasing the channel size. If upsampling to a high magnification could be processed at once, the amount of computation in the decoder could be dramatically reduced. To achieve this, we propose a new network structure, the cocktail glass network. In this network, the convolution layers in the decoder are reduced, and a novel fast upsampling method called channel-to-space unrolling converts thick channel data into high-resolution data. The proposed method can be implemented easily with simple reshaping operations, so it is suitable for reducing the computation of depth-estimation networks. Experimental results on the NYU V2 and KITTI datasets demonstrate that the proposed method halves the amount of computation in the decoder while maintaining the same level of accuracy. It can be used in both lightweight and large-model-capacity networks.
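Channel-to-space unrolling only moves data from channels into spatial positions and performs no arithmetic, which is why it is cheap. The sketch below assumes the same index layout as PyTorch's `PixelShuffle`: each group of `r*r` channels becomes an `r x r` block of pixels. The cocktail glass network's exact channel ordering and the function name are assumptions.

```python
def channel_to_space(x, r):
    """Unroll x of shape (C*r*r, H, W) into (C, H*r, W*r).

    Channel k = c*r*r + i*r + j at location (h, w) moves to output pixel
    (h*r + i, w*r + j) of channel c (PixelShuffle-style layout, assumed).
    """
    cr2, h, w = len(x), len(x[0]), len(x[0][0])
    assert cr2 % (r * r) == 0, "channel count must be divisible by r*r"
    c = cr2 // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c)]
    for k in range(cr2):
        ci, rem = divmod(k, r * r)
        i, j = divmod(rem, r)  # position inside the r x r output block
        for y in range(h):
            for z in range(w):
                out[ci][y * r + i][z * r + j] = x[k][y][z]
    return out
```

For example, four 1x1 channels `[[[1]], [[2]], [[3]], [[4]]]` with `r=2` become one 2x2 map `[[1, 2], [3, 4]]`. A thick low-resolution tensor thus reaches full resolution in a single step.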

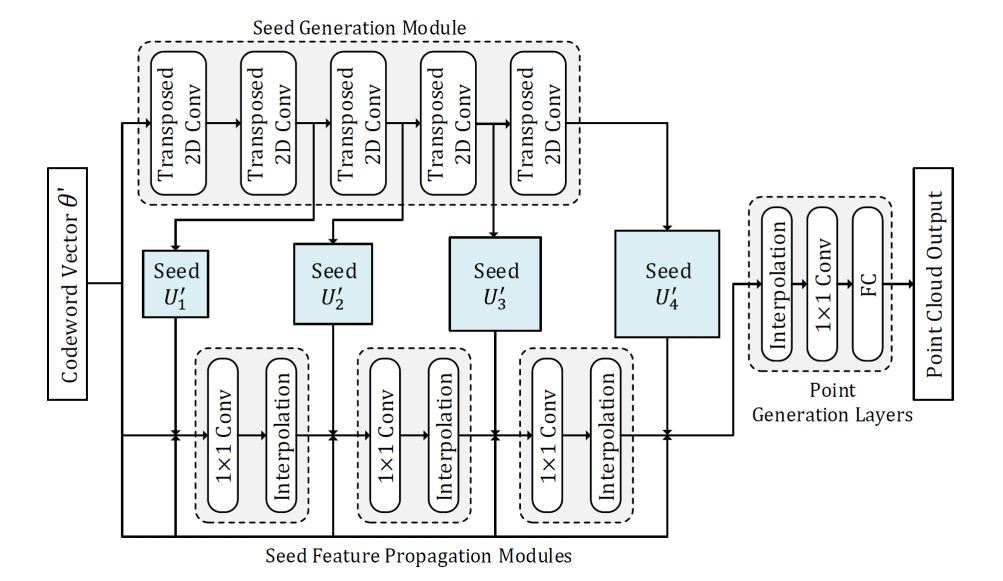

Title: Progressive Seed Generation Auto-encoder for Unsupervised Point Cloud Learning

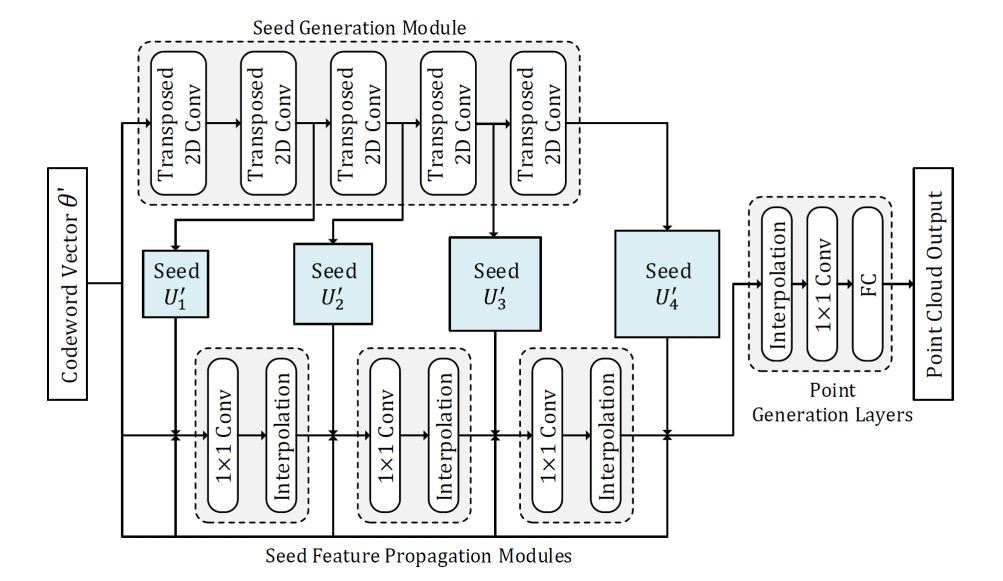

Authors: Juyoung Yang, Pyunghwan Ahn, Doyeon Kim, Haeil Lee and Junmo Kim

With the development of 3D scanning technologies, 3D vision tasks have become a popular research area. Owing to the large amount of data acquired by sensors, unsupervised learning is essential for understanding and utilizing point clouds without an expensive annotation process. In this paper, we propose a novel framework and an effective auto-encoder architecture named “PSG-Net” for reconstruction-based learning of point clouds. Unlike existing studies that use fixed or random 2D points, our framework generates input-dependent point-wise features for the latent point set. PSG-Net uses the encoded input to produce point-wise features through the seed generation module. It then extracts richer features in multiple stages with gradually increasing resolution by progressively applying the seed feature propagation module. We demonstrate the effectiveness of PSG-Net experimentally. It achieves state-of-the-art performance in point cloud reconstruction and unsupervised classification, and performance comparable to counterpart methods in supervised completion.
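Reconstruction-based auto-encoders for point clouds need a loss that compares unordered point sets. The abstract does not say which loss PSG-Net uses. The symmetric Chamfer distance below is a common choice and is shown only as an assumed example. Its brute-force nearest-neighbor search is adequate only for small sets.

```python
import math


def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a and b.

    Each direction averages the squared distance from every point to its
    nearest neighbor in the other set; the two directions are summed.
    Brute force, O(len(a) * len(b)).
    """
    def one_way(src, dst):
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)
```

Because the loss matches points by nearest neighbor, it does not depend on the order in which the decoder emits points.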

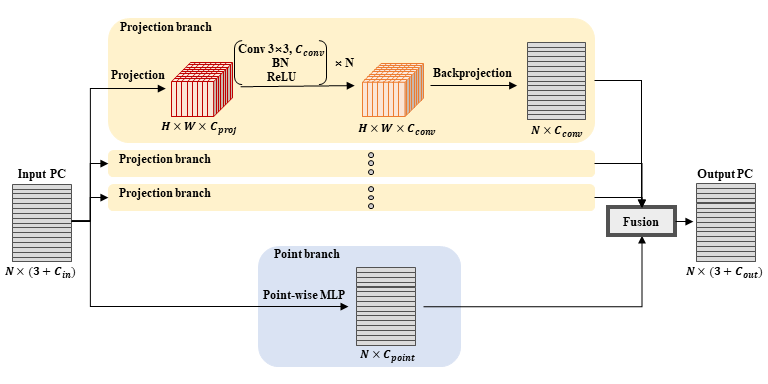

Title: Projection-based Point Convolution for Efficient Point Cloud Segmentation

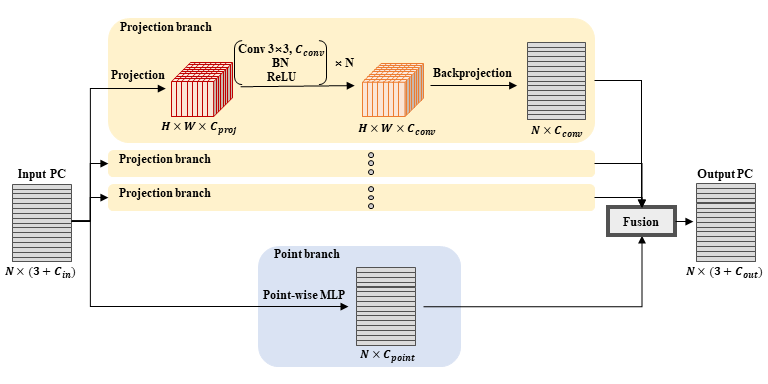

Authors: Pyunghwan Ahn, Juyoung Yang, Eojindl Yi, Chanho Lee, and Junmo Kim

Abstract: Point cloud understanding has recently gained considerable interest following the development of 3D scanning devices and the accumulation of large-scale 3D data. Most point cloud processing algorithms can be classified as either point-based or voxel-based methods. Both have severe limitations in processing time, memory, or both. To overcome these limitations, we propose projection-based point convolution (PPConv), a point convolutional module that uses 2D convolutions and multi-layer perceptrons (MLPs) as its components. Because PPConv uses neither point-based nor voxel-based convolutions, it enables fast point cloud processing. We demonstrate the efficiency of PPConv in terms of the trade-off between inference time and segmentation performance on the S3DIS and ShapeNetPart datasets. PPConv is the most efficient of the compared methods.
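One way to replace point-based or voxel-based convolutions with 2D operations is to project points onto a plane, process the resulting 2D grid, and gather the result back to the points. The toy sketch below follows that idea. Several choices in it are assumptions: the projection plane (xy), the grid and cell sizes, per-cell feature averaging, and a fixed 3x3 mean filter standing in for a learned 2D convolution. PPConv's actual projections and its MLP branch are not reproduced.

```python
def project_conv_gather(points, feats, grid, cell):
    """Project points to an xy grid, filter in 2D, and gather back per point.

    points: list of (x, y, z); feats: one scalar feature per point.
    grid: number of cells per side; cell: cell size (hypothetical values).
    """
    # 1) Projection: scatter-average point features into 2D cells (z dropped).
    total = [[0.0] * grid for _ in range(grid)]
    count = [[0] * grid for _ in range(grid)]
    idx = []
    for (x, y, _z), f in zip(points, feats):
        i = min(max(int(x // cell), 0), grid - 1)
        j = min(max(int(y // cell), 0), grid - 1)
        total[i][j] += f
        count[i][j] += 1
        idx.append((i, j))
    img = [[total[i][j] / count[i][j] if count[i][j] else 0.0
            for j in range(grid)] for i in range(grid)]

    # 2) 2D operation: a zero-padded 3x3 mean filter stands in for a 2D conv.
    def at(i, j):
        return img[i][j] if 0 <= i < grid and 0 <= j < grid else 0.0

    out = [[sum(at(i + di, j + dj) for di in (-1, 0, 1) for dj in (-1, 0, 1)) / 9
            for j in range(grid)] for i in range(grid)]
    # 3) Gather: each point reads the filtered value of its own cell.
    return [out[i][j] for i, j in idx]
```

The 2D grid has a fixed size regardless of how many points fall into it, so the cost of the 2D step does not grow with the number of points.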

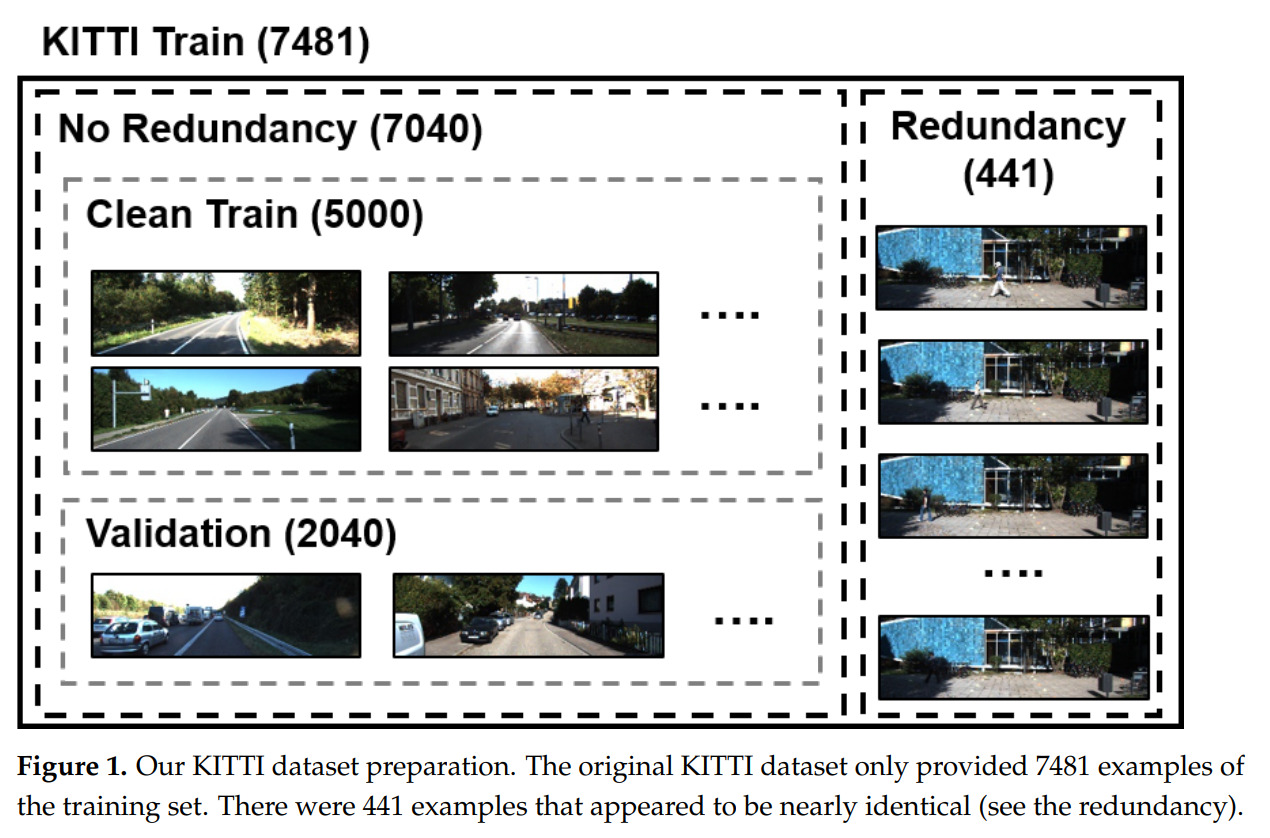

Title: Extending Contrastive Learning to Unsupervised Redundancy Identification (Applied Sciences 2022)

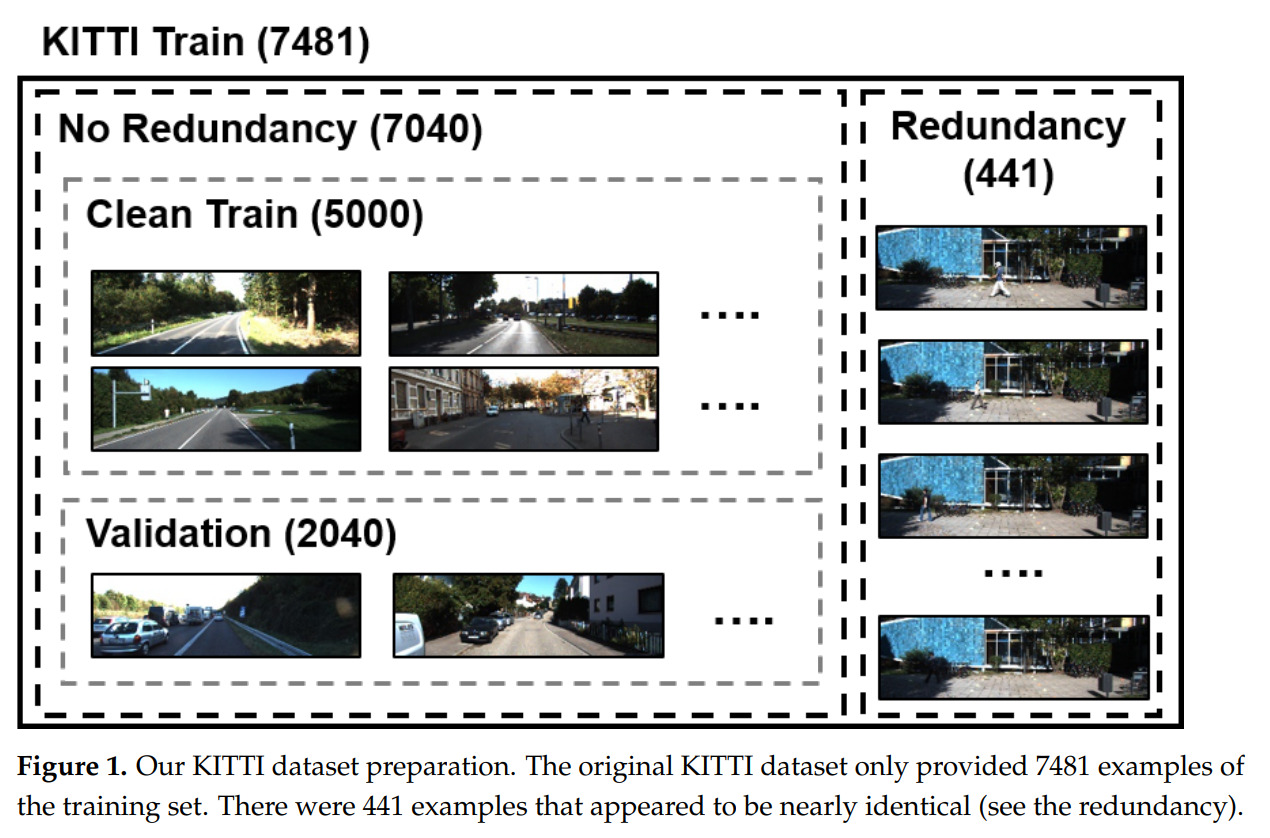

Authors: Jegonwoo Ju (KAIST), Heechul Jung (Kyungpook National University), Junmo Kim (KAIST)

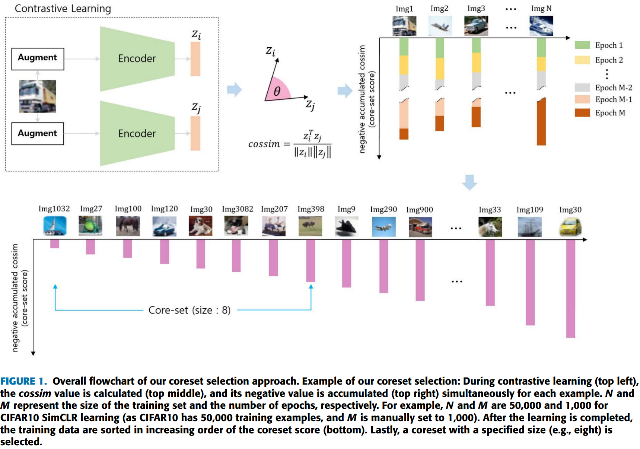

Abstract: Modern deep neural network (DNN)-based approaches have delivered great performance on computer vision tasks. However, their data-hungry nature entails a massive annotation cost. Hence, given a fixed budget and unlabeled examples, improving the quality of the examples to be annotated is a sensible step toward good generalization of DNNs. One key issue that can hurt example quality is redundancy, in which many examples exhibit a similar visual context (e.g., the same background). Redundant examples contribute little to performance but still incur annotation cost. Identifying redundancy before annotation is therefore key to avoiding unnecessary cost. In this work, we show that the coreset score based on cosine similarity (cossim) is effective for identifying redundant examples. The collective gradient magnitude over redundant examples is large compared with that of other examples. As a result, contrastive learning first reduces the loss on the redundant examples. Consequently, cossim for the redundancy set is high (i.e., its coreset score is low). We first view redundancy identification in terms of gradient magnitude. In this way, we effectively removed redundant examples from two datasets (KITTI, BDD10K), which led to better performance in detection and semantic segmentation.

Title: Extending Contrastive Learning to Unsupervised Coreset Selection (IEEE Access 2022)

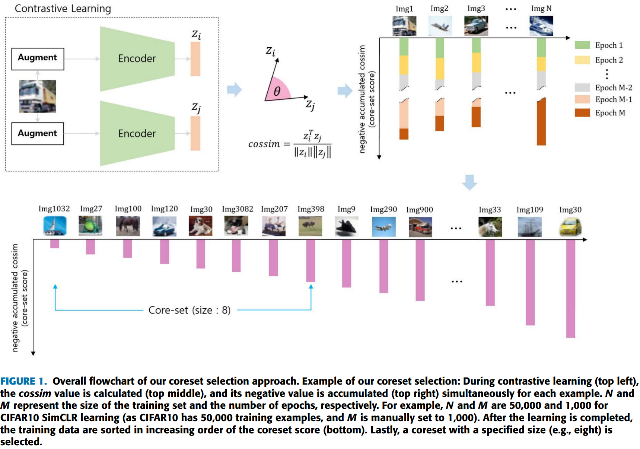

Authors: Jegonwoo Ju (KAIST), Heechul Jung (Kyungpook National University), Yoonju Oh (Kyungpook National University), Junmo Kim (KAIST)

Abstract: Self-supervised contrastive learning offers a means of learning informative features from a pool of unlabeled data. In this paper, we investigate another useful application of it: a coreset selection method that requires no labels at all. Contrastive learning is one of several self-supervised methods; it was proposed recently and has consistently delivered the highest performance. This prompted us to choose two leading contrastive learning methods: the simple framework for contrastive learning of visual representations (SimCLR) and the momentum contrastive (MoCo) learning framework. We calculated the cosine similarity of each example at every epoch over the entire contrastive learning process and accumulated these values to obtain the coreset score. Our assumption was that a sample with low similarity is likely to belong to the coreset. Existing coreset selection methods require labels; our approach avoids the associated human annotation cost. With a subset size of 30%, our unsupervised coreset selection improved on a randomly selected subset by 1.25% (CIFAR10), 0.82% (SVHN), and 0.19% (QMNIST). Furthermore, our results are comparable to those of existing supervised coreset selection methods. We also compared against the supervised coreset selection method based on forgetting events at a subset size of 30%. The differences were 0.81% on CIFAR10, −2.08% on SVHN (where the proposed method outperformed the existing method), and 0.01% on QMNIST. In addition, our approach remained robust even when the coreset selection model and the target model differed (e.g., ResNet18 as the selection model and ResNet101 as the target model).
Lastly, we obtained more concrete evidence that our coreset examples are highly informative by showing the performance gap between coreset and non-coreset samples in a coreset cross test. Each result is a pair of accuracies: (training on the coreset and testing on the non-coreset, training on the non-coreset and testing on the coreset). With a subset size of 30%, the pairs were (94.27%, 67.39%) for CIFAR10, (98.24%, 83.30%) for SVHN, and (99.89%, 93.07%) for QMNIST.
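The coreset score described above can be sketched as follows. The sketch assumes that each example's cosine similarity is measured between the embeddings of its two augmented views, as in SimCLR- or MoCo-style training, and summed over epochs. The function names and the embedding format are hypothetical. In practice, the embeddings would come from the contrastive model at each epoch.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def coreset_scores(epochs):
    """Accumulate each example's cosine similarity across epochs.

    epochs: for each epoch, a list with one (z1, z2) pair per example,
    where z1 and z2 embed two augmented views (assumed setup).
    """
    scores = [0.0] * len(epochs[0])
    for pairs in epochs:
        for k, (z1, z2) in enumerate(pairs):
            scores[k] += cosine(z1, z2)
    return scores


def select_coreset(scores, fraction=0.3):
    """Lowest accumulated similarity first: these examples form the coreset.

    Returns (coreset indices, non-coreset indices), each sorted.
    """
    n = max(1, round(len(scores) * fraction))
    order = sorted(range(len(scores)), key=lambda k: scores[k])
    return sorted(order[:n]), sorted(order[n:])
```

The non-coreset indices are the complement used in the cross test. Examples with the highest accumulated similarity correspond to the redundant examples described in the previous abstract.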

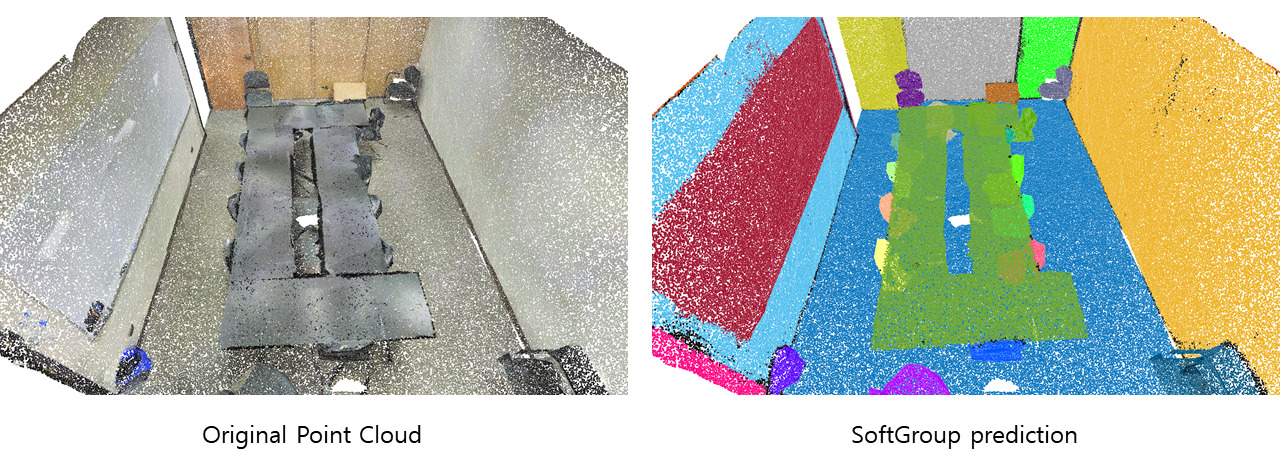

– Paper title: SoftGroup for 3D Instance Segmentation on Point Clouds

– Conference: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2022

– Presentation date/venue: 2022.6.21 (Tue) / New Orleans, Louisiana, USA

[From left: Prof. Chang D. Yoo (School of Electrical Engineering), Vu Van Thang (Ph.D. student), Kookhoi Kim (M.S. student)]

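Recently, 3D data has been used in many fields, such as autonomous driving, robot operation, and augmented reality. A 3D point cloud is data consisting of a set of points with 3D coordinates. In this work, we developed SoftGroup, a precise instance segmentation technique for 3D point clouds, one type of 3D data. Soft Grouping groups points based on multiple classes predicted for each point. This prevents single-class prediction errors from propagating into the instance segmentation results and achieves precise segmentation, improving precision by more than 8% over existing techniques. Point clouds contain more accurate 3D spatial information than photographs, and this technique can segment them. It is therefore expected to be widely used in fields that utilize 3D point clouds, such as autonomous driving, robotics, and augmented reality.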
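In soft grouping, a point may join the groups of every class it is likely to belong to, not only its top-scoring class. The sketch below illustrates that idea. The score threshold, the radius-based breadth-first clustering on raw coordinates, and the function name are assumptions for illustration. Any offset prediction or refinement stage is omitted.

```python
import math
from collections import deque


def soft_group(coords, scores, threshold, radius):
    """Group points per class; a point joins every class whose score
    reaches the threshold instead of only its argmax class.

    coords: list of point coordinates; scores: per point, a list of class
    scores. Returns a list of (class_id, sorted point indices) proposals.
    """
    proposals = []
    for c in range(len(scores[0])):
        members = [i for i, s in enumerate(scores) if s[c] >= threshold]
        unvisited = set(members)
        for seed in members:
            if seed not in unvisited:
                continue
            unvisited.discard(seed)
            group, queue = [], deque([seed])
            while queue:  # breadth-first search over neighbors within radius
                p = queue.popleft()
                group.append(p)
                near = [q for q in unvisited
                        if math.dist(coords[p], coords[q]) <= radius]
                for q in near:
                    unvisited.discard(q)
                    queue.append(q)
            proposals.append((c, sorted(group)))
    return proposals
```

For example, a point with scores 0.55 and 0.45 and a threshold of 0.3 is grouped under both classes. If its top-1 prediction were wrong, it could still end up in the correct instance.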