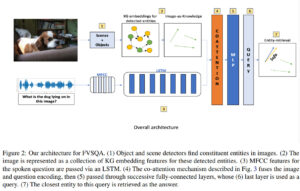

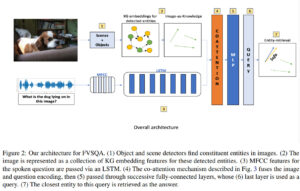

Although question answering has long been of research interest, its accessibility to users through a speech interface and its support for multiple languages have not been addressed in prior studies. Towards these ends, we present a new task and a synthetically generated dataset for Fact-based Visual Spoken-Question Answering (FVSQA). FVSQA is based on the FVQA dataset, which requires a system to retrieve an entity from Knowledge Graphs (KGs) to answer a question about an image. In FVSQA, the question is spoken rather than typed. Three sub-tasks are proposed: (1) speech-to-text based, (2) end-to-end, without speech-to-text as an intermediate component, and (3) cross-lingual, in which the question is spoken in a language different from that in which the KG is recorded. The end-to-end and cross-lingual tasks are the first to require world knowledge from a multi-relational KG as a differentiable layer in an end-to-end spoken language understanding task, hence the proposed reference implementation is called WorldlyWise (WoW). WoW is shown to perform end-to-end cross-lingual FVSQA at the same levels of accuracy across 3 languages: English, Hindi, and Turkish.

This paper defines fair principal component analysis (PCA) as minimizing the maximum mean discrepancy (MMD) between dimensionality-reduced conditional distributions of different protected classes. The incorporation of MMD naturally leads to an exact and tractable mathematical formulation of fairness with good statistical properties. We formulate the problem of fair PCA subject to MMD constraints as a non-convex optimization over the Stiefel manifold and solve it using the Riemannian Exact Penalty Method with Smoothing (REPMS; Liu and Boumal, 2019). Importantly, we provide local optimality guarantees and explicitly show the theoretical effect of each hyperparameter in practical settings, extending previous results. Experimental comparisons based on synthetic and UCI datasets show that our approach outperforms prior work in explained variance, fairness, and runtime.
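To make the fairness criterion concrete, the following minimal sketch estimates the squared MMD between two small samples, standing in for the dimensionality-reduced data of two protected classes. The RBF kernel, the `bandwidth` value, the biased (V-statistic) estimator, and the function names are all assumptions chosen for illustration; the abstract does not specify them.

```python
import math

def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two points given as lists of floats."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))

def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    def mean_kernel(a, b):
        total = sum(rbf_kernel(u, v, bandwidth) for u in a for v in b)
        return total / (len(a) * len(b))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)

# Toy projected samples for two hypothetical protected classes.
group_a = [[0.0, 0.1], [0.2, -0.1], [-0.1, 0.0]]
group_b = [[2.0, 2.1], [1.9, 2.2], [2.1, 1.8]]

same = mmd_squared(group_a, group_a)  # identical samples give zero discrepancy
diff = mmd_squared(group_a, group_b)  # well-separated samples give a large value
```

A projection would count as fair under this criterion when the discrepancy between the projected class-conditional samples is close to zero, as in the `same` case.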

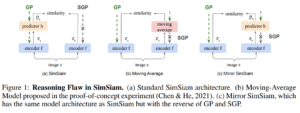

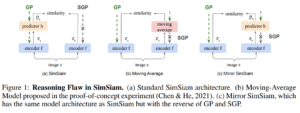

To avoid collapse in self-supervised learning (SSL), a contrastive loss is widely used but often requires a large number of negative samples. A recent work (Chen and He, 2021) has attracted significant attention for providing a minimalist simple Siamese (SimSiam) method that avoids collapse without negative samples while achieving competitive performance. However, how it avoids collapse without negative samples is not yet fully understood, and our investigation starts by revisiting the explanatory claims in the original SimSiam paper. After refuting these claims, we introduce vector decomposition for analyzing the collapse based on the gradient analysis of the l2-normalized representation vector. This yields a unified perspective on how negative samples and SimSiam alleviate collapse. Such a unified perspective is timely for understanding the recent progress in SSL.

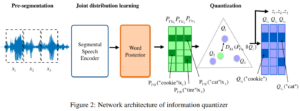

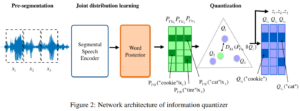

Phonemes are defined by their relationship to words: changing a phoneme changes the word. Learning a phoneme inventory with little supervision has been a longstanding challenge with important applications to under-resourced speech technology. In this paper, we bridge the gap between the linguistic and statistical definitions of phonemes and propose a novel neural discrete representation learning model for self-supervised learning of a phoneme inventory from raw speech and word labels. Under mild assumptions, we prove that the phoneme inventory learned by our approach converges to the true one with an exponentially low error rate. Moreover, in experiments on the TIMIT and Mboshi benchmarks, our approach consistently learns a better phoneme-level representation and achieves a lower error rate in a zero-resource phoneme recognition task than previous state-of-the-art self-supervised representation learning algorithms.

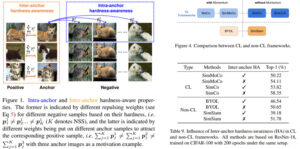

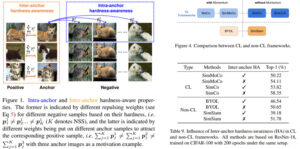

Contrastive learning (CL) is widely known to require many negative samples (65536 in MoCo, for instance), so the performance of a dictionary-free framework is often inferior because its negative sample size (NSS) is limited by its mini-batch size (MBS). To decouple the NSS from the MBS, a dynamic dictionary has been adopted in a large number of CL frameworks, among which arguably the most popular is the MoCo family. In essence, MoCo adopts a momentum-based queue dictionary, for which we perform a fine-grained analysis of its size and consistency. We point out that the InfoNCE loss used in MoCo implicitly attracts anchors to their corresponding positive samples with varying strengths of penalty, and we identify this inter-anchor hardness-awareness property as a major reason for the necessity of a large dictionary. Our findings motivate us to simplify MoCo v2 via the removal of its dictionary as well as its momentum. Based on an InfoNCE loss with the proposed dual temperature, our simplified frameworks, SimMoCo and SimCo, outperform MoCo v2 by a visible margin. Moreover, our work bridges the gap between CL and non-CL frameworks, contributing to a more unified understanding of these two mainstream frameworks in SSL.
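As a reference point for the loss discussed above, here is a minimal sketch of the standard single-temperature InfoNCE loss for one anchor. It does not implement the proposed dual temperature, whose form the abstract does not give; the function names and the `temperature` default are assumptions for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def l2_normalize(v):
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]

def info_nce(anchor, positive, negatives, temperature=0.2):
    """Standard InfoNCE loss for a single anchor (single temperature)."""
    q = l2_normalize(anchor)
    # Cosine similarities scaled by the temperature; the positive comes first.
    logits = [dot(q, l2_normalize(positive)) / temperature]
    logits += [dot(q, l2_normalize(n)) / temperature for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    m = max(logits)
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - logits[0]

anchor, positive = [1.0, 0.0], [0.9, 0.1]
easy_loss = info_nce(anchor, positive, [[-1.0, 0.0], [0.0, -1.0]])
hard_loss = info_nce(anchor, positive, [[1.0, 0.05], [0.95, 0.1]])
```

Negatives that lie close to the anchor (`hard_loss`) yield a larger loss than distant ones (`easy_loss`), which is one way to see why the loss weights samples by their hardness.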

Existing state-of-the-art 3D instance segmentation methods perform semantic segmentation followed by grouping. Hard predictions are made during semantic segmentation, so that each point is associated with a single class. However, the errors stemming from these hard decisions propagate into grouping, resulting in (1) low overlap between the predicted instances and the ground truth and (2) substantial false positives. To address these problems, this paper proposes SoftGroup, a 3D instance segmentation method that performs bottom-up soft grouping followed by top-down refinement. SoftGroup allows each point to be associated with multiple classes to mitigate the problems stemming from semantic prediction errors, and it suppresses false positive instances by learning to categorize them as background. Experimental results on different datasets and multiple evaluation metrics demonstrate the efficacy of SoftGroup. Its performance surpasses the strongest prior method by a significant margin of +6.2% on the ScanNet v2 hidden test set and +6.8% on S3DIS Area 5 in terms of AP50. SoftGroup is also fast, running at 345ms per scan with a single Titan X on the ScanNet v2 dataset.
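The difference between hard and soft semantic assignment can be sketched as follows. This is an illustrative assumption about how "associated with multiple classes" might be realized, using a score threshold; the `threshold` value, the function names, and the toy scores are hypothetical and not taken from the abstract.

```python
def hard_assign(scores):
    """One class per point: the index of the highest score."""
    return [max(range(len(s)), key=s.__getitem__) for s in scores]

def soft_assign(scores, threshold=0.2):
    """All classes whose score exceeds the threshold, for each point."""
    return [[c for c, p in enumerate(s) if p > threshold] for s in scores]

# Per-point class scores for three points and three classes (toy values).
scores = [
    [0.90, 0.05, 0.05],  # confident point
    [0.48, 0.47, 0.05],  # ambiguous between classes 0 and 1
    [0.10, 0.30, 0.60],  # leaning towards class 2, class 1 still plausible
]

hard = hard_assign(scores)
soft = soft_assign(scores)
```

Here `hard` is `[0, 0, 2]`, while `soft` is `[[0], [0, 1], [1, 2]]`: the ambiguous second point remains available to the grouping stage for both candidate classes instead of being committed to one.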

Title: Fine-Grained Multi-Class Object Counting

Abstract: Many animal species in the wild are at risk of extinction. To deal with this situation, ecologists have monitored the population changes of endangered species. However, the current wildlife monitoring method is extremely laborious as the animals are counted manually. Automated counting of animals by species can facilitate this work and open new avenues for ecological studies. However, to the best of our knowledge, few works and publicly available datasets address multi-class object counting, which is applicable to counting several animal species. In this paper, we propose a fine-grained multi-class object counting dataset, named KR-GRUIDAE, which contains the endangered red-crowned crane and white-naped crane in the family Gruidae. We also propose a specialized network for multi-class object counting and line segment density maps, and show their effectiveness by comparing against the results of existing crowd counting methods on the KR-GRUIDAE dataset.

Title: Weakly-Supervised Multiple Object Tracking via a Masked Center Point Warping Loss

Abstract: Multiple object tracking (MOT), a popular subject in computer vision with broad application areas, aims to detect and track multiple objects across an input video. However, recent learning-based MOT methods require strong supervision on both the bounding box and the ID of each object for every frame used during training, which raises the cost of obtaining labeled data. In this paper, we propose a weakly-supervised MOT framework that enables the accurate tracking of multiple objects while being trained without object ID ground truth labels. Our model is trained only with the bounding box information, using a novel masked warping loss that drives the network to indirectly learn how to track objects through a video. Specifically, valid object center points in the current frame are warped with the predicted offset vector and enforced to be equal to the valid object center points in the previous frame. With this approach, we obtain an MOT accuracy on par with that of the state-of-the-art fully supervised MOT models, which use both the bounding boxes and object IDs as ground truth labels, on the MOT17 dataset.
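The warping idea can be illustrated with a toy, non-differentiable sketch: current-frame centers are shifted by their predicted offsets, rasterized to a binary mask, and compared with the mask of previous-frame centers, so no object IDs are needed. The grid rasterization, the normalization, and all names are assumptions for illustration only; the abstract does not describe the actual loss at this level of detail.

```python
def center_mask(points, height, width):
    """Binary grid with 1.0 at each in-frame center; out-of-frame points are dropped."""
    mask = [[0.0] * width for _ in range(height)]
    for x, y in points:
        xi, yi = round(x), round(y)
        if 0 <= xi < width and 0 <= yi < height:
            mask[yi][xi] = 1.0
    return mask

def masked_warp_loss(curr_centers, offsets, prev_centers, height, width):
    """Mismatch between warped current centers and previous centers (no IDs used)."""
    warped = [(x + dx, y + dy) for (x, y), (dx, dy) in zip(curr_centers, offsets)]
    warped_mask = center_mask(warped, height, width)
    prev_mask = center_mask(prev_centers, height, width)
    diff = sum(
        abs(w - p)
        for w_row, p_row in zip(warped_mask, prev_mask)
        for w, p in zip(w_row, p_row)
    )
    return diff / max(len(prev_centers), 1)

curr = [(5.0, 5.0), (10.0, 3.0)]
prev = [(11.0, 4.0), (3.0, 5.0)]  # listed in a different order: no ID correspondence
good_offsets = [(-2.0, 0.0), (1.0, 1.0)]
zero_offsets = [(0.0, 0.0), (0.0, 0.0)]

good = masked_warp_loss(curr, good_offsets, prev, height=8, width=16)
bad = masked_warp_loss(curr, zero_offsets, prev, height=8, width=16)
```

With correct offsets the warped centers land exactly on the previous-frame centers and the mismatch is zero; with zero offsets every center is misplaced. A trainable version would need a differentiable comparison in place of the rounding used here.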

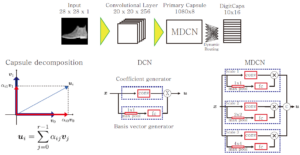

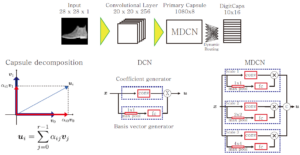

Title: A Parameter Efficient Multi-Scale Capsule Network

Abstract: Capsule networks consider spatial relationships in an input image. The relationship-based feature propagation in capsule networks shows promising results. However, the large number of trainable parameters limits their widespread use. In this paper, we propose the Decomposed Capsule Network (DCN) to reduce the number of training parameters in the primary capsule generation stage. Our DCN represents a capsule as a combination of basis vectors. Generating basis vectors and their coefficients notably reduces the total number of training parameters. Moreover, we introduce an extension of the DCN architecture, named Multi-scale Decomposed Capsule Network (MDCN). The MDCN architecture integrates features from multiple scales to synthesize capsules with fewer parameters. Our proposed networks show better performance on the Fashion-MNIST and CIFAR-10 datasets with fewer parameters than the original network.
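The decomposition can be sketched as below, assuming that "combination" means a linear combination of shared basis vectors with per-capsule coefficients. The function name, the shapes, and the toy values are hypothetical; how the network actually generates the basis vectors and coefficients is not described in the abstract.

```python
def compose_capsules(basis, coefficients):
    """Build each capsule as a linear combination of shared basis vectors."""
    dim = len(basis[0])
    return [
        [sum(c * b[d] for c, b in zip(coeffs, basis)) for d in range(dim)]
        for coeffs in coefficients
    ]

# Two shared basis vectors of dimension 4 (toy values).
basis = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
]
# One coefficient per basis vector for each of three capsules.
coefficients = [
    [2.0, 0.0],
    [1.0, -1.0],
    [0.5, 0.5],
]

capsules = compose_capsules(basis, coefficients)
```

Producing N capsules of dimension D directly requires N·D values, whereas the decomposed form requires K·D + N·K values for K basis vectors. The toy sizes above are too small to show a saving, but the decomposed count becomes the smaller one once N is large relative to K.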

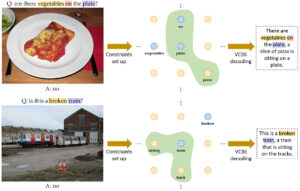

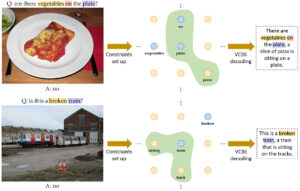

Title: Understanding VQA for Negative Answers through Visual and Linguistic Inference

Abstract: In order to make Visual Question Answering (VQA) explainable, previous studies not only visualize the attended region of a VQA model, but also generate textual explanations for its answers. However, when the model’s answer is “no,” existing methods have difficulty in revealing detailed arguments that lead to that answer. In addition, previous methods are insufficient to provide logical bases when the question requires common sense to answer. In this paper, we propose a novel textual explanation method to overcome the aforementioned limitations. First, we extract keywords that are essential to infer an answer from a question. Second, we utilize a novel Variable-Constrained Beam Search (VCBS) algorithm to generate explanations that best describe the circumstances in images. Furthermore, if the answer to the question is “yes” or “no,” we apply Natural Language Inference (NLI) to determine whether the content of the question can be inferred from the explanation using common sense. Our user study, conducted on Amazon Mechanical Turk (MTurk), shows that our proposed method generates more reliable explanations than previous methods. Moreover, by modifying the VQA model’s answer through the output of the NLI model, we show that VQA performance increases by 1.1% over the original model.