Title: An Optimized Design Technique of Low-bit Neural Network Training for Personalization on IoT Devices

Authors: Seung-Kyu Choi, Jae-Kang Shin, Yeong-Jae Choi, and Lee-Sup Kim

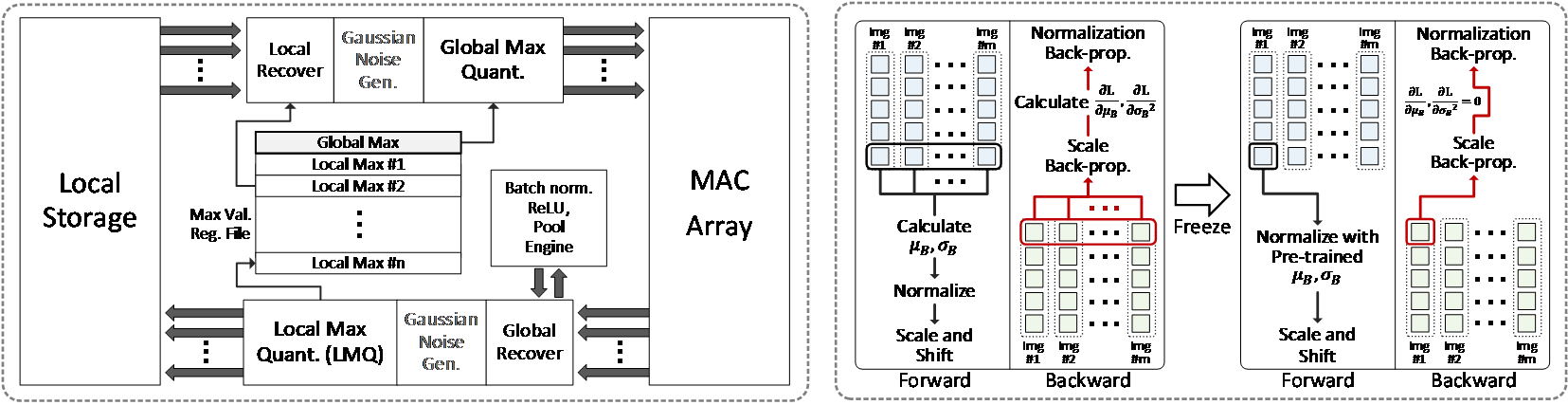

Personalization by incremental learning has become essential for IoT devices to enhance the performance of deep learning models trained on global datasets. To avoid massive transmission traffic in the network, learning must be performed on the device itself. We propose a software/hardware co-design technique that builds an energy-efficient low-bit trainable system: (1) software optimizations by local low-bit quantization and computation freezing to minimize the on-chip storage requirement and computational complexity, and (2) hardware design of a bit-flexible multiply-and-accumulate (MAC) array that shares the same resources between inference and training. Our scheme reduces on-chip buffer storage by 99.2% and achieves 12.8x higher peak energy efficiency compared to previous trainable accelerators.

Figure 1. Optimizations for performing multiply-and-accumulate operations for CNN training in fixed-point-based MAC units
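
To make the idea of low-bit fixed-point MAC operations more concrete, the following is a minimal sketch, not the authors' implementation. It assumes that "local" quantization means each small block of values shares its own power-of-two scale, so that tensors with a wide dynamic range (as is typical of training gradients) can still be stored as low-bit integers. The function names, the block size of 4, and the 8-bit default are all hypothetical choices made for illustration.

```python
import math

def quantize_block(values, bits=8):
    """Quantize one block to signed `bits`-bit integers.

    Returns (integers, exponent); each value is approximated by
    integer * 2**exponent. The shared power-of-two scale per block is an
    assumption of this sketch, not a detail taken from the paper.
    """
    qmax = 2 ** (bits - 1) - 1
    peak = max(abs(v) for v in values)
    if peak == 0.0:
        return [0] * len(values), 0
    # Smallest exponent whose scale keeps the block peak within [-qmax, qmax].
    exponent = math.ceil(math.log2(peak / qmax))
    scale = 2.0 ** exponent
    # Clamp to guard against rounding at the edge of the range.
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, exponent

def quantize_local(values, block_size=4, bits=8):
    """Split `values` into blocks and quantize each block independently."""
    return [
        quantize_block(values[i:i + block_size], bits)
        for i in range(0, len(values), block_size)
    ]

def fixed_point_dot(a_blocks, b_blocks):
    """Dot product using integer multiply-and-accumulate within each block.

    The two block exponents are applied once per block, after the integer
    accumulation, which mimics rescaling at the output of a MAC unit.
    """
    total = 0.0
    for (qa, ea), (qb, eb) in zip(a_blocks, b_blocks):
        acc = sum(x * y for x, y in zip(qa, qb))  # integer-only MAC
        total += acc * 2.0 ** (ea + eb)
    return total

if __name__ == "__main__":
    # Hypothetical data: one block of tiny values, one block of large values.
    a = [0.001, 0.002, -0.0015, 0.0005, 10.0, -20.0, 5.0, 2.5]
    b = [0.5, -0.25, 0.125, 1.0, 0.01, 0.02, -0.03, 0.04]
    exact = sum(x * y for x, y in zip(a, b))
    approx = fixed_point_dot(quantize_local(a), quantize_local(b))
    print("exact:", exact, "fixed-point:", approx)
```

Because each block carries its own exponent, the block of small values keeps its precision even though another block in the same vector is several orders of magnitude larger. A single global scale would round the small block to zero at 8 bits.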