Title: NAND-Net: Minimizing Computational Complexity of In-Memory Processing for Binary Neural Networks

Authors: Hyeon-Uk Kim, Jae-Hyeong Sim, Yeong-Jae Choi, Lee-Sup Kim

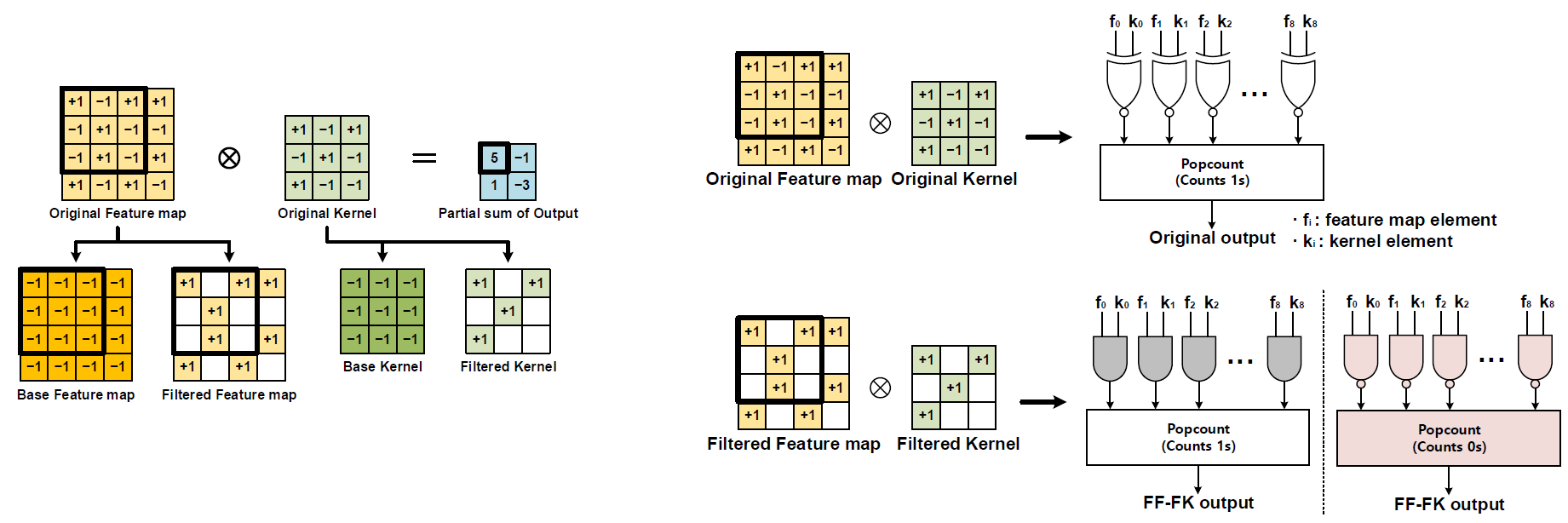

Popular deep learning technologies suffer from memory bottlenecks, which significantly degrade energy efficiency, especially in mobile environments. In-memory processing for binary neural networks (BNNs) has emerged as a promising way to mitigate such bottlenecks, and various works have been presented accordingly. However, their performance is severely limited by the overheads induced by modifying conventional memory architectures. To alleviate this performance degradation, we propose NAND-Net, an efficient architecture that minimizes the computational complexity of in-memory processing for BNNs. Based on the observation that BNNs contain many redundancies, we decompose each convolution into sub-convolutions and eliminate the unnecessary operations. In the remaining convolution, each binary multiplication (bitwise XNOR) is replaced by a bitwise NAND operation, which can be implemented without any bit cell modifications. This NAND operation also creates an opportunity to simplify the subsequent binary accumulations (popcounts): we reduce the operation cost of those popcounts by exploiting the data patterns of the NAND outputs. Compared to prior state-of-the-art designs, NAND-Net achieves 1.04-2.4x speedup and 34-59% energy saving, making it a suitable solution for efficient in-memory processing of BNNs.
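The abstract does not spell out the exact decomposition, so the following is only a minimal sketch of why a NAND can stand in for an XNOR in a binary dot product. It assumes the common encoding in which bit 1 represents +1 and bit 0 represents -1, and it uses a standard bit-counting identity rather than the authors' specific sub-convolution scheme. The function names `popcount`, `xnor_dot`, and `nand_dot` are hypothetical and chosen for illustration.

```python
def popcount(x: int) -> int:
    """Number of set bits in a non-negative integer."""
    return bin(x).count("1")


def xnor_dot(a: int, b: int, n: int) -> int:
    """Dot product of two n-element {-1,+1} vectors packed as bits (1 -> +1, 0 -> -1).

    Conventional BNN form: the product of two elements is +1 exactly when
    their bits agree, so dot = (#agreements) - (#disagreements).
    """
    mask = (1 << n) - 1
    xnor = ~(a ^ b) & mask
    return 2 * popcount(xnor) - n


def nand_dot(a: int, b: int, n: int) -> int:
    """Same dot product, computed from a bitwise NAND (illustrative assumption).

    Uses popcount(a AND b) = n - popcount(a NAND b) together with
    popcount(a XNOR b) = n - popcount(a) - popcount(b) + 2 * popcount(a AND b).
    popcount(b) depends only on the weights and popcount(a) only on the
    activations, so neither needs to be recomputed per weight/activation pair.
    """
    mask = (1 << n) - 1
    nand = ~(a & b) & mask
    return 5 * n - 2 * popcount(a) - 2 * popcount(b) - 4 * popcount(nand)
```

For example, with `n = 4`, `a = 0b1010`, and `b = 0b0110`, both `xnor_dot` and `nand_dot` return 0. In this sketch the only term that depends on the pairing of weights and activations is the NAND popcount; whether this matches the redundancy elimination used in NAND-Net is an assumption, not something the abstract confirms.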