Title: 1.32 TOPS/W Energy Efficient Deep Neural Network Learning Processor with Direct Feedback Alignment based Heterogeneous Core Architecture

Authors: Dong-Hyeon Han, Jin-Su Lee, Jin-Mook Lee and Hoi-Jun Yoo

An energy-efficient deep neural network (DNN) learning processor using direct feedback alignment (DFA) is proposed.

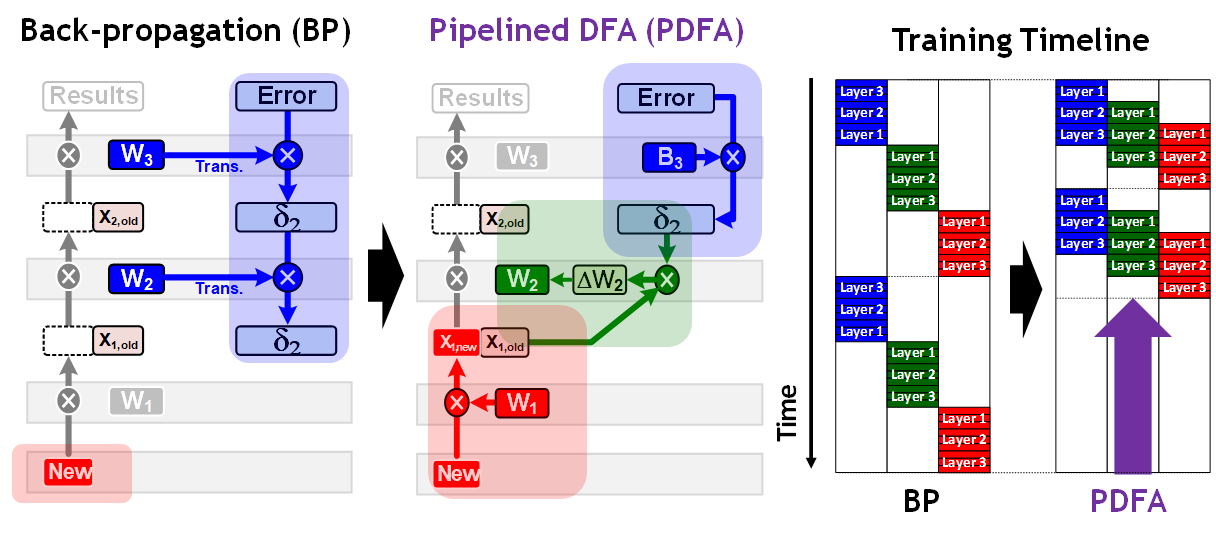

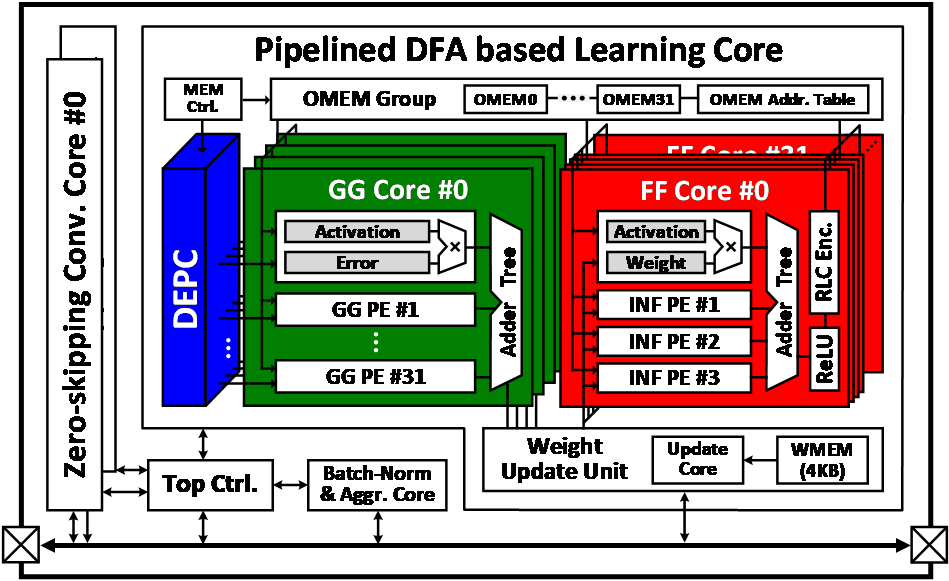

Using pipelined DFA (PDFA), the proposed processor learns 2.2× faster than previous learning processors. In back-propagation (BP), errors flow in the direction opposite to inference, so the gradient of the 1st layer cannot be computed until the errors have been propagated from the last layer back to the 1st layer. DFA, by contrast, propagates the errors directly from the last layer to each layer. As a result, PDFA can propagate errors while the next inference is being computed, and the weight update of the 1st layer does not need to wait for error propagation through all the layers.

The heterogeneous learning core (LC) architecture is optimized with an 11-stage pipelined datapath, which provides 2× longer data reuse than conventional BP and improves energy efficiency by 38.7%. Furthermore, the direct error propagation core (DEPC) uses random number generators (RNGs) to eliminate the external memory access (EMA) caused by error propagation (EP), improving energy efficiency by 19.9%.
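The contrast between BP and DFA can be sketched in a few lines of Python (standard library only). In the usual DFA formulation, each hidden layer l receives the output error `e` through its own fixed random feedback matrix `B_l`, so its error `(B_l e) ⊙ f'(z_l)` does not depend on any other layer's error. This is a minimal illustration under stated assumptions, not the processor's implementation: the tanh activation, the ±1 feedback entries, the 16-bit LFSR, and all function names (`lfsr16`, `feedback_matrix`, `dfa_hidden_error`, `dfa_step`) are hypothetical, and regenerating `B_l` from a seed is only an assumed model of how the DEPC's RNGs could avoid external memory access.

```python
# Hypothetical sketch of direct feedback alignment (DFA), not the paper's design:
# every hidden layer receives the output error through its own fixed random
# feedback matrix, and each matrix is regenerated from an LFSR seed instead of
# being stored (an assumed model of what the DEPC's RNGs are for).
import math


def lfsr16(seed):
    """Yield successive states of a 16-bit Fibonacci LFSR (taps 16, 14, 13, 11).

    A nonzero seed gives the maximal period of 2**16 - 1 states.
    """
    state = seed & 0xFFFF
    if state == 0:
        raise ValueError("LFSR seed must be nonzero")
    while True:
        bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
        state = (state >> 1) | (bit << 15)
        yield state


def feedback_matrix(seed, rows, cols):
    """Regenerate a fixed random +/-1 feedback matrix from a seed.

    The same seed always yields the same matrix, so it never has to be
    kept in (or fetched from) external memory.
    """
    gen = lfsr16(seed)
    return [[1.0 if next(gen) & 1 else -1.0 for _ in range(cols)]
            for _ in range(rows)]


def matvec(m, v):
    """Dense matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def forward(weights, x):
    """Inference pass (tanh hidden layers, linear output); returns every activation."""
    acts = [x]
    for i, w in enumerate(weights):
        z = matvec(w, acts[-1])
        acts.append(z if i == len(weights) - 1 else [math.tanh(v) for v in z])
    return acts


def dfa_hidden_error(b, e, h):
    """DFA error of one hidden layer: (B e) * tanh'(z), with tanh'(z) = 1 - h**2.

    Only the output error e and this layer's own activation h are needed;
    unlike BP, nothing comes from the error of the layer above.
    """
    return [be * (1.0 - hv * hv) for be, hv in zip(matvec(b, e), h)]


def dfa_step(weights, seeds, x, target, lr=0.05):
    """One in-place DFA update (one seed per hidden layer).

    Returns the squared output error measured before the update.
    """
    acts = forward(weights, x)
    e = [y - t for y, t in zip(acts[-1], target)]  # gradient of 0.5 * ||y - t||^2
    deltas = []
    for k, seed in enumerate(seeds):
        h = acts[k + 1]  # activation of hidden layer k
        b = feedback_matrix(seed, len(h), len(e))
        deltas.append(dfa_hidden_error(b, e, h))
    deltas.append(e)  # the output layer uses its true local error
    for w, d, a_in in zip(weights, deltas, acts):
        for i, di in enumerate(d):
            for j, aj in enumerate(a_in):
                w[i][j] -= lr * di * aj
    return sum(v * v for v in e)
```

For example, `dfa_step([[[0.1, -0.2], [0.3, 0.4]], [[0.5, -0.5]]], [5], [1.0, 0.5], [1.0])` performs one update of a 2-2-1 network. Because `dfa_hidden_error` takes only `e` and `h`, the loop over hidden layers has no layer-to-layer dependency; that independence is what lets the 1st layer's update start without waiting for the other layers.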

The proposed PDFA-based learning processor is evaluated on an object tracking (OT) application, where it achieves a throughput of 34.4 frames per second (FPS) with an energy efficiency of 1.32 TOPS/W.

Figure 1. Back-propagation vs Pipelined DFA

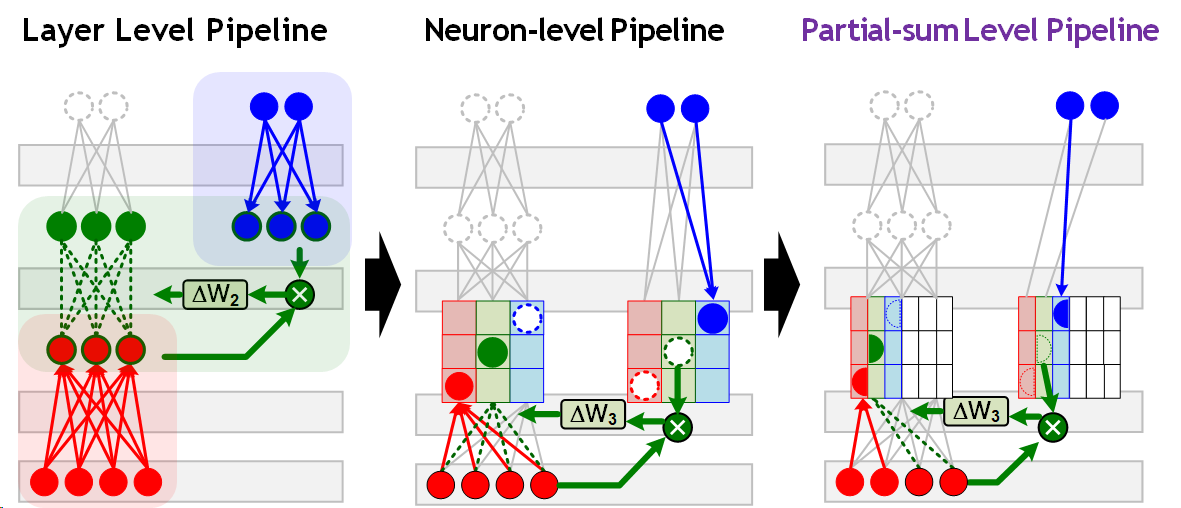

Figure 2. Layer-Level vs Neuron-Level vs Partial-Sum-Level Pipeline

Figure 3. Overall Architecture of Proposed Processor