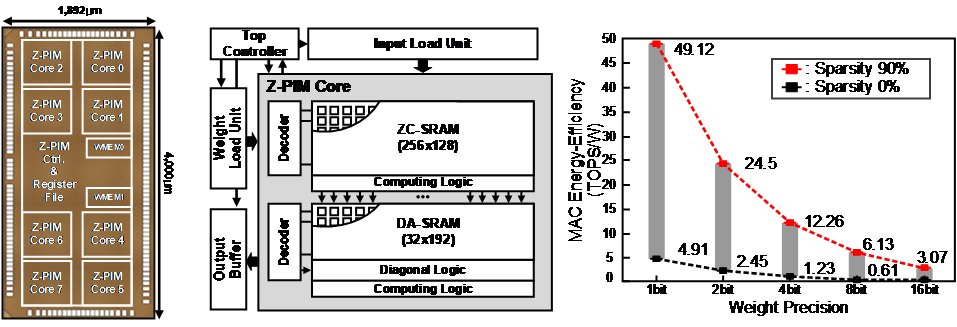

We present Z-PIM, an energy-efficient processing-in-memory (PIM) architecture that supports both sparsity handling and fully variable weight bit-precision for deep neural networks. Z-PIM adopts bit-serial arithmetic, which performs a multiplication bit by bit over multiple cycles, to reduce the complexity of the operation in a single cycle and to provide flexibility in bit-precision. To this end, it employs a zero-skipping convolution SRAM, which performs in-memory AND operations based on custom 8T-SRAM cells and channel-wise accumulations, and a diagonal accumulation SRAM, which performs bit-wise and spatial-wise accumulation on the channel-wise accumulation results using diagonal logic and adders to produce the final convolution outputs. We propose a hierarchical bit-line structure that reduces the parasitic capacitance of the bit-lines for energy-efficient weight-bit pre-charging and computational read-out. Its charge reuse scheme reduces the switching rate by 95.42% for the convolution layers of the VGG-16 model. In addition, Z-PIM’s channel-wise data mapping enables sparsity handling by skipping reads of input channels with zero weight. Its read-operation pipelining, enabled by read-sequence scheduling, improves throughput by 66.1%. The Z-PIM chip is fabricated in a 65-nm CMOS process on a 7.568 mm² die and consumes 5.294 mW on average at 1.0 V and 200 MHz. It achieves 0.31-49.12 TOPS/W energy efficiency for convolution operations as the weight sparsity and bit-precision vary from 0.1 to 0.9 and from 1 bit to 16 bits, respectively. For a figure of merit that considers input bit-width, weight bit-width, and energy efficiency, Z-PIM shows more than a 2.1× improvement over state-of-the-art PIM implementations.
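The arithmetic idea behind bit-serial, zero-skipping accumulation can be illustrated in software. The following is a minimal sketch, not the Z-PIM circuit: the function name `bit_serial_dot`, the restriction to unsigned weights, and the use of a plain Python list per input channel are all assumptions made for illustration. It processes one weight bit-plane per step (standing in for one cycle), gates each input by the current weight bit (the AND operation), sums across channels, and then shifts and adds across bit positions.

```python
def bit_serial_dot(inputs, weights, weight_bits):
    """Dot product computed one weight bit-plane at a time.

    Illustrative assumptions: weights are unsigned integers that fit in
    `weight_bits` bits, and each list index represents one input channel.
    Returns (dot_product, number_of_channels_actually_read).
    """
    # Zero-skipping: channels whose weight is zero are never read.
    active = [(x, w) for x, w in zip(inputs, weights) if w != 0]

    total = 0
    for b in range(weight_bits):
        # Gate each input by bit b of its weight (a 1-bit AND),
        # then accumulate across channels.
        plane_sum = sum(x for x, w in active if (w >> b) & 1)
        # Accumulate across bit positions with the matching shift.
        total += plane_sum << b
    return total, len(active)


if __name__ == "__main__":
    # Hypothetical 4-channel example with 50% weight sparsity.
    result, channels_read = bit_serial_dot([3, 5, 2, 7], [1, 0, 6, 0], weight_bits=4)
    print(result, channels_read)
```

In this sketch, lowering `weight_bits` shortens the loop and raising weight sparsity shrinks `active`, which mirrors, at a purely arithmetic level, why energy efficiency in the abstract varies with both bit-precision and sparsity.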