Title : A Convergence Monitoring Method for DNN Training of On-Device Task Adaptation

Author : Seungkyu Choi, Jaekang Shin, Lee-Sup Kim

Conference : IEEE/ACM International Conference on Computer-Aided Design 2021

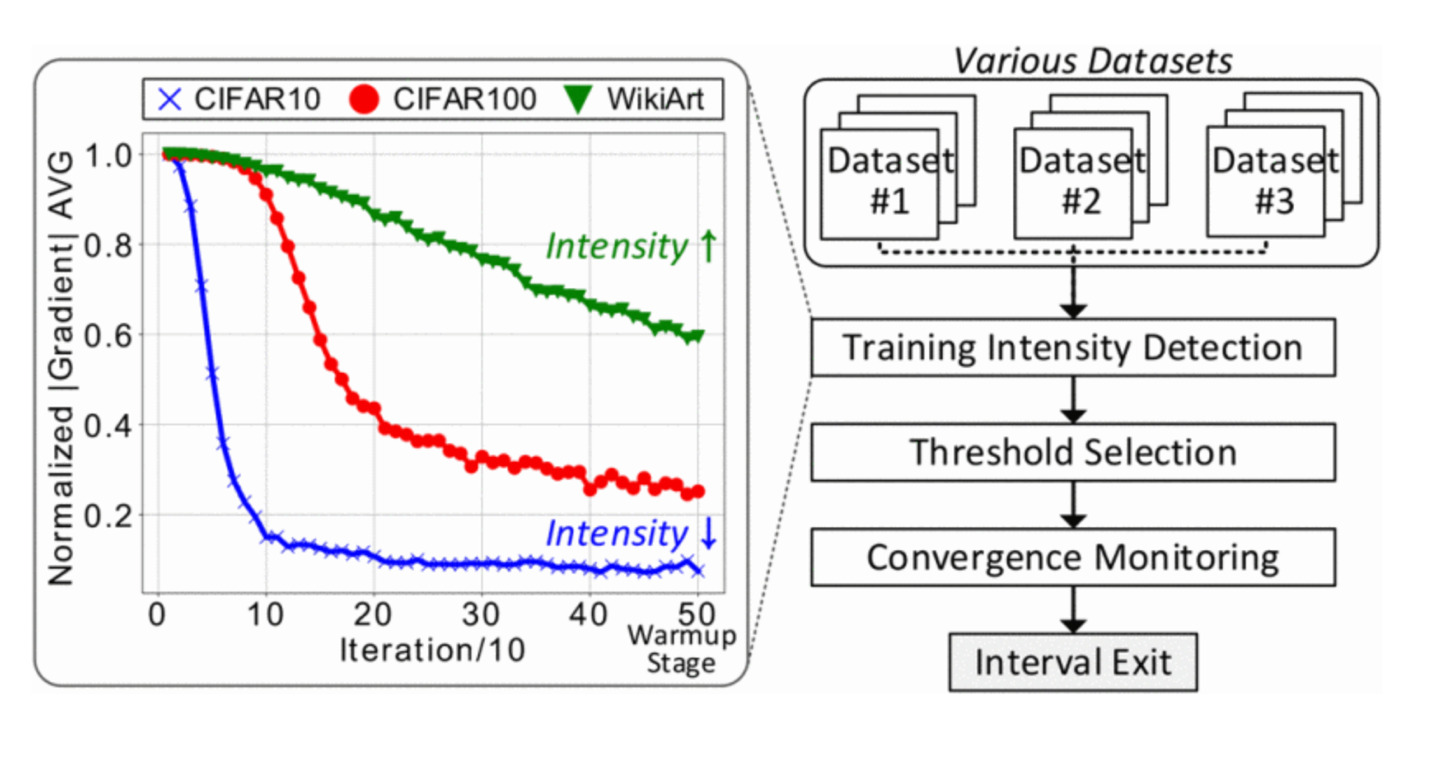

Abstract: DNN training has become a major on-device workload for executing various vision tasks with high performance. Accordingly, training architectures that employ approximate computing have been steadily studied for efficient acceleration. However, most of these works evaluate their schemes on from-scratch training, where inaccurate computing is not tolerable. Moreover, previous solutions are mostly extensions of inference-oriented techniques, e.g., sparsity/pruning, quantization, and dataflow optimization. As a result, issues in practical workloads that limit the overall speed of the DNN training process remain unresolved. In this work, targeting transfer learning-based task adaptation as the practical on-device training workload, we propose a convergence monitoring method that removes the redundancy in massive training iterations. Using the network’s output values, we detect the training intensity of an incoming task and monitor prediction convergence against that intensity to provide early exits from the scheduled training iterations. As a result, accurate approximation is achieved across various tasks with minimal overhead. Unlike sparsity-driven approximation, our method enables runtime optimization and can be easily applied to off-the-shelf accelerators, achieving significant speedup. Evaluation results on various datasets show a geomean speedup of 2.2× over the baseline and 1.8× over the latest convergence-related training method.
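The abstract does not spell out the monitoring rule, so the following is only a minimal sketch of the general idea of exiting a scheduled training loop early once the network's outputs stop changing. Everything here is an assumption made for illustration: the `ConvergenceMonitor` class, the use of mean top-1 softmax confidence as the monitored output statistic, and the `window` and `tol` parameters (a fixed `tol` stands in for whatever the paper derives from the detected training intensity). It is not the authors' algorithm.

```python
import math
from collections import deque


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ConvergenceMonitor:
    """Hypothetical early-exit monitor (illustrative, not the paper's method).

    Tracks the mean top-1 softmax confidence of each batch and reports
    convergence once it varies by less than `tol` over `window` iterations.
    """

    def __init__(self, window=3, tol=1e-2):
        self.window = window
        self.tol = tol
        self.history = deque(maxlen=window)

    def update(self, batch_logits):
        # Mean of the largest softmax probability across the batch.
        conf = sum(max(softmax(row)) for row in batch_logits) / len(batch_logits)
        self.history.append(conf)
        if len(self.history) < self.window:
            return False
        return max(self.history) - min(self.history) < self.tol


def train_with_early_exit(logit_stream, monitor):
    """Consume per-iteration network outputs; return iterations executed.

    `logit_stream` stands in for a real training loop that would run a
    forward/backward pass and yield the batch outputs of each iteration.
    """
    steps = 0
    for batch_logits in logit_stream:
        steps += 1
        if monitor.update(batch_logits):
            break  # early exit: remaining scheduled iterations are skipped
    return steps


# Synthetic outputs: confidence rises, then saturates.
scheduled = [[[s, 0.0]] for s in [0.0, 1.0, 2.0, 3.0, 10.0, 10.0, 10.0, 10.0, 10.0]]
executed = train_with_early_exit(scheduled, ConvergenceMonitor(window=3, tol=1e-2))
```

On this synthetic stream the loop stops before all nine scheduled iterations have run, which is the kind of iteration-level saving the abstract refers to. A real implementation would need a stopping criterion tied to task difficulty rather than a fixed tolerance.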