Title: An Optimized Design Technique of Low-bit Neural Network Training for Personalization on IoT Devices

Authors: Seung-Kyu Choi, Jae-Kang Shin, Yeong-Jae Choi, and Lee-Sup Kim

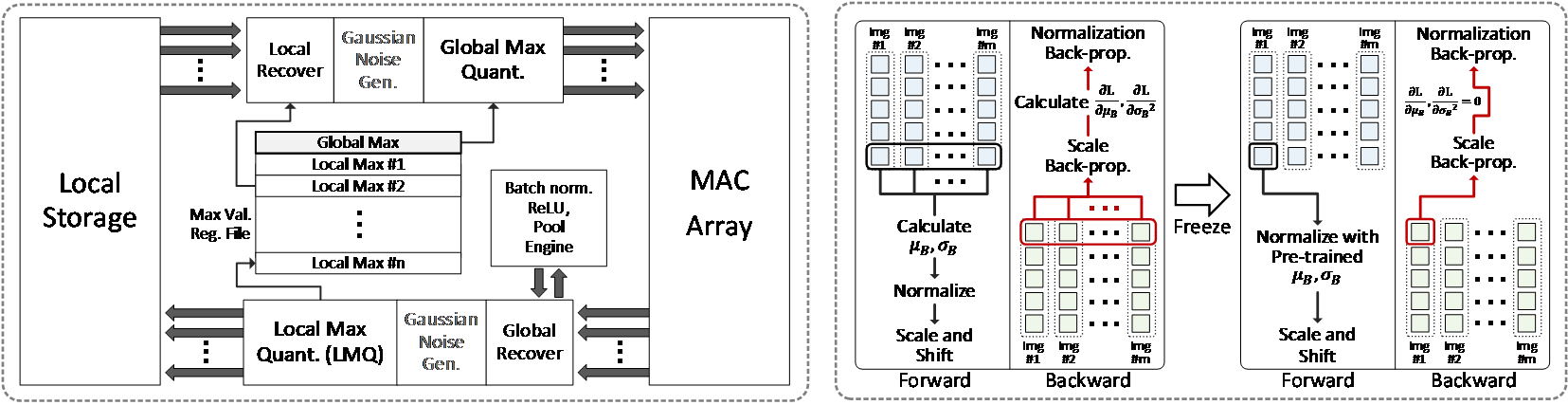

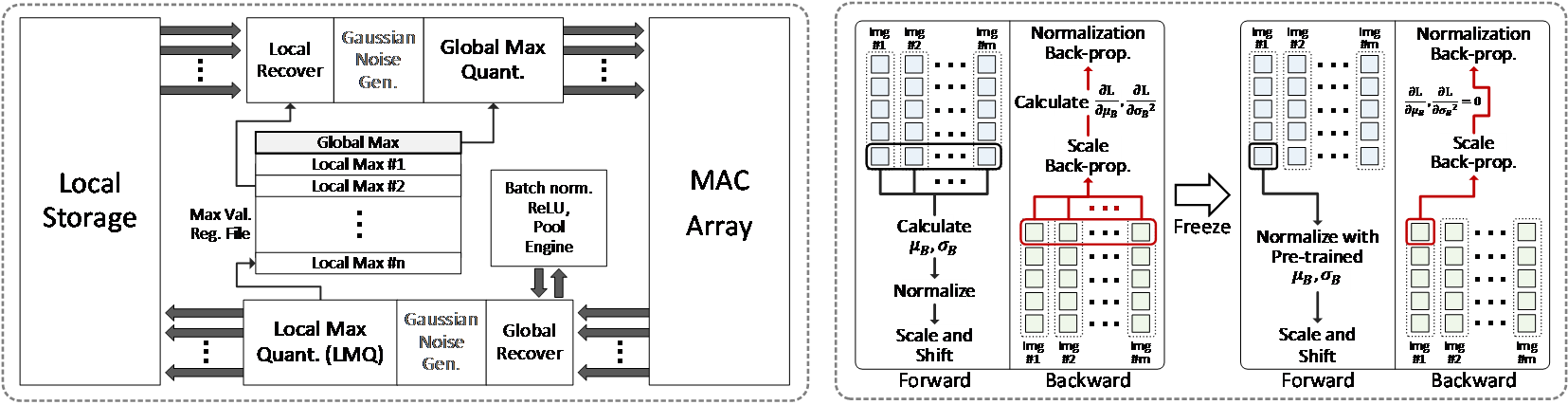

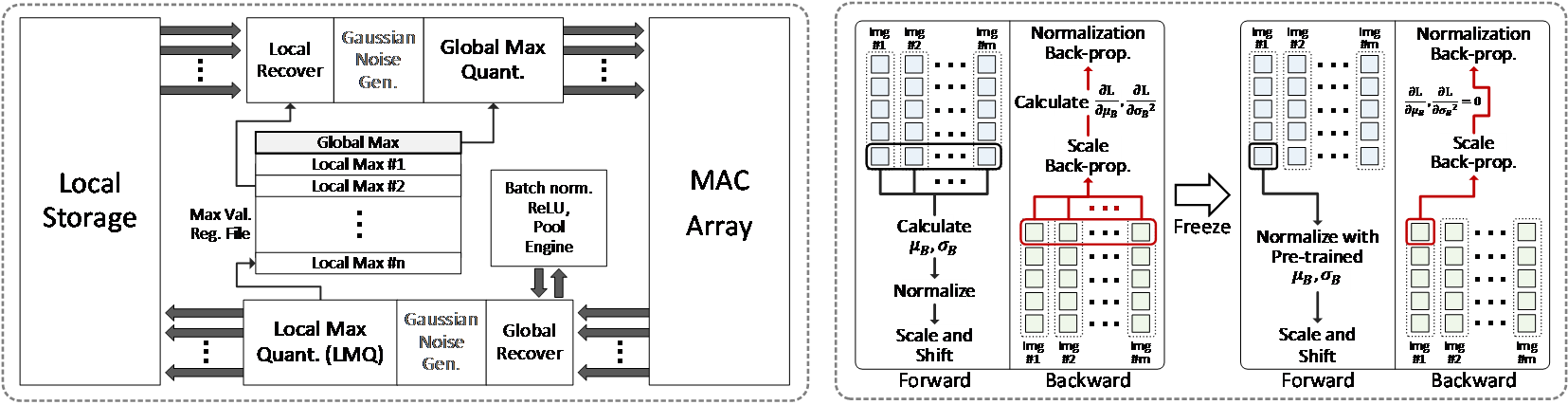

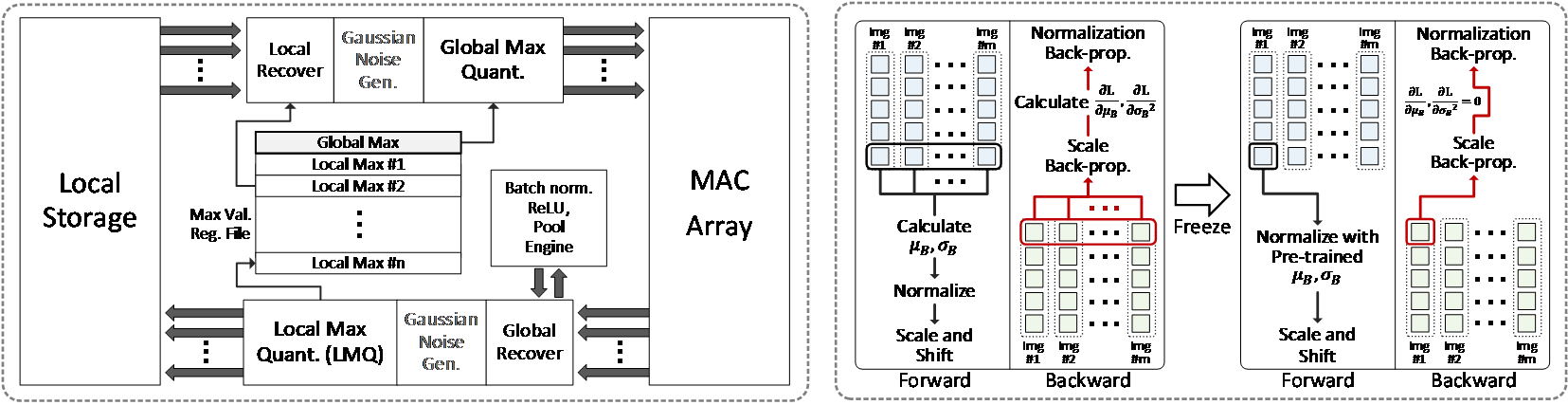

Personalization through incremental learning has become essential for IoT devices to improve the performance of deep learning models trained on global datasets. To avoid heavy transmission traffic over the network, on-device learning is necessary. We propose a software/hardware co-design technique for building an energy-efficient, low-bit trainable system: (1) software optimizations, namely local low-bit quantization and computation freezing, which minimize on-chip storage requirements and computational complexity; and (2) a hardware design of a bit-flexible multiply-and-accumulate (MAC) array that shares the same resources between inference and training. Compared with previous trainable accelerators, our scheme reduces on-chip buffer storage by 99.2% and achieves 12.8× higher peak energy efficiency.
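The abstract does not spell out how its local low-bit quantization works. One common way to make quantization "local" is to give each small group of values its own scale, so that an outlier in one group does not destroy the resolution of every other group. The sketch below assumes that interpretation, with a power-of-two scale per group (which maps to a simple shift in fixed-point hardware). The functions `quantize_local` and `dequantize_local`, the group size, and the scaling rule are hypothetical illustrations, not the paper's published scheme.

```python
import numpy as np


def quantize_local(x, bits=8, group_size=16):
    """Quantize a 1-D array group by group; each group gets its own power-of-two scale.

    Hypothetical sketch: the grouping and power-of-two scaling are assumptions,
    not the paper's published scheme.
    """
    x = np.asarray(x, dtype=np.float64)
    qmax = 2 ** (bits - 1) - 1              # largest signed code, e.g. 127 for 8 bits
    pad = (-len(x)) % group_size            # zero-pad so the array splits into whole groups
    groups = np.pad(x, (0, pad)).reshape(-1, group_size)
    q = np.empty(groups.shape, dtype=np.int32)
    exps = np.empty(len(groups), dtype=np.int32)
    for i, g in enumerate(groups):
        m = np.max(np.abs(g))
        # Smallest exponent e with m / 2**e <= qmax, so the group's largest value fits.
        e = int(np.ceil(np.log2(m / qmax))) if m > 0 else 0
        exps[i] = e
        q[i] = np.clip(np.round(g / 2.0 ** e), -qmax - 1, qmax)
    return q, exps, len(x)


def dequantize_local(q, exps, n):
    """Rebuild real values from integer codes and per-group exponents."""
    scales = 2.0 ** exps.astype(np.float64)
    return (q * scales[:, None]).reshape(-1)[:n]


# Usage: a group of small values next to a group containing a large outlier.
x = np.array([0.01] * 16 + [100.0] + [0.0] * 15)
local = dequantize_local(*quantize_local(x, bits=4, group_size=16))
shared = dequantize_local(*quantize_local(x, bits=4, group_size=32))
print(local[:3], shared[:3])
```

With 4-bit codes and a separate exponent per 16-value group, the 0.01 entries come back as about 0.0098; with one exponent shared by all 32 values, the outlier 100.0 forces a coarse scale and the same entries round to 0.0.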

Figure 1. Optimizations for performing multiply-and-accumulate operations for CNN training in fixed-point MAC units
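A bit-flexible MAC array can reuse the same hardware across precisions by building wide products out of narrow sub-products. The following is a minimal sketch under assumed parameters (unsigned operands, 2-bit slices); `split_slices`, `flexible_mul`, and `flexible_mac` are hypothetical names, and the slice width and decomposition are illustrative rather than the paper's actual circuit. The returned operation count shows why low-bit work is cheaper on such an array: a 2b×2b product needs one slice product, while an 8b×8b product needs 16.

```python
def split_slices(x, bits, slice_bits=2):
    """Split an unsigned integer into little-endian slices of `slice_bits` bits."""
    if not 0 <= x < (1 << bits):
        raise ValueError(f"{x} does not fit in {bits} unsigned bits")
    mask = (1 << slice_bits) - 1
    return [(x >> s) & mask for s in range(0, bits, slice_bits)]


def flexible_mul(a, b, bits_a, bits_b, slice_bits=2):
    """Multiply a*b using only slice_bits x slice_bits sub-products.

    Returns (product, number of sub-products used). Each sub-product is shifted
    by the combined position of its two slices and accumulated.
    """
    total, ops = 0, 0
    for i, sa in enumerate(split_slices(a, bits_a, slice_bits)):
        for j, sb in enumerate(split_slices(b, bits_b, slice_bits)):
            total += (sa * sb) << ((i + j) * slice_bits)
            ops += 1
    return total, ops


def flexible_mac(xs, ws, bits_x, bits_w, slice_bits=2):
    """Accumulate sum(x*w) over pairs; the sub-product count grows with precision."""
    acc, ops = 0, 0
    for x, w in zip(xs, ws):
        p, n = flexible_mul(x, w, bits_x, bits_w, slice_bits)
        acc += p
        ops += n
    return acc, ops


# Same routine at two precisions: 2-bit operands vs. 8-bit operands.
print(flexible_mac([3, 1, 2], [2, 3, 1], bits_x=2, bits_w=2))
print(flexible_mac([200, 17, 96], [45, 250, 3], bits_x=8, bits_w=8))
```

Because a wide product is just a shifted sum of narrow ones, a single array of small multipliers can serve both a low-precision pass and a higher-precision pass; only the number of sub-products (cycles or lanes) changes. Signed operands would need extra handling of the top slice, which this sketch omits.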

Ph.D. student Sung-Pill Choi (advised by Professor Hoi-Jun Yoo) won the Best Paper Award at AICAS 2019 (IEEE International Conference on Artificial Intelligence Circuits and Systems). The paper, entitled “CNNP-v2: An Energy Efficient Memory-Centric Convolutional Neural Network Processor Architecture”, was authored by Sung-Pill Choi, Kyeong-Ryeol Bong, Dong-Hyeon Han, and Hoi-Jun Yoo.

By using a memory-centric architecture instead of the traditional operator-centric CNN architecture, the researchers achieved 91.5% MAC-unit utilization in highly parallel (1024-way) processing and an energy efficiency of 3.1 TOPS/W through near-threshold operation at 0.46 V. The resulting chip runs a real-time face recognition system for IoT or wearable devices on only 9.4 mW, a world-leading result.
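The headline figures can be related with simple arithmetic. The sketch below assumes the conventional definitions: 1 TOPS/W equals 10^12 operations per joule, and utilization is the fraction of MAC units doing useful work on average. How many "operations" a single MAC counts as is a convention the announcement does not state, and the helper names `energy_per_op_pj` and `busy_mac_units` are hypothetical.

```python
def energy_per_op_pj(tops_per_watt):
    """Energy per operation in picojoules.

    1 TOPS/W = 1e12 operations per joule, so the energy per operation is
    1 / (tops_per_watt * 1e12) J, which equals 1 / tops_per_watt pJ.
    """
    return 1.0 / tops_per_watt


def busy_mac_units(total_units, utilization):
    """Average number of MAC units doing useful work at a given utilization."""
    return total_units * utilization


# Figures quoted in the paragraph above.
print(f"{energy_per_op_pj(3.1):.3f} pJ/op")
print(f"{busy_mac_units(1024, 0.915):.1f} MAC units busy")
```

For example, 3.1 TOPS/W corresponds to about 0.32 pJ per operation, and 91.5% utilization of a 1024-way array keeps roughly 937 MAC units busy on average.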

Congratulations to Sung-Pill Choi and Professor Hoi-Jun Yoo!

– Demonstration Session Certificate of Recognition (awarded to the two best academic demonstrations presented at ISSCC)

“UNPU: A 50.6TOPS/W Unified Deep Neural Network Accelerator with 1b-to-16b Fully-Variable Weight Bit-Precision”

Jin-Muk Lee, Chang-Hyun Kim, Sang-Hyun Kang, Dong-Ju Shin, Sang-Hyup Kim (Advised by Hoi-Jun Yoo)

– Demonstration Session Certificate of Recognition (awarded to the two best academic demonstrations presented at ISSCC)

“A 9.02mW CNN-Stereo-Based Real-Time 3D Hand-Gesture Recognition Processor for Smart Mobile Devices”

Seong-Pil Choi, Jin-Soo Lee, Kyu-Ho Lee (Advised by Hoi-Jun Yoo)

– Student Research Preview (SRP) Outstanding Poster Award (awarded to the best poster presented at the ISSCC SRP)

“A 4.54μW, 0.77pJ/c.s. Dual Quantization-Based Capacitance-to-Digital Converter for Acceleration, Humidity, and Pressure Sensing in 0.18μm CMOS”

Su-Jin Park (Advised by Seong-Hwan Cho)

Please accept our sincere congratulations!