Title: Population-Guided Parallel Policy Search for Reinforcement Learning

Authors: Whiyoung Jung, Giseung Park and Youngchul Sung

Presented at the International Conference on Learning Representations (ICLR) 2020

This work proposes a new population-guided parallel learning scheme to enhance the performance of off-policy reinforcement learning (RL). In the proposed scheme, multiple identical learners, each with its own value function and policy, share a common experience replay buffer and search for a good policy collaboratively under the guidance of the best policy's information. The key point is that the information of the best policy is fused in a soft manner by constructing an augmented loss function for the policy update, which enlarges the overall search region covered by the multiple learners. The guidance from the previous best policy and the enlarged search range enable faster and better policy search. Monotone improvement of the expected cumulative return under the proposed scheme is proved theoretically. Working algorithms are constructed by applying the proposed scheme to the twin delayed deep deterministic policy gradient (TD3) algorithm. Numerical results show that the constructed algorithm outperforms most current state-of-the-art RL algorithms, and that the gain is significant in sparse-reward environments.
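
The soft fusion of the best policy's information can be illustrated with a minimal sketch. The abstract does not give the exact form of the augmented loss, so the code below assumes a TD3-style base loss (the negative mean Q-value) plus a squared-distance penalty toward the best learner's actions, weighted by a coefficient `beta`. The function names, the penalty form, and the rule of picking the best learner by recent return are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def pick_best_learner(recent_returns):
    """Return the index of the learner with the highest recent return.

    Assumption: "best policy" is judged by recent episodic return.
    """
    return int(np.argmax(recent_returns))


def augmented_policy_loss(q_values, actions, best_actions, beta, is_best):
    """Hypothetical augmented policy loss for a single learner.

    q_values:     Q(s, pi_i(s)) for each state in the batch, shape (B,)
    actions:      actions of this learner's policy, shape (B, A)
    best_actions: actions of the best learner's policy, shape (B, A)
    beta:         weight of the guidance term (assumed non-negative)
    is_best:      True if this learner is currently the best one
    """
    # Base deterministic-policy-gradient objective: maximize Q, i.e. minimize -Q.
    base_loss = -np.mean(q_values)
    if is_best:
        # The best learner is not pulled toward itself.
        return base_loss
    # Soft guidance: penalize distance to the best policy's actions
    # instead of copying the best policy's parameters outright.
    distance = 0.5 * np.mean(np.sum((actions - best_actions) ** 2, axis=1))
    return base_loss + beta * distance
```

Under these assumptions, a small `beta` lets each learner explore away from the best policy, while a large `beta` keeps the learners close to it; the penalty is soft, so the learners are never forced to coincide.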

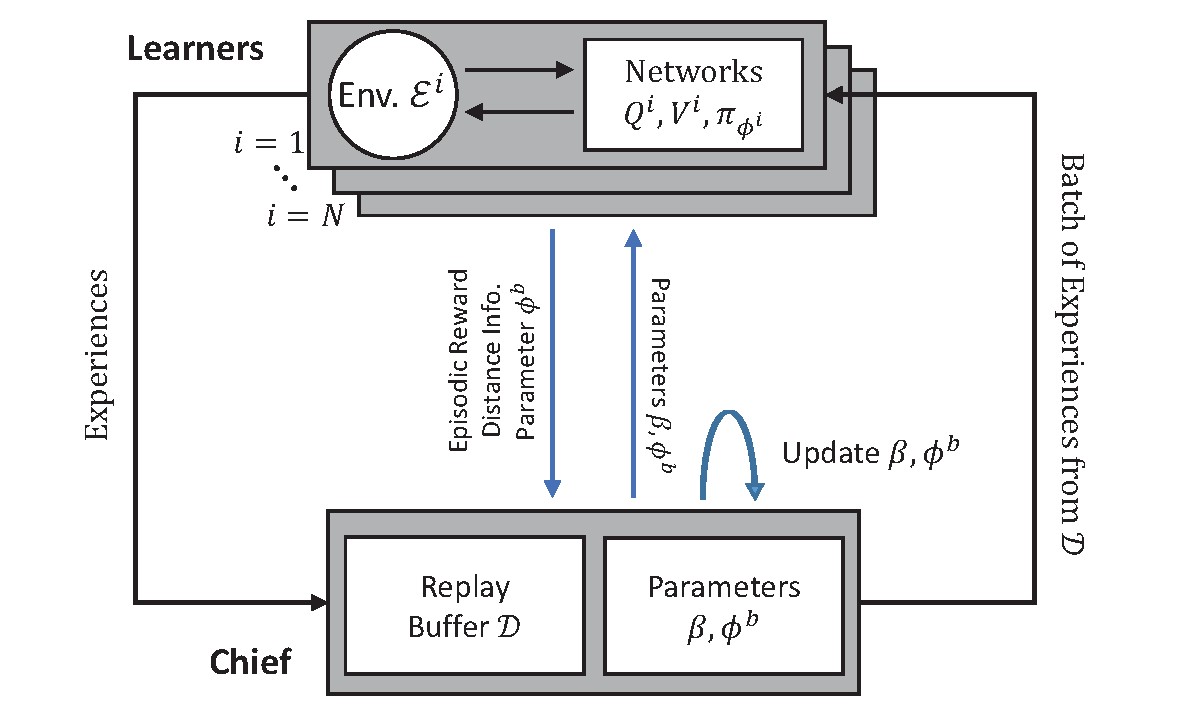

Fig. 1. The overall proposed structure (P3S).

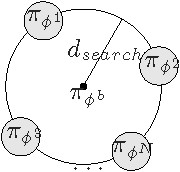

Fig. 2. The conceptual search coverage in the policy space by parallel learners.

Fig. 3. Performance of different parallel learning methods on MuJoCo environments (top) and on delayed MuJoCo environments (bottom).

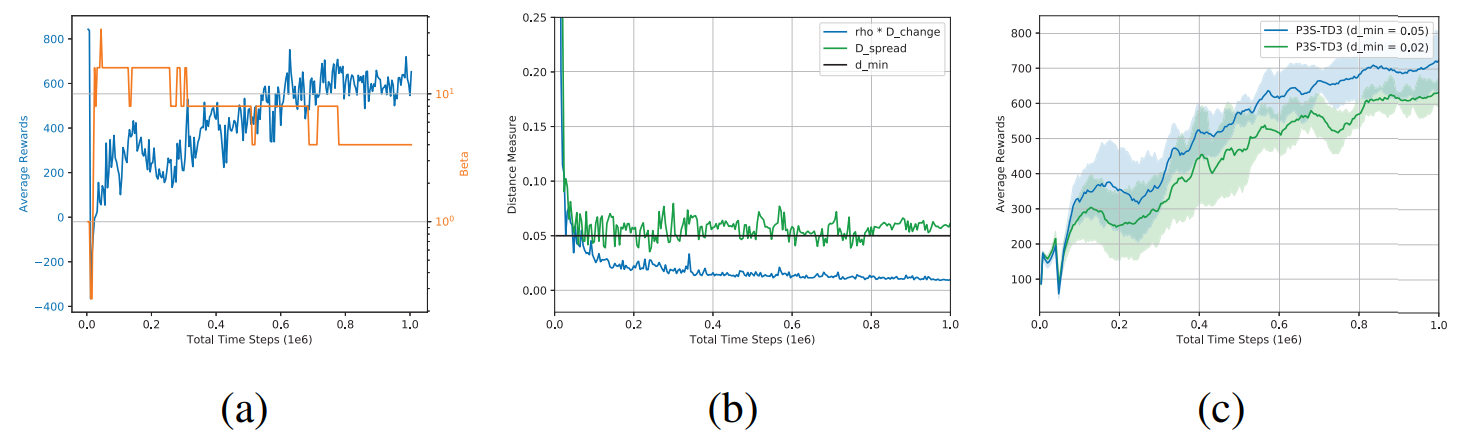

Fig. 4. Benefits of P3S: (a) performance and beta (1 seed) with d_min = 0.05, (b) distance measures with d_min = 0.05, and (c) comparison between d_min = 0.02 and d_min = 0.05.