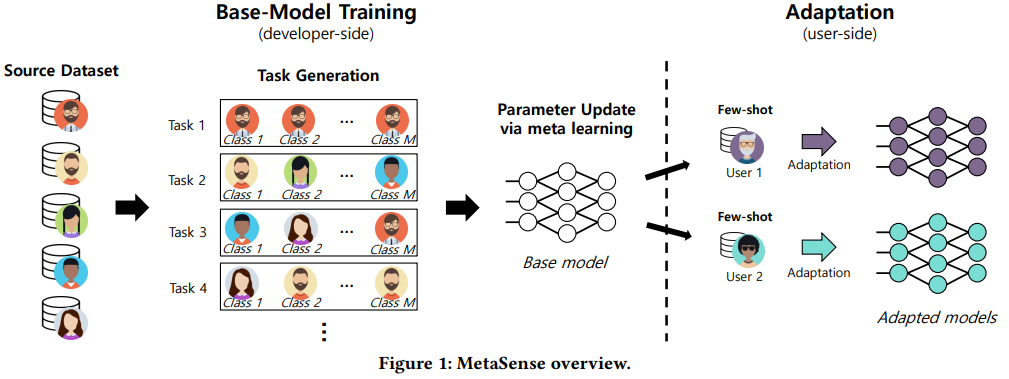

Many mobile applications apply deep learning to data from on-device sensors. However, such applications have rarely achieved mainstream adoption because of the wide variety of individual conditions that users encounter. An individual condition is characterized by a user’s unique behavior and the particular device they carry, which together make sensor inputs differ from one user to another. Because it is impractical to train for countless individual conditions in advance, we argue that meta-learning is a promising approach to this problem. We present MetaSense, which leverages “seen” conditions in the training data to adapt to an “unseen” condition (i.e., the target user). Specifically, we design a meta-learning framework that learns “how to adapt” to the target through iterative training sessions of adaptation. MetaSense requires very few training examples from the target (e.g., one or two) and thus minimal user effort. In addition, we propose a similar condition detector (SCD) that identifies when the unseen condition shares characteristics with seen conditions and leverages this hint to further improve accuracy. Our evaluation with 10 different datasets shows that MetaSense improves accuracy over state-of-the-art transfer learning and meta-learning methods by 15% and 11%, respectively. Furthermore, the SCD yields an additional accuracy gain (e.g., 15% for human activity recognition).
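The abstract does not spell out MetaSense’s training procedure. To illustrate what “learning how to adapt” through repeated adaptation sessions can mean, here is a minimal sketch of generic first-order MAML (a well-known meta-learning technique swapped in for illustration, not necessarily MetaSense’s method). It assumes a toy scalar model `y_hat = w * x` in which each hypothetical “condition” is a different slope. The helpers `make_task`, `adapt`, and `meta_train`, along with the step sizes `alpha` and `beta`, are illustrative names chosen here, not from the paper.

```python
"""Toy first-order MAML (FOMAML) sketch: learn an initialization that adapts
to a new 'condition' from a single labeled example. Illustrative only."""

from typing import List, Tuple

Example = Tuple[float, float]  # (sensor feature x, label y)


def mse(w: float, data: List[Example]) -> float:
    """Mean squared error of the scalar model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_grad(w: float, data: List[Example]) -> float:
    """Gradient of the mean squared error with respect to w."""
    return sum(2.0 * x * (w * x - y) for x, y in data) / len(data)


def adapt(w: float, support: List[Example], alpha: float) -> float:
    """Inner loop: one gradient step on the few-shot support set."""
    return w - alpha * mse_grad(w, support)


def make_task(slope: float) -> Tuple[List[Example], List[Example]]:
    """Hypothetical 'condition': same task, different input-output mapping."""
    support = [(1.0, slope * 1.0)]                     # one labeled example
    query = [(x, slope * x) for x in (0.5, 1.0, 1.5)]  # held-out examples
    return support, query


def meta_train(slopes: List[float], alpha: float = 0.25, beta: float = 0.1,
               steps: int = 200) -> float:
    """Outer loop: move the initialization so that the query loss measured
    *after* adaptation is low. First-order approximation: the gradient is
    taken at the adapted weight, ignoring second derivatives."""
    w = 0.0
    tasks = [make_task(s) for s in slopes]
    for _ in range(steps):
        meta_grad = 0.0
        for support, query in tasks:
            w_adapted = adapt(w, support, alpha)
            meta_grad += mse_grad(w_adapted, query)
        w -= beta * meta_grad / len(tasks)
    return w


# Meta-train on "seen" conditions, then adapt to an "unseen" one with one example.
w0 = meta_train([1.0, 2.0, 3.0])
support, query = make_task(2.5)
w_new = adapt(w0, support, alpha=0.25)
print(round(w0, 3), mse(w_new, query) < mse(w0, query))  # prints "2.0 True"
```

In this toy setting the meta-learned initialization settles at the mean of the seen slopes, and a single gradient step on one example from the unseen condition halves the distance to its true slope. A real sensing model would replace the scalar `w` with network weights, and the support and query sets with sensor windows from each user or device.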