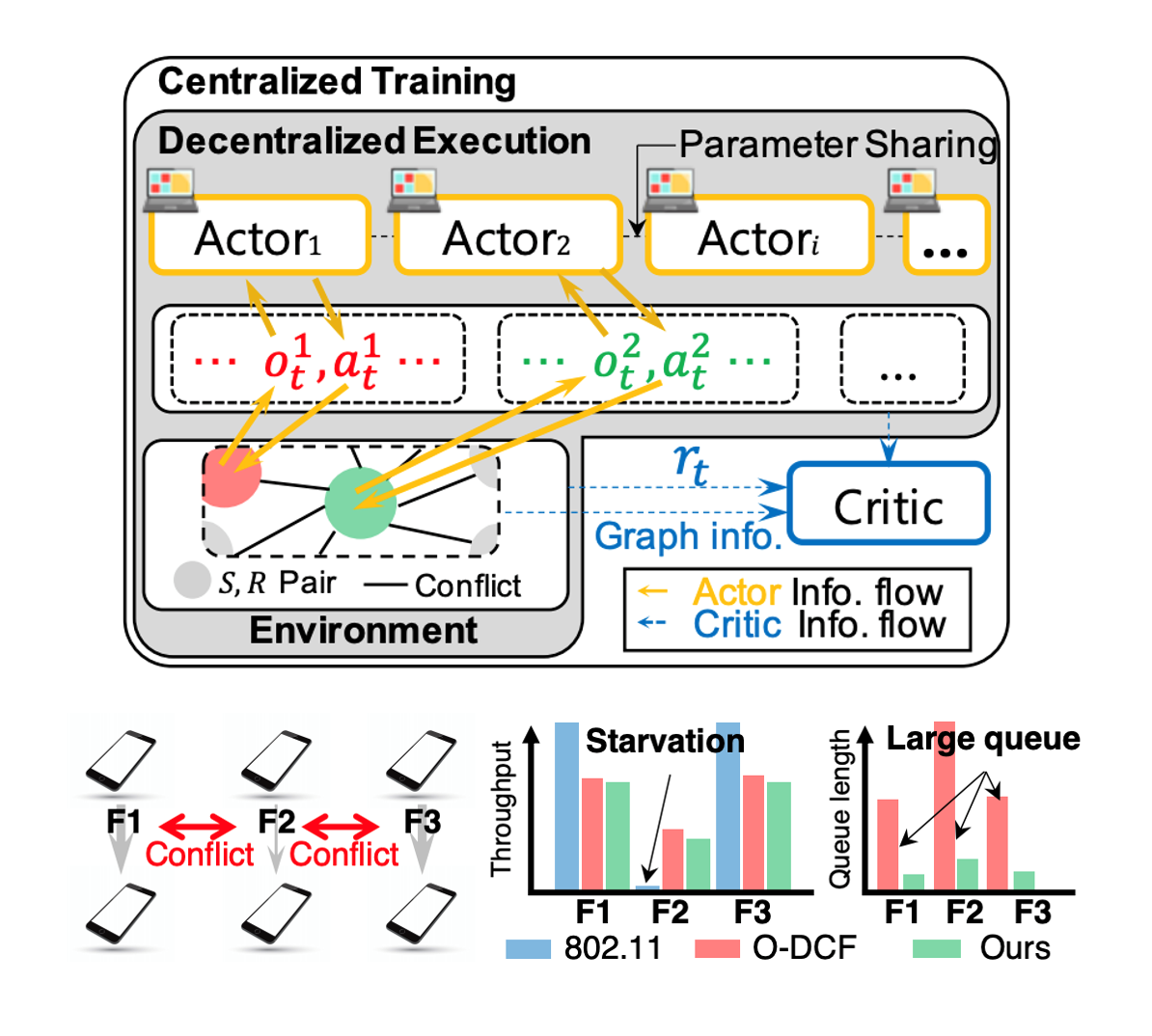

The carrier sense multiple access (CSMA) algorithm is widely used for wireless medium access control (MAC) in the IEEE 802.11 standard because of its simplicity and generality. CSMA has been studied extensively, both in the context of practical protocols and as a distributed means of achieving optimal MAC scheduling. However, state-of-the-art CSMA and its extensions still perform poorly, especially in multi-hop scenarios, and often rely on patch-based fixes rather than a universal solution. In this paper, we propose an algorithm that takes an experience-driven approach and trains a CSMA-based wireless MAC using deep reinforcement learning. We call our protocol Neuro-DCF. It must address two key challenges: (i) a stable training method for distributed execution and (ii) a unified training method that accommodates various interference patterns and configurations. For (i), we adopt a multi-agent reinforcement learning framework, and for (ii), we introduce a novel training structure based on graph neural networks (GNNs). Extensive simulation results demonstrate that Neuro-DCF significantly outperforms 802.11 DCF and O-DCF, a recent theory-based MAC protocol, especially in delay performance, while preserving optimal utility. We believe our multi-agent reinforcement learning approach will be of broad interest for other learning-based network controllers, in different layers, that require distributed operation.