Conference/Journal, Year: ICRA, 2020

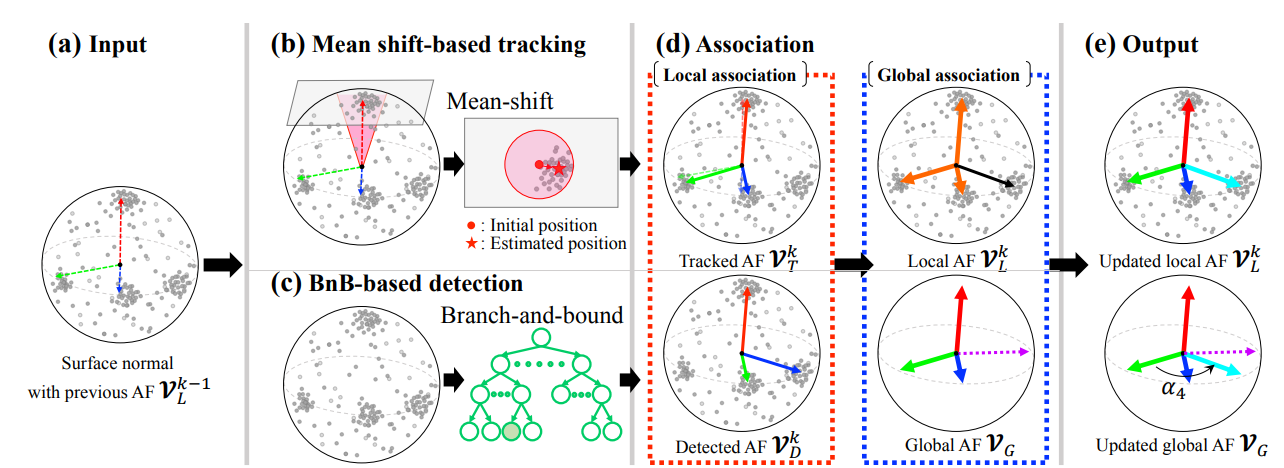

We present a new linear method for RGB-D based simultaneous localization and mapping (SLAM). Whereas existing techniques rely on the Manhattan world assumption, which is defined by three orthogonal directions, our approach is designed for the more general Atlanta world. An Atlanta world consists of a vertical direction and a set of horizontal directions orthogonal to it, and can therefore represent a wider range of scenes. Our approach leverages the structural regularity of the Atlanta world to decouple the non-linearity of camera pose estimation. This allows us to estimate the camera rotation first and then the translation, which bypasses the inherent non-linearity of traditional SLAM techniques. To this end, we introduce a novel tracking-by-detection scheme that estimates the underlying scene structure using the Atlanta representation. Building on it, we propose an Atlanta frame-aware linear SLAM framework that jointly estimates the camera motion and a planar map supporting the Atlanta structure through a linear Kalman filter. Evaluations on both synthetic and real datasets demonstrate that our approach performs favorably compared to existing state-of-the-art methods while extending their working range to the Atlanta world.
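To illustrate why decoupling rotation from translation makes the problem linear, here is a minimal sketch, not the paper's Kalman filter. It assumes the rotation is already known, so plane normals can be expressed in the world frame, and that a plane with world normal n and offset d_w is observed with offset d_c in the camera frame, which gives the linear constraint n·t = d_w - d_c on the camera position t. The function names `atlanta_directions` and `solve_translation`, the sign convention, and the batch least-squares solve are all assumptions made for illustration.

```python
import numpy as np

def atlanta_directions(angles_deg):
    """Return a vertical axis and horizontal unit directions (hypothetical helper).

    The horizontal directions lie in the plane orthogonal to the vertical axis
    and, unlike in a Manhattan world, need not be orthogonal to each other.
    """
    vertical = np.array([0.0, 0.0, 1.0])
    a = np.deg2rad(np.asarray(angles_deg, dtype=float))
    horizontals = np.stack([np.cos(a), np.sin(a), np.zeros_like(a)], axis=1)
    return vertical, horizontals

def solve_translation(normals_w, offsets_w, offsets_c):
    """Least-squares camera position from plane offsets, rotation assumed known.

    Each plane contributes one linear equation n_w . t = d_w - d_c.
    """
    t, *_ = np.linalg.lstsq(normals_w, offsets_w - offsets_c, rcond=None)
    return t

# Synthetic scene: a floor plus two walls that are 60 degrees apart.
vertical, horizontals = atlanta_directions([0.0, 60.0])
normals_w = np.vstack([vertical, horizontals])   # one normal per row
offsets_w = np.array([0.0, 2.0, 3.0])            # plane offsets in the world frame

# Simulate the offsets a camera at t_true would observe, then recover t.
t_true = np.array([0.5, -0.2, 1.3])
offsets_c = offsets_w - normals_w @ t_true
t_est = solve_translation(normals_w, offsets_w, offsets_c)
```

The solve needs at least three planes with linearly independent normals; a floor and two non-parallel walls are enough, even when the walls are not perpendicular to each other.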