Conference/Journal, Year: IROS 2020

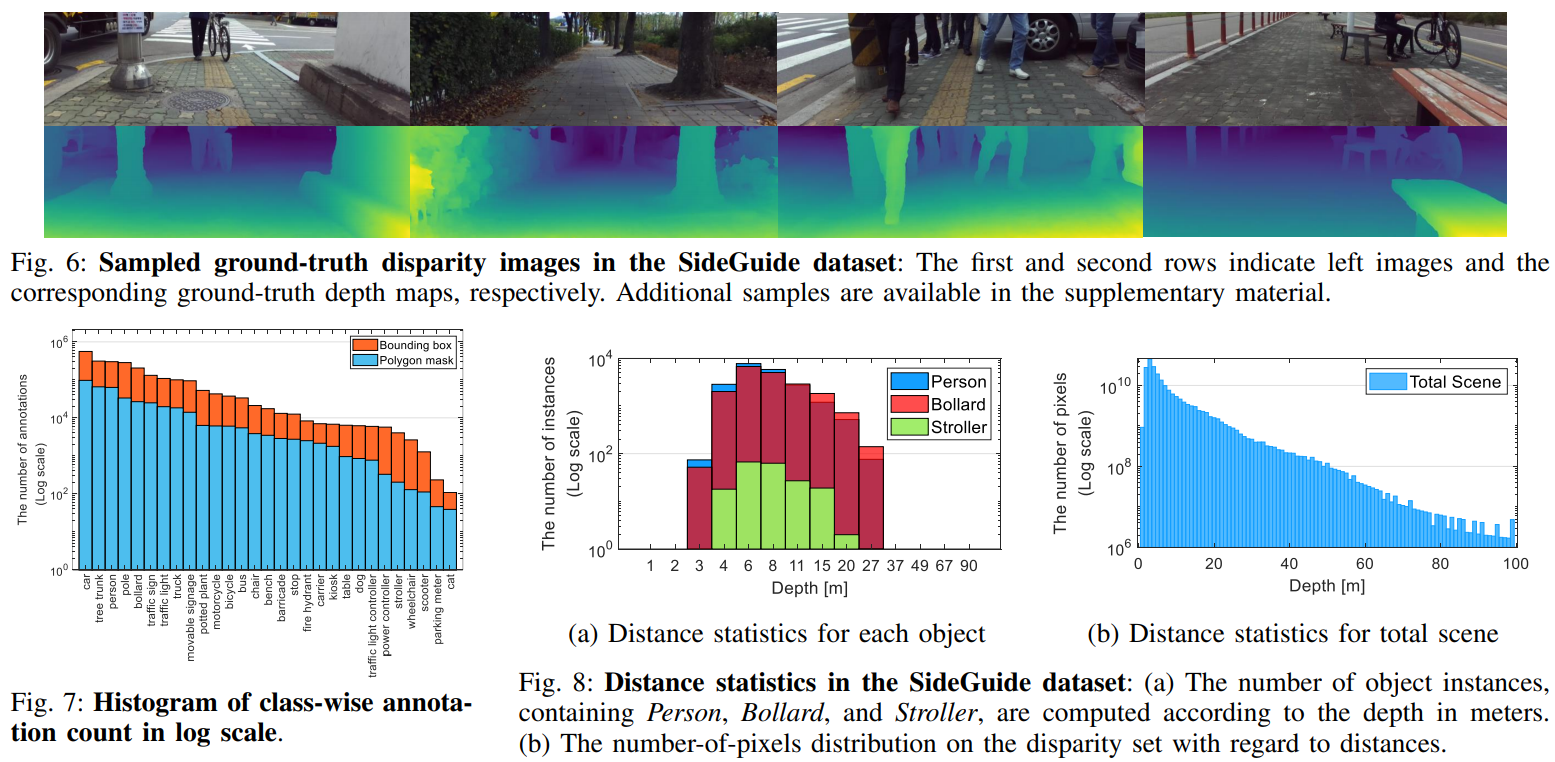

In this paper, we introduce SideGuide, a new large-scale sidewalk dataset that could potentially help people with impairments. Unlike most previous datasets, which focus on road environments, SideGuide targets sidewalks, where understanding the environment could improve walking for pedestrians, especially people with impairments. Concretely, we interviewed people with impairments and carefully selected target objects (objects they encounter on sidewalks) from their feedback. We then acquired two types of data: crowd-sourced data and stereo data. We labeled target objects at the instance level (i.e., with bounding boxes and polygon masks) and generated ground-truth disparity maps for the stereo data. SideGuide consists of 350K images with bounding box annotations, 100K images with polygon masks, and 180K stereo pairs with ground-truth disparity. We analyzed the dataset by running baselines for object detection, instance segmentation, and stereo matching. In addition, we developed a prototype that recognizes the target objects and measures their distances, which could potentially assist people with disabilities. The prototype suggests that our dataset can be applied in practice.
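The abstract does not say how the prototype turns detections and disparity into distances. As a minimal sketch, assuming a rectified stereo pair, the standard relation Z = f * B / d (depth from focal length in pixels, baseline in meters, and disparity in pixels) can be combined with a detector's bounding box. The function names, the box format `(x0, y0, x1, y1)`, and the camera parameters used below are illustrative assumptions, not values from the paper.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (meters) via Z = f * B / d.

    Pixels with non-positive disparity are treated as invalid and set to NaN.
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full(disparity.shape, np.nan)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def object_distance(depth, box):
    """Return the median depth inside a bounding box, or None if no valid pixel.

    `box` is (x0, y0, x1, y1) in pixel coordinates, end-exclusive (an assumed format).
    """
    x0, y0, x1, y1 = box
    patch = depth[y0:y1, x0:x1]
    if patch.size == 0 or np.all(np.isnan(patch)):
        return None
    # The median is less sensitive than the mean to background pixels in the box.
    return float(np.nanmedian(patch))


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 0.12 m baseline.
    disp = np.full((4, 4), 10.0)
    disp[0, 0] = 0.0  # one invalid pixel
    depth = disparity_to_depth(disp, focal_px=700.0, baseline_m=0.12)
    print(object_distance(depth, (0, 0, 4, 4)))
```

Taking the median over the box is one simple choice; where a polygon mask is available, restricting the pixels to the mask would likely give a cleaner estimate.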