Dysarthria is a condition in which a neuromotor disorder reduces a person's speech intelligibility. Previous work on dysarthric speech recognition has focused on accurately recognizing words that appear in the training data. Because dysarthria is rare in the general population, relatively little publicly available training data exists for dysarthric speech. These datasets contain few unique words, so automatic speech recognition (ASR) systems trained on existing dysarthric speech data can recognize only those words. In this paper, we propose a data augmentation method based on voice conversion that allows dysarthric ASR systems to accurately recognize words outside the training-set vocabulary. We demonstrate that a parallel voice conversion system can capture the relevant vocal characteristics of a speaker with dysarthria from a small amount of dysarthric speech data. We show that it is possible to synthesize utterances of new words that were never recorded by speakers with dysarthria, and that these synthesized utterances can be used to train a dysarthric ASR system.

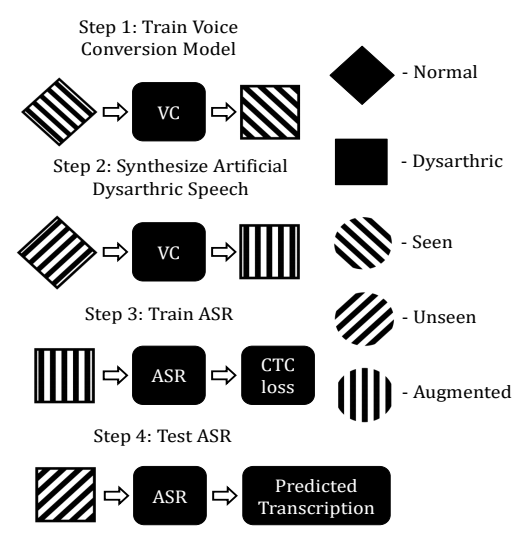

Figure 4. Proposed approach: diamonds denote normal speech and squares denote dysarthric speech. Overlay patterns indicate whether the data comes from the seen or unseen partition, or is augmented (synthetic).
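The data flow in Figure 4 can be sketched at the bookkeeping level: split the vocabulary into seen and unseen words, pair normal and dysarthric recordings of the *seen* words to form parallel voice conversion training data, then convert normal recordings of the *unseen* words into synthetic dysarthric-style utterances. This is a minimal, non-authoritative sketch, not the authors' implementation. The `Utterance` type, the speaker IDs `"ctl"`/`"dys"`, and all function names are hypothetical. `fit_toy_converter` is a deliberately trivial placeholder (a single learned gain) standing in for a real parallel voice conversion model.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Set, Tuple

# Assumption: audio is a tuple of floats standing in for a waveform or features.
Audio = Tuple[float, ...]


@dataclass(frozen=True)
class Utterance:
    word: str
    speaker: str       # hypothetical speaker ID, e.g. "ctl" (normal) or "dys"
    dysarthric: bool
    audio: Audio


def split_by_vocabulary(utts: Sequence[Utterance], unseen_words: Set[str]):
    """Partition utterances into the seen and unseen word partitions."""
    seen = [u for u in utts if u.word not in unseen_words]
    unseen = [u for u in utts if u.word in unseen_words]
    return seen, unseen


def parallel_pairs(seen: Sequence[Utterance], source: str, target: str):
    """Pair source and target recordings of the same seen word (parallel data)."""
    src = {u.word: u for u in seen if u.speaker == source}
    tgt = {u.word: u for u in seen if u.speaker == target}
    return [(src[w], tgt[w]) for w in sorted(src.keys() & tgt.keys())]


def fit_toy_converter(pairs) -> Callable[[Audio], Audio]:
    """Hypothetical placeholder for a parallel VC model, NOT a real VC system:
    learns one gain equal to total |target| amplitude over total |source| amplitude."""
    src_energy = sum(abs(x) for s, _ in pairs for x in s.audio)
    tgt_energy = sum(abs(x) for _, t in pairs for x in t.audio)
    gain = tgt_energy / src_energy if src_energy else 1.0
    return lambda audio: tuple(gain * x for x in audio)


def augment_unseen(unseen: Sequence[Utterance], source: str, target: str,
                   convert: Callable[[Audio], Audio]) -> List[Utterance]:
    """Synthesize target-style utterances of unseen words from source recordings."""
    return [
        Utterance(u.word, target + "_synthetic", True, convert(u.audio))
        for u in unseen
        if u.speaker == source
    ]


# Toy usage: "yes" is seen (recorded by both speakers); "no" is unseen for "dys".
utts = [
    Utterance("yes", "ctl", False, (0.5, -0.5)),
    Utterance("yes", "dys", True, (0.25, -0.25)),
    Utterance("no", "ctl", False, (1.0, -1.0)),
]
seen, unseen = split_by_vocabulary(utts, {"no"})
pairs = parallel_pairs(seen, "ctl", "dys")
convert = fit_toy_converter(pairs)
synthetic = augment_unseen(unseen, "ctl", "dys", convert)
print(synthetic)
```

The split by word rather than by recording mirrors the abstract's goal: the converter sees only seen-word pairs, so the synthetic utterances cover words the dysarthric speaker never recorded. A real system would replace `fit_toy_converter` with a trained parallel voice conversion model and `Audio` with actual acoustic features.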