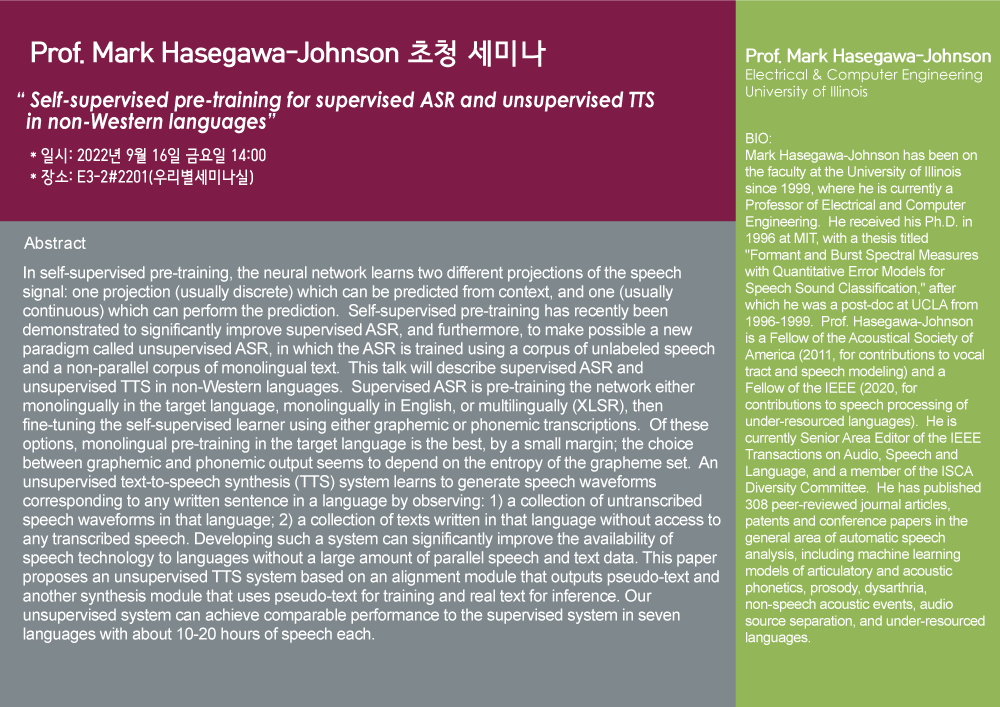

O Speaker: Prof. Mark Hasegawa-Johnson (Electrical & Computer Engineering, University of Illinois)

O Title: Self-supervised pre-training for supervised ASR and unsupervised TTS in non-Western languages

O Date: 2022.9.16(Fri)

O Start Time: 14:00

O Venue: E3-2 Building #2201

O Abstract:

In self-supervised pre-training, the neural network learns two different projections of the speech signal: one (usually discrete) that can be predicted from context, and one (usually continuous) that performs the prediction. Self-supervised pre-training has recently been shown to significantly improve supervised ASR. It has also made possible a new paradigm called unsupervised ASR, in which the recognizer is trained on a corpus of unlabeled speech and a non-parallel corpus of monolingual text. This talk will describe supervised ASR and unsupervised TTS in non-Western languages. For supervised ASR, the network is pre-trained in one of three ways: monolingually in the target language, monolingually in English, or multilingually (XLSR). The self-supervised learner is then fine-tuned on either graphemic or phonemic transcriptions. Of these options, monolingual pre-training in the target language is best, by a small margin. The choice between graphemic and phonemic output seems to depend on the entropy of the grapheme set.

An unsupervised text-to-speech synthesis (TTS) system learns to generate speech waveforms corresponding to any written sentence in a language by observing 1) a collection of untranscribed speech waveforms in that language and 2) a collection of texts written in that language, without access to any transcribed speech. Developing such a system can significantly improve the availability of speech technology for languages that lack large amounts of parallel speech and text data. We propose an unsupervised TTS system built from two modules: an alignment module that outputs pseudo-text, and a synthesis module that is trained on pseudo-text and takes real text at inference. Our unsupervised system achieves performance comparable to that of a supervised system in seven languages, with about 10-20 hours of speech each.
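The abstract notes that the graphemic-versus-phonemic choice seems to depend on the entropy of the grapheme set. The sketch below shows one way that quantity could be measured. It is a minimal illustration under stated assumptions, not the speaker's method. It assumes "entropy" means the Shannon entropy, in bits, of the empirical character-unigram distribution of a text corpus, and it treats each NFC-normalized, non-whitespace code point as one grapheme. The function name `grapheme_entropy` is hypothetical.

```python
import math
import unicodedata
from collections import Counter


def grapheme_entropy(text: str) -> float:
    """Shannon entropy (bits per symbol) of the character-unigram distribution.

    Assumption (hypothetical definition): each Unicode code point left after
    NFC normalization, excluding whitespace, counts as one grapheme. Scripts
    with multi-code-point grapheme clusters would need a proper segmenter.
    """
    symbols = [ch for ch in unicodedata.normalize("NFC", text) if not ch.isspace()]
    if not symbols:
        return 0.0
    counts = Counter(symbols)
    total = len(symbols)
    # H = sum_i p_i * log2(1 / p_i), with p_i estimated from relative counts
    return sum((c / total) * math.log2(total / c) for c in counts.values())


if __name__ == "__main__":
    # A two-symbol alphabet used equally often carries 1 bit per symbol;
    # four equally frequent symbols carry 2 bits per symbol.
    print(grapheme_entropy("abab"))
    print(grapheme_entropy("a b c d"))
```

Under this definition, a script with a large, evenly used character inventory scores higher than a small alphabet. Whether the talk uses this exact measure, or any particular threshold between graphemic and phonemic output, is not stated in the abstract.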

O Bio:

Mark Hasegawa-Johnson has been on the faculty of the University of Illinois since 1999, where he is currently a Professor of Electrical and Computer Engineering. He received his Ph.D. from MIT in 1996 with a thesis titled “Formant and Burst Spectral Measures with Quantitative Error Models for Speech Sound Classification,” after which he was a postdoc at UCLA from 1996 to 1999. Prof. Hasegawa-Johnson is a Fellow of the Acoustical Society of America (2011, for contributions to vocal tract and speech modeling) and a Fellow of the IEEE (2020, for contributions to speech processing of under-resourced languages). He is currently a Senior Area Editor of the IEEE/ACM Transactions on Audio, Speech, and Language Processing and a member of the ISCA Diversity Committee. He has published 308 peer-reviewed journal articles, patents, and conference papers in the general area of automatic speech analysis, including machine learning models of articulatory and acoustic phonetics, prosody, dysarthria, non-speech acoustic events, audio source separation, and under-resourced languages.

Copyright ⓒ 2015 KAIST Electrical Engineering. All rights reserved. Made by PRESSCAT