Title: NAND-Net: A 133.6TOPS/W Compute-In-Memory SRAM Macro with Fully Parallel One-Step Multi-Bit Computation

Authors: Edward Choi, Injun Choi, Chanhee Jeon, Gichan Yun, Donghyeon Yi, Sohmyung Ha, Ik-Joon Chang, Minkyu Je

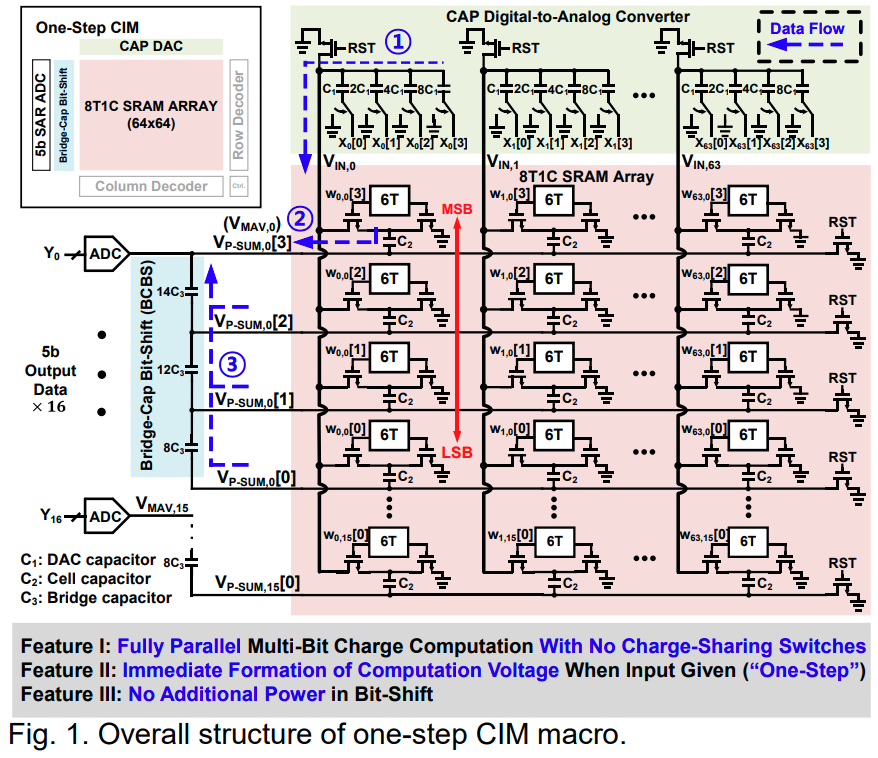

SRAM-based compute-in-memory (CIM) structures can perform deep neural network (DNN) computations in the mixed-signal domain with high energy efficiency, but suffer from accuracy tradeoffs and limitations arising from analog nonidealities. Recently, circuit techniques were developed to support multi-bit analog computations in SRAM-based CIM macros [1], [2], which compute multiplication and accumulation by using transistor currents. However, the transistor current is nonlinear with respect to the gate voltage, which significantly degrades the accuracy of DNNs. Some works address this problem by using charge-based computation [3], [4], where the multiplication results between 1b weights and multi-bit inputs are first stored in capacitors. Multi-bit-weight computations are then achieved by shifting and adding the multiplication results either in the digital domain [3] or in the analog domain using a charge-sharing method [1]. The digital method typically requires higher ADC precision and one ADC for every accumulation, which makes it power-hungry. The analog charge-sharing method requires switches that must be controlled, which exposes it to charge-injection noise and dissipates considerable power in turning the switches on and off. To address these issues, this work proposes an 8T1C SRAM-based CIM macro structure that supports (1) multi-bit-weight charge-based computation without additional switches for charge sharing; (2) simple and fast “one-step” computation, in which the multi-bit-weight multiply-accumulate-averaging (MAV) voltage is formed immediately when the input is given; (3) a compact 8T1C bit cell using a metal-oxide-metal (MOM) capacitor, which occupies only 1.5× the cell area of a conventional 6T SRAM under logic rules; and (4) no additional power consumption for bit shifting, for energy-efficient computing. We fabricated the proposed 4kb SRAM CIM macro in a 65nm process. Its structure is shown in Fig. 1, and it supports a fully parallel computation of 1024 MAV operations with 64 4b inputs and 16 4b weights.
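The shift-and-add arithmetic described above can be illustrated numerically. The following is a minimal Python sketch of the arithmetic only, not a model of the 8T1C circuit. It assumes unsigned 4b values and assumes the array is arranged as 16 weight columns that share the same 64 inputs (so 64 × 16 = 1024 products); the function names `mav_direct` and `mav_bit_sliced` are hypothetical and chosen for illustration.

```python
import random

BITS = 4          # 4b inputs and 4b weights, per the text
N_INPUTS = 64     # number of inputs, per the text
N_WEIGHTS = 16    # 4b weights per input; the column arrangement is an assumption


def mav_direct(inputs, weights):
    """Multiply-accumulate-average: mean of input * weight over all inputs."""
    return sum(x * w for x, w in zip(inputs, weights)) / len(inputs)


def mav_bit_sliced(inputs, weights, bits=BITS):
    """Same value, built from 1b-weight partial averages that are
    binary-weighted (shifted) and added. Unsigned weights are assumed."""
    total = 0.0
    for b in range(bits):
        # 1b weight plane: bit b of every weight
        plane = [(w >> b) & 1 for w in weights]
        partial = sum(x * p for x, p in zip(inputs, plane)) / len(inputs)
        total += partial * (1 << b)  # weight the partial result by 2**b
    return total


random.seed(0)
inputs = [random.randrange(1 << BITS) for _ in range(N_INPUTS)]
weight_columns = [
    [random.randrange(1 << BITS) for _ in range(N_INPUTS)]
    for _ in range(N_WEIGHTS)
]

# One averaged result per weight column, computed from 1b weight planes.
results = [mav_bit_sliced(inputs, col) for col in weight_columns]
```

In this sketch the bit-sliced result equals the direct multi-bit average for every column. The digital and charge-sharing approaches discussed above perform the binary weighting as a separate step after the 1b-weight results are stored, whereas the proposed macro is described as forming the multi-bit-weight MAV voltage directly when the input is applied.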