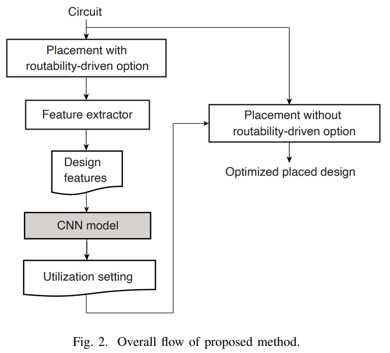

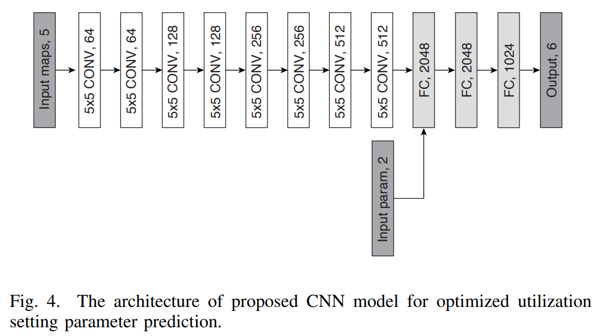

Circuits that are placed with very low (or high) aspect ratio are susceptible to routing overflows. Such designs are difficult to close and usually end up with larger area with low area utilization. We observe that non-uniform setting of utilization target greatly helps in these designs, specifically low utilization in the center and gradually higher utilization toward the ends. We introduce a convolutional neural network (CNN) model to predict the setting of utilization target values. Experiments indicate that routing congestion overflows are reduced by 29% on average of test designs with 40% reduction in wirelength.

Title: ARCHON: A 332.7TOPS/W 5b Variation-Tolerant Analog CNN Processor Featuring Analog Neuronal Computation Unit and Analog Memory

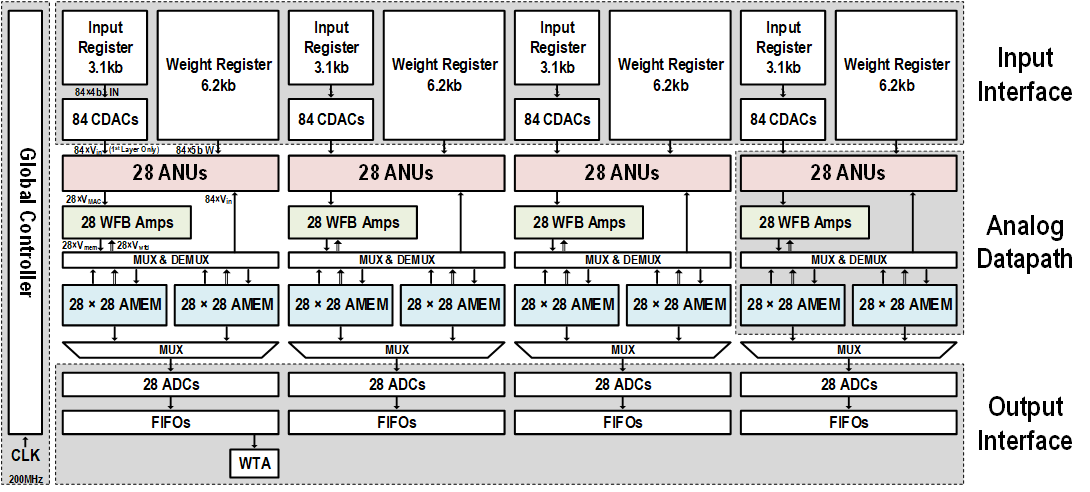

Abstract: In this paper, we present a fully analog CNN processor featuring convolution, pooling, and non-linearity (RELU) datapath fully (end-to-end) in the analog domain, with no analog-to-digital conversion between layers. The processor adopts a variation-tolerant analog design approach, including analog memory with a write-with-feedback scheme that allows the fully analog processor to be robust to PVT variations. The 28nm chip achieves a peak efficiency of 332.7TOPS/W for 5b equivalent precision.

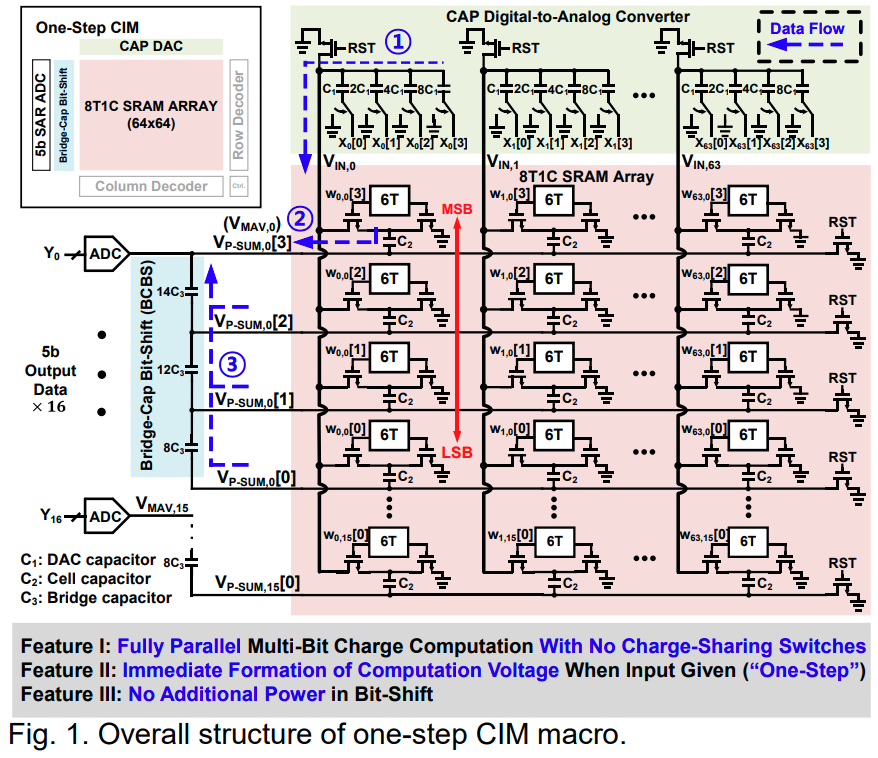

Title: NAND-Net: A 133.6TOPS/W Compute-In-Memory SRAM Macro with Fully Parallel One-Step Multi-Bit Computation

Authors: Edward Choi, Injun Choi, Chanhee Jeon, Gichan Yun, Donghyeon Yi, Sohmyung Ha, Ik-Joon Chang, Minkyu Je1

SRAM-based compute-in-memory (CIM) structures have shown ways to perform deep neural network (DNN) computations in the mixed-signal domain with high energy efficiency but suffer from the tradeoff and limitations in their accuracy arising from analog nonidealities. Recently, circuit techniques were developed to support multi-bit analog computations in SRAM-based CIM macro [1], [2], which computes multiplication and accumulation by using transistor currents. However, the transistor current has nonlinear characteristics with respect to the gate voltage, significantly degrading the accuracies of DNNs. Some works address this problem by using charge-based computation [3], [4], where the multiplication results between 1b weight and multi-bit inputs are firstly stored in capacitors. Multi-bit-weight computations are then achieved by shifting and adding the multiplication result outputs either in the digital domain [3] or in the analog domain using a charge-sharing method [1]. The digital method typically requires a higher ADC precision and one ADC for every accumulation, becoming power heavy. The analog charge-sharing method requires switches to control, being exposed to charge injection noise and dissipating considerable power to turn on and off the switches. To address these issues, this work proposes an 8T1C SRAM-based CIM macro structure, which supports (1) multi-bit-weight chargebased computation without additional switches used for charge sharing; (2) a simple and fast computation where multi-bit-weight multiply-accumulate-averaging (MAV) voltage is immediately formed when the input is given, namely “one-step” computation; (3) compact 8T1C bit cell using metal-oxide-metal (MOM) capacitor which incurs only 1.5× cell area of the conventional 6T SRAM under logic rules; and (4) no additional power consumption in bit-shift for energy-efficient computing. We fabricated the proposed 4kb SRAM CIM macro in a 65nm process, whose structure is shown in Fig. 1, supporting a fully parallel computation of 1024 MAV operations with 64 4b inputs and 16 4b weights.

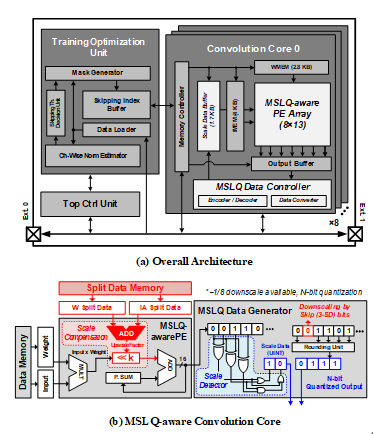

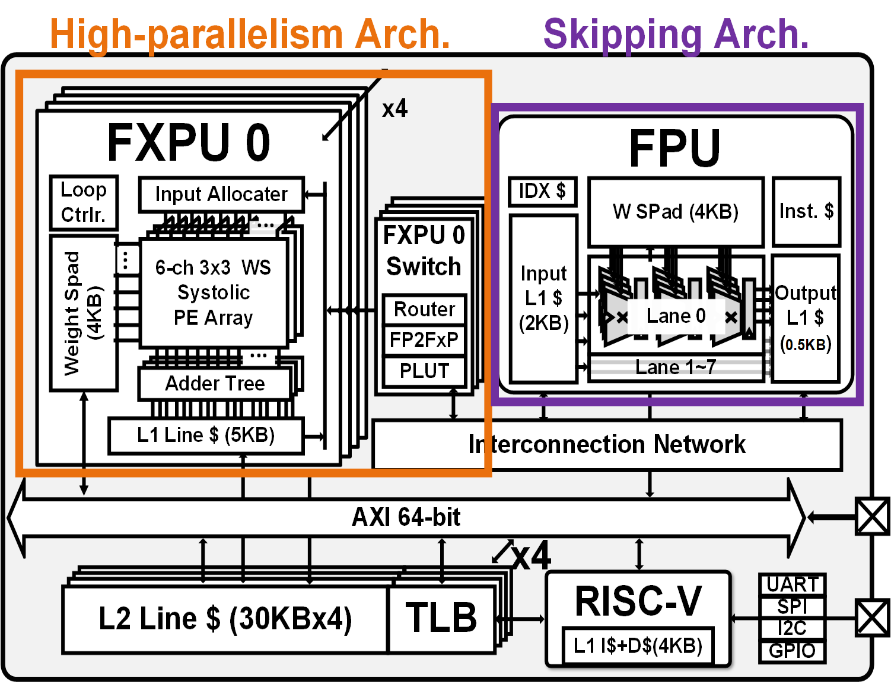

Online training is essential to maintain a high object detection (OD) in various environments. However, additional computation workload, EMA, and high bit precision is the problem of conventional online learning scheme on mobile devices. Therefore, a low power real-time online learning OD processor is proposed with three key features. In this paper, we present low power online learning processor for mobile devices with 3 key features: 1) Multiscale linear quantization and architecture to support it for low-bit fxp-based arithmetic at all stages of online learning. 2) Low-gradient channel skipping for computation reduction and EMA reduction. 3) Gradient Norm Estimation to support gradient norm clipping with less than 0.1% additional computations for fast adaptation. As a result, the proposed processor achieves 34 frame-per-second real-time OD with accurate online learning while only consuming 49.5mW.

Related papers:

Song, Seokchan, et al. “A 49.5 mW Multi-scale Linear Quantized Online Learning Processor for Real-Time Adaptive Object Detection.” IEEE Transactions on Circuits and Systems II: Express Briefs (2022).

Song, Seokchan, et al. “A 49.5 mW Multi-Scale Linear Quantized Online Learning Processor for Real-Time Adaptive Object Detection”, IEEE International Symposium on Circuits and Systems (ISCAS), May. 2022

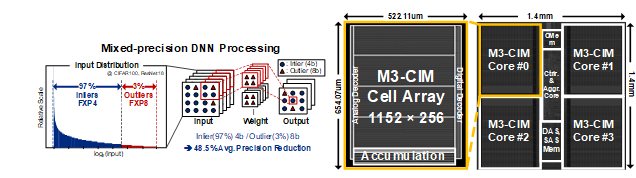

A Mixed-mode Computing-in memory (CIM) processor for the mixed-precision Deep Neural Network (DNN) processing is proposed. Due to the bit-serial processing for the multi-bit data, the previous CIM processors could not exploit the energy-efficient computation of mixed-precision DNNs. This paper proposes an energy-efficient mixed-mode CIM processor with two key features: 1) Mixed-Mode Mixed precision CIM (M3-CIM) which achieves 55.46% energy efficiency improvement. 2) Digital-CIM for In-memory MAC for the increased throughput of M3-CIM. The proposed CIM processor was simulated in 28nm CMOS technology and occupies 1.96 mm2. It achieves a state-of-the-art energy efficiency of 161.6 TOPS/W with 72.8% accuracy at ImageNet (ResNet50).

Related papers:

Wooyoung Jo, Sangjin Kim, Juhyoung Lee, Soyeon Um, Zhiyong Li, and Hoi-jun Yoo, “A 161.6 TOPS/W Mixed-mode Computing-in-Memory Processor for Energy-Efficient Mixed-Precision Deep Neural Networks”, Int’l Symp. on Circuits and Systems (ISCAS), May 2022.

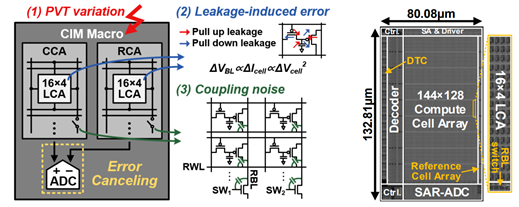

Computing-in-memory (CIM) shows high energy-efficiency through the analog DNN computation inside the memory macros. However, as the DNN size increases, the energy-efficiency of CIM is reduced by external memory access (EMA). One of the promising solutions is eDRAM based CIM to increase memory capacity with a high density cell. Although the eDRAM-CIM has a higher density than the SRAM-CIM, it suffers from both poor robustness and a low signal-to-noise ratio (SNR). In this work, the energy-efficient eDRAM-CIM macro is proposed while improving computational robustness and SNR with three key features: 1) High SNR voltage-based accumulation with segmented BL architecture (SBLA), resulting in 17.1 dB higher SNR, 2) canceling PVT/leakage-induced error with common-mode error canceling (CMEC) circuit, resulting in 51.4% PVT variation reduction and 51.4% refresh power reduction, 3) a ReLU-based zero-gating ADC (ZG-ADC), resulting in ADC power reduction up to 58.1%. According to these new features, the proposed eDRAM-CIM macro achieves 81.5-to-115.0 TOPS/W energy-efficiency with 209-to-295 μW power consumption when 4b×4b MAC operation is performed with 250 MHz core frequency. The proposed macro also achieves 91.52% accuracy at the CIFAR-10 object classification dataset (ResNet-20) without accuracy drop even with PVT variation.

Related papers:

Ha, Sangwoo, et al. “A 36.2 dB High SNR and PVT/Leakage-robust eDRAM Computing-In-Memory Macro with Segmented BL and Reference Cell Array.” IEEE Transactions on Circuits and Systems II: Express Briefs (2022).

Ha, Sangwoo, et al. “A 36.2 dB High SNR and PVT/Leakage-robust eDRAM Computing-In-Memory Macro with Segmented BL and Reference Cell Array”, IEEE International Symposium on Circuits and Systems (ISCAS), May. 2022

With the rise of contactless communication and streaming services, Super-resolution (SR) in mobile devices has become one of the most important image processing technologies. Also, the popularity of high-end Application Processor (AP) and high-resolution display in mobile drives the development of the lightweight mobile SR-CNNs, which show the high reconstruction quality. However, the large size and wide dynamic range of both images and intermediate feature maps in CNN hidden layers pose challenges for mobile platforms. Constraints from the limited power and shared bandwidth on mobile platform, a low power and energy-efficient architecture is required.

This paper presents an image processing SoC exploiting non-sparse SR task. It contributes 2 following key features: 1) Heterogeneous architecture with only 8bit FP-FXP hybrid-precision for SR task, and 2) data lifetime-aware two-way optimized cache subsystem for energy-efficient depth-first image processing. With highly optimized heterogeneous cores and cache subsystem, our SoC presents 2.6x higher energy-efficiency than previous SRNPU and 107 frame-per-second (fps) framerate running 4x SR image generation to Full-HD scale with 0.92 mJ/frame energy consumption.

Related papers:

Z. Li, S. Kim, D. Im, D. Han and H. -J. Yoo, “An 0.92 mJ/frame High-quality FHD Super-resolution Mobile Accelerator SoC with Hybrid-precision and Energy-efficient Cache,” 2022 IEEE Custom Integrated Circuits Conference (CICC), 2022.

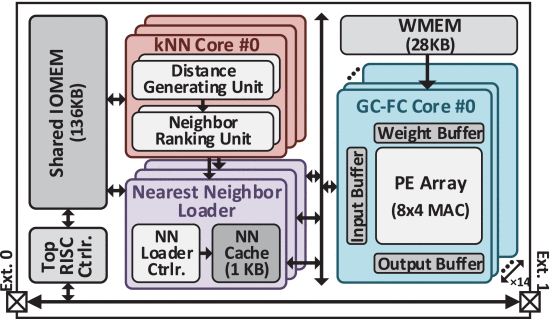

A low-power graph convolutional network (GCN) processor is proposed for accelerating 3D point cloud semantic segmentation (PCSS) in real-time on mobile devices. Three key features enable the low-power GCN-based 3D PCSS. First, the new hardware-friendly GCN algorithm, sparse grouping-based dilated graph convolution (SG-DGC) is proposed. SG-DGC reduces 71.7% of the overall computation and 76.9% of EMA through the sparse grouping of the point cloud. Second, the two-level pipeline (TLP) consisting of the point-level pipeline (PLP) and group-level pipelining (GLP) was proposed to improve low utilization by the imbalanced workload of GCN. The PLP enables point-level module-wise fusion (PMF) which reduces 47.4% of EMA for low power consumption. Also, center point feature reuse (CPFR) reuses computation results of the redundant operation and reduces 11.4% of computation. Finally, the GLP increased the core utilization by 21.1% by balancing the workload of graph generation and graph convolution and enable 1.1× higher throughput. The processor is implemented with 65nm CMOS technology, and the 4.0mm 2 3D PCSS processor show 95mW power consumption while operating in real-time of 30.8 fps in the 3D PCSS of the indoor scene with 4k points.

Related papers:

Kim, Sangjin, et al. “A Low-Power Graph Convolutional Network Processor With Sparse Grouping for 3D Point Cloud Semantic Segmentation in Mobile Devices.” IEEE Transactions on Circuits and Systems I: Regular Papers (2022).

Kim, Sangjin, et al. “A 54.7 fps 3D Point Cloud Semantic Segmentation Processor with Sparse Grouping based Dilated Graph Convolutional Network for Mobile Devices.” 2020 IEEE International Symposium on Circuits and Systems (ISCAS). IEEE, 2020.

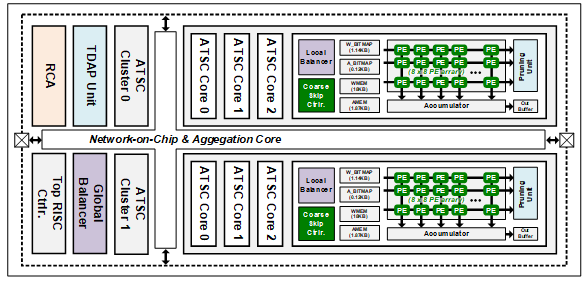

이 논문에서는 에너지 효율적인 심층 신경망 훈련을 지원하는 TSUNAMI를 제안한다. TSUNAMI는 활성화 및 가중치에서 0을 생성하기 위해 다중 모드 반복 가지치기를 지원한다. 타일 기반 동적 활성화 프루닝 유닛과 가중치 메모리 공유 프루닝 유닛은 프루닝으로 인해 요구되는 추가 메모리 액세스를 제거한다. Coarse-zero skipping 컨트롤러는 여러 개의 불필요한 MAC(multiply-and-accumulation) 연산을 한 번에 생략하고, fine-zero skipping 컨트롤러는 임의로 찾은 불필요한 MAC 연산을 생략한다. 가중치 희소성 balancer는 가중치 희소성 불균형으로 인한 활용도 저하를 해결하고, 각 convolution core의 작업량은 랜덤 채널 allocator에 의해 할당된다. TSUNAMI는 부동 소수점 8비트 활성화 및 가중치로 0.78V 및 50MHz에서 3.42TFLOPS/W의 에너지 효율을 달성하였고, 90% 희소성 조건에서 405.96 TFLOPS/W의 세계 최고 수준의 에너지 효율을 달성했다.

Related papers:

Kim, Sangyeob, et al. “TSUNAMI: Triple Sparsity-Aware Ultra Energy-Efficient Neural Network Training Accelerator With Multi-Modal Iterative Pruning.” IEEE Transactions on Circuits and Systems I: Regular Papers (2022).