Abstract

Recent advances in LLM agents have largely built on reasoning backbones like ReAct (Yao et al., 2023), which interleaves thought and action in complex environments. However, ReAct often produces ungrounded or incoherent reasoning steps, leading to misalignment between the agent's actual state and its goal. Our analysis finds that this stems from ReAct's inability to maintain consistent internal beliefs and goal alignment, causing compounding errors and hallucinations. To address this, we introduce ReflAct, a novel backbone that shifts reasoning from merely planning next actions to continuously reflecting on the agent's state relative to its goal. By explicitly grounding decisions in states and enforcing ongoing goal alignment, ReflAct dramatically improves strategic reliability. This design delivers substantial empirical gains: ReflAct surpasses ReAct by 27.7% on average, achieving a 93.3% success rate in ALFWorld. Notably, ReflAct even outperforms ReAct with added enhancement modules (e.g., Reflexion, WKM), showing that strengthening the core reasoning backbone is key to reliable agent performance.
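To make the contrast with ReAct concrete, here is a minimal sketch of one ReflAct-style step. It assumes a hypothetical `llm` callable (prompt string in, completion out) and a hypothetical prompt layout; the paper's actual prompts and parsing are not reproduced here. The only point illustrated is the ordering: the model first states where the agent is relative to the goal, and the action is then conditioned on that reflection.

```python
from typing import Callable, List, Tuple

# Each history entry is (observation, reflection, action).
History = List[Tuple[str, str, str]]


def reflact_step(llm: Callable[[str], str], goal: str,
                 history: History, observation: str) -> Tuple[str, str]:
    """One hypothetical ReflAct-style step: reflect on the state w.r.t. the goal, then act."""
    context = [f"Goal: {goal}"]
    for obs, refl, act in history:
        context += [f"Observation: {obs}", f"Reflection: {refl}", f"Action: {act}"]
    context.append(f"Observation: {observation}")

    # Unlike a ReAct "Thought" that plans the next action, the reflection is asked
    # to summarize the agent's current state and how it relates to the goal.
    reflection = llm("\n".join(context)
                     + "\nReflection (current state relative to the goal):")
    # The action is then conditioned on that goal-grounded reflection.
    action = llm("\n".join(context + [f"Reflection: {reflection}"]) + "\nAction:")
    return reflection, action
```

In a full agent loop, each step would append `(observation, reflection, action)` to the history before calling the environment again.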

Abstract

Reinforcement learning with offline data suffers from Q-value extrapolation errors. To address this issue, we first demonstrate that linear extrapolation of the Q-function beyond the data range is particularly problematic. To mitigate this, we propose guiding the gradual decrease of Q-values outside the data range, which is achieved through reward scaling with layer normalization (RS-LN) and a penalization mechanism for infeasible actions (PA). By combining RS-LN and PA, we develop a new algorithm called PARS. We evaluate PARS across a range of tasks, demonstrating superior performance compared to state-of-the-art algorithms in both offline training and online fine-tuning on the D4RL benchmark, with notable success in the challenging AntMaze Ultra task.
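The toy sketch below illustrates only why layer normalization can stop a Q-head from extrapolating linearly: after normalization (without learned affine parameters) the feature vector has norm at most sqrt(d), so a linear head is bounded by ||w|| sqrt(d) regardless of how far the input lies from the data. The feature map `toy_features` and the weights are hypothetical; the reward-scaling factor and the infeasible-action penalty (PA) of PARS are not modeled here.

```python
import math
from typing import List


def layer_norm(h: List[float], eps: float = 1e-5) -> List[float]:
    """Layer normalization without learned scale/shift."""
    mean = sum(h) / len(h)
    var = sum((x - mean) ** 2 for x in h) / len(h)
    return [(x - mean) / math.sqrt(var + eps) for x in h]


def q_value(features: List[float], w: List[float], use_ln: bool) -> float:
    """Linear Q-head on top of (optionally layer-normalized) penultimate features."""
    z = layer_norm(features) if use_ln else features
    return sum(wi * zi for wi, zi in zip(w, z))


def toy_features(action: float) -> List[float]:
    """Hypothetical penultimate features that grow linearly with the action magnitude."""
    coeffs, offsets = [1.0, 2.0, -1.0, 3.0], [0.0, 1.0, 0.5, -2.0]
    return [c * action + b for c, b in zip(coeffs, offsets)]


w = [0.5, -1.0, 0.3, 0.8]
# ||LN(h)|| <= sqrt(d), so |Q| <= ||w|| * sqrt(d) by Cauchy-Schwarz.
ln_bound = math.sqrt(sum(x * x for x in w)) * math.sqrt(len(w))

for a in [1.0, 10.0, 1000.0]:
    # Without LN the Q-value grows linearly in a; with LN it stays within ln_bound.
    print(a, q_value(toy_features(a), w, use_ln=False),
          q_value(toy_features(a), w, use_ln=True))
```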

Main Figure

Cell-free multiple-input-multiple-output (MIMO) is poised to enable scalable next-generation cellular networks. To this end, it is crucial to optimize the cell-free MIMO link configuration, including user associations, data stream allocation, and beamforming (BF). However, the scalability of link configuration optimization is significantly challenged as signaling and computational costs increase with the number of base stations (BSs) and user equipments (UEs). To address this scalability issue, this paper proposes a distributed multi-agent deep reinforcement learning (MADRL)-based cell-free MIMO link configuration framework that leverages interference approximation to minimize the signaling overhead required for channel state information (CSI) exchange. By decomposing the original sum rate maximization problem into BS-specific tasks, the proposed framework reduces the solution search space, making it suitable for distributed MADRL. Simulation results show that the proposed method is scalable: the sum rate increases with the number of BSs and UEs.
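As a hedged illustration of what "decomposing the sum rate into BS-specific tasks" means, the sketch below computes the sum rate of a heavily simplified network (single-antenna BSs and UEs, one stream per BS, no beamforming) and groups it into per-BS terms. These modeling choices are assumptions for illustration, not the paper's system model; the sketch only shows that each BS's term depends on the other BSs solely through the interference it sees, which is the coupling the paper approximates.

```python
import math
from typing import Dict, List, Tuple


def sum_rate(gain: List[List[float]], assoc: List[int], power: List[float],
             noise: float) -> Tuple[float, Dict[int, float]]:
    """Sum rate (bit/s/Hz) of a toy single-antenna, single-stream-per-BS network.

    gain[b][u]: channel power gain from BS b to UE u
    assoc[u]:   index of the BS serving UE u (each BS serves at most one UE here)
    power[b]:   transmit power of BS b
    """
    assert len(set(assoc)) == len(assoc), "toy model: one UE per BS"
    active = set(assoc)
    per_bs: Dict[int, float] = {b: 0.0 for b in range(len(gain))}
    for u, b in enumerate(assoc):
        signal = power[b] * gain[b][u]
        # Interference from every other active BS: the only coupling between BS terms.
        interference = sum(power[j] * gain[j][u] for j in active if j != b)
        per_bs[b] += math.log2(1.0 + signal / (interference + noise))
    return sum(per_bs.values()), per_bs


total, per_bs = sum_rate([[1.0, 0.1], [0.2, 1.0]], assoc=[0, 1],
                         power=[1.0, 1.0], noise=0.1)
```

Here `per_bs` plays the role of the BS-specific objectives; in a distributed scheme, each agent would optimize its own term using local or approximated interference information instead of the full CSI.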

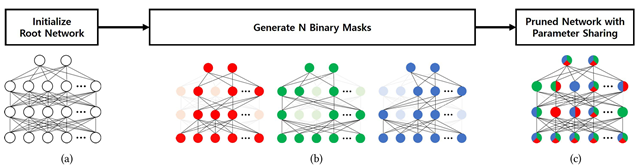

Title: Parameter Sharing with Network Pruning for Scalable Multi-Agent Deep Reinforcement Learning

Venue: AAMAS 2023

Abstract: Handling the problem of scalability is one of the essential issues for multi-agent reinforcement learning (MARL) algorithms to be applied to real-world problems, which typically involve a massive number of agents. For this, parameter sharing across multiple agents has been widely used, since it reduces the training time by decreasing the number of parameters and increasing the sample efficiency. However, using the same parameters across agents limits the representational capacity of the joint policy; consequently, performance can degrade in multi-agent tasks that require different behaviors for different agents. In this paper, we propose a simple method that adopts structured pruning for a deep neural network to increase the representational capacity of the joint policy without introducing additional parameters. We evaluate the proposed method on several benchmark tasks, and numerical results show that the proposed method significantly outperforms other parameter-sharing methods.
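A minimal sketch of the idea of combining a shared network with per-agent structured pruning: all agents use the same weight matrix `W`, but each agent applies its own binary mask over whole hidden units, so different agents effectively run different sub-networks without any extra trainable parameters. Drawing the masks at random with a fixed `keep_ratio` is an assumption made here for illustration; how the paper selects or updates the pruning masks may differ.

```python
import random
from typing import List


def make_agent_masks(num_agents: int, hidden: int, keep_ratio: float,
                     seed: int = 0) -> List[List[float]]:
    """Hypothetical fixed random unit-level (structured) masks, one per agent."""
    rng = random.Random(seed)
    k = max(1, int(hidden * keep_ratio))
    masks = []
    for _ in range(num_agents):
        kept = set(rng.sample(range(hidden), k))
        masks.append([1.0 if i in kept else 0.0 for i in range(hidden)])
    return masks


def forward(x: List[float], W: List[List[float]], mask: List[float]) -> List[float]:
    """Shared ReLU layer (W is hidden x input); the mask zeroes entire hidden units."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]
    return [hi * mi for hi, mi in zip(h, mask)]


masks = make_agent_masks(num_agents=3, hidden=8, keep_ratio=0.5, seed=0)
W = [[1.0, 0.5] for _ in range(8)]            # shared by all agents
outputs = [forward([1.0, 1.0], W, m) for m in masks]
```

Because the masks are binary and not trained, the parameter count stays that of the single shared network, which matches the abstract's "without introducing additional parameters".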

Main Figure:

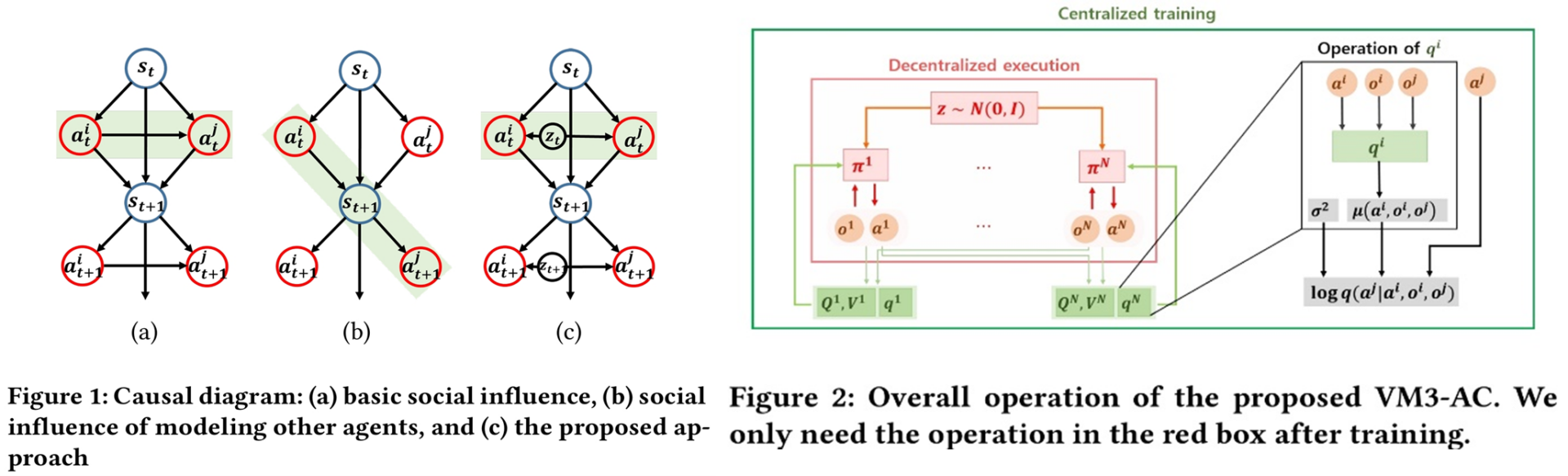

Title: A Variational Approach to Mutual Information-Based Coordination for Multi-Agent Reinforcement Learning

Venue: AAMAS 2023

Abstract: In this paper, we propose a new mutual information framework for multi-agent reinforcement learning that enables multiple agents to learn coordinated behaviors by regularizing the accumulated return with the simultaneous mutual information between multi-agent actions. By introducing a latent variable to induce nonzero mutual information between multi-agent actions and applying a variational bound, we derive a tractable lower bound on the considered maximum mutual information (MMI)-regularized objective function. The derived tractable objective can be interpreted as maximum entropy reinforcement learning combined with uncertainty reduction of other agents' actions. Applying policy iteration to maximize the derived lower bound, we propose a practical algorithm named variational maximum mutual information multi-agent actor-critic (VM3-AC), which follows centralized learning with decentralized execution. We evaluated VM3-AC on several games requiring coordination, and numerical results show that VM3-AC outperforms other MARL algorithms in multi-agent tasks requiring high-quality coordination.
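The interpretation "maximum entropy RL plus uncertainty reduction of other agents' actions" follows from a standard variational lower bound on mutual information. The sketch below shows that generic bound for a pair of agents i and j in state s, with an arbitrary variational distribution q; it is not the paper's exact derivation, which additionally introduces a latent variable (without one, independently sampled actions given s would have zero mutual information).

```latex
\begin{aligned}
I(A^i; A^j \mid s) &= \mathcal{H}(A^j \mid s) - \mathcal{H}(A^j \mid A^i, s) \\
&= \mathcal{H}(A^j \mid s) + \mathbb{E}\big[\log p(a^j \mid a^i, s)\big] \\
&\ge \mathcal{H}(A^j \mid s) + \mathbb{E}\big[\log q(a^j \mid a^i, s)\big]
\end{aligned}
```

The gap in the last step is the expected KL divergence between p(a^j | a^i, s) and q(a^j | a^i, s), which is nonnegative. The first term rewards policy entropy, and the second rewards agent i's action being predictive of agent j's action.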

Main Figure:

Hyun-Young Park, Shahab Asoodeh, Si-Hyeon Lee, “Exactly Minimax-Optimal Locally Differentially Private Sampling,” NeurIPS 2024, Dec. 2024

Abstract: The sampling problem under local differential privacy requirements has recently been studied with potential applications to generative models, but a fundamental analysis of its privacy-utility trade-off (PUT) remains incomplete. In this work, we define the fundamental PUT of private sampling in the minimax sense, using the f-divergence between original and sampling distributions as the utility measure. We characterize the exact PUT for both finite and continuous data spaces under some mild conditions on the data distributions, and propose sampling mechanisms that are universally optimal for all f-divergences. Our numerical experiments demonstrate the superiority of our mechanisms over baselines, in terms of theoretical utilities for finite data space and of empirical utilities for continuous data space.
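To make the privacy-utility trade-off concrete for a finite data space, the sketch below checks the ε-LDP condition for a private sampling mechanism (for every output y and every pair of input distributions, the output probabilities differ by at most a factor e^ε) and measures utility with an f-divergence D_f(P || Q). The mechanism `uniform_mixing`, which mixes the input distribution with the uniform distribution, is a simple baseline chosen here for illustration; it is not the minimax-optimal mechanism proposed in the paper.

```python
import math
from typing import Callable, List, Sequence

Dist = List[float]


def uniform_mixing(p: Sequence[float], eps: float) -> Dist:
    """Baseline mechanism: Q = lam * P + (1 - lam) * Uniform, with lam tuned to eps."""
    k = len(p)
    lam = (math.exp(eps) - 1) / (k + math.exp(eps) - 1)
    return [lam * pi + (1 - lam) / k for pi in p]


def f_divergence(p: Sequence[float], q: Sequence[float],
                 f: Callable[[float], float]) -> float:
    """D_f(P || Q) = sum_y q(y) f(p(y) / q(y)); assumes q(y) > 0 and f defined at 0."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))


def tv(t: float) -> float:
    """f for the total variation distance."""
    return 0.5 * abs(t - 1.0)


def satisfies_ldp(mechanism: Callable[[Sequence[float]], Dist],
                  inputs: List[Dist], eps: float, tol: float = 1e-12) -> bool:
    """Check Q(y | P) <= e^eps * Q(y | P') for all y and all pairs of given inputs."""
    outs = [mechanism(p) for p in inputs]
    bound = math.exp(eps)
    return all(a[y] <= bound * b[y] + tol
               for a in outs for b in outs for y in range(len(a)))


point_masses = [[1.0 if i == j else 0.0 for i in range(3)] for j in range(3)]
for eps in (0.5, 1.0, 2.0):
    q = uniform_mixing(point_masses[0], eps)
    print(eps, f_divergence(point_masses[0], q, tv))   # utility loss shrinks as eps grows
```

Point masses are the worst case for this baseline, which is why they are used as test inputs for the LDP check.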

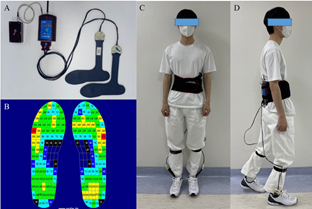

Jae-Min Park, Chang-Won Moon, Byung Chan Lee, Eungseok Oh, Juhyun Lee, Won-Jun Jang, Kang Hee Cho, Si-Hyeon Lee, “Detection of Freezing of Gait in Parkinson’s Disease from Foot-pressure Sensing Insoles using a Temporal Convolutional Neural Network,” Frontiers in Aging Neuroscience, Jul. 2024

Abstract: Freezing of gait (FoG) is a common and debilitating symptom of Parkinson’s disease (PD) that can lead to falls and reduced quality of life. Wearable sensors have been used to detect FoG, but current methods have limitations in accuracy and practicality. In this paper, we aimed to develop a deep learning model using pressure sensor data from wearable insoles to accurately detect FoG in PD patients.

We recruited 14 PD patients and collected data from multiple trials of a standardized walking test using the pedar insole system. We proposed a temporal convolutional neural network (TCNN) and applied rigorous data filtering and selective participant inclusion criteria to ensure the integrity of the dataset. We mapped the sensor data to a structured matrix and normalized it for input into our TCNN. We used a train-test split to evaluate the performance of the model.

We found that the TCNN model achieved the highest accuracy, precision, sensitivity, specificity, and F1 score for FoG detection compared to other models. The TCNN model also showed good performance in detecting FoG episodes, even under various types of sensor noise.

We demonstrated the potential of using wearable pressure sensors and machine learning models for FoG detection in PD patients. The TCNN model showed promising results and could be used in future studies to develop a real-time FoG detection system to improve PD patients’ safety and quality of life. Additionally, our noise impact analysis identifies critical sensor locations, suggesting potential for reducing sensor numbers.
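The core operation of a temporal convolutional network is a causal (optionally dilated) 1D convolution: the output at time t depends only on inputs at t, t - d, t - 2d, and so on, which is what makes such models usable for real-time detection. The sketch below shows that building block together with per-channel z-score normalization of a sensor stream. It is a generic illustration, not the paper's TCNN; the kernel values, dilation, and normalization choice are assumptions.

```python
from typing import List, Sequence


def zscore(channel: Sequence[float]) -> List[float]:
    """Normalize one sensor channel to zero mean and unit variance."""
    mean = sum(channel) / len(channel)
    var = sum((x - mean) ** 2 for x in channel) / len(channel)
    std = var ** 0.5 or 1.0          # a constant channel is mapped to zeros
    return [(x - mean) / std for x in channel]


def causal_conv1d(x: Sequence[float], kernel: Sequence[float],
                  dilation: int = 1) -> List[float]:
    """y[t] = sum_k kernel[k] * x[t - k * dilation], with zeros before the sequence start."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:             # never look at future samples
                acc += w * x[idx]
        y.append(acc)
    return y


pressure = [10.0, 12.0, 30.0, 31.0, 11.0, 9.0]   # hypothetical single-sensor readings
features = causal_conv1d(zscore(pressure), kernel=[0.5, 0.25, 0.25], dilation=2)
```

Stacking such layers with increasing dilation grows the receptive field exponentially, which lets a small model cover long gait windows.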

Main figure:

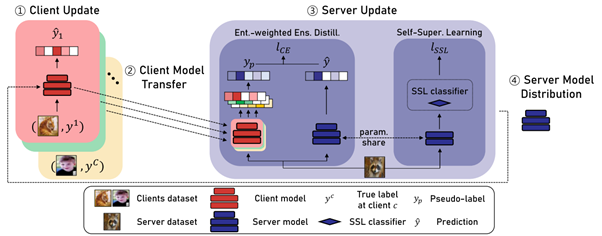

Jae-Min Park, Won-Jun Jang, Tae-Hyun Oh, Si-Hyeon Lee, “Overcoming Client Data Deficiency in Federated Learning by Exploiting Unlabeled Data on the Server,” IEEE Access, Sep. 2024

Abstract: Federated Learning (FL) is a distributed machine learning paradigm in which multiple clients collaboratively train a server model. In practice, clients often possess limited data and are not always available for simultaneous participation in FL, which can lead to data deficiency that degrades the entire learning process. To address this, we propose Federated learning with entropy-weighted ensemble Distillation and Self-supervised learning (FedDS). FedDS effectively handles situations with limited data per client and few clients. The key idea is to exploit the unlabeled data available on the server when aggregating the client models into a server model. We distill the multiple client models into a server model in an ensemble manner. To robustly weigh the quality of the source pseudo-labels from the client models, we propose an entropy weighting method and show that it tends to assign higher weights to more accurate predictions. Furthermore, we jointly leverage a separate self-supervised loss to improve generalization of the server model. We demonstrate the effectiveness of FedDS both empirically and theoretically. For CIFAR-10, our method improves over FedAVG by 12.54% in the data-deficient regime, and by 17.16% and 23.56% in the more challenging noisy-label and Byzantine-client scenarios, respectively. For CIFAR-100 and ImageNet-100, our method improves over FedAVG by 18.68% and 15.06% in the data-deficient regime, respectively.
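A minimal sketch of entropy-weighted ensembling for one unlabeled server sample: each client model's predicted class distribution receives a weight that decreases with its entropy, and the weighted average becomes the pseudo-label for distillation. The specific weighting rule used here (a softmax over negative entropies with a hypothetical `temperature`) is an assumption for illustration; the exact formula in FedDS may differ.

```python
import math
from typing import List, Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy (nats) of a class distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def entropy_weighted_ensemble(client_probs: List[Sequence[float]],
                              temperature: float = 1.0) -> Tuple[List[float], List[float]]:
    """Weight each client by softmax(-H / T) and average the predictions."""
    scores = [-entropy(p) / temperature for p in client_probs]
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    weights = [e / z for e in exps]
    num_classes = len(client_probs[0])
    ensemble = [sum(w * p[c] for w, p in zip(weights, client_probs))
                for c in range(num_classes)]
    return weights, ensemble


# A confident client and an uninformative one: the confident client dominates.
weights, pseudo_label = entropy_weighted_ensemble([[0.9, 0.05, 0.05], [1 / 3, 1 / 3, 1 / 3]])
```

Because the weights sum to one and each input is a distribution, the ensemble output is itself a valid class distribution that can serve as a soft distillation target.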

Main figure