Authors: Mohammadmahdi Rahimi, Younghyun Park, Humaira Kousar, Hasnain Bhatti, Jaekyun Moon

Conference: Neural Information Processing Systems (NeurIPS) 2023

Abstract:

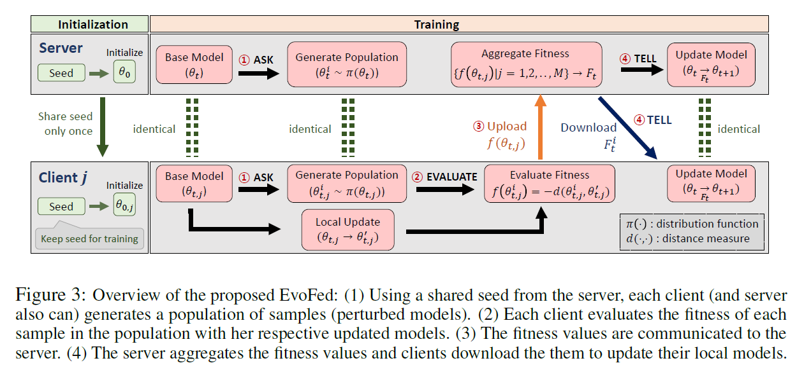

Federated Learning (FL) is a decentralized machine learning paradigm that enables collaborative model training across dispersed nodes without requiring individual nodes to share their data. However, its broad adoption is hindered by the high communication cost of transmitting large model parameters. This paper presents EvoFed, a novel approach that integrates Evolutionary Strategies (ES) with FL to address these challenges. EvoFed employs a concept of ‘fitness-based information sharing’, deviating significantly from conventional model-based FL. Rather than exchanging the actual updated model parameters, each node transmits a distance-based similarity measure between its locally updated model and each member of a noise-perturbed model population. Each node, as well as the server, generates an identical population of perturbed models in a fully synchronized fashion using the same random seeds. With a properly chosen noise variance and population size, certain perturbed models closely reflect the actual model updated on the local dataset, so the transmitted similarity measures (or fitness values) carry nearly the full information about the model parameters. Because the population size is typically much smaller than the number of model parameters, the communication savings are large. The server aggregates these fitness values, weighted by the clients' batch sizes, and uses them to update the global model.
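To make fitness-based information sharing concrete, here is a minimal pure-Python sketch of one round's upload path. It rests on several assumptions that may differ from the authors' implementation. Fitness is taken as the negative squared Euclidean distance between the locally updated model and each perturbed member θ + σ·εᵢ. The noise is standard Gaussian, drawn from a seeded RNG. The server takes a plain batch-size-weighted average of the client fitness vectors. The function names (`make_population`, `client_fitness`, `aggregate_fitness`) and parameters (`seed`, `pop_size`, `sigma`) are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def make_population(seed: int, pop_size: int, dim: int) -> List[Vector]:
    """Draw pop_size Gaussian noise vectors from a seeded RNG.

    Every node that calls this with the same seed gets the same population,
    so the noise itself never has to be transmitted.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]


def client_fitness(global_params: Vector, local_params: Vector,
                   noise: List[Vector], sigma: float) -> Vector:
    """Score each perturbed model theta + sigma * eps_i by its closeness to
    the locally updated model (assumed metric: negative squared L2 distance)."""
    scores = []
    for eps in noise:
        perturbed = [g + sigma * e for g, e in zip(global_params, eps)]
        dist2 = sum((p - l) ** 2 for p, l in zip(perturbed, local_params))
        scores.append(-dist2)
    return scores  # pop_size floats are uploaded instead of dim floats


def aggregate_fitness(client_scores: List[Vector], batch_sizes: List[int]) -> Vector:
    """Server side: batch-size-weighted average of the client fitness vectors."""
    total = sum(batch_sizes)
    pop_size = len(client_scores[0])
    return [
        sum(b * scores[i] for scores, b in zip(client_scores, batch_sizes)) / total
        for i in range(pop_size)
    ]


if __name__ == "__main__":
    dim, pop_size, sigma, seed = 1000, 20, 0.01, 42
    theta = [0.0] * dim
    noise = make_population(seed, pop_size, dim)
    # Two hypothetical clients whose local training moved the model slightly.
    local_a = [t + 0.001 for t in theta]
    local_b = [t - 0.002 for t in theta]
    f_a = client_fitness(theta, local_a, noise, sigma)
    f_b = client_fitness(theta, local_b, noise, sigma)
    global_f = aggregate_fitness([f_a, f_b], batch_sizes=[32, 64])
    print(len(global_f), "fitness values sent instead of", dim, "parameters")
```

The point of the design is visible in the return type of `client_fitness`. Each client uploads a vector of length `pop_size` rather than one of length `dim`, and the shared seed lets every party reconstruct the population locally.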
This global fitness vector is then disseminated back to the nodes, each of which applies the same ES update to stay synchronized with the global model. Our experimental and theoretical analyses demonstrate that EvoFed achieves performance comparable to FedAvg while compressing the model by more than 98.8% on FMNIST and 99.7% on CIFAR-10, significantly reducing overall communication overhead. EvoFed also enhances privacy by providing protection against eavesdroppers who do not participate in collaborative learning. These advancements position EvoFed as a significant step forward in FL, paving the way for more efficient and scalable decentralized machine learning.
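The synchronization step can be sketched the same way. This sketch assumes a standard ES update: the broadcast fitness values are z-score normalized, and the parameters move along the usual ES gradient estimate (α / (Nσ)) Σᵢ fᵢ εᵢ. The normalization, the learning rate `lr`, and the name `es_update` are illustrative assumptions, not the paper's exact rule. Every node regenerates the identical noise from the shared seed and receives the same global fitness vector, so each node's update matches the server's exactly.

```python
import random
import statistics
from typing import List

Vector = List[float]


def make_population(seed: int, pop_size: int, dim: int) -> List[Vector]:
    """Seeded Gaussian noise; identical on every node that shares the seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]


def es_update(params: Vector, global_fitness: Vector, seed: int,
              sigma: float, lr: float) -> Vector:
    """Apply one ES step driven only by the broadcast fitness vector.

    Assumed rule: z-score the fitness values, then step along
    (lr / (N * sigma)) * sum_i f_i * eps_i, the usual ES gradient estimate.
    """
    pop_size = len(global_fitness)
    noise = make_population(seed, pop_size, len(params))
    mean = statistics.fmean(global_fitness)
    std = statistics.pstdev(global_fitness) or 1.0  # guard against all-equal fitness
    norm = [(f - mean) / std for f in global_fitness]
    scale = lr / (pop_size * sigma)
    return [
        p + scale * sum(norm[i] * noise[i][j] for i in range(pop_size))
        for j, p in enumerate(params)
    ]


if __name__ == "__main__":
    seed, sigma, lr = 2023, 0.01, 0.001
    theta = [0.0] * 8
    global_fitness = [-3.0, -1.0, -2.0, -0.5]  # hypothetical broadcast values
    server_theta = es_update(theta, global_fitness, seed, sigma, lr)
    client_theta = es_update(theta, global_fitness, seed, sigma, lr)
    print("server and client in sync:", server_theta == client_theta)
```

Only the fitness vector crosses the network in this sketch. Determinism comes entirely from the shared seed and the shared update rule. If any node used a different seed or normalization, its model would silently drift from the global one.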