Authors: Jungwuk Park*, Dong-Jun Han*, Soyeong Kim, Jaekyun Moon (*=equal contribution)

Conference: International Conference on Machine Learning (ICML) 2023

Abstract:

In domain generalization (DG), the target domain is unknown when the model is being trained, and the trained model should successfully work on an arbitrary (and possibly unseen) target domain during inference. This is a difficult problem, and despite active studies in recent years, it remains a great challenge. In this paper, we take a simple yet effective approach to tackle this issue. We propose test-time style shifting, which shifts the style of the test sample (that has a large style gap with the source domains) to the nearest source domain that the model is already familiar with, before making the prediction. This strategy enables the model to handle any target domains with arbitrary style statistics, without additional model update at test-time. Additionally, we propose style balancing, which provides a great platform for maximizing the advantage of test-time style shifting by handling the DG-specific imbalance issues. The proposed ideas are easy to implement and successfully work in conjunction with various other DG schemes. Experimental results on different datasets show the effectiveness of our methods.
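The style-shifting step can be illustrated with a minimal sketch. It assumes, purely for illustration, that "style" means the channel-wise mean and standard deviation of a feature map, that each source domain is summarized by one such set of statistics, and that a fixed `gap_threshold` decides whether the gap is "large". The names `channel_stats`, `style_distance`, `shift_style`, and `gap_threshold` are hypothetical and are not taken from the paper.

```python
import math

def channel_stats(feature):
    """Per-channel (mean, std) of a feature map given as a list of channels."""
    stats = []
    for channel in feature:
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        stats.append((mean, math.sqrt(var + 1e-5)))  # small epsilon avoids division by zero
    return stats

def style_distance(style_a, style_b):
    """Euclidean distance between two lists of per-channel (mean, std) pairs."""
    return math.sqrt(sum((ma - mb) ** 2 + (sa - sb) ** 2
                         for (ma, sa), (mb, sb) in zip(style_a, style_b)))

def shift_style(feature, source_styles, gap_threshold):
    """Re-style `feature` with the nearest source style if its style gap is large.

    Returns (feature, index of the source style used, or None if left unchanged).
    """
    test_style = channel_stats(feature)
    distances = [style_distance(test_style, s) for s in source_styles]
    nearest = min(range(len(source_styles)), key=distances.__getitem__)
    if distances[nearest] <= gap_threshold:
        return feature, None  # already close to a known style
    shifted = []
    for channel, (mu, sigma), (mu_s, sigma_s) in zip(
            feature, test_style, source_styles[nearest]):
        # Normalize with the test statistics, then re-scale with the source ones.
        shifted.append([sigma_s * (v - mu) / sigma + mu_s for v in channel])
    return shifted, nearest
```

The model weights are never touched in this sketch; only the statistics of the test feature change, which matches the abstract's point that no model update is needed at test time.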

Authors: Hyun-Young Park, Seung-Hyun Nam, Si-Hyeon Lee

Conference: 2023 IEEE International Symposium on Information Theory (ISIT)

Abstract: In this paper, we propose a new class of local differential privacy (LDP) schemes based on combinatorial block designs for discrete distribution estimation. This class not only recovers many known LDP schemes in a unified framework of combinatorial block design, but also suggests a novel way of finding new schemes achieving the optimal (or near-optimal) privacy-utility trade-off with lower communication costs. Indeed, we find many new LDP schemes that achieve both the optimal privacy-utility trade-off and the minimum communication cost among all the unbiased schemes for a certain set of input data sizes and LDP constraints. Furthermore, to partially solve the sparse existence issue of block design schemes, we consider a broader class of LDP schemes based on regular and pairwise-balanced designs, called RPBD schemes, which relax one of the symmetry requirements on block designs. By considering this broader class of RPBD schemes, we can find LDP schemes achieving near-optimal privacy-utility trade-off with reasonably low communication costs for a much larger set of input data sizes and LDP constraints.
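For readers new to the setting, the sketch below shows LDP-based discrete distribution estimation with k-ary randomized response, a standard baseline scheme. It is not the block design or RPBD construction proposed in the paper. It is included only to make the terms "LDP constraint" (the parameter `epsilon`) and "unbiased scheme" concrete. The function names are hypothetical.

```python
import math
import random

def krr_report(x, k, epsilon, rng):
    """Randomize a symbol x in {0, ..., k-1} so that the output satisfies epsilon-LDP."""
    p_true = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p_true:
        return x
    # Otherwise report one of the other k-1 symbols uniformly at random.
    y = rng.randrange(k - 1)
    return y if y < x else y + 1

def krr_estimate(reports, k, epsilon):
    """Unbiased estimate of the input distribution from randomized reports."""
    n = len(reports)
    e = math.exp(epsilon)
    counts = [0] * k
    for y in reports:
        counts[y] += 1
    # Invert E[freq_x] = (p_x * (e - 1) + 1) / (e + k - 1).
    return [((c / n) * (e + k - 1) - 1) / (e - 1) for c in counts]

# Usage: 10,000 users holding symbols from a 3-letter alphabet.
rng = random.Random(0)
data = [0] * 6000 + [1] * 3000 + [2] * 1000
reports = [krr_report(x, 3, 1.0, rng) for x in data]
estimate = krr_estimate(reports, 3, 1.0)
```

Each user sends one of k symbols, so the communication cost here is log2(k) bits per user. The abstract's contribution concerns schemes that improve the trade-off between this cost, the privacy level, and the estimation error.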

Authors: Hyeon-Seong Im, Si-Hyeon Lee

Journal: IEEE Transactions on Information Forensics and Security

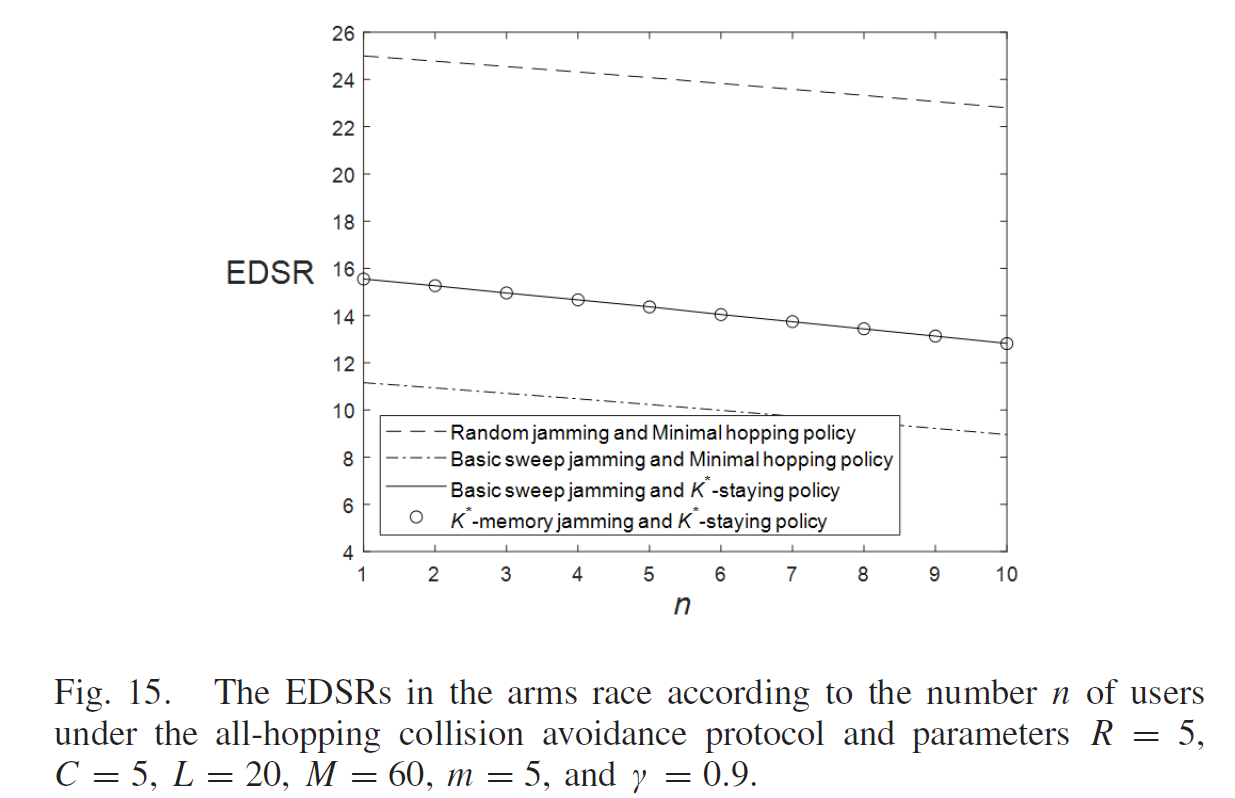

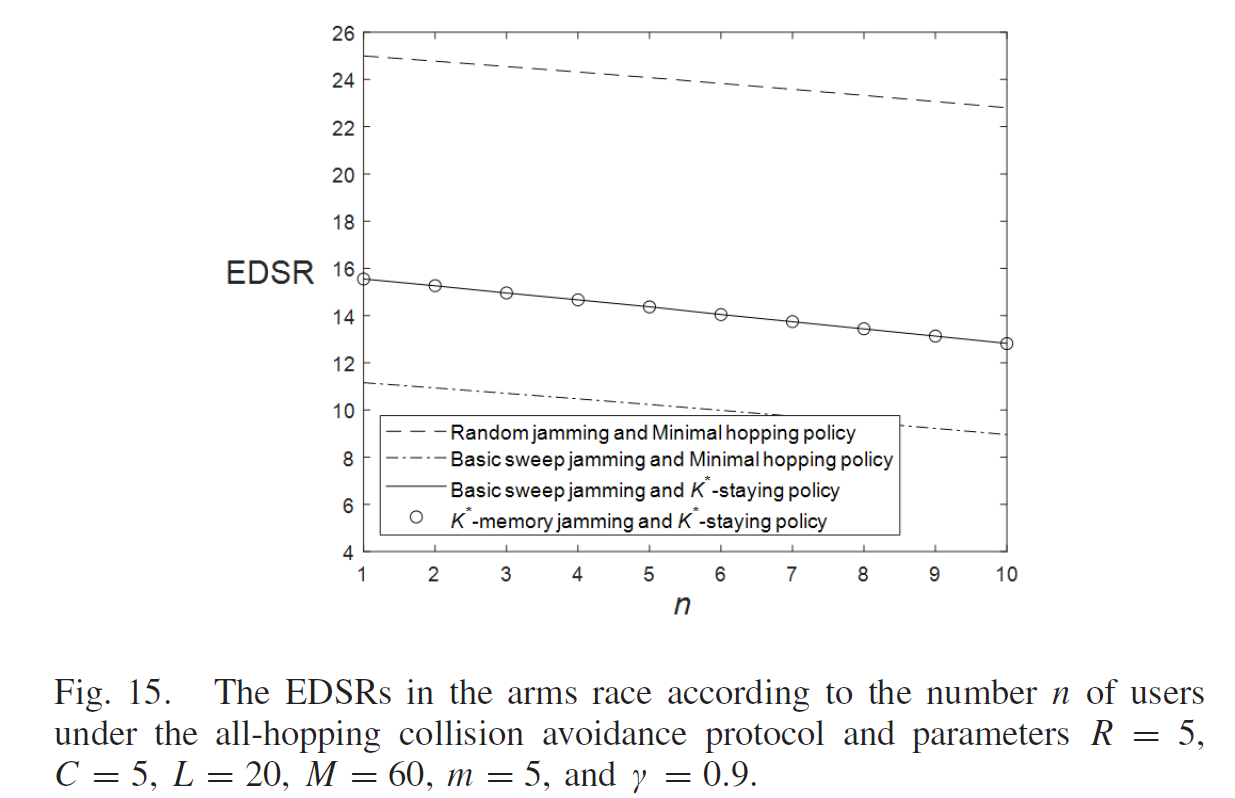

Abstract: For multi-band wireless ad hoc networks of multiple users, an anti-jamming game between the users and a jammer is studied. In this game, the users (resp. jammer) want to maximize (resp. minimize) the expected rewards of the users, taking into account various factors such as communication rate, hopping cost, and jamming loss. We analyze the arms race of the game and derive an optimal frequency hopping policy at each stage of the arms race based on the Markov decision process (MDP). It is analytically shown that the arms race reaches an equilibrium after a few rounds, and a frequency hopping policy and a jamming strategy at the equilibrium are characterized. We propose two kinds of collision avoidance protocols to ensure that at most one user communicates in each frequency band, and provide various numerical results that show the effects of the reward parameters and collision avoidance protocols on the optimal frequency hopping policy and the expected rewards at the equilibrium. Moreover, we discuss equilibria for the case where the jammer adopts some unpredictable jamming strategies.

Authors: Seokhwa Hong, Kyuyeong Kim, Si-Hyeon Lee

Journal: IEEE Communications Letters (2023)

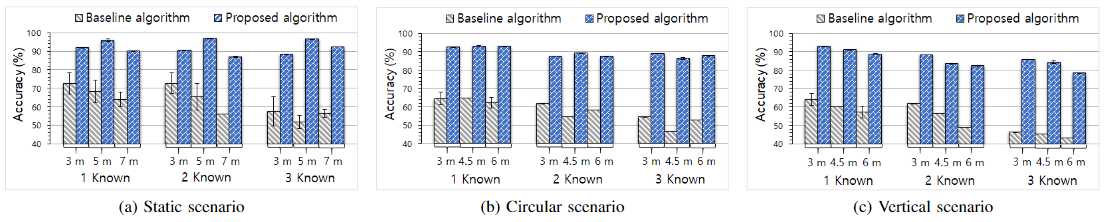

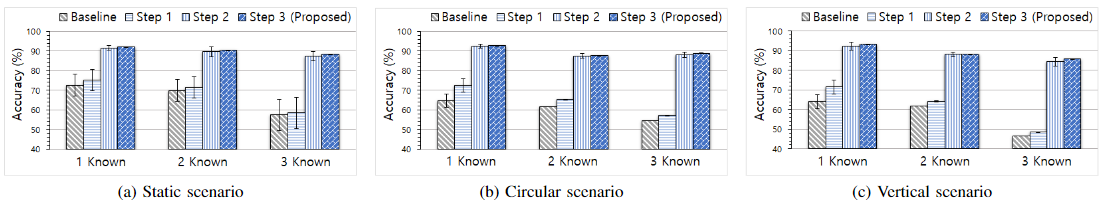

Abstract: In this paper, we propose a novel machine learning based jamming detection algorithm that can classify known attacks used for training and detect unknown attacks not used for training. The proposed algorithm has a hybrid structure of simple classification and anomaly detection models, which are decision tree (DT) and isolation forest (IF), respectively. After a test sample passes through a DT that only classifies it as normal or one of the known attacks, it enters an IF algorithm that determines whether the DT’s decision is indeed correct. Furthermore, an ensemble method is applied to reduce the deviation. The proposed algorithm is evaluated on real datasets from wireless modems operating in the C-band under static and mobile environments with a total of four types of jamming attacks. For the simultaneous classification and detection task, the proposed algorithm is shown to achieve superior performance over a baseline algorithm for all the cases of jamming distances, the number of known jamming attacks, and mobility scenarios.

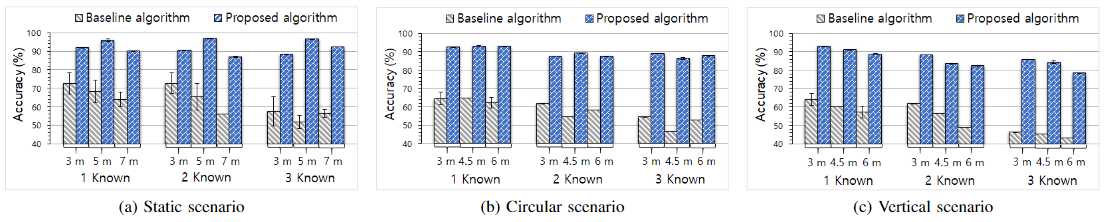

Fig. 1: Classification accuracy

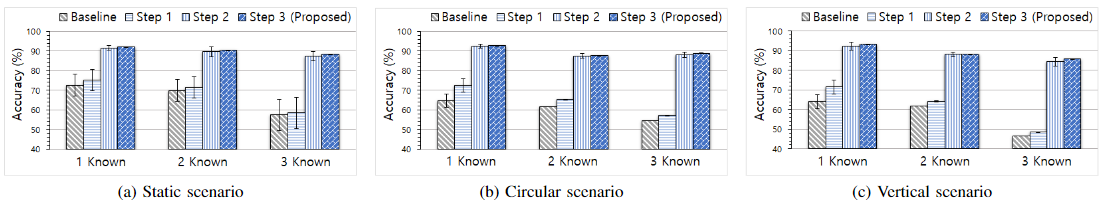

Fig. 2: Step-by-step performance improvements beyond

Authors: Nohyun Ki, Hoyong Choi, Hye Won Chung

Conference: International Conference on Learning Representations (ICLR) 2023

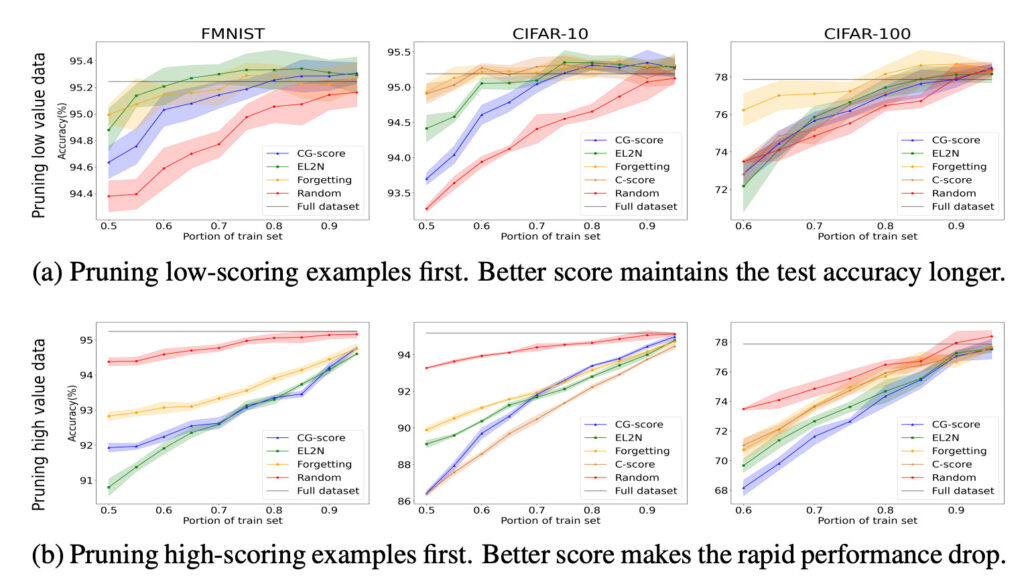

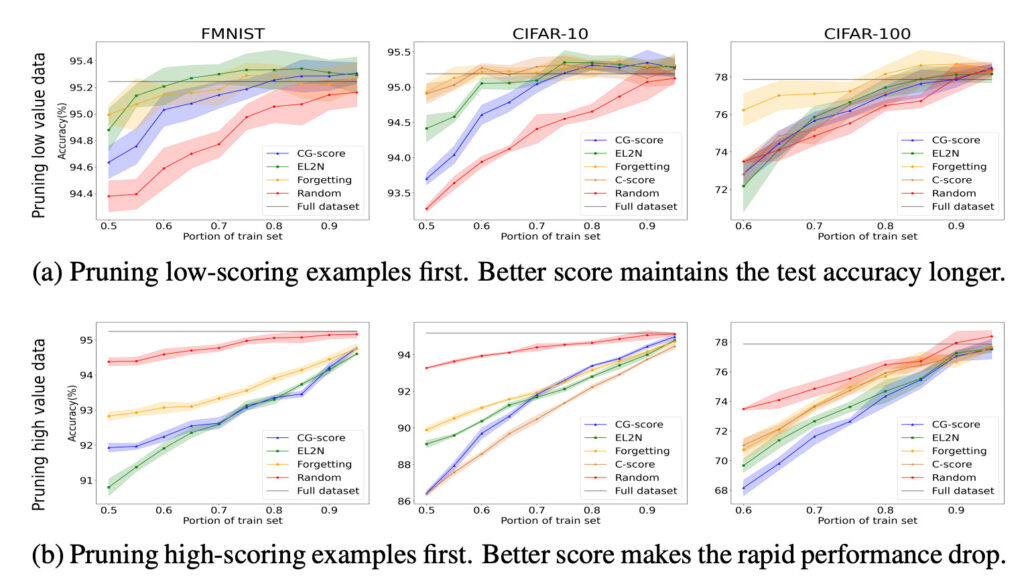

Abstract: Many recent works on understanding deep learning try to quantify how much individual data instances influence the optimization and generalization of a model. Such attempts reveal characteristics and importance of individual instances, which may provide useful information in diagnosing and improving deep learning. However, most of the existing works on data valuation require actual training of a model, which often demands high computational cost. In this paper, we provide a training-free data valuation score, called complexity-gap score, which is a data-centric score to quantify the influence of individual instances in generalization of two-layer overparameterized neural networks. The proposed score can quantify irregularity of the instances and measure how much each data instance contributes to the total movement of the network parameters during training. We theoretically analyze and empirically demonstrate the effectiveness of the complexity-gap score in finding 'irregular or mislabeled' data instances, and also provide applications of the score in analyzing datasets and diagnosing training dynamics. Our code is publicly available at https://github.com/JJchy/CG_score.

Authors: Minguk Jang, Sae-Young Chung, and Hye Won Chung

Conference: International Conference on Learning Representations (ICLR) 2023

Abstract:

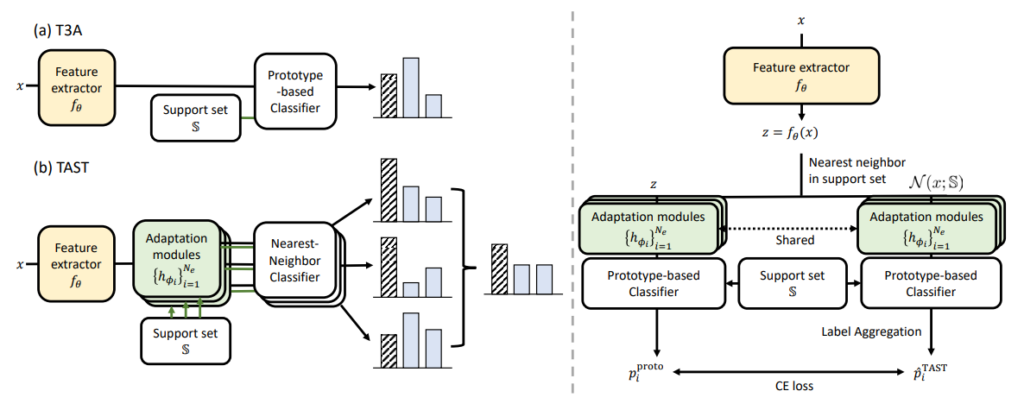

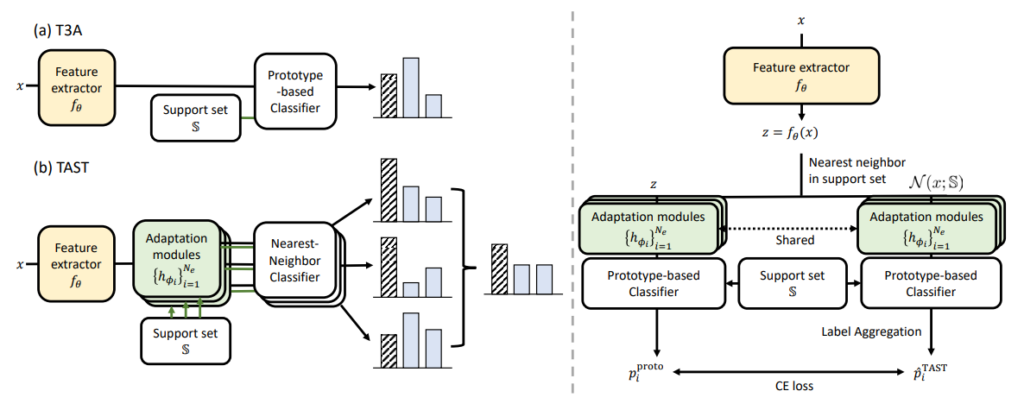

Test-time adaptation (TTA) aims to adapt a trained classifier using online unlabeled test data only, without any information related to the training procedure. Most existing TTA methods adapt the trained classifier using the classifier’s prediction on the test data as pseudo-label. However, under test-time domain shift, accuracy of the pseudo labels cannot be guaranteed, and thus the TTA methods often encounter performance degradation at the adapted classifier. To overcome this limitation, we propose a novel test-time adaptation method, called Test-time Adaptation via Self-Training with nearest neighbor information (TAST), which consists of the following steps: (1) add trainable adaptation modules on top of the trained feature extractor; (2) define a new pseudo-label distribution for the test data by using the nearest neighbor information; (3) train these modules only a few times during test time to match the nearest neighbor-based pseudo-label distribution and a prototype-based class distribution for the test data; and (4) predict the label of test data using the average predicted class distribution from these modules. The pseudo-label generation is based on the basic intuition that a test sample and its nearest neighbor in the embedding space are likely to share the same label under the domain shift. By utilizing multiple randomly initialized adaptation modules, TAST extracts useful information for the classification of the test data under the domain shift, using the nearest neighbor information. TAST showed better performance than the state-of-the-art TTA methods on two standard benchmark tasks: domain generalization (VLCS, PACS, OfficeHome, and TerraIncognita) and image corruption (CIFAR-10/100-C).
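The nearest-neighbor pseudo-labeling idea in step (2) can be sketched as follows. This is a simplified illustration, not the TAST implementation: it assumes cosine similarity in the embedding space, a softmax over prototype similarities with a hypothetical `temperature`, and a fixed neighbor count `k`. It omits the adaptation modules and the training in steps (1), (3), and (4). All function names are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def softmax(scores):
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def prototype_distribution(z, prototypes, temperature=0.1):
    """Class distribution of embedding z from its similarity to class prototypes."""
    return softmax([cosine(z, p) / temperature for p in prototypes])

def nn_pseudo_label(z, support, prototypes, k=2):
    """Average the prototype-based distributions of the k nearest support embeddings."""
    neighbors = sorted(support, key=lambda s: cosine(z, s), reverse=True)[:k]
    dists = [prototype_distribution(s, prototypes) for s in neighbors]
    return [sum(d[c] for d in dists) / len(dists) for c in range(len(prototypes))]
```

The pseudo-label of a test embedding is derived from its neighbors rather than from the classifier's own prediction on that sample, which is the intuition the abstract describes.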

Conference: IEEE International Conference on Computer Communications (INFOCOM) 2023.

Abstract:

A fundamental challenge to providing edge-AI services is the need for a machine learning (ML) model that achieves personalization (i.e., to individual clients) and generalization (i.e., to unseen data) properties concurrently. Existing techniques in federated learning (FL) have encountered a steep tradeoff between these objectives and impose large computational requirements on edge devices during training and inference. In this paper, we propose SplitGP, a new split learning solution that can simultaneously capture generalization and personalization capabilities for efficient inference across resource-constrained clients (e.g., mobile/IoT devices). Our key idea is to split the full ML model into client-side and server-side components, and assign different roles to them: the client-side model is trained to have strong personalization capability optimized to each client’s main task, while the server-side model is trained to have strong generalization capability for handling all clients’ out-of-distribution tasks. We analytically characterize the convergence behavior of SplitGP, revealing that all client models approach stationary points asymptotically. Further, we analyze the inference time in SplitGP and provide bounds for determining model split ratios. Experimental results show that SplitGP outperforms existing baselines by wide margins in inference time and test accuracy for varying amounts of out-of-distribution samples.

Conference: International Conference on Learning Representations (ICLR) 2023.

Abstract:

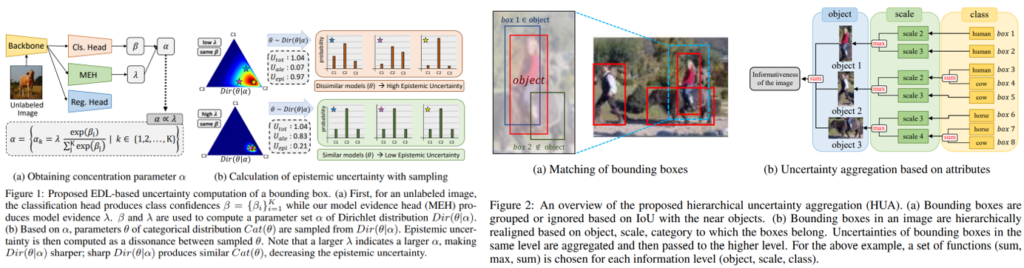

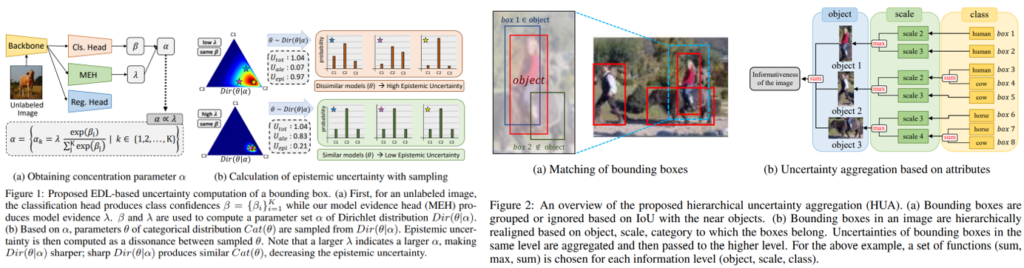

Despite the huge success of object detection, the training process still requires an immense amount of labeled data. Although various active learning solutions for object detection have been proposed, most existing works do not take advantage of epistemic uncertainty, which is an important metric for capturing the usefulness of the sample. Also, previous works pay little attention to the attributes of each bounding box (e.g., nearest object, box size) when computing the informativeness of an image. In this paper, we propose a new active learning strategy for object detection that overcomes the shortcomings of prior works. To make use of epistemic uncertainty, we adopt evidential deep learning (EDL) and propose a new module termed model evidence head (MEH), that makes EDL highly compatible with object detection. Based on the computed epistemic uncertainty of each bounding box, we propose hierarchical uncertainty aggregation (HUA) for obtaining the informativeness of an image. HUA realigns all bounding boxes into multiple levels based on the attributes and aggregates uncertainties in a bottom-up order, to effectively capture the context within the image. Experimental results show that our method outperforms existing state-of-the-art methods by a considerable margin.

Conference: International Conference on Learning Representations (ICLR) 2023.

Abstract:

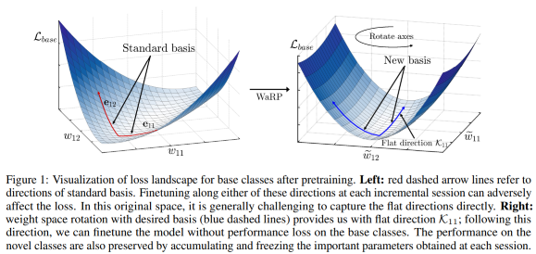

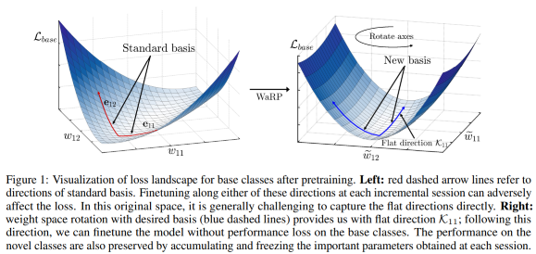

Class-incremental few-shot learning, where new sets of classes are provided sequentially with only a few training samples, presents a great challenge due to catastrophic forgetting of old knowledge and overfitting caused by lack of data. During finetuning on new classes, the performance on previous classes deteriorates quickly even when only a small fraction of parameters are updated, since the previous knowledge is broadly associated with most of the model parameters in the original parameter space. In this paper, we introduce WaRP, the weight space rotation process, which transforms the original parameter space into a new space so that we can push most of the previous knowledge compactly into only a few important parameters. By properly identifying and freezing these key parameters in the new weight space, we can finetune the remaining parameters without affecting the knowledge of previous classes. As a result, WaRP provides additional room for the model to effectively learn new classes in future incremental sessions. Experimental results confirm the effectiveness of our solution and show improved performance over the state-of-the-art methods.

Conference: International Conference on Machine Learning (ICML) 2022.

Abstract:

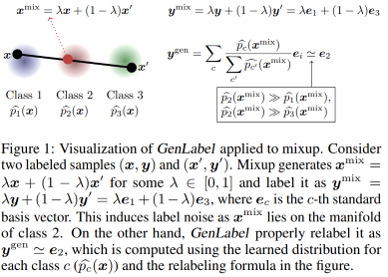

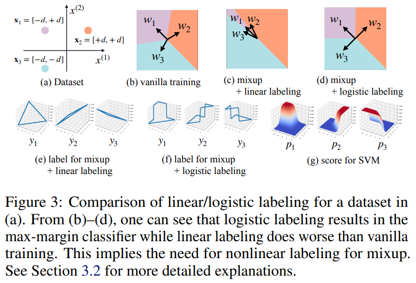

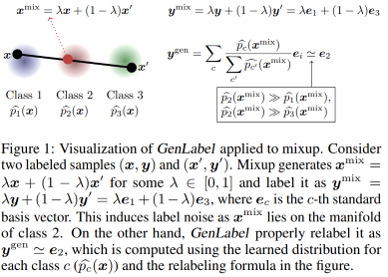

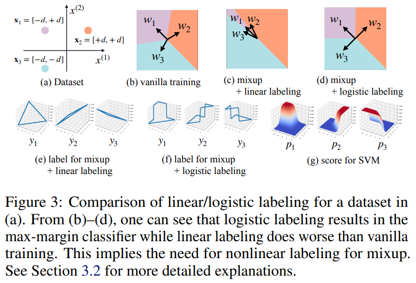

Mixup is a data augmentation method that generates new data points by mixing a pair of input data. While mixup generally improves the prediction performance, it sometimes degrades the performance. In this paper, we first identify the main causes of this phenomenon by theoretically and empirically analyzing the mixup algorithm. To resolve this, we propose GenLabel, a simple yet effective relabeling algorithm designed for mixup. In particular, GenLabel helps the mixup algorithm correctly label mixup samples by learning the class-conditional data distribution using generative models. Via theoretical and empirical analysis, we show that mixup, when used together with GenLabel, can effectively resolve the aforementioned phenomenon, improving the accuracy of mixup-trained models.
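For reference, the standard mixup operation that the abstract starts from can be sketched as below: a new sample is a convex combination of two inputs, and its label is the same combination of the two one-hot labels. This shows only the baseline mixup labeling and does not implement the GenLabel relabeling step. Drawing the mixing weight from a Beta(alpha, alpha) distribution is the usual mixup convention, and the function names are hypothetical.

```python
import random

def sample_lambda(alpha, rng):
    """Draw the mixing weight from Beta(alpha, alpha), as is common for mixup."""
    return rng.betavariate(alpha, alpha)

def mixup(x1, y1, x2, y2, lam):
    """Mix two inputs and their one-hot labels with weight lam in [0, 1]."""
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y

# Usage: mix a class-0 point with a class-1 point.
rng = random.Random(0)
lam = sample_lambda(1.0, rng)
x_mix, y_mix = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], lam)
```

The mixed label here depends only on `lam`, not on where the mixed point falls relative to the class distributions. This is the labeling step that GenLabel is designed to replace.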