Authors: Hyeryung Jang, Hyungseok Song, and Yung Yi

Journal: IEEE/ACM Transactions on Networking, 2022 (Early Access)

Abstract

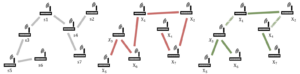

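This work studies efficient communication in systems, such as sensor networks and social networks, in which many nodes hold different information and are spatially separated. In such systems, the nodes are related to one another both through statistical data dependencies and through physical relationships, and efficient communication requires considering both the accuracy of learning from the data and the message-passing cost of communication. To analyze such networked systems, we model the system as a graphical model, a machine learning framework, and show through theoretical analysis and simulation results for several representative message-passing mechanisms that the proposed method is efficient.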

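Figure. Example network graphs in which the physical relationships and the data relationships differ. (Left) Physical relationship graph (Middle) Statistical data relationship graph (Right) Statistical data relationship graph that accounts for the message-passing cost between nodes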

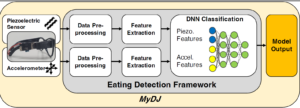

Various automated eating detection wearables have been proposed to monitor food intake. While these systems overcome the forgetfulness of manual user journaling, they typically show low accuracy in outside-the-lab environments or have intrusive form factors (e.g., headgear). Eyeglasses are emerging as a socially acceptable eating detection wearable, but existing approaches require custom-built frames and consume considerable power. We propose MyDJ, an eating detection system that can be attached to any eyeglass frame. MyDJ achieves accurate and energy-efficient eating detection by capturing complementary chewing signals on a piezoelectric sensor and an accelerometer. We evaluated the accuracy and wearability of MyDJ with 30 subjects in uncontrolled environments, where six subjects attached MyDJ to their own eyeglasses for a week. Our study shows that MyDJ achieves a 0.919 F1-score in eating episode coverage, with 4.03× the battery time of state-of-the-art systems. In addition, participants reported that wearing MyDJ was almost as comfortable (94.95%) as wearing regular eyeglasses.

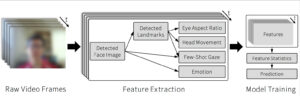

The importance of online education has been brought to the forefront due to COVID. Understanding students’ attentional states is crucial for lecturers, but this can be more difficult in online settings than in physical classrooms. Existing methods that gauge online students’ attention status typically require specialized sensors such as eye-trackers and thus are not easily deployable to every student in real-world settings. To tackle this problem, we utilize facial video from student webcams for attention state prediction in online lectures. We conduct an experiment in the wild with 37 participants, resulting in a dataset consisting of 15 hours of lecture-taking students’ facial recordings with 1,100 corresponding attentional state probings. We present PAFE (Predicting Attention with Facial Expression), a facial-video-based framework for attentional state prediction that focuses on the vision-based representation of traditional physiological mind-wandering features related to partial drowsiness, emotion, and gaze. Our model requires only a single camera and outperforms gaze-only baselines.

Authors:

Woo-Joong Kim, Chan-Hyun Youn (advisor)

Abstract:

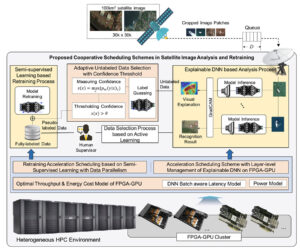

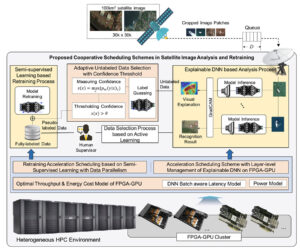

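New techniques for deep learning-based satellite image analysis, and for the training systems that support it, are being developed to improve the fine-grained analysis of ground objects. Building on these techniques, we propose a new acceleration scheduling mechanism for applying explainable DNN models to satellite image analysis and training. In particular, with existing DNN acceleration techniques, the computational complexity of explainable DNN models and the cost of satellite image analysis and retraining degrade performance in terms of both computation and service. To overcome this degradation, this paper proposes cooperative scheduling schemes for explainable DNN acceleration in the satellite image analysis and retraining process. To this end, we define latency and energy cost models for deriving the optimized processing time and cost required for explainable DNN acceleration. On this basis, we propose a scheduling technique based on layer-level management of the explainable DNN on an FPGA-GPU acceleration system, and confirm that it can minimize the computational cost. In addition, to accelerate the retraining process, we propose an adaptive unlabeled data selection technique that applies a confidence threshold and a semi-supervised learning-based data parallelization scheme. Experimental evaluation confirms that the proposed technique reduces the energy cost of conventional DNN acceleration systems by up to 40% while guaranteeing latency constraints.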
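The confidence-threshold idea behind unlabeled data selection can be illustrated with a minimal sketch. This is not the paper's adaptive scheme: the function names, the fixed threshold of 0.9, and the example logits are all assumptions made for illustration. It only shows the generic semi-supervised step of keeping unlabeled samples whose top predicted class probability clears a threshold.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_confident(unlabeled_logits, threshold=0.9):
    """Return (index, pseudo_label, confidence) for samples at or above the threshold.

    The threshold is a hypothetical fixed value; an adaptive scheme would tune it.
    """
    selected = []
    for idx, logits in enumerate(unlabeled_logits):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= threshold:
            selected.append((idx, probs.index(confidence), confidence))
    return selected


# Hypothetical model outputs for three unlabeled image tiles (3 classes each).
logits = [[4.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.0, 0.5, 5.0]]
picked = select_confident(logits, threshold=0.9)
```

In this toy input, the second tile has a uniform prediction and is left out, while the first and third are kept with their pseudo-labels for retraining.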

Paper information:

Kim, Woo-Joong, and Chan-Hyun Youn. “Cooperative Scheduling Schemes for Explainable DNN Acceleration in Satellite Image Analysis and Retraining.” IEEE Transactions on Parallel and Distributed Systems 33.7, 2021, p.1605-1618.

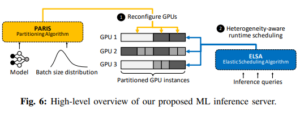

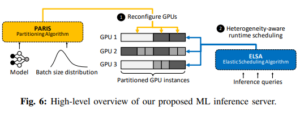

In cloud machine learning (ML) inference systems, providing low latency to end-users is of utmost importance. However, maximizing server utilization and system throughput is also crucial for ML service providers, as it helps lower the total cost of ownership. GPUs have often been criticized for ML inference usage because their massive compute and memory throughput is hard to fully utilize under low-batch inference scenarios. To address this limitation, NVIDIA’s recently announced Ampere GPU architecture provides features to “reconfigure” one large, monolithic GPU into multiple smaller “GPU partitions”. This feature gives cloud ML service providers the ability to use the reconfigurable GPU not only for large-batch training but also for small-batch inference, with the potential to achieve high resource utilization. In this paper, we study this emerging GPU architecture with reconfigurability to develop a high-performance multi-GPU ML inference server. Our first proposition is a sophisticated partitioning algorithm for reconfigurable GPUs that systematically determines a heterogeneous set of multi-granular GPU partitions best suited for the inference server’s deployment. Furthermore, we co-design an elastic scheduling algorithm tailored for our heterogeneously partitioned GPU server, which effectively balances low latency and high GPU utilization.
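The trade-off between latency and utilization on heterogeneous partitions can be sketched with a toy dispatcher. This is not the PARIS or ELSA algorithm from the paper; it is an assumed greedy rule (take the smallest partition expected to finish within the latency target, otherwise the one that finishes earliest), and the partition names, sizes, and the `toy_latency` profile are hypothetical.

```python
def pick_partition(partitions, batch, sla_ms, latency_ms):
    """Pick the smallest partition expected to finish within the latency target.

    partitions: list of dicts with 'size' (GPU slices) and 'busy_ms' (queued work).
    latency_ms: hypothetical profiled function (size, batch) -> execution time in ms.
    Falls back to the partition with the earliest expected finish time.
    """
    def finish(p):
        return p["busy_ms"] + latency_ms(p["size"], batch)

    feasible = [p for p in partitions if finish(p) <= sla_ms]
    if feasible:
        # Prefer small partitions so large ones stay free for large batches.
        return min(feasible, key=lambda p: (p["size"], finish(p)))
    return min(partitions, key=finish)


def toy_latency(size, batch):
    """Made-up latency model: fixed overhead plus work divided across slices."""
    return 2.0 + 8.0 * batch / size


partitions = [
    {"name": "small", "size": 1, "busy_ms": 0.0},
    {"name": "medium", "size": 2, "busy_ms": 5.0},
    {"name": "large", "size": 4, "busy_ms": 0.0},
]
small_job = pick_partition(partitions, batch=1, sla_ms=20.0, latency_ms=toy_latency)
big_job = pick_partition(partitions, batch=8, sla_ms=20.0, latency_ms=toy_latency)
```

Under these made-up numbers, the batch-1 request goes to the small partition even though the large one would be faster, while the batch-8 request only meets the target on the large partition.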

Yunseong Kim, Yujeong Choi, and Minsoo Rhu, “PARIS and ELSA: An Elastic Scheduling Algorithm for Reconfigurable Multi-GPU Inference Servers,” The 59th ACM/ESDA/IEEE Design Automation Conference (DAC), San Francisco, CA, Jul. 2022

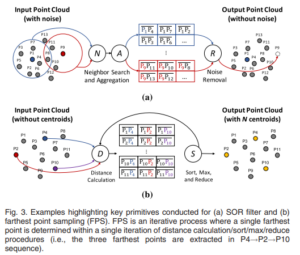

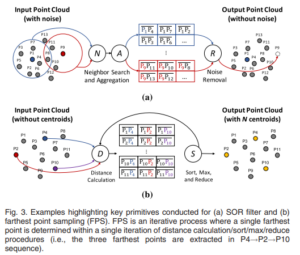

A point cloud is a collection of points, measured using time-of-flight information from LiDAR sensors, that forms a geometrical representation of the surrounding environment. With the algorithmic success of deep learning networks, point clouds are used not only in traditional application domains like localization or HD map construction but also in a variety of avenues including object classification, 3D object detection, and semantic segmentation. While point cloud analytics are gaining significant traction in both academia and industry, the computer architecture community has only recently begun exploring this important problem space. In this paper, we conduct a detailed, end-to-end characterization of deep learning-based point cloud analytics workloads, root-causing the frontend data preparation stage as a significant performance limiter. Through our findings, we discuss possible future directions to motivate continued research in this emerging application domain.

Bongjoon Hyun, Jiwon Lee, and Minsoo Rhu, “Characterization and Analysis of Deep Learning for 3D Point Cloud Analytics”, IEEE Computer Architecture Letters, Jul. 2021

Graph neural networks (GNNs) can extract features by learning both the representation of each object (i.e., graph nodes) and the relationships across different objects (i.e., the edges that connect nodes), achieving state-of-the-art performance on a wide range of graph-based tasks. Despite their strengths, utilizing these algorithms in a production environment faces several key challenges, as the number of graph nodes and edges ranges from several billion to hundreds of billions, requiring substantial storage space for training. Unfortunately, existing ML frameworks based on the in-memory processing model significantly hamper the productivity of algorithm developers, as they require the overall working set to fit within DRAM capacity constraints. In this work, we first study state-of-the-art, large-scale GNN training algorithms. We then conduct a detailed characterization of using capacity-optimized non-volatile memory solutions for storing memory-hungry GNN data, exploring the feasibility of SSDs for large-scale GNN training.

Yunjae Lee, Youngeun Kwon, and Minsoo Rhu, “Understanding the Implication of Non-Volatile Memory for Large-Scale Graph Neural Network Training”, IEEE Computer Architecture Letters, Jul. 2021

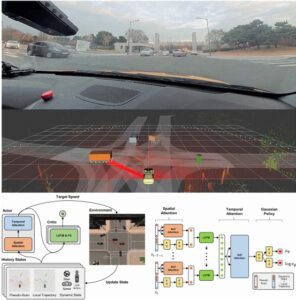

Autonomous driving in an urban environment with surrounding agents remains challenging. One of the key challenges is to accurately predict the traversability map that probabilistically represents future trajectories considering multiple contexts: inertial, environmental, and social. To address this, various approaches have been proposed; however, they mainly focus on considering the individual context. In addition, most studies utilize expensive prior information (such as HD maps) of the driving environment, which is not a scalable approach. In this study, we extend a deep inverse reinforcement learning-based approach that can predict the traversability map while incorporating multiple contexts for autonomous driving in a dynamic environment. Instead of using expensive prior information of the driving scene, we propose a novel deep neural network to extract contextual cues from sensing data and effectively incorporate them in the output, i.e., the reward map. Based on the reward map, our method predicts the ego-centric traversability map that represents the probability distribution of the plausible and socially acceptable future trajectories. The proposed method is qualitatively and quantitatively evaluated in real-world traffic scenarios with various baselines. The experimental results show that our method improves the prediction accuracy compared to other baseline methods and can predict future trajectories similar to those followed by a human driver.

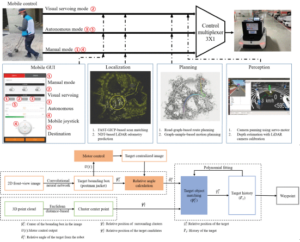

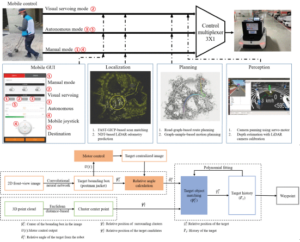

This paper introduces a robot system that is designed to assist postal workers by carrying heavy packages in a complex urban environment such as an apartment complex. Since most such areas do not have access to reliable GPS signal reception, we propose a 3-D point cloud map-based matching localization with robust position estimation, along with a perception-based visual servoing algorithm. The delivery robot is also designed to communicate with the control center so that the operator can monitor the current and past situation using onboard videos, obstacle information, and emergency stop logs. Also, the postal worker can choose between autonomous driving mode and follow-me mode using his/her mobile device. To validate the performance of the proposed robot system, we collaborated with full-time postal workers performing their actual delivery services for more than four weeks to collect field operation data. Using this data, we were able to confirm that the proposed map-matching algorithm performs well in various environments where the robot could navigate with reliable position accuracy and obstacle avoidance capability.

Driving at an unsignalized intersection is a complex traffic scenario that requires both traffic safety and efficiency. At an unsignalized intersection, the driving policy does not simply maintain a safe distance from all vehicles. Instead, it pays more attention to vehicles that potentially have conflicts with the ego vehicle, while guessing the intentions of other vehicles. In this paper, we propose an attention-based driving policy for handling unprotected intersections using deep reinforcement learning. By leveraging attention, our policy learns to focus on more spatially and temporally important features within its egocentric observation. This selective attention enables our policy to make safe and efficient driving decisions in various congested intersection environments. Our experiments show that the proposed policy not only outperforms other baseline policies in various intersection scenarios and traffic density conditions but also has interpretability in its decision process. Furthermore, we verify our policy model’s feasibility in real-world deployment by transferring the trained model to a full-scale vehicle system. Our model successfully handles various intersection scenarios, even with noisy sensory data and delayed responses. Our approach reveals more opportunities for implementing generic and interpretable policy models in real-world autonomous driving.
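How attention can weight some surrounding vehicles more than others is shown below with scaled dot-product attention, a common formulation. The abstract does not specify which attention mechanism the policy uses, so this choice, the function name, and the two-dimensional feature vectors are assumptions for illustration only.

```python
import math


def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k_i / sqrt(d))."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys
    ]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical learned features: one query for the ego vehicle, one key per
# surrounding vehicle. A key aligned with the query receives more weight.
ego_query = [1.0, 0.0]
vehicle_keys = [[2.0, 0.0], [0.0, 2.0], [-1.0, 0.0]]
weights = attention_weights(ego_query, vehicle_keys)
```

The weights sum to one, so they can be read as how much each vehicle contributes to the policy's input, which is also what makes such a model easier to inspect.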