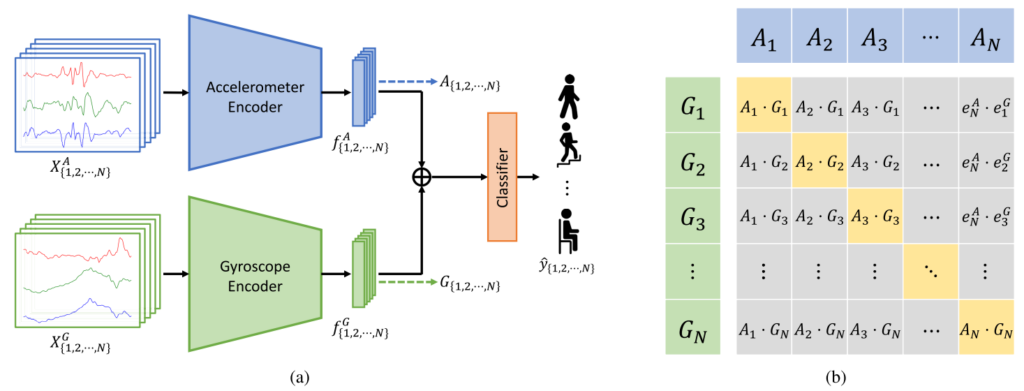

Recently, the widespread use of smart devices has spurred interest in sensor-based applications. Human activity recognition (HAR) with body-worn sensors is a fundamental task that aims to recognize a person’s physical activity from on-body sensor readings. In this article, we address HAR using accelerometers and gyroscopes. Although deep-learning-based feature extraction methods continue to advance, most recent HAR systems with multimodal sensors use data-level fusion, aggregating the different sensor signals into a single multichannel input. However, this approach neglects the fact that different sensors capture different physical properties and produce distinct patterns. We therefore propose a two-stream convolutional neural network (CNN) model that processes the accelerometer and gyroscope signals separately. The modality-specific features are fused at the feature level and jointly used for the recognition task. Furthermore, we introduce a self-supervised learning (SSL) task that pairs the accelerometer and gyroscope embeddings acquired from the same activity instance. This auxiliary objective allows the feature extractors of our model to communicate during training and exploit complementary information, yielding better representations for HAR. We name our end-to-end multimodal HAR system the Contrastive Accelerometer–Gyroscope Embedding (CAGE) model. CAGE outperforms previous HAR models on four publicly available benchmarks. The code is available at github.com/quotation2520/CAGE4HAR.
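
The abstract describes the pipeline only at a high level, so the following is a minimal pure-Python sketch of the idea under stated assumptions, not the authors' implementation. Each stream is reduced to a single hypothetical convolution layer with ReLU and global average pooling, using separate weights per modality. Feature-level fusion is assumed to be concatenation. The pairing objective is written as a generic CLIP-style symmetric InfoNCE loss with an assumed temperature of 0.1, where the i-th accelerometer embedding should match the i-th gyroscope embedding in the batch. The helper names (`conv1d_stream`, `contrastive_loss`) and all sizes and hyperparameters are hypothetical. For the actual CAGE architecture and loss, consult the authors' repository.

```python
import math
import random
from typing import List

Signal = List[List[float]]  # one sample: [channels][time steps]


def conv1d_stream(signal: Signal, kernels: List[List[List[float]]]) -> List[float]:
    """Hypothetical single-layer CNN stream: valid 1-D convolution, ReLU,
    then global average pooling over time. Returns one feature per kernel."""
    length = len(signal[0])
    features = []
    for kernel in kernels:  # kernel shape: [channels][kernel_size]
        k = len(kernel[0])
        activations = []
        for t in range(length - k + 1):
            s = sum(kernel[c][j] * signal[c][t + j]
                    for c in range(len(signal)) for j in range(k))
            activations.append(max(0.0, s))  # ReLU
        features.append(sum(activations) / len(activations))  # global average pooling
    return features


def l2_normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # avoid division by zero
    return [x / norm for x in v]


def contrastive_loss(acc_emb, gyro_emb, temperature: float = 0.1) -> float:
    """Symmetric InfoNCE (CLIP-style, assumed): row i of acc_emb and row i of
    gyro_emb come from the same activity instance and form the positive pair."""
    a = [l2_normalize(v) for v in acc_emb]
    g = [l2_normalize(v) for v in gyro_emb]
    n = len(a)
    # Cosine similarity matrix scaled by the temperature.
    logits = [[sum(x * y for x, y in zip(a[i], g[j])) / temperature for j in range(n)]
              for i in range(n)]

    def cross_entropy(rows: List[List[float]]) -> float:
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)
            log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_sum_exp - row[i]  # the diagonal entry is the positive
        return total / len(rows)

    columns = [list(col) for col in zip(*logits)]  # gyroscope-to-accelerometer direction
    return 0.5 * (cross_entropy(logits) + cross_entropy(columns))


# Toy batch with random data (all sizes are hypothetical).
random.seed(0)
CHANNELS, LENGTH, KERNEL, N_FILTERS, BATCH = 3, 32, 5, 8, 4


def random_kernels():
    return [[[random.gauss(0, 0.3) for _ in range(KERNEL)] for _ in range(CHANNELS)]
            for _ in range(N_FILTERS)]


def random_batch():
    return [[[random.gauss(0, 1) for _ in range(LENGTH)] for _ in range(CHANNELS)]
            for _ in range(BATCH)]


acc_kernels, gyro_kernels = random_kernels(), random_kernels()  # separate stream weights
acc_emb = [conv1d_stream(x, acc_kernels) for x in random_batch()]
gyro_emb = [conv1d_stream(x, gyro_kernels) for x in random_batch()]
fused = [a + g for a, g in zip(acc_emb, gyro_emb)]  # feature-level fusion (concatenation)
loss = contrastive_loss(acc_emb, gyro_emb)
```

In a full model, `fused` would feed a classifier trained with the recognition loss, and `loss` would be added as the auxiliary SSL term so that gradients from the pairing task reach both encoders.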
