Title:

SooJean Han, Soon-Jo Chung, Johanna Gustafson, “Congestion Control of Vehicle Traffic Networks by Learning Structural and Temporal Patterns.” Learning for Dynamics and Control Conference (L4DC), Jun 2023.

Abstract:

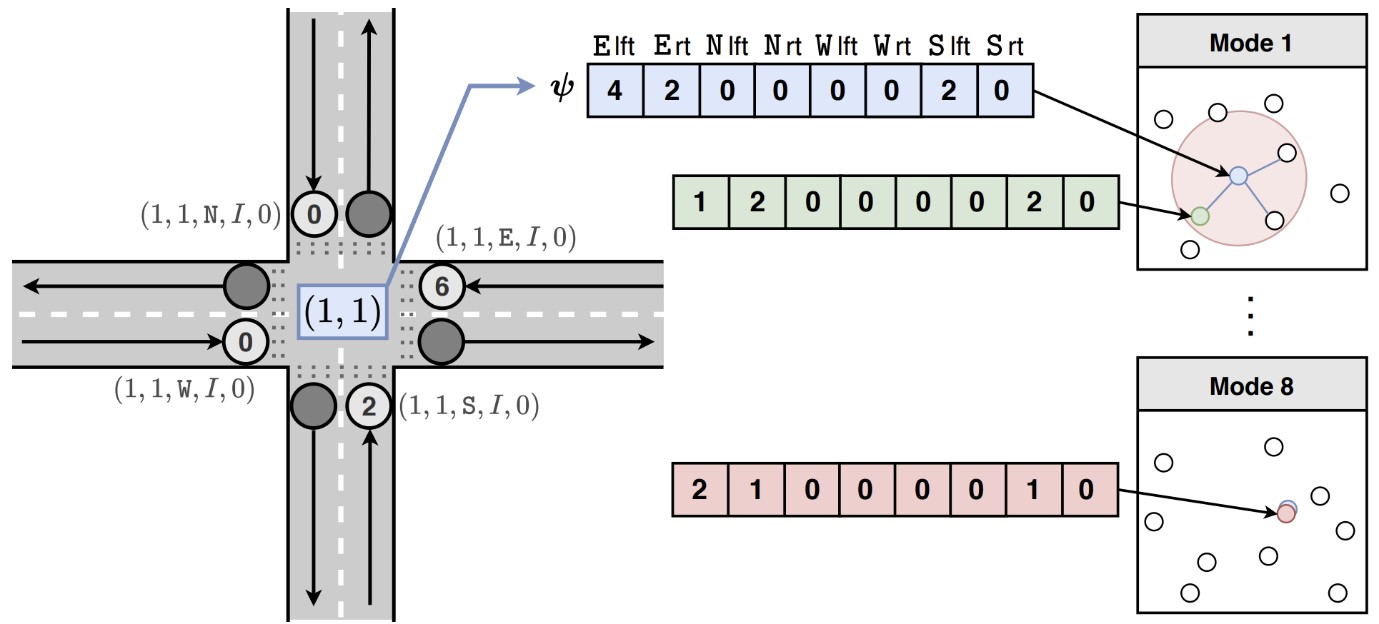

For many network control problems, there exist natural spatial structures and temporal repetition in the network that can be exploited so that controller synthesis does not waste time and energy recomputing control laws. One notable example of this is vehicle traffic flow over metropolitan intersection networks: spatial symmetries of the network arise from the grid-like structure, while temporal symmetries arise from both the structure and human routine. In this paper, we propose a controller architecture based on pattern-learning with memory and prediction (PLMP), which exploits these natural symmetries to perform congestion control without redundant computation of light signal sequences. Memory is implemented to store any patterns (intersection snapshots) that have occurred in the past “frequently enough”, and redundancy is reduced with an extension of the state-of-the-art episodic control method which builds equivalence classes to group together patterns that can be controlled using the same traffic light. Prediction is implemented to estimate future occurrence times of patterns by predicting vehicle arrivals at subsequent intersections; that way, we schedule light signal sequences in advance. We compare periodic baselines to various implementations of our controller model, including a version of PLMP with prediction excluded called pattern-learning with memory (PLM), by evaluating their performance according to three congestion metrics on two traffic datasets with varying arrival characteristics.
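
The memory component described in the abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation: the names `PatternMemory`, `class_key`, `min_count`, and `solve` are hypothetical, an intersection snapshot is assumed to be a tuple of queue lengths per approach, and the equivalence relation used here (snapshots that share the same busiest approach) is only a stand-in for whatever relation the paper actually defines.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, List, Tuple

# Assumption: a pattern is a snapshot of queue lengths, one entry per approach.
Pattern = Tuple[int, ...]
LightSequence = List[str]


class PatternMemory:
    """Caches one light sequence per equivalence class of patterns.

    A class is only stored once it has been observed at least
    `min_count` times ("frequently enough").
    """

    def __init__(self, min_count: int,
                 class_key: Callable[[Pattern], Hashable]) -> None:
        self.min_count = min_count
        self.class_key = class_key          # maps a pattern to its class
        self.counts: Counter = Counter()    # observations per class
        self.table: Dict[Hashable, LightSequence] = {}

    def lookup_or_compute(
        self, pattern: Pattern,
        solve: Callable[[Pattern], LightSequence],
    ) -> Tuple[LightSequence, bool]:
        """Return (light sequence, True if it came from memory)."""
        key = self.class_key(pattern)
        self.counts[key] += 1
        if key in self.table:
            return self.table[key], True
        sequence = solve(pattern)           # the expensive synthesis step
        if self.counts[key] >= self.min_count:
            self.table[key] = sequence
        return sequence, False


def busiest_approach(pattern: Pattern) -> int:
    """Hypothetical class key: index of the longest queue."""
    return max(range(len(pattern)), key=lambda i: pattern[i])


def toy_solver(pattern: Pattern) -> LightSequence:
    """Hypothetical controller: give green to the busiest approach."""
    return [f"green:{busiest_approach(pattern)}"]


if __name__ == "__main__":
    memory = PatternMemory(min_count=2, class_key=busiest_approach)
    for snapshot in [(5, 1, 0, 2), (7, 2, 1, 0), (9, 0, 0, 3)]:
        print(snapshot, memory.lookup_or_compute(snapshot, toy_solver))
```

In this sketch the third snapshot is served from memory because its class has already been seen twice, so the solver is not called again. The prediction component of PLMP, which schedules sequences ahead of time from estimated arrival times, is not covered here.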