-Main video: https://youtu.be/JC1_bnTxPiQ

-Bonus video: https://youtu.be/mhUUZVbeDA0

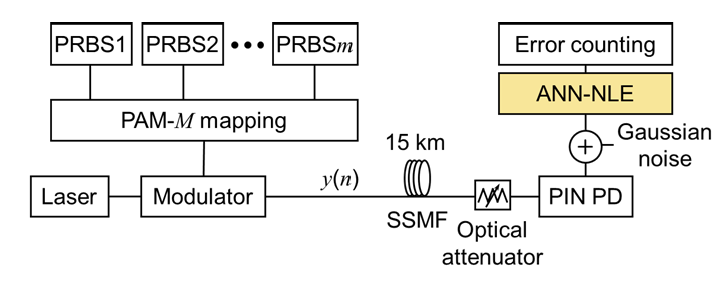

Abstract: Artificial neural network (ANN)-based nonlinear equalizers (NLEs) have been gaining popularity as powerful waveform equalizers for intensity-modulation (IM)/direct-detection (DD) systems. On the other hand, the M-ary pulse amplitude modulation (PAM-M) format is now widely used for high-speed IM/DD systems. For the training of ANN-NLE in PAM-M IM/DD systems, it is common to employ pseudorandom binary sequences (PRBSs) for the generation of PAM-M training sequences. However, when the PRBS orders used for training are not sufficiently high, the ANN-NLE might suffer from the overfitting problem, where the equalizer can estimate one or more of the constituent PRBSs from a part of PAM-M training sequence, and as a result, the trained ANN-NLE shows poor performance for new input sequences. In this paper, we provide a selection guideline of the PRBSs to train the ANN-NLE for PAM-M signals without experiencing the overfitting. For this purpose, we determine the minimum PRBS orders required to train the ANN-NLE for a given input size of the equalizer. Our theoretical analysis is confirmed through computer simulation. The selection guideline is applicable to training the ANN-NLE for the PAM-M signals, regardless of symbol coding.
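As a rough illustration of why low-order PRBSs are risky for training, the sketch below generates PRBS7 bits with a linear-feedback shift register, maps two bit streams to PAM-4 levels, and checks that every bit is the XOR of two bits at fixed earlier positions. The polynomial x^7 + x^6 + 1, the seeds, the natural (non-Gray) level mapping, and all function names are assumptions chosen for the example. They are not taken from the paper, and the paper's minimum-order guideline is not reproduced here.

```python
def prbs_bits(order, taps, length, seed=1):
    """Generate `length` bits from a Fibonacci LFSR (taps are 1-indexed)."""
    mask = (1 << order) - 1
    state = seed & mask
    if state == 0:
        raise ValueError("seed must be non-zero")
    out = []
    for _ in range(length):
        # Feedback bit is the XOR of the tapped register positions.
        fb = 0
        for t in taps:
            fb ^= (state >> (t - 1)) & 1
        state = ((state << 1) | fb) & mask
        out.append(fb)
    return out


def pam4_symbols(msb_bits, lsb_bits):
    """Map bit pairs to PAM-4 levels with natural coding (an assumption)."""
    levels = (-3, -1, 1, 3)
    return [levels[2 * m + l] for m, l in zip(msb_bits, lsb_bits)]


ORDER, TAPS = 7, (7, 6)  # PRBS7, x^7 + x^6 + 1 (assumed for illustration)
msb = prbs_bits(ORDER, TAPS, 300, seed=0x5A)
lsb = prbs_bits(ORDER, TAPS, 300, seed=0x13)
symbols = pam4_symbols(msb, lsb)

# Each bit is fully determined by the bits 7 and 6 positions earlier.
predicted = [msb[k - 7] ^ msb[k - 6] for k in range(7, len(msb))]
print(predicted == msb[7:])
```

Because each bit is fixed by the previous seven, an equalizer whose input window spans enough symbols could in principle learn this rule instead of the channel response, which is the overfitting mechanism the abstract describes.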

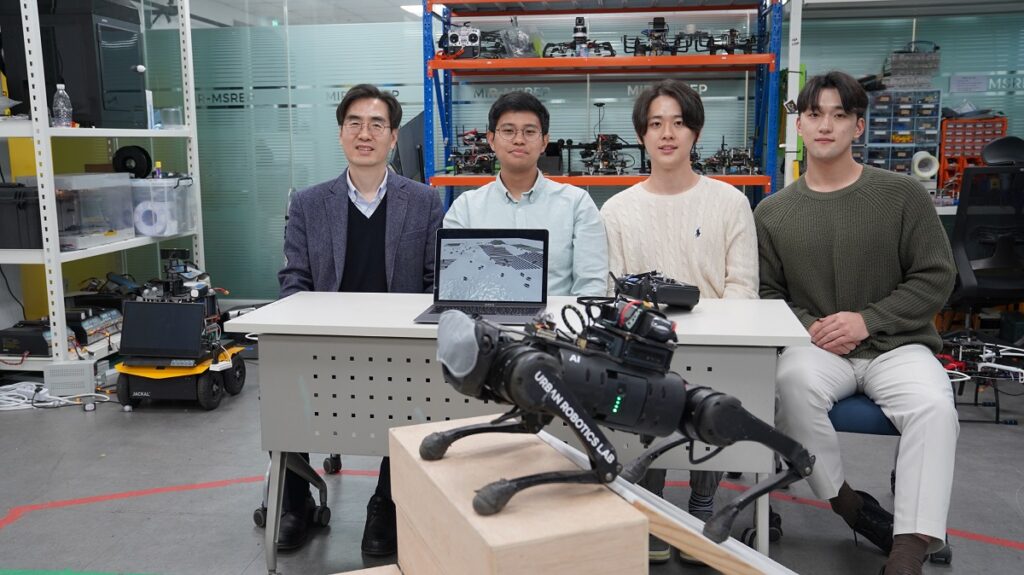

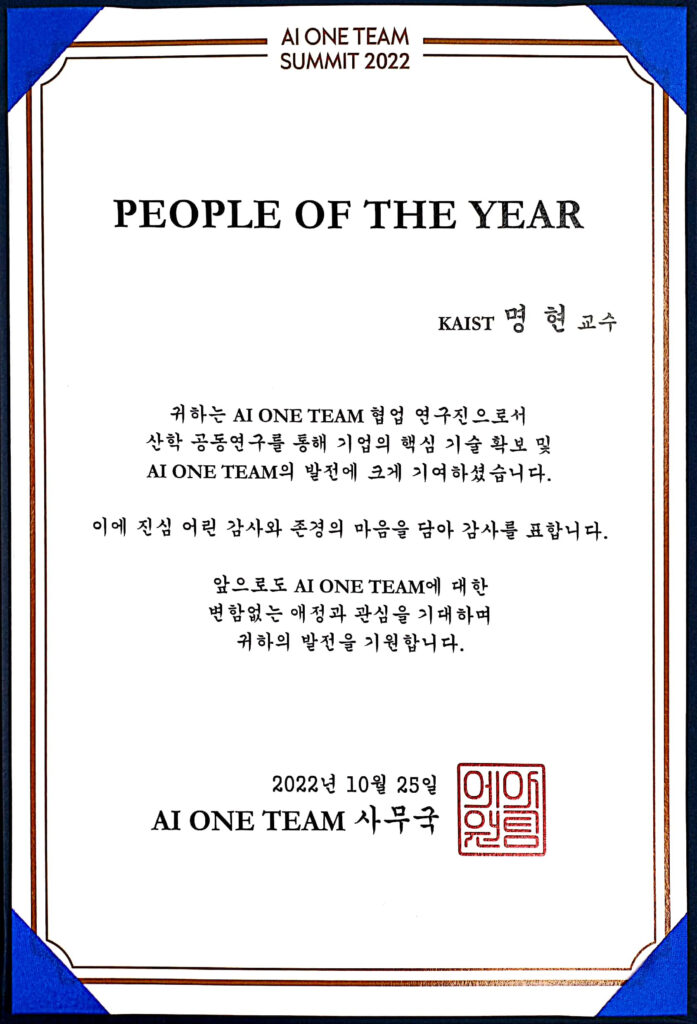

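Professor Hyun Myung of our school was selected as one of the “People of the Year 2022” and received the award at the AI One Team Summit 2022, held on October 25 at the Sofitel Ambassador Hotel in Seoul.

AI One Team is a consortium launched in February 2020 by leading Korean companies, universities, and research institutes to strengthen Korea's AI capabilities and raise the AI competitiveness of companies and industries. Its members, KT, Hyundai Heavy Industries Group, LG Electronics, Korea Investment & Securities, Dongwon Group, Woori Bank, Hanjin, Green Cross Holdings, KAIST, Hanyang University, Sungkyunkwan University, and the Electronics and Telecommunications Research Institute (ETRI), cooperate in ▲joint AI research ▲building an AI ecosystem ▲training AI talent.

At this year's event, attended by the CEOs and chief officers of the member organizations and the presidents of the member universities, research teams with outstanding industry-academia joint research results were selected for the “People of the Year” award. Four people in total, including Professor Myung, received it.

Professor Myung's team carried out the “Robot Indoor Spatial Intelligence Technology Development” project with KT for two years. The team received the award for its outstanding results, including three SCI-indexed papers, two of them in IEEE RA-L (Robotics and Automation Letters), and second place in the HILTI SLAM Challenge at 2022 IEEE ICRA (Int’l Conf. Robotics and Automation).

Source: AI Times (http://www.aitimes.com/news/articleView.html?idxno=147536)

Economist (https://economist.co.kr/2022/10/26/it/general/20221026100437726.html)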

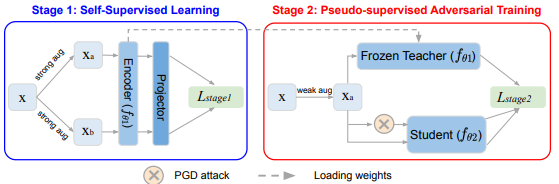

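KAIST research teams of Professors Chang D. Yoo and In So Kweon present a self-supervised learning method based on adversarial contrastive learning as an Oral Presentation at ECCV 2022

The research team of Professor Chang D. Yoo of the KAIST School of Electrical Engineering, in joint research with Professor In So Kweon's team, has proposed a self-supervised learning method that gives AI models markedly higher robustness and improves performance with little labeled data.

[From left: KAIST Professor Chang D. Yoo, Professor In So Kweon, researcher Chaoning Zhang, researcher Kang Zhang]

ECCV, which began in 1990, focuses on presenting the latest research in artificial intelligence and machine learning for image and signal processing, and has long been regarded as one of the top conferences in computer vision and deep learning. At ECCV 2022, 1,650 of the 5,803 submitted papers (28%) were accepted, and 158 papers (2.7% of submissions) were selected for oral presentation as outstanding work.

This work, titled ‘Decoupled Adversarial Contrastive Learning for Self-supervised Adversarial Robustness’, is scheduled to be presented as an oral presentation on October 23, 2022 in Tel Aviv, Israel.

AI has advanced considerably and delivers strong results in many areas, yet it still does not have people's full trust. Earning that trust requires models that can be trained with little data and that are more robust. To achieve both, there have been attempts to combine self-supervised learning with adversarial learning.

This paper combines the two efficiently using a distillation technique and proposes an adversarial learning framework that can learn by itself without labels.

The paper was accepted as an ECCV Oral Presentation (acceptance rate 2.7%).

This research is the result of a collaboration between the laboratories of Professor Yoo and Professor Kweon. We expect it to be widely used to provide high-performing services with little labeled data while giving AI markedly higher robustness.

[Research figure: schematic of Self Supervised Learning based on Adversarial Learning]

This research was supported by the Institute for Information & Communications Technology Promotion (IITP), funded by the Ministry of Science and ICT.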

Abstract

Magnetization transfer contrast MR fingerprinting (MTC-MRF) is a novel quantitative imaging method that simultaneously quantifies free bulk water and semisolid macromolecule parameters using pseudo-randomized scan parameters. To improve acquisition efficiency and reconstruction accuracy, the optimization of MRF sequence design has been of recent interest in the MRF field, but has been challenging due to the large number of degrees of freedom to be optimized in the sequence. Herein, we propose a framework for learning-based optimization of the acquisition schedule (LOAS), which optimizes RF saturation-encoded MRF acquisitions with a minimal number of scan parameters for tissue parameter determination. In a supervised learning framework, scan parameters were subsequently updated to minimize a predefined loss function that can directly represent tissue quantification errors. We evaluated the performance of the proposed approach with a numerical phantom and in in vivo experiments. For validation, MRF images were synthesized using the tissue parameters estimated from a fully connected neural network framework and compared with references. Our results showed that LOAS outperformed existing indirect optimization methods with regard to quantification accuracy and acquisition efficiency. The proposed LOAS method could be a powerful optimization tool in the design of MRF pulse sequences.

Abstract

Anomaly detection in magnetic resonance imaging (MRI) aims to distinguish the relevant biomarkers of diseases from those of normal tissues. In this paper, an unsupervised algorithm is proposed for pixel-level anomaly detection in multicontrast MRI. A deep neural network is developed, which uses only normal MR images as training data. The network has the two stages of feature generation and density estimation. For feature generation, relevant features are extracted from multicontrast MR images by performing contrast translation and dimension reduction. For density estimation, the distributions of the extracted features are estimated by using a Gaussian mixture model (GMM). The two processes are trained to estimate normative distributions that represent large normal datasets well. In the test phase, the proposed method detects anomalies by measuring the log-likelihood that a test sample belongs to the estimated normative distributions. The proposed method and its variants were applied to detect glioblastoma and ischemic stroke lesions. Comparison studies with six previous anomaly detection algorithms demonstrated that the proposed method achieved relevant improvements in quantitative and qualitative evaluations. Ablation studies by removing each module from the proposed framework validated the effectiveness of each proposed module. The proposed deep learning framework is an effective tool to detect anomalies in multicontrast MRI. The unsupervised approach would have great potential in detecting various lesions where annotated lesion data collection is limited.
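The density-estimation step can be pictured with a small sketch: score a feature vector by its log-likelihood under a GMM and flag it when the score falls below a threshold. The diagonal covariances, the mixture parameters, the threshold, and the function names below are hypothetical values chosen for illustration. In the paper the GMM is fitted to learned features inside the network, which this sketch does not attempt.

```python
import math


def gmm_log_likelihood(x, weights, means, variances):
    """Log-likelihood of feature vector x under a diagonal-covariance GMM."""
    log_terms = []
    for w, mu, var in zip(weights, means, variances):
        log_pdf = 0.0
        for xi, mi, vi in zip(x, mu, var):
            log_pdf += -0.5 * (math.log(2 * math.pi * vi) + (xi - mi) ** 2 / vi)
        log_terms.append(math.log(w) + log_pdf)
    # Log-sum-exp over components for numerical stability.
    top = max(log_terms)
    return top + math.log(sum(math.exp(t - top) for t in log_terms))


def is_anomalous(x, gmm, threshold):
    """Flag x when its log-likelihood falls below the threshold."""
    return gmm_log_likelihood(x, *gmm) < threshold


# Hypothetical "normative" model: two components in a 2-D feature space.
gmm = ([0.6, 0.4], [[0.0, 0.0], [3.0, 3.0]], [[1.0, 1.0], [0.5, 0.5]])
THRESHOLD = -10.0  # assumed; in practice tuned on held-out normal data

print(is_anomalous([0.1, -0.2], gmm, THRESHOLD))   # near a component mean
print(is_anomalous([10.0, -8.0], gmm, THRESHOLD))  # far from both components
```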

Abstract

Deep neural networks (DNNs) are vulnerable to adversarial examples generated by adding malicious noise imperceptible to a human. The adversarial examples successfully fool the models under the white-box setting, but the performance of attacks under the black-box setting degrades significantly, which is known as the low transferability problem. Various methods have been proposed to improve transferability, yet they are not effective against adversarial training and defense models. In this paper, we introduce two new methods termed Lookahead Iterative Fast Gradient Sign Method (LI-FGSM) and Self-CutMix (SCM) to address the above issues. LI-FGSM updates adversarial perturbations with the accumulated gradient obtained by looking ahead. A previous gradient-based attack is used for looking ahead during N steps to explore the optimal direction at each iteration. It allows the optimization process to escape the sub-optimal region and stabilize the update directions. SCM leverages the modified CutMix, which copies a patch from the original image and pastes it back at random positions of the same image, to preserve the internal information. SCM makes it possible to generate more transferable adversarial examples while alleviating the overfitting to the surrogate model employed. Our two methods are easily incorporated with the previous iterative gradient-based attacks. Extensive experiments on ImageNet show that our approach acquires state-of-the-art attack success rates not only against normally trained models but also against adversarial training and defense models.
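The patch operation described for SCM (copy a patch from an image and paste it back at a random position in the same image) can be sketched as follows. This is only a minimal reading of that one sentence: the fixed patch size, the uniform sampling of source and destination positions, and the name `self_cutmix` are assumptions, and the paper's modified CutMix may differ in these details.

```python
import random


def self_cutmix(image, patch_h, patch_w, rng):
    """Copy a random patch of `image` and paste it elsewhere in a copy of it.

    `image` is a list of rows (lists of pixel values); the input is not modified.
    """
    h, w = len(image), len(image[0])
    if not (0 < patch_h <= h and 0 < patch_w <= w):
        raise ValueError("patch must fit inside the image")
    # Source and destination corners are sampled independently (assumption).
    sy, sx = rng.randrange(h - patch_h + 1), rng.randrange(w - patch_w + 1)
    dy, dx = rng.randrange(h - patch_h + 1), rng.randrange(w - patch_w + 1)
    patch = [row[sx:sx + patch_w] for row in image[sy:sy + patch_h]]
    out = [list(row) for row in image]
    for i, patch_row in enumerate(patch):
        out[dy + i][dx:dx + patch_w] = patch_row
    return out


# Toy 4x4 "image" whose pixel values encode their own position.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
mixed = self_cutmix(image, 2, 2, random.Random(0))
for row in mixed:
    print(row)
```

Since every pasted pixel comes from the same image, the result contains no content from other images, which matches the abstract's stated aim of preserving internal information.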

Abstract

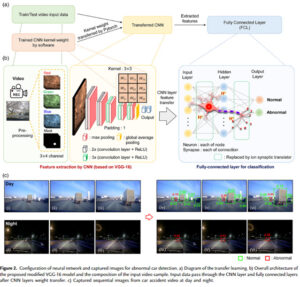

An artificial synapse is an essential element to construct a hardware-based artificial neural network (ANN). While various synaptic devices have been proposed along with studies on electrical characteristics and proper applications, a small number of conductance states with nonlinear and asymmetric conductance changes have been problematic and imposed limits on computational performance. Their applications are thus still limited to the classification of simple images or acoustic datasets. Herein, a polymer electrolyte-gated synaptic transistor (pEGST) is demonstrated for video-based learning and inference using transfer learning. In particular, abnormal car detection (ACD) is attempted with video-based learning and inference to avoid traffic accidents. The pEGST showed 8,192 states (= 13 bits) for weight modulation with linear and symmetric conductance changes and helped reduce the error rate to 3% in judging whether a car in a video is abnormal.

Abstract

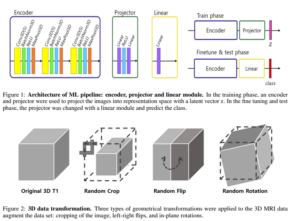

Introduction/purpose: Systemic lupus erythematosus (SLE) is a chronic auto-immune disease with a broad spectrum of clinical presentations, including heterogeneous neuropsychiatric (NP) syndromes. Structural brain abnormalities are commonly found in SLE and NPSLE, but their role in diagnosis is limited, and their usefulness in distinguishing between NPSLE patients and patients in which the NP symptoms are not primarily attributed to SLE (non-NPSLE) is non-existent. Self-supervised contrastive learning algorithms have proved to be useful in classification tasks in rare diseases with a limited number of datasets. Our aim was to apply self-supervised contrastive learning on T1-weighted images acquired from a well-defined cohort of SLE patients, aiming to distinguish between NPSLE and non-NPSLE patients.

Subjects and methods: We used 3T MRI T1-weighted images of 163 patients. The training set comprised 68 non-NPSLE and 34 NPSLE patients. We applied random geometric transformations between iterations to augment our data sets. The ML pipeline consisted of a convolutional base encoder and a linear projector. To test the classification task, the projector was removed and one linear layer was measured. Validation of the method consisted of 6 repeated random sub-samplings, each using a random selection of a small group of patients of both subtypes.

Results: In the 6 trials, between 79% and 83% of the patients were correctly classified as NPSLE or non-NPSLE. For a qualitative evaluation of the spatial distribution of the common features found in both groups, Gradient-weighted Class Activation Maps (Grad-CAM) were examined. Thresholded Grad-CAM maps show areas of common features identified for the NPSLE cohort, while no such commonality was found for the non-NPSLE group.

Discussion/conclusion: The self-supervised contrastive learning model was effective in capturing common brain MRI features from a limited but well-defined cohort of SLE patients with NP symptoms. The interpretation of the Grad-CAM results is not straightforward, but indicates involvement of the lateral and third ventricles, periventricular white matter and basal cisterns. We believe that the common features found in the NPSLE population in this study indicate a combination of tissue loss, local atrophy and to some extent that of periventricular white matter lesions, which are commonly found in NPSLE patients and appear hypointense on T1-weighted images.

Keywords: Systemic Lupus Erythematosus; cohort studies; magnetic resonance imaging; neuroimaging; unsupervised machine learning.

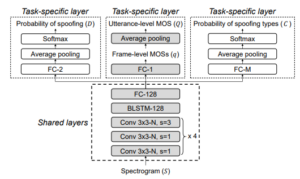

Abstract: Several studies have proposed deep-learning-based models to predict the mean opinion score (MOS) of synthesized speech, showing the possibility of replacing human raters. However, inter- and intra-rater variability in MOSs makes it hard to ensure the high performance of the models. In this paper, we propose a multi-task learning (MTL) method to improve the performance of a MOS prediction model using the following two auxiliary tasks: spoofing detection (SD) and spoofing type classification (STC). In addition, we use the focal loss to maximize the synergy between SD and STC for MOS prediction. Experiments using the MOS evaluation results of the Voice Conversion Challenge 2018 show that the proposed MTL with two auxiliary tasks improves MOS prediction. Our proposed model achieves up to 11.6% relative improvement in performance over the baseline model.
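For readers unfamiliar with the focal loss mentioned above, here is a minimal sketch of its standard per-sample form, FL(p_t) = -(1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class. The value γ = 2 and the function name are assumptions for illustration. The abstract does not state the hyperparameters or how the loss is weighted against the MOS prediction objective.

```python
import math


def focal_loss(p_true_class, gamma=2.0):
    """Per-sample focal loss for the predicted probability of the true class."""
    if not 0.0 < p_true_class <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    # The (1 - p)^gamma factor down-weights samples that are already easy.
    return -((1.0 - p_true_class) ** gamma) * math.log(p_true_class)


easy = focal_loss(0.9)  # confidently correct sample
hard = focal_loss(0.3)  # poorly classified sample
print(easy, hard)
```

With `gamma=0.0` the expression reduces to ordinary cross-entropy, so γ controls how strongly training concentrates on hard examples.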