Signal Integrity/Power Integrity Design for AI Computing Hardware

1. Signal Integrity/Power Integrity Design of Energy-efficient Processing-in-memory in High Bandwidth Memory (PIM-HBM) Architecture to Accelerate AI Applications

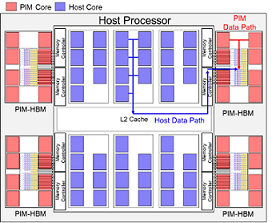

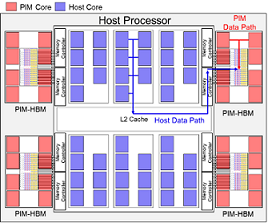

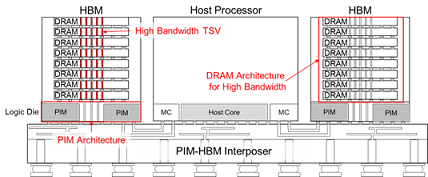

Fig. 1. Conceptual view of heterogeneous PIM-HBM architecture

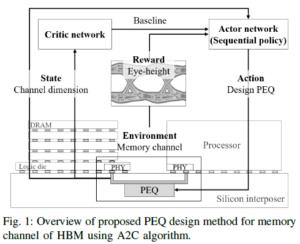

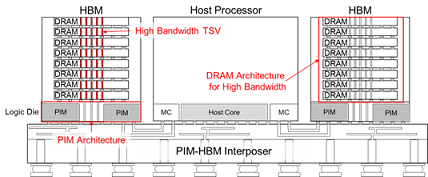

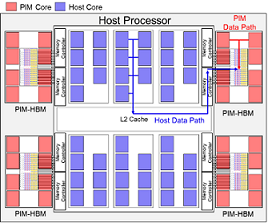

As demand for high computing performance in artificial intelligence grows, parallel processing accelerators have become the key determinant of system performance. A defining feature of these accelerators is the high DRAM bandwidth they require, which means that DRAM accesses occur more frequently. In addition, a DRAM access consumes about 200 times the energy of one 32-bit floating-point operation, and this gap widens with transistor scaling. On the accelerator side, the number of cores keeps increasing, which requires more off-chip memory bandwidth and area. As a result, the energy consumed by interconnection increases and system performance becomes limited by insufficient off-chip memory bandwidth. To overcome this limitation, the Processing-In-Memory (PIM) architecture has re-emerged. A PIM architecture integrates processing units with memory, and it can be implemented with 3D-stacked high bandwidth memory (HBM).
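To make the energy argument concrete, the following minimal sketch estimates what share of total energy goes to DRAM accesses for a given number of floating-point operations per access. Only the 200x ratio comes from the text above. The function name, the normalization to one operation's energy, and the sample operation counts are illustrative assumptions.

```python
# Energy of one DRAM access relative to one 32-bit floating-point operation.
# The factor of about 200 is the figure quoted in the text.
DRAM_TO_FLOP_ENERGY_RATIO = 200.0


def dram_energy_fraction(flops_per_dram_access):
    """Share of total energy spent on DRAM access (hypothetical helper).

    Energies are normalized so that one floating-point operation costs 1 unit.
    """
    if flops_per_dram_access < 0:
        raise ValueError("flops_per_dram_access must be non-negative")
    compute_energy = float(flops_per_dram_access)
    memory_energy = DRAM_TO_FLOP_ENERGY_RATIO
    return memory_energy / (memory_energy + compute_energy)


# Illustrative operation counts per DRAM access (assumed values).
for flops in (1, 20, 200, 2000):
    share = dram_energy_fraction(flops)
    print(f"{flops:5d} FLOPs per access -> DRAM share of energy: {share:.3f}")
```

Under this simple model, compute and memory energy only balance when roughly 200 operations reuse each fetched word, which is why memory-bound workloads are dominated by data-movement energy.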

Our lab’s AI hardware group focuses on the optimized design of the PIM-HBM architecture and its interconnection, considering signal integrity (SI) and power integrity (PI). To provide high memory bandwidth to the PIM cores through through-silicon vias (TSVs), either the TSV area or the TSV data rate must be increased. However, TSVs already occupy more than 30% of the DRAM area, and the TSV data rate is limited by SI. Optimal TSV design is therefore essential for achieving both small area and high bandwidth. Also, when the number of PIM cores increases for higher performance, more logic die area is required. This lengthens the interposer channel, which reduces the memory bandwidth available to the host processor. Consequently, the PIM-HBM logic die and interposer channel must be designed to optimize system performance without degrading interposer bandwidth. Through this system-level optimization, our PIM-HBM architecture can achieve high energy efficiency by drastically reducing interconnection lengths, and it can improve system performance in memory-limited applications.
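The area versus data-rate trade-off for TSVs can be sketched with a first-order model in which aggregate bandwidth is the number of data TSVs multiplied by the per-TSV data rate. This is a simplification for illustration, not the group's design method. The function names and all numeric values below are assumptions.

```python
import math


def tsv_bandwidth_gbytes(num_data_tsvs, data_rate_gbps):
    """Aggregate bandwidth in GB/s for a first-order TSV channel model."""
    return num_data_tsvs * data_rate_gbps / 8.0


def tsvs_needed(target_gbytes, data_rate_gbps):
    """Number of data TSVs needed to reach a target bandwidth in GB/s."""
    return math.ceil(target_gbytes * 8.0 / data_rate_gbps)


# Assumed example: 1024 data TSVs at 2 Gb/s each.
baseline = tsv_bandwidth_gbytes(1024, 2.0)
print(f"baseline bandwidth: {baseline:.0f} GB/s")

# Doubling the target bandwidth at a fixed data rate doubles the TSV count
# (more area), while doubling the data rate keeps the count unchanged.
print("TSVs for 2x bandwidth at 2 Gb/s:", tsvs_needed(2 * baseline, 2.0))
print("TSVs for 2x bandwidth at 4 Gb/s:", tsvs_needed(2 * baseline, 4.0))
```

Because the TSV count is constrained by DRAM area and the data rate is constrained by SI, neither factor can be scaled freely, which is what motivates the co-optimization described above.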

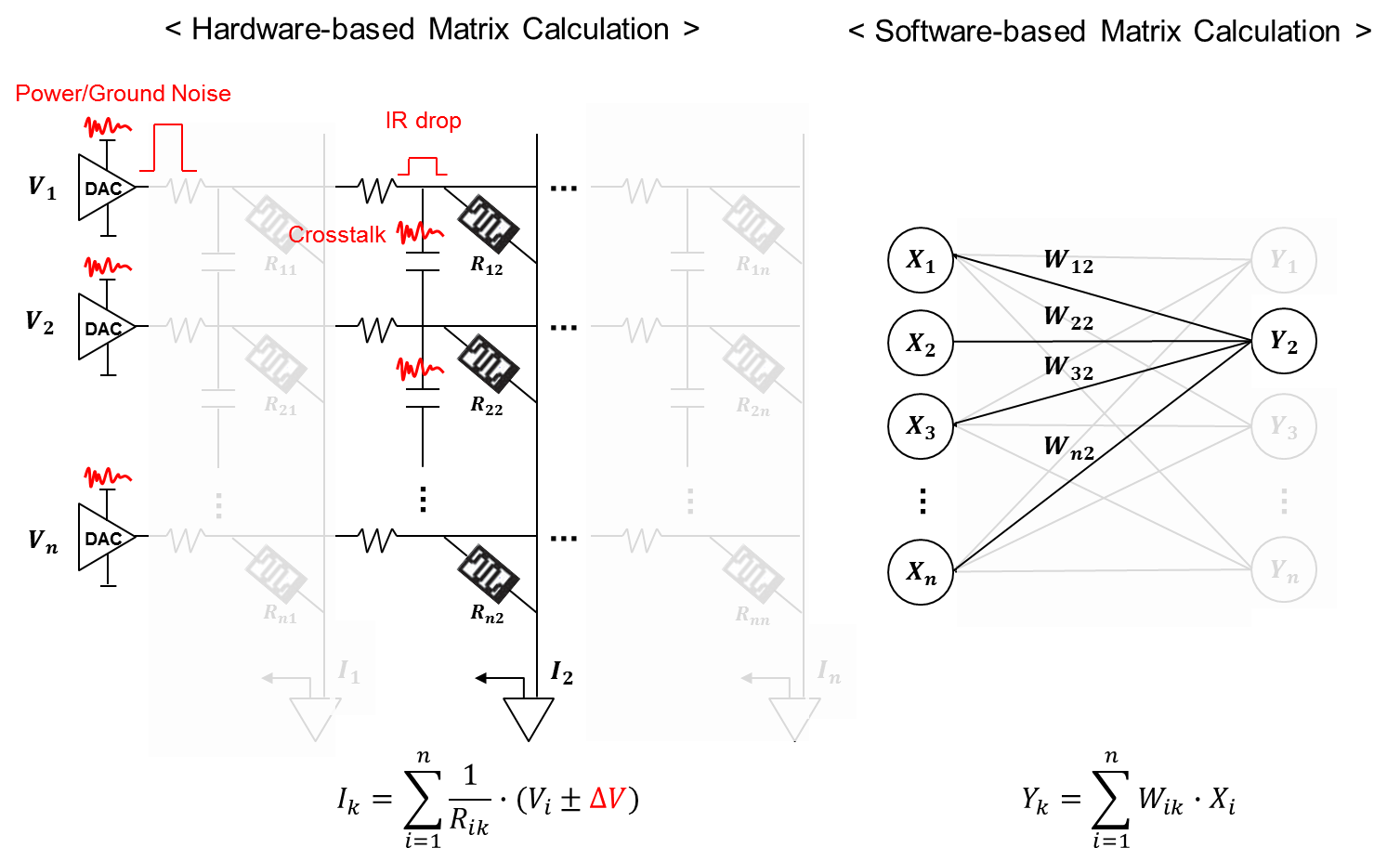

2. Signal Integrity/Power Integrity in a Memristor Crossbar Array for Neural Network Accelerator and Neuromorphic Chip

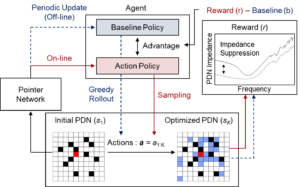

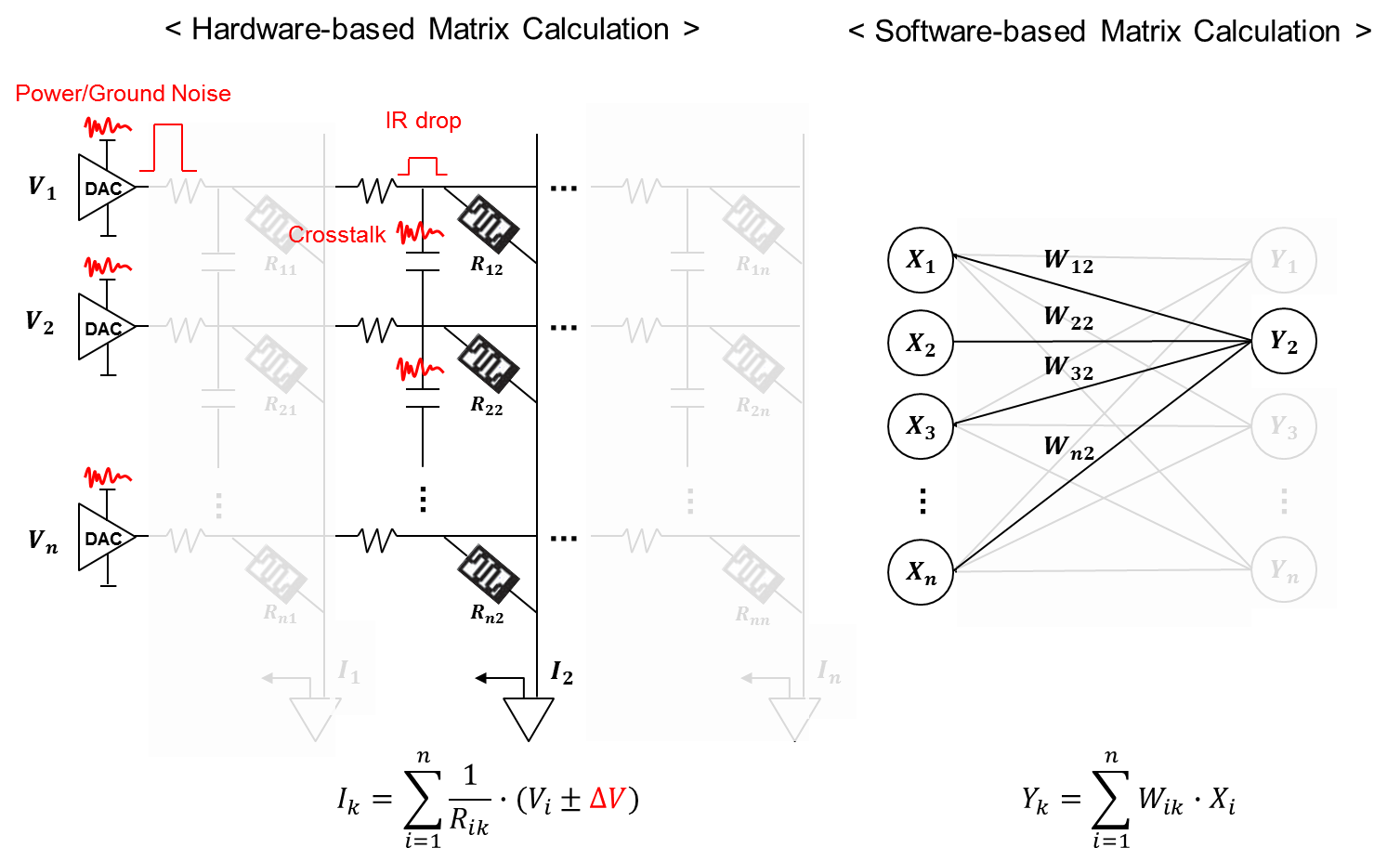

The most important part of artificial intelligence computation is massively parallel matrix-vector multiplication. This operation is inefficient in terms of calculation time and power on the conventional von Neumann computing architecture, because the data for the operation must be fetched from off-chip memory every clock cycle, which consumes a large amount of interconnection power. Various AI hardware architectures are emerging to solve this problem. Among them, a promising approach is to integrate computation into the memory using non-volatile resistive memory. This architecture reduces off-chip memory accesses for data fetches and computes the vector-matrix multiplication directly in the analog domain: each memory cell multiplies its input voltage by its conductance, and the resulting currents are read out as the result. Thus, AI calculations can be performed very efficiently on hardware structured for the target operation.
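The analog multiplication described above can be sketched numerically. In an ideal crossbar, Ohm's law gives the current through each cell as conductance times input voltage, and Kirchhoff's current law sums those currents on each column. The sketch below assumes ideal wires and an ideal current readout. The function name and the conductance and voltage values are illustrative.

```python
def crossbar_mvm(conductances, voltages):
    """Ideal crossbar read: column current I_j = sum_i G[i][j] * V[i].

    conductances: list of rows, each a list of cell conductances in siemens.
    voltages: one input voltage per row, in volts.
    Returns the list of column currents in amperes.
    """
    n_rows = len(conductances)
    if n_rows == 0 or len(voltages) != n_rows:
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Assumed example: a 3x2 array with conductances in the microsiemens range.
G = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
    [5e-6, 6e-6],
]
V = [0.1, 0.2, 0.3]

I = crossbar_mvm(G, V)
print("column currents (A):", I)
```

Every column current is produced in a single read step, so the whole vector-matrix product takes one analog operation instead of one memory fetch per weight.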

Our lab’s AI hardware group focuses on the design of an optimized computing architecture and interconnection, considering signal integrity (SI) and power integrity (PI) for accurate hardware operation. Generally, a memristor crossbar array is smaller than the number of input neurons in a filter layer. However, a large-scale memristor crossbar array suffers from a serious IR drop problem and can be more sensitive to noise such as crosstalk and ripple at high speed. The problem is especially serious in multi-level input calculation because of the small voltage margin. We analyze SI/PI issues such as crosstalk noise between crossbar interconnects and power/ground noise, which can affect memristor resistance changes and calculation results. With a small read voltage margin, this noise can cause large calculation errors. Finally, we suggest design guidelines for memristor crossbar arrays used in hardware AI operation.
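The interaction between IR drop and the multi-level voltage margin can be illustrated with a deliberately simplified model of a single word line. The sketch assumes a driver at one end, equal wire resistance per segment, and cells that connect each node to an ideal virtual-ground bit line. A real array also has bit-line resistance and coupled rows, which this model ignores. All names and numeric values are assumptions.

```python
def word_line_voltages(v_in, wire_res, cell_conductances):
    """Node voltages along one driven word line (simplified ladder model)."""
    n = len(cell_conductances)
    if n == 0:
        raise ValueError("need at least one cell")
    # Backward pass: equivalent conductance seen at each node, looking
    # toward the far end of the line.
    g_eq = [0.0] * n
    g_eq[-1] = cell_conductances[-1]
    for k in range(n - 2, -1, -1):
        # Next wire segment in series with everything beyond it.
        downstream = g_eq[k + 1] / (1.0 + wire_res * g_eq[k + 1])
        g_eq[k] = cell_conductances[k] + downstream
    # Forward pass: voltage divider across each wire segment.
    voltages = []
    v = v_in
    for k in range(n):
        v = v / (1.0 + wire_res * g_eq[k])
        voltages.append(v)
    return voltages


def level_spacing(v_read, n_levels):
    """Voltage gap between adjacent input levels for evenly spaced levels."""
    return v_read / (n_levels - 1)


# Assumed values: 0.2 V read voltage, 2 ohm per wire segment,
# 64 cells of 100 microsiemens each, and 4 input levels.
v_read = 0.2
nodes = word_line_voltages(v_read, 2.0, [100e-6] * 64)
worst_drop = v_read - nodes[-1]
spacing = level_spacing(v_read, 4)

print(f"worst-case IR drop: {worst_drop * 1e3:.2f} mV")
print(f"level spacing:      {spacing * 1e3:.2f} mV")
print("drop within half a level:", worst_drop < spacing / 2)
```

In this model the drop grows with both array size and cell conductance while the level spacing shrinks as input levels are added, so larger arrays with more levels leave less margin for crosstalk and power/ground noise.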

Fig. 2. Signal Integrity/Power Integrity in a Memristor Crossbar Array for Hardware-based Matrix-Vector Multiplication