Professor Hoirin Kim’s research team wins ‘Best Student Paper Award’ at the International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

<(From left) Certificate of Award, Award ceremony, PhD candidate Kangwook Jang (first author), PhD candidate Sungnyun Kim>

The research team of Prof. Hoirin Kim from our school has won the Best Student Paper Award at the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), one of the top international conferences in signal processing, speech, and acoustics. The award goes to only the top five papers from academic institutions among the 5,576 papers submitted.

The research team, consisting of Kangwook Jang (first author) from the School of Electrical and Electronic Engineering, Sungnyun Kim from the Graduate School of AI, and Professor Hoirin Kim, won the award by proposing a new distillation loss function that uses the temporal relation between speech frames to compress speech self-supervised learning (speech SSL) models.

Although speech self-supervised learning models perform well on various speech tasks such as speech recognition and speaker verification, their very large number of parameters makes them impractical for scenarios such as on-device applications. Many studies have therefore sought to reduce the number of parameters of these models through knowledge distillation (KD). However, most current techniques train the student to directly match the teacher's speech representations, which over-constrains student models with limited representational capacity.
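To make "directly matching the teacher's representation" concrete, the sketch below shows one common form of such a loss: a mean squared error between teacher and student frame vectors. It is a generic illustration, not the loss used by any specific prior method; the function name `direct_matching_loss` and the list-of-frames input format are hypothetical, and it assumes the student output has already been projected to the teacher's dimension.

```python
def direct_matching_loss(teacher, student):
    """Mean squared error between teacher and student frame representations.

    teacher, student: lists of T frames, each frame a list of D floats.
    Hypothetical helper; assumes both have the same T and the same D.
    """
    if len(teacher) != len(student):
        raise ValueError("teacher and student must have the same number of frames")
    total, count = 0.0, 0
    for t_frame, s_frame in zip(teacher, student):
        if len(t_frame) != len(s_frame):
            raise ValueError("frame dimensions must match")
        # Every individual value of the student must move toward the teacher's value.
        for t_val, s_val in zip(t_frame, s_frame):
            total += (t_val - s_val) ** 2
            count += 1
    return total / count


teacher = [[1.0, 0.0], [0.0, 1.0]]
student = [[0.5, 0.0], [0.0, 0.5]]
print(direct_matching_loss(teacher, student))  # → 0.125
```

Because every element is penalized, the student is pushed to reproduce the teacher's representation value by value, which is the strict constraint described above.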

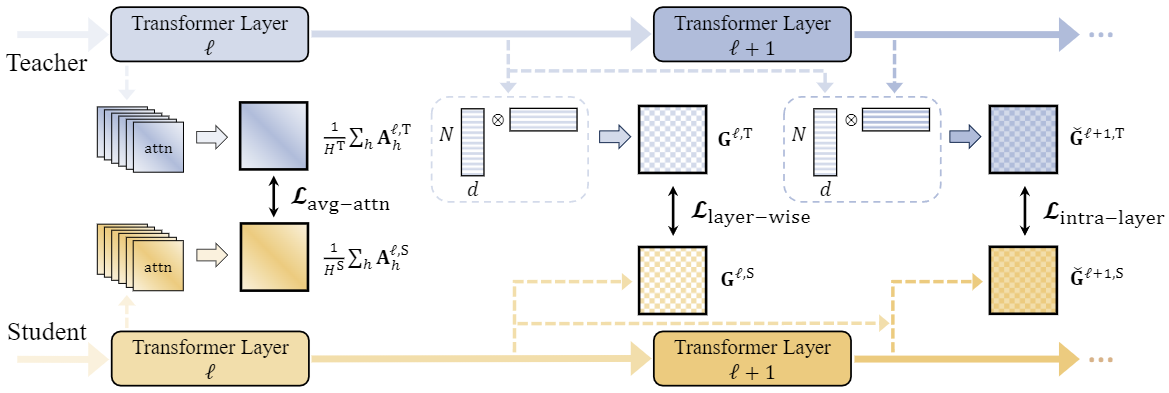

<Schematic diagram of the speech temporal relation loss function proposed by Prof. Hoirin Kim’s research team>

Prof. Hoirin Kim’s research team explored various objectives for expressing the temporal relation between speech frames and proposed a loss function suited to speech self-supervised learning models. The compressed student model was validated on a total of 10 speech-related tasks and achieved the best performance among models whose parameter counts were compressed by about 30%.
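As a rough illustration of the idea, and not the team's published formulation, one simple way to express the temporal relation between speech frames is a T×T matrix of cosine similarities between every pair of frames. The student is then trained to match the teacher's matrix rather than its raw vectors. The helper names below are hypothetical, and cosine similarity with a mean squared error is an assumed choice made only for this sketch.

```python
import math


def cosine(u, v):
    """Cosine similarity of two vectors; returns 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def temporal_relation(frames):
    """T x T matrix of cosine similarities between every pair of frames."""
    return [[cosine(f_i, f_j) for f_j in frames] for f_i in frames]


def temporal_relation_loss(teacher, student):
    """Mean squared error between teacher and student temporal relation matrices.

    Hypothetical sketch: only the number of frames T must match, so the
    student's frame dimension may differ from the teacher's.
    """
    if len(teacher) != len(student):
        raise ValueError("teacher and student must have the same number of frames")
    r_t = temporal_relation(teacher)
    r_s = temporal_relation(student)
    n = len(teacher)
    return sum((r_t[i][j] - r_s[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)


# 3-dimensional teacher frames and 2-dimensional student frames with the same
# frame-to-frame relations: the loss is (numerically) zero.
teacher = [[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [1.0, 1.0, 0.0]]
student = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(temporal_relation_loss(teacher, student))
```

In this sketch a smaller student with a different hidden size can reach zero loss as long as it preserves how the frames relate to one another over time, which is a looser constraint than reproducing the teacher's vectors directly.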

This research was supported by a National Research Foundation of Korea grant funded by the Korean government.