nd Professor Dong-Ho Kang (Gwangju Institute of Science and Technology, GIST). >

Our school’s research team has developed a next-generation image sensor that can autonomously adapt to drastic changes in illumination without any external image-processing pipeline. The technology is expected to be applicable to autonomous vehicles, intelligent robotics, security and surveillance, and other vision-centric systems.

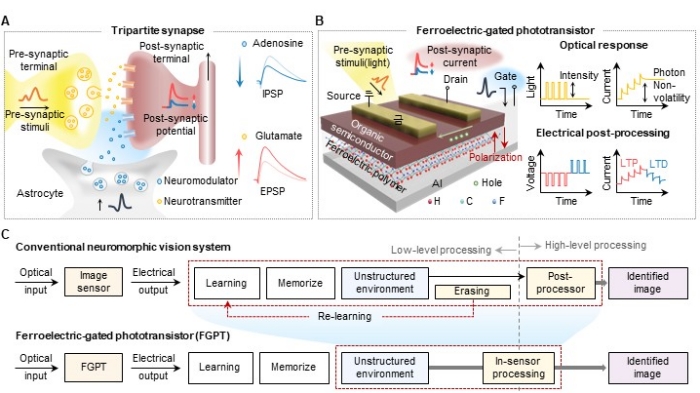

In this joint work, KAIST School of Electrical Engineering Professor Young Min Song and GIST Professor Dong-Ho Kang designed a ferroelectric-based optoelectronic device inspired by the brain’s neural architecture. The device integrates light sensing, memory (recording), and in-sensor processing within a single element, enabling a new class of image sensors.

As demand grows for “Visual AI,” there is an urgent need for high-performance visual sensors that operate robustly across diverse environments. Conventional CMOS-based image sensors* process each pixel’s signal independently; when scene brightness changes abruptly, they are prone to saturation, overexposure, or underexposure, which leads to information loss.

*CMOS (complementary metal-oxide semiconductor) image sensors are fabricated using semiconductor processes and are widely used in digital cameras, smartphones, and other consumer electronics.

In particular, they struggle to adapt instantly to extremes such as day/night transitions, strong backlighting, or rapid indoor-outdoor changes, often requiring separate calibration or post-processing of the captured data.
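The information loss described above can be illustrated with a toy pixel model. This is a minimal sketch, not a model of any real sensor: the `capture` function, the 8-bit full scale, and the radiance values are all hypothetical, chosen only to show how a single fixed exposure either clips bright regions or crushes dark ones.

```python
def capture(radiance, exposure, full_scale=255):
    """Hypothetical linear pixel: scale radiance by exposure, truncate, then clip."""
    return [min(full_scale, max(0, int(r * exposure))) for r in radiance]

# One scene with two regions: a dim interior and a bright, backlit window.
dim = [2, 4, 6, 8]
bright = [400, 600, 800, 1000]
scene = dim + bright

# A long exposure resolves the interior but saturates every window pixel.
long_exposure = capture(scene, exposure=20)

# A short exposure resolves the window but collapses the interior detail.
short_exposure = capture(scene, exposure=0.25)

print(long_exposure)
print(short_exposure)
```

With the long exposure, the four distinct window values all map to the same full-scale code; with the short exposure, the four distinct interior values no longer map to four distinct codes. Either way, detail that was present in the scene cannot be recovered from the captured data.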

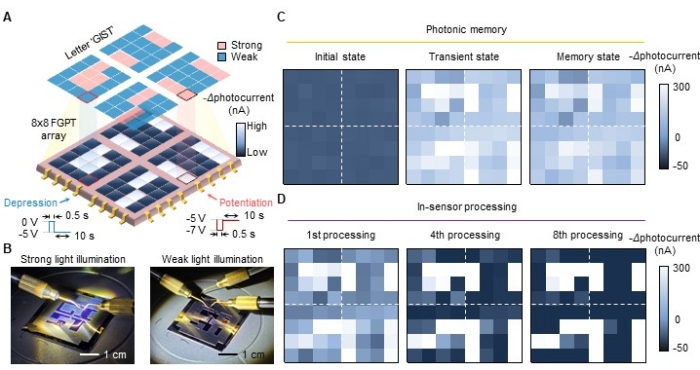

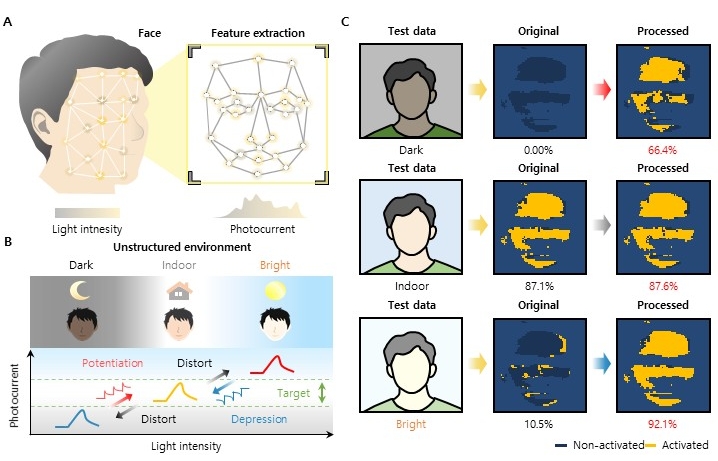

To address these limitations, the team designed a ferroelectric-based image sensor that draws on biological neural structures and learning principles to remain adaptive under extreme environmental variation. By controlling the ferroelectric polarization state, the device can retain sensed optical information for extended periods and selectively amplify or suppress it. As a result, it performs contrast enhancement, illumination compensation, and noise suppression on-sensor, eliminating the need for complex post-processing. The team demonstrated stable face recognition across day/night and indoor/outdoor conditions solely via in-sensor processing, without reconstructing training datasets or performing additional training to handle unstructured environments.
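As a rough software analogy for the amplify-or-suppress behavior described above, the sketch below applies a single adjustable gain to a set of pixel responses and then stretches their contrast. It is an assumption-laden illustration only: the functions `compensate` and `stretch_contrast`, the normalized 0 to 1 signal range, and the target mean are hypothetical, and the gain merely stands in for a programmable device response rather than modeling ferroelectric polarization.

```python
def compensate(pixels, target_mean=0.5):
    """Scale responses by one gain so that their mean moves to target_mean.

    The gain is a loose stand-in for a programmable responsivity; values
    are clipped to the assumed normalized range [0, 1].
    """
    mean = sum(pixels) / len(pixels)
    gain = target_mean / mean if mean > 0 else 1.0
    return [min(1.0, p * gain) for p in pixels], gain

def stretch_contrast(pixels):
    """Linearly map the darkest value to 0 and the brightest to 1."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)
    return [(p - lo) / (hi - lo) for p in pixels]

# The same pattern observed under night and day illumination (hypothetical values).
night = [0.01, 0.02, 0.03, 0.04]
day = [0.2, 0.4, 0.6, 0.8]

night_out, night_gain = compensate(night)
day_out, day_gain = compensate(day)

print(night_gain, night_out)
print(day_gain, day_out)
print(stretch_contrast([0.4, 0.5, 0.6]))
```

In this toy setting the night and day inputs end up with nearly identical compensated outputs, which is the property that would let a downstream recognizer work without retraining for each lighting condition. In the reported device this adaptation happens inside the sensor element itself rather than in software.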

The proposed device is also highly compatible with established AI models such as convolutional neural networks (CNNs).*

*CNNs are deep-learning architectures specialized for 2D data such as images and videos, which extract features through convolution operations and perform classification. They are widely used in visual tasks including face recognition, autonomous driving, and medical image analysis.
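The convolution operation mentioned above can be sketched in a few lines. This is a minimal illustration using plain Python lists; the function name `conv2d_valid`, the tiny test image, and the hand-picked edge kernel are assumptions for demonstration. As in most CNN frameworks, the kernel is slid over the image without being flipped (strictly speaking, a cross-correlation), and a real network would learn its kernels from data.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image with no padding and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 3x4 image whose left half is dark (0) and right half is bright (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A 1x2 horizontal-difference kernel: responds where brightness rises left to right.
edge_kernel = [[-1, 1]]

feature_map = conv2d_valid(image, edge_kernel)
print(feature_map)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map is nonzero only in the column where the dark-to-bright edge sits, which is the sense in which convolution "extracts features" before later layers perform classification.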

Professor Young Min Song commented, “This study expands ferroelectric devices, traditionally used as electrical memory, into the domains of neuromorphic vision and in-sensor computing. Going forward, we plan to advance this platform into next-generation vision systems capable of precisely sensing and processing wavelength, polarization, and phase of light.”

This research was supported by the Mid-career Researcher Program of the Ministry of Science and ICT and the National Research Foundation of Korea (NRF). The results were published online in the international journal “Advanced Materials” on July 28th.