Title : A Framework for Accelerating Transformer-based Language Model on ReRAM-based Architecture

Authors : Myeonggu Kang, Hyein Shin, Lee-Sup Kim

Journal : IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems

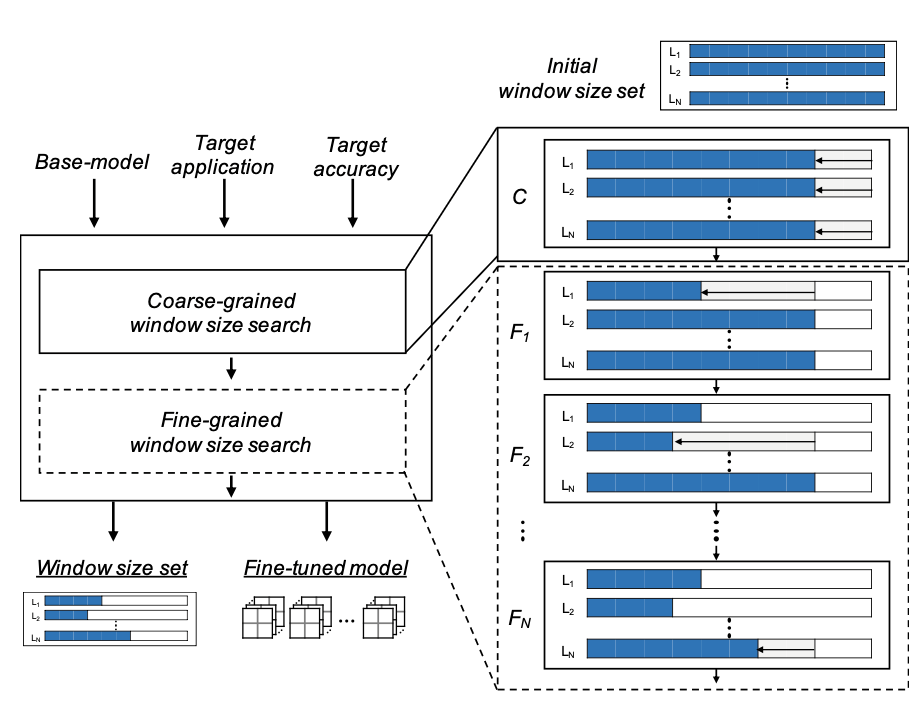

Abstract : Transformer-based language models have become the de facto standard for various NLP applications owing to their superior algorithmic performance. Processing a transformer-based language model on a conventional accelerator induces the memory wall problem, and a ReRAM-based accelerator is a promising solution to this problem. However, due to the characteristics of the self-attention mechanism and of the ReRAM-based accelerator, a pipeline hazard arises when a transformer-based language model is processed on a ReRAM-based accelerator. This hazard greatly increases the overall execution time. In this paper, we propose a framework to resolve the hazard. First, by analyzing the properties of the self-attention mechanism, we propose window self-attention, which reduces the scope of the attention computation. We then present a window-size search algorithm that finds an optimal set of window sizes for the target application and algorithmic performance. We also suggest a hardware design that exploits the advantages of the proposed algorithmic optimization on a general ReRAM-based accelerator. The proposed work alleviates the hazard while maintaining algorithmic performance, leading to a 5.8× speedup over the provisioned baseline. It also delivers up to 39.2× speedup and 643.2× higher energy efficiency over a GPU.
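To make the idea of a reduced attention scope concrete, the following is a minimal Python sketch of windowed self-attention. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not define the window shape, so the sketch assumes a symmetric window in which token i attends only to tokens j with |i - j| <= window. The function name `window_self_attention` and its parameters are hypothetical.

```python
import math


def window_self_attention(q, k, v, window):
    """Scaled dot-product attention restricted to a local window.

    Assumption (not from the paper): token i attends only to tokens j
    with |i - j| <= window. q, k, v are lists of equal-length rows.
    """
    n, d = len(q), len(q[0])
    dv = len(v[0])
    out = []
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        # Scores are computed only inside the window, which shrinks the
        # attention computation from n keys to at most 2 * window + 1 keys.
        scores = [
            sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
            for j in range(lo, hi)
        ]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the value rows that fall inside the window.
        out.append([
            sum(w * v[j][c] for w, j in zip(weights, range(lo, hi)))
            for c in range(dv)
        ])
    return out


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(window_self_attention(q, q, v, window=1))
```

With `window=0` each token attends only to itself, so the output equals `v`; with `window >= n - 1` the function reduces to ordinary full self-attention. How a window size is chosen per layer or per head is the job of the search algorithm described in the abstract and is not modeled here.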