Title : A Framework for Area-efficient Multi-task BERT Execution on ReRAM-based Accelerators

Authors : Myeonggu Kang, Hyein Shin, Jaekang Shin, Lee-Sup Kim

Conference : IEEE/ACM International Conference on Computer-Aided Design (ICCAD) 2021

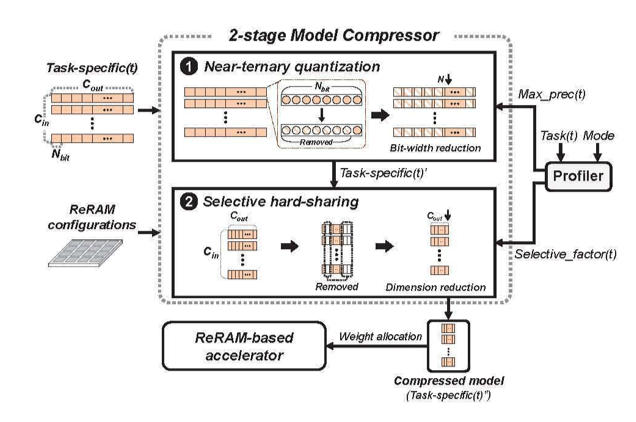

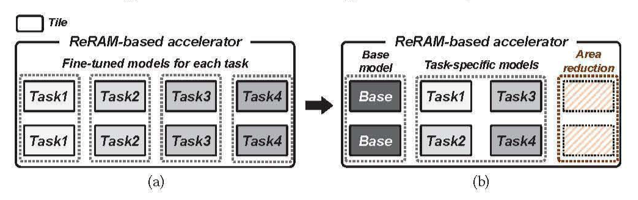

Abstract : With its superior algorithmic performance, BERT has become the de facto standard model for various NLP tasks. Accordingly, multiple BERT models are often deployed on a single system, a setup known as multi-task BERT. Although a ReRAM-based accelerator has sufficient potential to execute a single BERT model through in-memory computation, processing multi-task BERT on such an accelerator greatly increases the overall area because of the multiple fine-tuned models. In this paper, we propose a framework for area-efficient multi-task BERT execution on ReRAM-based accelerators. First, we decompose the fine-tuned model of each task by utilizing the base model. Next, we propose a two-stage weight compressor that shrinks the decomposed models by analyzing the properties of the ReRAM-based accelerator. We also present a profiler that generates hyper-parameters for the proposed compressor. By sharing the base model and compressing the decomposed models, the proposed framework reduces the total area of the ReRAM-based accelerator without an additional training procedure. It reduces the area to 0.26x that of the baseline while maintaining algorithmic performance.
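
The idea of sharing the base model and storing only a compressed per-task remainder can be illustrated with a small sketch. This is not the paper's method: the abstract does not say how the decomposition or the two compression stages work. Here the decomposition is assumed to be an element-wise difference from the base weights, and the two stages are placeholder choices (magnitude pruning, then uniform quantization). The function names and the `threshold` and `step` values are hypothetical, standing in for the hyper-parameters a profiler would supply.

```python
# Minimal sketch under stated assumptions; not the authors' implementation.

def decompose(fine_tuned, base):
    """Assumed decomposition: per-task delta = fine-tuned weights - base weights."""
    return [f - b for f, b in zip(fine_tuned, base)]

def prune_small(delta, threshold):
    """Hypothetical stage 1: zero out deltas whose magnitude is below threshold."""
    return [d if abs(d) >= threshold else 0.0 for d in delta]

def quantize(delta, step):
    """Hypothetical stage 2: round each delta to the nearest multiple of step."""
    return [round(d / step) * step for d in delta]

def reconstruct(base, delta):
    """Recover approximate task weights from the shared base and the stored delta."""
    return [b + d for b, d in zip(base, delta)]

# Toy weights for one task (made-up numbers, for illustration only).
base = [0.50, -0.20, 0.10, 0.80]
fine_tuned = [0.52, -0.20, 0.101, 0.70]

delta = decompose(fine_tuned, base)
compressed = quantize(prune_small(delta, threshold=0.01), step=0.01)
approx = reconstruct(base, compressed)

# Only non-zero deltas would need dedicated storage for this task.
nonzero = sum(1 for d in compressed if d != 0.0)
max_error = max(abs(a - f) for a, f in zip(approx, fine_tuned))
print(nonzero, max_error)
```

In this toy setting, the base weights are stored once and each additional task contributes only its non-zero compressed deltas, which is the intuition behind the area saving described in the abstract.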